Executive Summary

As Large Language Model (LLM) infrastructure matures, the attack surface is shifting from traditional management interfaces (“Control Plane”) to the actual flow of inference data (“Data Plane”).

CVE-2025-62164 represents a paradigm-shifting vulnerability in vLLM, the industry-standard engine for high-throughput LLM serving. This flaw allows attackers to weaponize the /v1/completions endpoint by injecting malicious prompt embeddings. By exploiting insecure deserialization mechanisms within PyTorch’s loading logic, an attacker can trigger memory corruption, leading to Denial of Service (DoS) and potential Remote Code Execution (RCE)—all without needing valid API keys (depending on deployment configuration).

This analysis breaks down the technical root cause, provides a conceptual Proof of Concept (PoC), and outlines immediate remediation steps for AI platform engineers.

The Attack Vector: Why Embeddings Are Dangerous

In standard LLM interactions, users send text. However, advanced inference engines like vLLM support embedding inputs (tensor data) directly via the API. This is designed for performance optimization and multi-modal workflows, but it opens a dangerous door: Direct Object Deserialization.

The vulnerability resides in how vLLM processes these incoming tensors. Specifically, the engine implicitly trusts the structure of the serialized data provided by the user, assuming it to be a harmless mathematical representation.

The Vulnerable Code Path

The critical flaw exists within vllm/entrypoints/renderer.py inside the _load_and_validate_embed function.

Python

# Simplified representation of the vulnerable logic

import torch

import io

import pybase64

def _load_and_validate_embed(embed: bytes):

# DANGER: Deserializing untrusted binary streams

tensor = torch.load(

io.BytesIO(pybase64.b64decode(embed, validate=True)),

weights_only=True, # The false sense of security

map_location=torch.device("cpu"),

)

return tensor

While weights_only=True is intended to prevent the execution of arbitrary Python code (a common Pickle vulnerability), it is not sufficient to prevent memory corruption when dealing with specific PyTorch tensor types.

Technical Deep Dive: Exploiting Sparse Tensors

The core of CVE-2025-62164 leverages a disconnect between PyTorch’s safety flags and its handling of Sparse Tensors.

- The PyTorch 2.8+ Shift: Newer versions of PyTorch default to skipping expensive integrity checks for sparse tensors to improve performance.

- The Bypass: An attacker can construct a malformed “Sparse COO” (Coordinate Format) tensor. Even with

weights_only=True,torch.loadwill deserialize this structure. - Memory Corruption: Because the indices of the sparse tensor are not validated against the declared size during loading, subsequent operations (like converting the tensor to dense format or moving it to GPU memory) result in an Out-of-Bounds (OOB) Write.

This OOB write crashes the Python interpreter immediately (DoS). With sophisticated heap spraying and memory layout manipulation, this primitive can be escalated to gain control of the instruction pointer, achieving RCE.

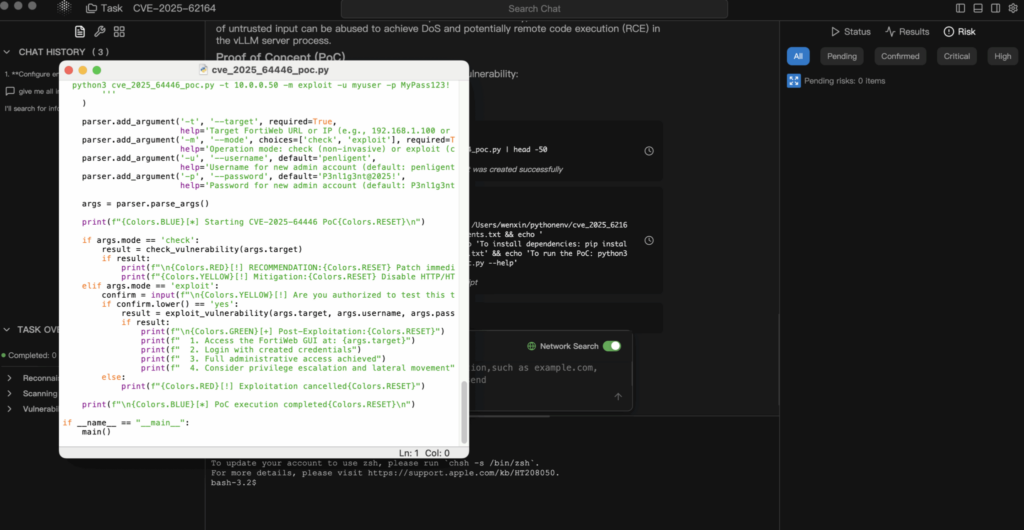

Proof of Concept (PoC) Analysis

Disclaimer: This PoC is for educational and defensive purposes only.

1. Constructing the Payload

The attacker creates a serialized PyTorch tensor that violates internal consistency constraints.

Python

import torch

import io

import base64

def generate_exploit_payload():

buffer = io.BytesIO()

# Create a Sparse Tensor designed to trigger OOB write upon access

# The specific indices would be crafted to point outside allocated memory

# malformed_tensor = torch.sparse_coo_tensor(indices=..., values=..., size=...)

# For demonstration, we simulate the serialization

# In a real attack, this buffer contains the binary pickle stream

torch.save(malformed_tensor, buffer)

# Encode for JSON transport

return base64.b64encode(buffer.getvalue()).decode('utf-8')

2. The Exploit Request

The attacker sends this payload to the standard completion endpoint.

POST http://target-vllm-instance:8000/v1/completions

JSON

{

"model": "meta-llama/Llama-2-7b-hf",

"prompt": {

"embedding": "<BASE64_MALICIOUS_PAYLOAD>"

},

"max_tokens": 10

}

3. The Result

- Best Case: The vLLM worker process encounters a segmentation fault and crashes. If the orchestrator (e.g., Kubernetes) restarts it, the attacker can simply resend the request, creating a persistent denial of service.

- Worst Case: The memory corruption overwrites function pointers, allowing the attacker to execute shellcode within the container context.

Impact Assessment

- Availability (High): This is a trivial-to-execute DoS. A single request can take down an inference node. In clustered environments, an attacker can iterate through nodes to degrade the entire cluster.

- Confidentiality & Integrity (Critical): If RCE is achieved, the attacker gains access to the environment variables (often containing Hugging Face tokens, S3 keys, or WandB keys) and the proprietary model weights loaded in memory.

Remediation & Mitigation

1. Upgrade Immediately

The vulnerability is patched in vLLM v0.11.1.

- Action: Update your Docker images or PyPI packages to the latest version immediately.

- Fix Logic: The patch implements strict validation logic that rejects unsafe tensor formats before they interact with the memory allocator.

2. Input Sanitization (WAF/Gateway Level)

If you cannot upgrade immediately, you must block the attack vector at the gateway.

- Action: Configure your API Gateway (Nginx, Kong, Traefik) to inspect incoming JSON bodies.

- Rule: Block any request to

/v1/completionswhere thepromptfield contains an object with anembeddingkey.

3. Network Segmentation

Ensure your inference server is not directly exposed to the public internet. Access should be mediated by a backend service that sanitizes inputs and handles authentication.

Conclusion

CVE-2025-62164 serves as a wake-up call for AI Security. We can no longer treat “Models” and “Embeddings” as inert data. In the era of AI, data is code, and deserializing it requires the same level of scrutiny as executing a binary executable.

For teams running Pen-testing on AI infrastructure (like Penligent.ai), checking for exposed serialization endpoints in inference engines should now be a standard part of the engagement scope.

Author’s Note: Keep your AI infrastructure secure. Always validate inputs, never trust serialized data, and keep your vLLM versions pinned to the latest stable release.