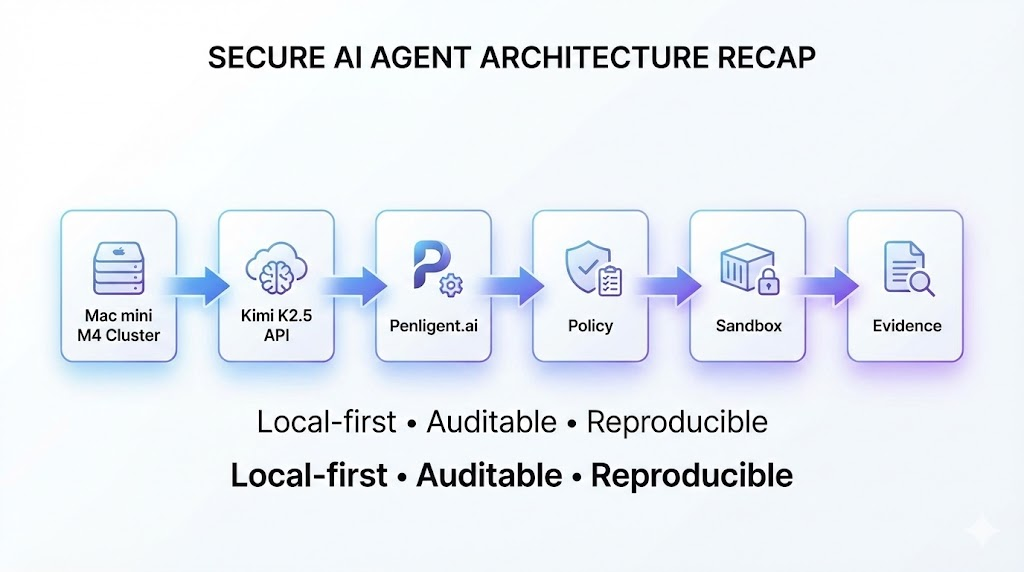

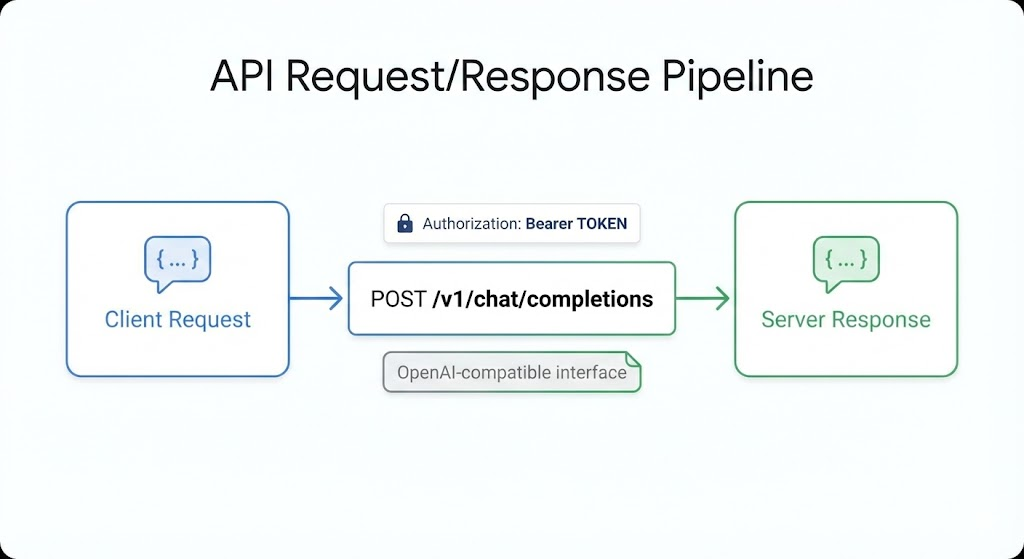

This tutorial walks you through a real, reproducible “Route A” architecture: your agent orchestration, tool execution, evidence storage, and audit logs run locally on a Mac mini M4 cluster, while reasoning runs through the official Kimi (Moonshot) K2.5 API via an OpenAI-compatible interface. Moonshot’s own docs explicitly support OpenAI SDK compatibility and show the canonical POST /v1/chat/completions workflow. (Moonshot AI)

The intent here is authorized security testing only (your own systems, labs, or targets you have explicit permission to test). The goal is not “weaponization”; the goal is a minimal, controllable, auditable agentic pipeline that you can evolve into a customer-ready local deployment.

1) Why Route A is the “absolutely feasible” route on Mac mini M4

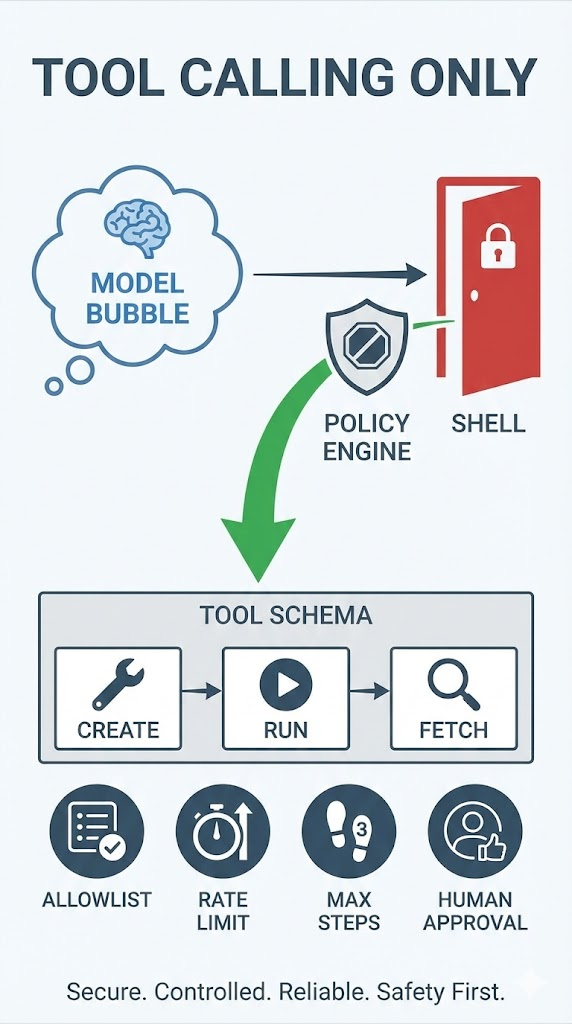

K2.5’s official API gives you a stable, vendor-maintained inference layer with OpenAI-compatible semantics; you can build your local platform so that the model never directly runs shell commands. Instead, the model can only call whitelisted tools (functions), and every tool call is enforced by your local policy engine and executed in a sandbox worker.

Moonshot also provides detailed “migrating from OpenAI” guidance listing the compatible interfaces (including /v1/chat/completions) and examples for using the Kimi models through the platform endpoint. (Moonshot AI)

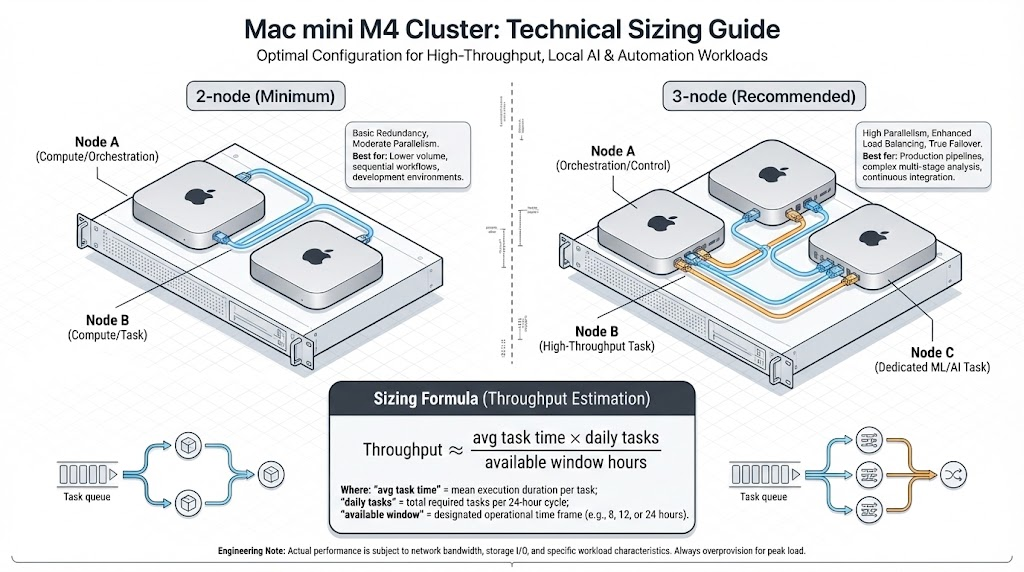

2) Hardware planning: how many Mac mini M4 machines do you need?

In Route A, you are not hosting K2.5 weights locally, so the deciding factor is tool execution throughput (workers, sandboxes, report generation), not GPU inference capacity.

Apple’s official specs show Mac mini (M4) and Mac mini (M4 Pro) configurations with different unified memory ceilings; in practice, higher memory helps you run more concurrent containers and keep logs/indices resident. (GitHub)

For a minimal, production-shaped setup:

- 2 machines (minimum viable): One “control plane” node (gateway + orchestrator + queue + observability), plus one “worker” node (sandbox execution + Penligent integration).

- 3 machines (recommended for demos and parallelism): One control plane, two workers. This makes “parallel agent plans” and “parallel scans” feel stable in front of customers.

If you’re sizing for throughput, use a simple engineering heuristic: estimate the average task runtime T, expected daily tasks N, and desired completion window W. You’ll want worker capacity roughly proportional to (T × N) / W, then add headroom for log shipping and report generation.
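Here is the heuristic as a small sizing helper. The 30% default headroom is an assumption, not a figure from this tutorial, so tune it to your own log-shipping and report-generation overhead. The result is a count of concurrent worker slots, not machines; how many slots fit on one Mac depends on its memory and on how heavy each sandbox is.

```python
import math


def workers_needed(avg_task_sec: float, tasks_per_day: int,
                   window_sec: float, headroom: float = 0.3) -> int:
    """Estimate concurrent worker slots from the (T x N) / W heuristic.

    avg_task_sec:  average task runtime T, in seconds
    tasks_per_day: expected daily task count N
    window_sec:    completion window W, in seconds
    headroom:      extra fraction for log shipping and report generation (assumed)
    """
    if window_sec <= 0:
        raise ValueError("window_sec must be positive")
    busy_slots = (avg_task_sec * tasks_per_day) / window_sec
    return math.ceil(busy_slots * (1 + headroom))


# Example: 30-minute tasks, 40 tasks per day, all finished within an 8-hour window.
print(workers_needed(1800, 40, 8 * 3600))  # 4
```

Here 1800 × 40 / 28800 gives 2.5 busy slots; 30% headroom rounds that up to 4, which a two-worker setup covers if each worker runs at least two tasks at once.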

3) Reference architecture (local-first, model-via-API)

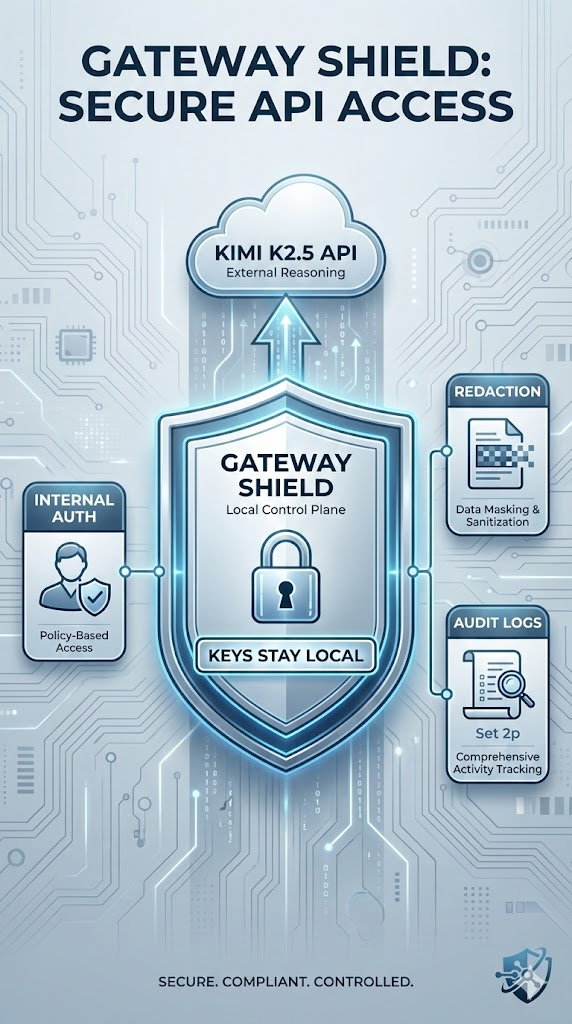

You will build six components:

- Model Gateway (local): a tiny OpenAI-compatible proxy that (a) authenticates internal callers, (b) redacts/filters outbound prompts, (c) forwards to Moonshot Kimi endpoint, and (d) logs audit metadata.

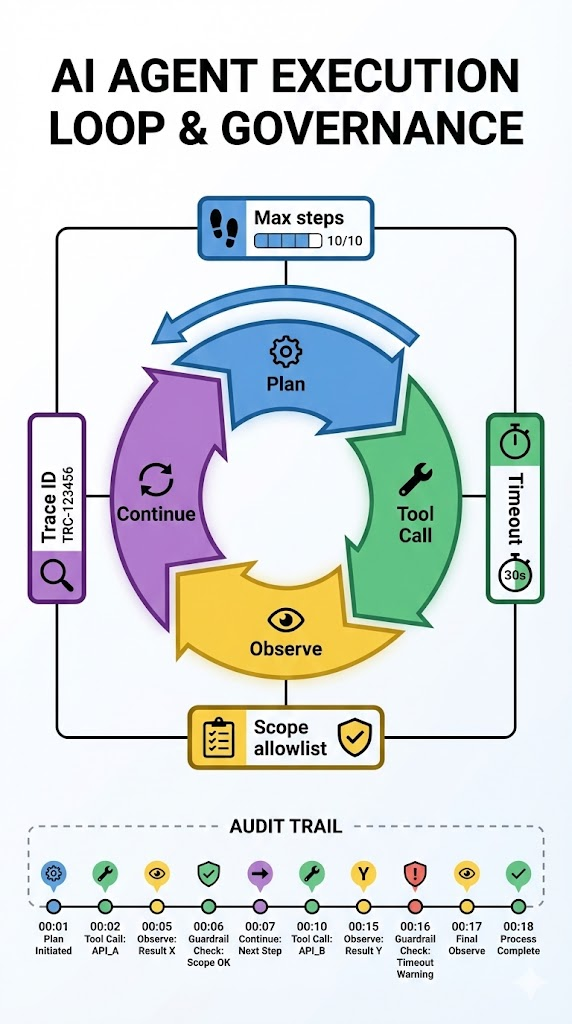

- Agent Orchestrator (local): the “agent loop” (plan → tool call → observe → continue) that never executes commands directly—only calls tools.

- Policy Engine (local): allowlist targets, rate limits, maximum steps, and hard blocks for dangerous classes of actions.

- Sandbox Workers (local): run each task in a controlled container environment; call Penligent; collect evidence and report artifacts.

- Evidence & Reports Storage (local): local disk, NAS, or MinIO; every task gets a trace ID and immutable artifact pointers.

- Audit Logs (local): append-only records for every model request and tool execution step.
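"Append-only" is a property you have to enforce. One lightweight approach is a hash chain over JSON lines: every record stores the SHA-256 of the previous one, so editing or deleting a line in the middle becomes detectable. The sketch below is illustrative only; the class name, file layout, and record fields are assumptions rather than part of this tutorial's services. A hash chain detects tampering but does not prevent it, and it cannot detect truncation of the newest records.

```python
import hashlib
import json
import tempfile
import time
import uuid
from pathlib import Path
from typing import Any, Dict

GENESIS = "0" * 64  # "previous hash" of the first record in a file


class AuditLog:
    """Append-only JSON-lines audit log; each record carries the previous record's hash."""

    def __init__(self, path: str):
        self.path = Path(path)
        self.prev_hash = GENESIS
        if self.path.exists():
            # Resume the chain from the last record already on disk.
            lines = self.path.read_text(encoding="utf-8").splitlines()
            if lines:
                self.prev_hash = json.loads(lines[-1])["hash"]

    def append(self, trace_id: str, kind: str, data: Dict[str, Any]) -> Dict[str, Any]:
        record = {"ts": time.time(), "trace_id": trace_id, "kind": kind,
                  "data": data, "prev": self.prev_hash}
        body = json.dumps(record, sort_keys=True, ensure_ascii=False)
        record["hash"] = hashlib.sha256(body.encode("utf-8")).hexdigest()
        # Mode "a" only appends; this class never rewrites existing lines.
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
        self.prev_hash = record["hash"]
        return record


def verify(path: str) -> bool:
    """Recompute the chain; False if a record was edited, reordered, or removed mid-file."""
    prev = GENESIS
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        record = json.loads(line)
        claimed = record.pop("hash")
        body = json.dumps(record, sort_keys=True, ensure_ascii=False)
        if record["prev"] != prev or hashlib.sha256(body.encode("utf-8")).hexdigest() != claimed:
            return False
        prev = claimed
    return True


# Usage: two events for one trace, written to a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    log_path = str(Path(tmp) / "audit.jsonl")
    log = AuditLog(log_path)
    trace_id = f"trace-{uuid.uuid4()}"
    log.append(trace_id, "model_request", {"messages_count": 2, "has_tools": True})
    log.append(trace_id, "tool_call", {"name": "create_pentest_task"})
    print(verify(log_path))  # True
```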

Moonshot’s platform docs emphasize OpenAI compatibility and show canonical Kimi API usage; you’ll leverage that as the inference substrate. (Moonshot AI)

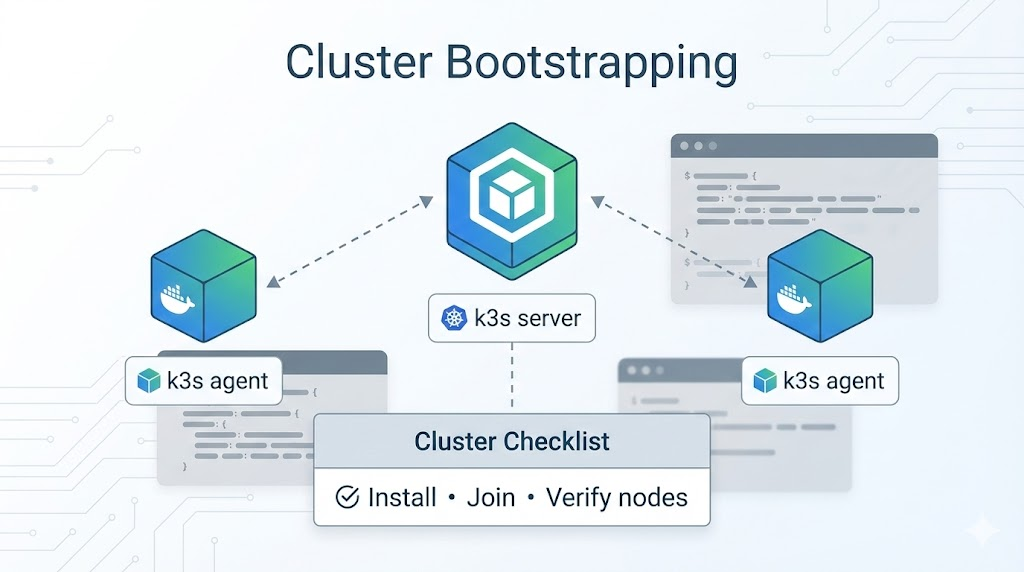

4) Cluster setup: k3s on Mac mini M4 (2–3 nodes)

You could do everything with Docker Compose, but k3s gives you the “cluster shape” you’ll want for real deployments: scaling workers, isolating namespaces, rolling updates, and service discovery.

4.1 Prerequisites on each Mac

Install command line tools and basic dependencies:

```bash
xcode-select --install
/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"
brew install git jq wget python@3.11
```

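For containers, use Docker Desktop if your team is familiar with it, or Colima if you want a more server-like runtime. Either works for the tutorial; what matters is that you can build and run containers reliably. Note that k3s itself runs only on Linux, so on macOS the k3s commands below must run inside a Linux VM on each Mac (for example, a Lima VM, which is what Colima uses under the hood), with VM networking configured so the nodes can reach each other on port 6443.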

4.2 Install k3s server on the control node

On m4-control:

```bash
curl -sfL https://get.k3s.io | sh -s - server --write-kubeconfig-mode 644
sudo kubectl get nodes -o wide
# Copy this token; worker nodes need it to join the cluster
sudo cat /var/lib/rancher/k3s/server/node-token
```

4.3 Join worker nodes

On m4-worker-1 (and m4-worker-2 if you have 3 nodes):

```bash
export K3S_URL="https://<CONTROL_NODE_IP>:6443"
export K3S_TOKEN="<PASTE_NODE_TOKEN_HERE>"
curl -sfL https://get.k3s.io | sh -s - agent
```

Back on m4-control:

```bash
sudo kubectl get nodes -o wide
```

5) Kimi K2.5 API basics you will rely on

Moonshot’s docs show that:

- The platform API uses an HTTP endpoint with Bearer token auth.

- It is compatible with the OpenAI SDK for most APIs.

- The canonical chat completion endpoint is POST /v1/chat/completions.

- The docs include a “start using Kimi API” guide and examples for the Kimi models. (Moonshot AI)

Also, K2.5 has examples and scripts publicly referenced in the model card / community assets (e.g., “thinking” vs “instant” usage patterns). (Hugging Face)

We’ll structure the gateway so you can keep the model name configurable and avoid hard-coding assumptions.
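To see the wire format the gateway will forward, here is a minimal sketch that builds a chat completion request with only the Python standard library (Moonshot's docs also show the OpenAI SDK working against the same endpoint). The base URL mirrors the gateway default used later in this tutorial; the model ID "kimi-k2.5" is this tutorial's placeholder, so confirm the exact ID in your Moonshot console. The helper names are hypothetical.

```python
import json
import os
import urllib.request
from typing import Any, Dict, List, Optional

BASE_URL = os.environ.get("MOONSHOT_BASE_URL", "https://api.moonshot.ai/v1")
# Assumption: placeholder model ID; replace with the exact ID from your Moonshot account.
MODEL_NAME = os.environ.get("MODEL_NAME", "kimi-k2.5")


def build_chat_request(api_key: str, messages: List[Dict[str, Any]],
                       model: str = MODEL_NAME,
                       tools: Optional[List[Dict[str, Any]]] = None) -> urllib.request.Request:
    """Build, but do not send, a POST {BASE_URL}/chat/completions request."""
    payload: Dict[str, Any] = {"model": model, "messages": messages}
    if tools:
        payload["tools"] = tools
        payload["tool_choice"] = "auto"
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 180) -> Dict[str, Any]:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For a live call, pass os.environ["MOONSHOT_API_KEY"] and a one-message list to build_chat_request, hand the result to send, and read choices[0]["message"]["content"] from the returned dict. Keeping request construction separate from sending makes it easy to unit-test headers and payloads without network access.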

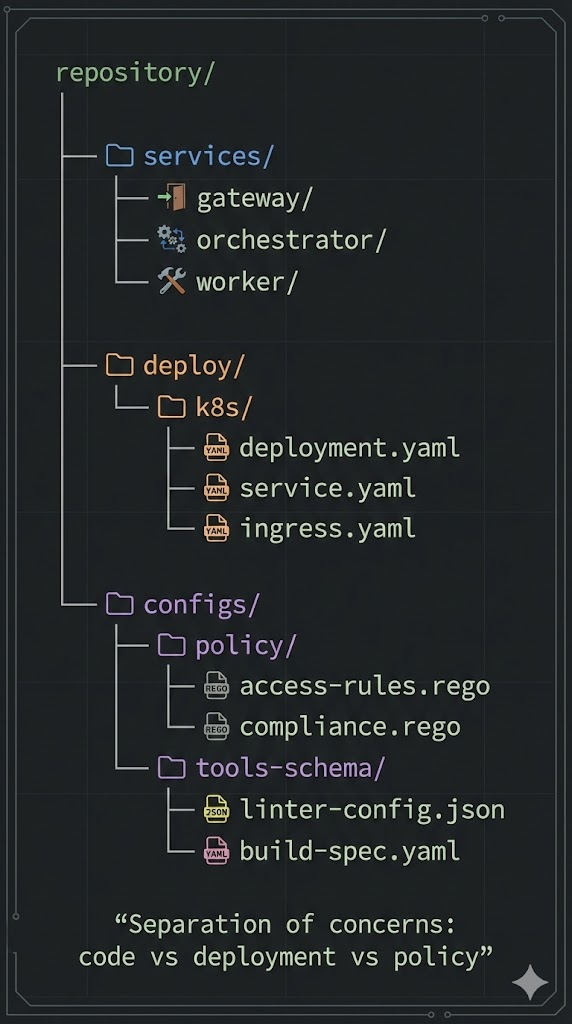

6) Build the repository structure

Create a repo folder:

```text
local-agentic-hacker/
  deploy/
    00-namespace.yaml
    01-secrets.yaml
    02-configmap.yaml
    10-gateway.yaml
    20-worker.yaml
    30-orchestrator.yaml
  services/
    gateway/
      app.py
      requirements.txt
      Dockerfile
    orchestrator/
      app.py
      requirements.txt
      Dockerfile
    worker/
      app.py
      penligent_connector.py
      requirements.txt
      Dockerfile
  configs/
    policy.yaml
    tools_schema.json
```

This layout keeps “what runs” (services) and “how it’s deployed” (deploy) cleanly separated.

7) The Model Gateway (local): OpenAI-compatible proxy to Kimi

The gateway is deliberately small. Its job is to ensure that no other local service needs the Moonshot API key, and to enforce consistent logging and optional redaction.

Moonshot shows that Kimi API is OpenAI-compatible, so this approach maps cleanly to /v1/chat/completions. (Moonshot AI)

7.1 services/gateway/app.py

```python
import os
import time
from typing import Any, Dict, Optional

import httpx
from fastapi import FastAPI, Header, HTTPException, Request

MOONSHOT_BASE_URL = os.environ.get("MOONSHOT_BASE_URL", "https://api.moonshot.ai/v1")
MOONSHOT_API_KEY = os.environ.get("MOONSHOT_API_KEY", "")

# Internal auth for your own cluster services
GATEWAY_INTERNAL_KEY = os.environ.get("GATEWAY_INTERNAL_KEY", "change-me")

app = FastAPI(title="Local Model Gateway (Kimi K2.5 via Moonshot API)")


def redact(text: str) -> str:
    # Minimal placeholder: it only truncates. Expand it with regex rules that strip
    # secrets, tokens, and customer identifiers before content leaves the cluster.
    if not isinstance(text, str):
        return ""
    return text[:2000]


@app.post("/v1/chat/completions")
async def chat_completions(req: Request, authorization: Optional[str] = Header(default=None)):
    # Internal caller auth
    if authorization != f"Bearer {GATEWAY_INTERNAL_KEY}":
        raise HTTPException(status_code=401, detail="Unauthorized")
    if not MOONSHOT_API_KEY:
        raise HTTPException(status_code=500, detail="MOONSHOT_API_KEY not set")

    payload: Dict[str, Any] = await req.json()

    # Audit metadata only (no raw prompt by default)
    audit = {
        "ts": int(time.time()),
        "model": payload.get("model"),
        "messages_count": len(payload.get("messages", [])),
        "has_tools": bool(payload.get("tools")),
    }
    # flush=True so the line reaches container logs immediately
    print("[GATEWAY_AUDIT]", audit, flush=True)

    headers = {
        "Authorization": f"Bearer {MOONSHOT_API_KEY}",
        "Content-Type": "application/json",
    }

    # Optional minimal redaction before sending upstream. This applies to every
    # message with string content, including tool results, so keep the limit generous.
    messages = payload.get("messages", [])
    for m in messages:
        if "content" in m and isinstance(m["content"], str):
            m["content"] = redact(m["content"])
    payload["messages"] = messages

    async with httpx.AsyncClient(timeout=180) as client:
        r = await client.post(f"{MOONSHOT_BASE_URL}/chat/completions", headers=headers, json=payload)
    if r.status_code >= 400:
        raise HTTPException(status_code=r.status_code, detail=r.text[:800])
    return r.json()
```

7.2 Requirements and Dockerfile

services/gateway/requirements.txt:

```text
fastapi==0.115.0
uvicorn==0.30.6
httpx==0.27.2
```

services/gateway/Dockerfile:

```dockerfile
FROM python:3.11-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY app.py .
CMD ["uvicorn", "app:app", "--host", "0.0.0.0", "--port", "8080"]
```

8) Policy + Tools: make the agent controllable by design

The key principle is: the model cannot “do things” directly. It can only request tool calls. Your policy decides whether tools can be called, and with what parameters.

8.1 configs/policy.yaml

```yaml
allowlist:
  - "http://juiceshop.local"
  - "http://10.0.0.50:3000"
limits:
  default_max_requests: 800
  default_max_duration_sec: 1800
  default_rate_limit_rps: 3.0
hard_blocks:
  - "dos"
  - "credential_stuffing"
  - "mass_scan_outside_scope"
  - "data_exfiltration"
human_in_the_loop:
  require_confirm_for:
    - "high_impact"
```

8.2 configs/tools_schema.json

This is an OpenAI-style tool schema. Kimi supports tool calling, as described in Moonshot docs, so the structure is what you’d expect in OpenAI compatible APIs. (Moonshot AI)

```json
[
  {
    "type": "function",
    "function": {
      "name": "create_pentest_task",
      "description": "Create a new authorized task in the local worker / Penligent connector. Target must be allowlisted.",
      "parameters": {
        "type": "object",
        "properties": {
          "target": { "type": "string" },
          "scope": { "type": "string" },
          "notes": { "type": "string" },
          "limits": {
            "type": "object",
            "properties": {
              "max_requests": { "type": "integer" },
              "max_duration_sec": { "type": "integer" },
              "rate_limit_rps": { "type": "number" }
            },
            "required": ["max_requests", "max_duration_sec", "rate_limit_rps"]
          }
        },
        "required": ["target", "scope", "limits"]
      }
    }
  },
  {
    "type": "function",
    "function": {
      "name": "run_pentest_task",
      "description": "Execute the task in sandbox worker.",
      "parameters": {
        "type": "object",
        "properties": {
          "task_id": { "type": "string" }
        },
        "required": ["task_id"]
      }
    }
  },
  {
    "type": "function",
    "function": {
      "name": "fetch_task_result",
      "description": "Fetch final result and artifact pointers.",
      "parameters": {
        "type": "object",
        "properties": {
          "task_id": { "type": "string" }
        },
        "required": ["task_id"]
      }
    }
  }
]
```
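The model's tool-call "arguments" arrive as a JSON string and should be treated as untrusted input. A full JSON Schema library would normally do this check; the stdlib-only sketch below covers just the keywords this schema uses (type, properties, required). It is deliberately stricter than default JSON Schema because it also rejects undeclared fields. The function name is hypothetical.

```python
import json
from typing import Any, Dict, List

# JSON Schema "type" keywords used by tools_schema.json, mapped to Python types.
_PY_TYPES = {"string": str, "integer": int, "number": (int, float), "object": dict}


def validate_args(schema: Dict[str, Any], value: Any, path: str = "$") -> List[str]:
    """Return a list of problems; an empty list means the arguments conform.

    Handles only the keywords tools_schema.json uses: type, properties, required.
    Unlike default JSON Schema, undeclared fields are rejected too.
    """
    expected = schema.get("type")
    py_type = _PY_TYPES.get(expected)
    # bool is a subclass of int in Python, so reject it explicitly.
    if py_type is not None and (isinstance(value, bool) or not isinstance(value, py_type)):
        return [f"{path}: expected {expected}, got {type(value).__name__}"]
    errors: List[str] = []
    if expected == "object":
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}.{key}: missing required field")
        props = schema.get("properties", {})
        for key, sub_value in value.items():
            if key not in props:
                errors.append(f"{path}.{key}: unexpected field")
            else:
                errors.extend(validate_args(props[key], sub_value, f"{path}.{key}"))
    return errors


# Usage: validate the parsed "arguments" of a run_pentest_task call.
run_schema = {
    "type": "object",
    "properties": {"task_id": {"type": "string"}},
    "required": ["task_id"],
}
print(validate_args(run_schema, json.loads('{"task_id": 42}')))
# ['$.task_id: expected string, got int']
```

Returning a list of readable errors (rather than raising) lets the orchestrator send the problems back to the model as a tool result, giving it a chance to correct the call.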

9) The Orchestrator: a minimal “agent loop” that’s safe and auditable

The orchestrator is where you enforce the runtime constraints: maximum steps, target allowlist, rate limits, and tool whitelisting.

Moonshot’s docs show the OpenAI-compatible interface and provide tool calling guidance; we’ll use the standard tool call flow. (Moonshot AI)

9.1 services/orchestrator/app.py

```python
import json
import os
import time
import uuid
from typing import Any, Dict, Optional, Tuple
from urllib.parse import urlsplit

import httpx
import yaml
from fastapi import FastAPI, HTTPException

GATEWAY_URL = os.environ.get("GATEWAY_URL", "http://model-gateway:8080/v1/chat/completions")
GATEWAY_KEY = os.environ.get("GATEWAY_INTERNAL_KEY", "change-me")
WORKER_URL = os.environ.get("WORKER_URL", "http://worker:8081")
MODEL_NAME = os.environ.get("MODEL_NAME", "kimi-k2.5")  # set to your actual model name in Moonshot
POLICY_PATH = os.environ.get("POLICY_PATH", "/configs/policy.yaml")
TOOLS_PATH = os.environ.get("TOOLS_PATH", "/configs/tools_schema.json")

app = FastAPI(title="Local Agent Orchestrator")


def load_policy() -> Dict[str, Any]:
    with open(POLICY_PATH, "r", encoding="utf-8") as f:
        return yaml.safe_load(f)


def load_tools() -> Any:
    with open(TOOLS_PATH, "r", encoding="utf-8") as f:
        return json.load(f)


def origin(url: str) -> Tuple[str, str, Optional[int]]:
    # Normalise a URL to (scheme, host, port); raises ValueError on a malformed port.
    parts = urlsplit(url)
    port = parts.port or {"http": 80, "https": 443}.get(parts.scheme)
    return parts.scheme, (parts.hostname or "").lower(), port


def is_allowed_target(target: Any, policy: Dict[str, Any]) -> bool:
    # Compare parsed origins, not string prefixes: a prefix check would also accept
    # "http://10.0.0.50:30001" or "http://juiceshop.local.evil.com".
    if not isinstance(target, str):
        return False
    try:
        wanted = origin(target)
    except ValueError:
        return False
    if not wanted[1]:
        return False
    return any(origin(a) == wanted for a in policy.get("allowlist", []))


def clamp_limits(requested: Dict[str, Any], policy: Dict[str, Any]) -> Dict[str, Any]:
    # Treat the policy defaults as ceilings: the model may ask for less, never more.
    caps = policy["limits"]
    out: Dict[str, Any] = {}
    for key, cap_key in (("max_requests", "default_max_requests"),
                         ("max_duration_sec", "default_max_duration_sec"),
                         ("rate_limit_rps", "default_rate_limit_rps")):
        value = requested.get(key)
        valid = isinstance(value, (int, float)) and not isinstance(value, bool) and value > 0
        out[key] = min(value, caps[cap_key]) if valid else caps[cap_key]
    return out


async def call_model(messages: Any, tools: Any) -> Dict[str, Any]:
    payload = {
        "model": MODEL_NAME,
        "messages": messages,
        "tools": tools,
        "tool_choice": "auto",
    }
    headers = {"Authorization": f"Bearer {GATEWAY_KEY}"}
    async with httpx.AsyncClient(timeout=180) as client:
        r = await client.post(GATEWAY_URL, headers=headers, json=payload)
    if r.status_code >= 400:
        raise HTTPException(status_code=500, detail=f"Model gateway error: {r.text[:500]}")
    return r.json()


async def call_worker(path: str, body: Dict[str, Any]) -> Dict[str, Any]:
    async with httpx.AsyncClient(timeout=1800) as client:
        r = await client.post(f"{WORKER_URL}{path}", json=body)
    if r.status_code >= 400:
        raise HTTPException(status_code=500, detail=f"Worker error: {r.text[:500]}")
    return r.json()


@app.post("/run")
async def run_agent(body: Dict[str, Any]):
    policy = load_policy()
    tools = load_tools()
    target = body.get("target", "")
    goal = body.get("goal", "Run an authorized security test and produce a report.")
    context = body.get("context", "")

    if not is_allowed_target(target, policy):
        raise HTTPException(status_code=400, detail="Target not in allowlist.")

    messages = [
        {
            "role": "system",
            "content": (
                "You are an orchestrator for AUTHORIZED security testing only. "
                "Stay within scope and rate limits. "
                "Do NOT output exploit payloads or instructions. "
                "Use tools only via provided functions, and focus on safe verification, evidence collection, and reporting."
            ),
        },
        {
            "role": "user",
            "content": f"Target: {target}\nGoal: {goal}\nContext: {context}\nProceed using tools.",
        },
    ]

    # Note: hard_blocks and human_in_the_loop from policy.yaml are not enforced in this minimal loop.
    max_steps = 10
    trace = {
        "trace_id": f"trace-{uuid.uuid4()}",
        "start_ts": int(time.time()),
        "target": target,
        "steps": [],
    }
    task_id: Optional[str] = None
    report_ready = False

    for step in range(max_steps):
        resp = await call_model(messages, tools)
        msg = resp["choices"][0]["message"]
        trace["steps"].append({"step": step, "ts": int(time.time()), "assistant": msg})

        # No tool calls (key missing or null): return the model's final text
        if not msg.get("tool_calls"):
            return {"trace": trace, "final": msg.get("content", ""), "task_id": task_id}

        # Append the assistant turn once; every tool result must follow it
        messages.append(msg)

        for tc in msg["tool_calls"]:
            fn = tc["function"]["name"]
            args = json.loads(tc["function"]["arguments"])

            # Local hard enforcement
            if fn == "create_pentest_task":
                if not is_allowed_target(args.get("target", ""), policy):
                    raise HTTPException(status_code=400, detail="Tool target not allowed.")
                args["limits"] = clamp_limits(args.get("limits") or {}, policy)
                w = await call_worker("/create", args)
                task_id = w["task_id"]
                tool_result = w
            elif fn == "run_pentest_task":
                # Always use the task_id this run created, never one supplied by the model
                if not task_id:
                    raise HTTPException(status_code=400, detail="No task_id yet.")
                tool_result = await call_worker("/run", {"task_id": task_id})
            elif fn == "fetch_task_result":
                if not task_id:
                    raise HTTPException(status_code=400, detail="No task_id yet.")
                tool_result = await call_worker("/result", {"task_id": task_id})
                report_ready = bool((tool_result.get("result") or {}).get("report_path"))
            else:
                raise HTTPException(status_code=400, detail=f"Unknown tool: {fn}")

            # Feed tool output back to the model
            messages.append({
                "role": "tool",
                "tool_call_id": tc["id"],
                "content": json.dumps(tool_result, ensure_ascii=False),
            })

        # Optional early stop once the worker has returned a report pointer
        if report_ready:
            break

    return {"trace": trace, "task_id": task_id}
```

services/orchestrator/requirements.txt:

```text
fastapi==0.115.0
uvicorn==0.30.6
httpx==0.27.2
PyYAML==6.0.2
```

services/orchestrator/Dockerfile:

```dockerfile
FROM python:3.11-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY app.py .
CMD ["uvicorn", "app:app", "--host", "0.0.0.0", "--port", "8082"]
```

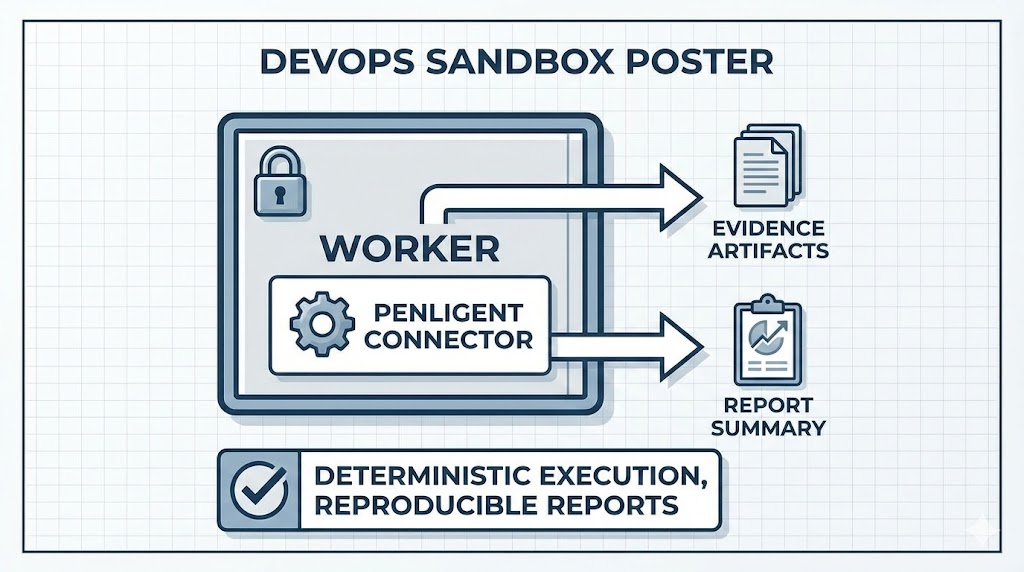

10) The Worker: local sandbox execution + Penligent connector

The worker simulates “execution plane” responsibilities: create task, run task, collect evidence, write report pointer.

To keep this tutorial complete and self-contained, the connector below is structured so that it runs end-to-end even before you wire the real Penligent API: it generates a demo report artifact to prove the pipeline. You can then replace one internal function with your real Penligent integration and everything else stays the same.
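The worker receives limits (max_requests, max_duration_sec, rate_limit_rps) with every task, but the demo connector does not enforce them itself. If your connector ever issues requests directly instead of delegating to Penligent, a token bucket plus two hard caps is one common way to honour those limits. This is a sketch assuming a single asyncio event loop per task; TaskBudget is a hypothetical name, not part of the services above.

```python
import asyncio
import time
from typing import Optional


class TaskBudget:
    """Token-bucket rate limit plus hard caps on request count and wall-clock time."""

    def __init__(self, rate_limit_rps: float, max_requests: int, max_duration_sec: float):
        if rate_limit_rps <= 0:
            raise ValueError("rate_limit_rps must be positive")
        self.rate = rate_limit_rps
        self.capacity = max(1.0, rate_limit_rps)  # allow at most ~1 second of burst
        self.tokens = self.capacity
        self.max_requests = max_requests
        self.used = 0
        self.deadline = time.monotonic() + max_duration_sec
        self._last = time.monotonic()
        self._lock: Optional[asyncio.Lock] = None  # created lazily inside the running loop

    async def acquire(self) -> None:
        """Wait until one request may be sent; raise once a hard cap is reached."""
        if self._lock is None:
            self._lock = asyncio.Lock()
        async with self._lock:
            if self.used >= self.max_requests:
                raise RuntimeError("max_requests exhausted")
            while True:
                now = time.monotonic()
                if now >= self.deadline:
                    raise RuntimeError("max_duration_sec exceeded")
                # Refill in proportion to elapsed time, capped at the bucket size.
                self.tokens = min(self.capacity, self.tokens + (now - self._last) * self.rate)
                self._last = now
                if self.tokens >= 1:
                    self.tokens -= 1
                    self.used += 1
                    return
                await asyncio.sleep((1 - self.tokens) / self.rate)


async def demo() -> float:
    budget = TaskBudget(rate_limit_rps=5.0, max_requests=8, max_duration_sec=60)
    start = time.monotonic()
    for _ in range(8):
        await budget.acquire()  # a real connector would send one HTTP request here
    return time.monotonic() - start


elapsed = asyncio.run(demo())
print(f"8 requests at 5 rps took {elapsed:.2f}s")
```

With a burst of five tokens, the first five acquisitions return immediately and the remaining three wait about 0.2 s each. Holding the lock while sleeping is intentional: concurrent callers queue behind each other instead of racing for tokens.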

10.1 services/worker/app.py

```python
import os
import time
import uuid
from typing import Any, Dict

from fastapi import FastAPI, HTTPException

from penligent_connector import run_with_penligent

app = FastAPI(title="Sandbox Worker")

# In-memory task store: fine for a demo, but all task state is lost on restart.
TASKS: Dict[str, Dict[str, Any]] = {}
EVIDENCE_DIR = os.environ.get("EVIDENCE_DIR", "/evidence")


@app.post("/create")
async def create_task(body: Dict[str, Any]):
    task_id = str(uuid.uuid4())
    TASKS[task_id] = {
        "task_id": task_id,
        "status": "created",
        "created_ts": int(time.time()),
        "input": body,
        "result": None,
    }
    return {"task_id": task_id, "status": "created"}


@app.post("/run")
async def run_task(body: Dict[str, Any]):
    task_id = body.get("task_id", "")
    if task_id not in TASKS:
        raise HTTPException(status_code=404, detail="task not found")
    task = TASKS[task_id]
    # Idempotent: a task that is already running or finished is not started again.
    if task["status"] in ("running", "done"):
        return {"task_id": task_id, "status": task["status"]}

    task["status"] = "running"
    inp = task["input"]
    # The request stays open until the task finishes (the orchestrator waits up to 1800 s).
    result = await run_with_penligent(inp, evidence_dir=EVIDENCE_DIR)
    task["result"] = result
    task["status"] = "done"
    task["done_ts"] = int(time.time())
    return {"task_id": task_id, "status": "done", "summary": result.get("summary", {})}


@app.post("/result")
async def fetch_result(body: Dict[str, Any]):
    task_id = body.get("task_id", "")
    if task_id not in TASKS:
        raise HTTPException(status_code=404, detail="task not found")
    task = TASKS[task_id]
    if task["status"] != "done":
        return {"task_id": task_id, "status": task["status"]}
    return {"task_id": task_id, "status": "done", "result": task["result"]}
```

10.2 services/worker/penligent_connector.py

```python
import json
import os
import time
import uuid
from typing import Any, Dict

import httpx

PENLIGENT_BASE_URL = os.environ.get("PENLIGENT_BASE_URL", "")
PENLIGENT_API_KEY = os.environ.get("PENLIGENT_API_KEY", "")


async def run_with_penligent(task: Dict[str, Any], evidence_dir: str) -> Dict[str, Any]:
    target = task["target"]
    scope = task.get("scope", "")
    limits = task.get("limits", {})
    notes = task.get("notes", "")

    ts = int(time.time())
    # Random suffix so two tasks started in the same second get separate directories.
    evidence_path = os.path.join(evidence_dir, f"task-{ts}-{uuid.uuid4().hex[:8]}")
    os.makedirs(evidence_path, exist_ok=True)

    # If the real Penligent API is configured, call it.
    # Adjust the endpoint path and response fields to match your Penligent API.
    if PENLIGENT_BASE_URL and PENLIGENT_API_KEY:
        headers = {"Authorization": f"Bearer {PENLIGENT_API_KEY}"}
        payload = {"target": target, "scope": scope, "limits": limits, "notes": notes}
        async with httpx.AsyncClient(timeout=1800) as client:
            r = await client.post(f"{PENLIGENT_BASE_URL}/api/task/run", headers=headers, json=payload)
        if r.status_code >= 400:
            return {
                "summary": {"target": target, "error": r.text[:500]},
                "evidence_path": evidence_path,
            }
        data = r.json()
        report_path = os.path.join(evidence_path, "penligent_report.json")
        with open(report_path, "w", encoding="utf-8") as f:
            json.dump(data, f, ensure_ascii=False, indent=2)
        return {
            "summary": {
                "target": target,
                "issues_count": data.get("issues_count", 0),
                "highlights": data.get("highlights", [])[:10],
            },
            "report_path": report_path,
            "evidence_path": evidence_path,
        }

    # Otherwise generate a demo artifact to validate the pipeline
    report_path = os.path.join(evidence_path, "report.md")
    content = (
        f"# Demo Report (Pipeline Validation)\n\n"
        f"Target: {target}\n\n"
        f"Scope: {scope}\n\n"
        f"Notes: {notes}\n\n"
        f"Limits: {json.dumps(limits)}\n\n"
        f"## Summary\n\n"
        f"This is a placeholder report for validating the local-first agent pipeline.\n"
        f"Set PENLIGENT_BASE_URL/PENLIGENT_API_KEY and implement your real Penligent call.\n"
    )
    with open(report_path, "w", encoding="utf-8") as f:
        f.write(content)
    return {
        "summary": {"target": target, "issues_count": 0, "highlights": ["demo-only"]},
        "report_path": report_path,
        "evidence_path": evidence_path,
    }
```

services/worker/requirements.txt:

```text
fastapi==0.115.0
uvicorn==0.30.6
httpx==0.27.2
```

services/worker/Dockerfile:

```dockerfile
FROM python:3.11-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY app.py penligent_connector.py ./
CMD ["uvicorn", "app:app", "--host", "0.0.0.0", "--port", "8081"]
```

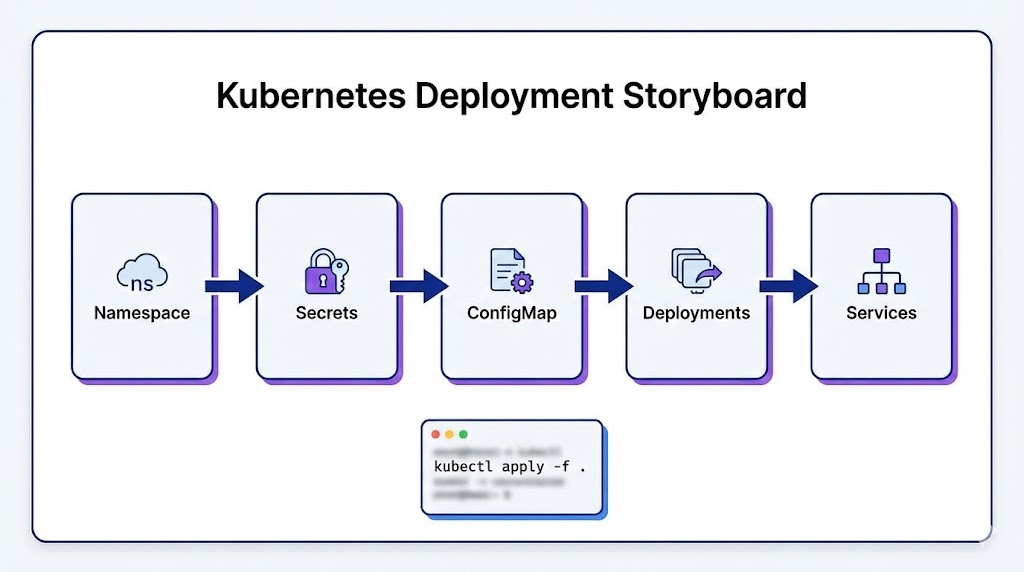

11) Deploy to k3s: namespaces, secrets, configmaps, services

11.1 Namespace

deploy/00-namespace.yaml:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: agentic-local
```

Apply:

```bash
kubectl apply -f deploy/00-namespace.yaml
```

11.2 Secrets (Moonshot API key + internal gateway key)

Moonshot’s docs show Authorization: Bearer $MOONSHOT_API_KEY usage patterns for Kimi API requests. (Moonshot AI)

deploy/01-secrets.yaml:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: moonshot-secret
  namespace: agentic-local
type: Opaque
stringData:
  api_key: "REPLACE_WITH_YOUR_MOONSHOT_API_KEY"
---
apiVersion: v1
kind: Secret
metadata:
  name: internal-secret
  namespace: agentic-local
type: Opaque
stringData:
  gateway_key: "REPLACE_WITH_A_LONG_RANDOM_INTERNAL_KEY"
```

Apply:

```bash
kubectl apply -f deploy/01-secrets.yaml
```

11.3 ConfigMap (policy + tool schema)

deploy/02-configmap.yaml:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: agentic-configs
  namespace: agentic-local
data:
  policy.yaml: |
    allowlist:
      - "http://10.0.0.50:3000"
    limits:
      default_max_requests: 800
      default_max_duration_sec: 1800
      default_rate_limit_rps: 3.0
    hard_blocks:
      - "dos"
      - "credential_stuffing"
      - "mass_scan_outside_scope"
      - "data_exfiltration"
    human_in_the_loop:
      require_confirm_for:
        - "high_impact"
  tools_schema.json: |
    [
      {
        "type": "function",
        "function": {
          "name": "create_pentest_task",
          "description": "Create a new authorized task in the local worker / Penligent connector. Target must be allowlisted.",
          "parameters": {
            "type": "object",
            "properties": {
              "target": { "type": "string" },
              "scope": { "type": "string" },
              "notes": { "type": "string" },
              "limits": {
                "type": "object",
                "properties": {
                  "max_requests": { "type": "integer" },
                  "max_duration_sec": { "type": "integer" },
                  "rate_limit_rps": { "type": "number" }
                },
                "required": ["max_requests", "max_duration_sec", "rate_limit_rps"]
              }
            },
            "required": ["target", "scope", "limits"]
          }
        }
      },
      {
        "type": "function",
        "function": {
          "name": "run_pentest_task",
          "description": "Execute the task in sandbox worker.",
          "parameters": {
            "type": "object",
            "properties": { "task_id": { "type": "string" } },
            "required": ["task_id"]
          }
        }
      },
      {
        "type": "function",
        "function": {
          "name": "fetch_task_result",
          "description": "Fetch final result and artifact pointers.",
          "parameters": {
            "type": "object",
            "properties": { "task_id": { "type": "string" } },
            "required": ["task_id"]
          }
        }
      }
    ]
```

Apply:

```bash
kubectl apply -f deploy/02-configmap.yaml
```

11.4 Gateway deployment

deploy/10-gateway.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: model-gateway
  namespace: agentic-local
spec:
  replicas: 1
  selector:
    matchLabels:
      app: model-gateway
  template:
    metadata:
      labels:
        app: model-gateway
    spec:
      containers:
        - name: model-gateway
          image: yourrepo/model-gateway:latest
          ports:
            - containerPort: 8080
          env:
            - name: MOONSHOT_BASE_URL
              value: "https://api.moonshot.ai/v1"
            - name: MOONSHOT_API_KEY
              valueFrom:
                secretKeyRef:
                  name: moonshot-secret
                  key: api_key
            - name: GATEWAY_INTERNAL_KEY
              valueFrom:
                secretKeyRef:
                  name: internal-secret
                  key: gateway_key
---
apiVersion: v1
kind: Service
metadata:
  name: model-gateway
  namespace: agentic-local
spec:
  selector:
    app: model-gateway
  ports:
    - port: 8080
      targetPort: 8080
```

11.5 Worker deployment

deploy/20-worker.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: worker
  namespace: agentic-local
spec:
  replicas: 1
  selector:
    matchLabels:
      app: worker
  template:
    metadata:
      labels:
        app: worker
    spec:
      containers:
        - name: worker
          image: yourrepo/worker:latest
          ports:
            - containerPort: 8081
          env:
            - name: EVIDENCE_DIR
              value: "/evidence"
            - name: PENLIGENT_BASE_URL
              value: "" # set when you wire real Penligent
            - name: PENLIGENT_API_KEY
              value: "" # set via Secret in real deployment
          volumeMounts:
            - name: evidence
              mountPath: /evidence
      volumes:
        - name: evidence
          emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: worker
  namespace: agentic-local
spec:
  selector:
    app: worker
  ports:
    - port: 8081
      targetPort: 8081
```

11.6 Orchestrator deployment

deploy/30-orchestrator.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orchestrator
  namespace: agentic-local
spec:
  replicas: 1
  selector:
    matchLabels:
      app: orchestrator
  template:
    metadata:
      labels:
        app: orchestrator
    spec:
      containers:
        - name: orchestrator
          image: yourrepo/orchestrator:latest
          ports:
            - containerPort: 8082
          env:
            - name: GATEWAY_URL
              value: "http://model-gateway:8080/v1/chat/completions"
            - name: GATEWAY_INTERNAL_KEY
              valueFrom:
                secretKeyRef:
                  name: internal-secret
                  key: gateway_key
            - name: WORKER_URL
              value: "http://worker:8081"
            - name: MODEL_NAME
              value: "kimi-k2.5"
            - name: POLICY_PATH
              value: "/configs/policy.yaml"
            - name: TOOLS_PATH
              value: "/configs/tools_schema.json"
          volumeMounts:
            - name: configs
              mountPath: /configs
      volumes:
        - name: configs
          configMap:
            name: agentic-configs
---
apiVersion: v1
kind: Service
metadata:
  name: orchestrator
  namespace: agentic-local
spec:
  selector:
    app: orchestrator
  ports:
    - port: 8082
      targetPort: 8082
```

Apply all:

```bash
kubectl apply -f deploy/10-gateway.yaml
kubectl apply -f deploy/20-worker.yaml
kubectl apply -f deploy/30-orchestrator.yaml
kubectl -n agentic-local get pods -o wide
```

12) Validate end-to-end with a local lab target (recommended: OWASP Juice Shop)

Run a legal lab target inside your network. For example, run OWASP Juice Shop on one node:

```bash
docker run -d --name juiceshop -p 3000:3000 bkimminich/juice-shop
```

Add the target to your allowlist in policy.yaml (or in the ConfigMap).

Then port-forward the orchestrator (this command keeps running in the foreground):

```bash
kubectl -n agentic-local port-forward svc/orchestrator 8082:8082
```

Now call the agent from a second terminal:

```bash
curl -s http://127.0.0.1:8082/run \
  -H "Content-Type: application/json" \
  -d '{
    "target": "http://10.0.0.50:3000",
    "goal": "Perform an authorized security assessment within scope and produce a report summary.",
    "context": "Use safe verification only. Do not output exploit payloads."
  }'
```

If you haven’t wired Penligent yet, the worker will still generate a demo report artifact, proving your agent loop + tool calls + evidence pointer pipeline works. Once you wire Penligent, the same pipeline produces real results.

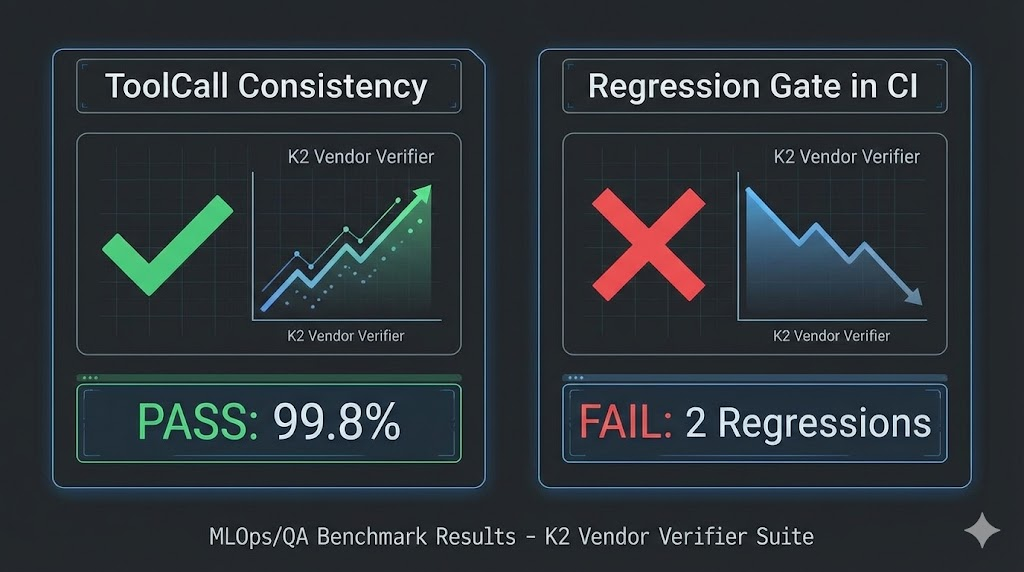

13) Make tool calling reliable: use K2 Vendor Verifier (K2VV) as a deployment gate

Agentic systems often fail on subtle tool-call inconsistencies. Moonshot created K2 Vendor Verifier specifically to monitor and improve the quality/consistency of K2 API implementations and tool calling. (GitHub)

In a real deployment, treat tool-call stability as an SLO. The simplest pattern is:

- Run K2VV in CI against your gateway + upstream.

- Track JSON schema validity, tool_call formatting errors, stop reasons, and “missing tool call” regressions.

- Block release if the score falls below your baseline.
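K2VV ships its own harness, and the sketch below is not its API. It is a hypothetical in-house complement: given chat-completion responses you have recorded (for example, at the gateway), it computes a few of the signals listed above so a CI job can compare them against a baseline. It assumes OpenAI-style response dicts; the function name and metric names are illustrative.

```python
import json
from typing import Any, Dict, Iterable, Set


def tool_call_metrics(responses: Iterable[Dict[str, Any]], known_tools: Set[str]) -> Dict[str, Any]:
    """Summarise tool-call health over recorded chat-completion responses."""
    total = calls = bad_args = unknown = finish_mismatch = 0
    for resp in responses:
        total += 1
        choice = resp["choices"][0]
        tool_calls = choice["message"].get("tool_calls") or []
        # finish_reason says "tool_calls" but none are present, or vice versa.
        if (choice.get("finish_reason") == "tool_calls") != bool(tool_calls):
            finish_mismatch += 1
        for tc in tool_calls:
            calls += 1
            if tc["function"]["name"] not in known_tools:
                unknown += 1
            try:
                if not isinstance(json.loads(tc["function"]["arguments"]), dict):
                    bad_args += 1
            except (json.JSONDecodeError, TypeError):
                bad_args += 1
    return {
        "responses": total,
        "tool_calls": calls,
        "invalid_arguments_rate": bad_args / calls if calls else 0.0,
        "unknown_tool_rate": unknown / calls if calls else 0.0,
        "finish_reason_mismatch_rate": finish_mismatch / total if total else 0.0,
    }


sample = [
    {"choices": [{"finish_reason": "tool_calls", "message": {"tool_calls": [
        {"id": "c1", "function": {"name": "run_pentest_task", "arguments": '{"task_id": "t1"}'}}]}}]},
    {"choices": [{"finish_reason": "tool_calls", "message": {"tool_calls": [
        {"id": "c2", "function": {"name": "fetch_task_result", "arguments": "{task_id: t1}"}}]}}]},
    {"choices": [{"finish_reason": "stop", "message": {"content": "done"}}]},
]
m = tool_call_metrics(sample, {"create_pentest_task", "run_pentest_task", "fetch_task_result"})
print(m["invalid_arguments_rate"])  # 0.5
```

A release gate then becomes a comparison such as failing the build when invalid_arguments_rate rises above the rate recorded for your last accepted release.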

This is one of the easiest ways to justify “production-grade agentic platform” to serious security buyers.

14) Security controls you should not skip even in the minimal system

If you plan to put “Agentic Hacker” in a product brochure, your first customer will ask how you prevent harm. The minimum story that’s credible looks like this:

Your system enforces a strict target allowlist, a strict tool allowlist, strict rate limits, strict max steps, and strict human-in-the-loop hooks for high-impact operations. The model cannot bypass these constraints because it never runs commands—it only requests tool calls, and those are checked locally before execution.

Moonshot’s Kimi platform supports tool calling and long context; your local controls are what make it safe to operationalize. (Moonshot AI)

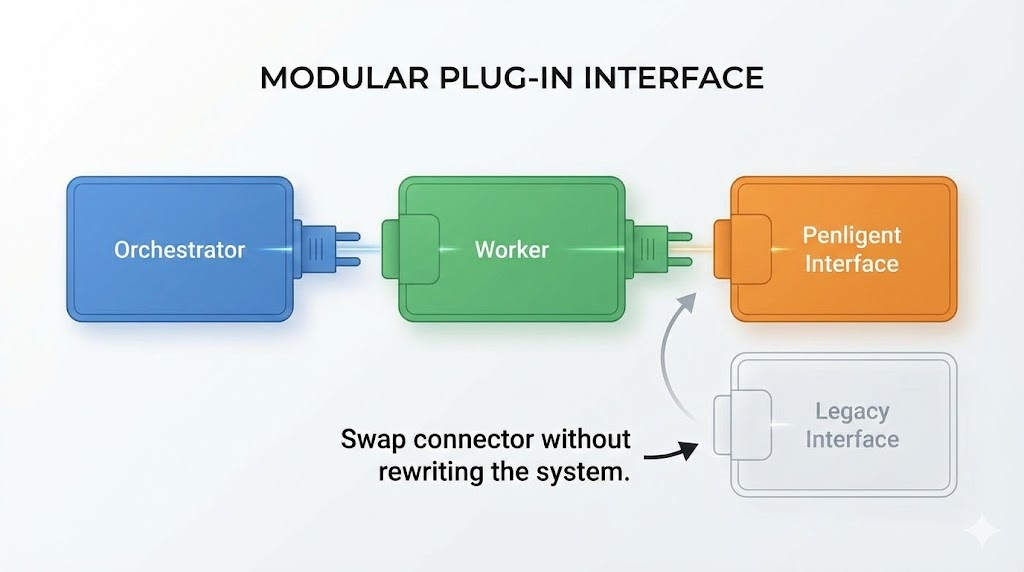

15) Where Penligent.ai “plugs in” and why this tutorial stays valid

In this tutorial, Penligent is integrated at exactly one boundary: the worker’s run_with_penligent() function. Whether Penligent is:

- an internal HTTP API you host,

- a local binary or Dockerized worker you distribute,

- a hybrid “task create → poll → fetch report” workflow,

…the orchestrator and gateway don’t need to change. This separation is what makes the build absolutely feasible today and commercializable tomorrow.
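For the “task create → poll → fetch report” variant, the connector needs a polling loop with a deadline. The sketch below takes the three remote operations as injected async callables, so it makes no claims about Penligent's real endpoints; the callables, the "done"/"failed" state names, and the backoff values are all hypothetical. The demo uses an in-memory fake backend.

```python
import asyncio
import time
from typing import Any, Awaitable, Callable, Dict


async def create_poll_fetch(
    create: Callable[[Dict[str, Any]], Awaitable[str]],
    status: Callable[[str], Awaitable[str]],
    fetch: Callable[[str], Awaitable[Dict[str, Any]]],
    task: Dict[str, Any],
    timeout_sec: float = 1800,
    first_delay: float = 1.0,
    max_delay: float = 30.0,
) -> Dict[str, Any]:
    """Create a remote task, poll its status with backoff, then fetch the report."""
    remote_id = await create(task)
    deadline = time.monotonic() + timeout_sec
    delay = first_delay
    while True:
        state = await status(remote_id)
        if state == "done":  # hypothetical terminal state names
            return await fetch(remote_id)
        if state == "failed":
            raise RuntimeError(f"remote task {remote_id} failed")
        if time.monotonic() + delay > deadline:
            raise TimeoutError(f"remote task {remote_id} still {state!r} at deadline")
        await asyncio.sleep(delay)
        delay = min(delay * 2, max_delay)  # exponential backoff between polls


async def demo() -> Dict[str, Any]:
    # Fake backend standing in for a real service; the state advances on each poll.
    states = iter(["queued", "running", "done"])

    async def fake_create(task: Dict[str, Any]) -> str:
        return "remote-1"

    async def fake_status(remote_id: str) -> str:
        return next(states)

    async def fake_fetch(remote_id: str) -> Dict[str, Any]:
        return {"remote_id": remote_id, "issues_count": 0}

    return await create_poll_fetch(fake_create, fake_status, fake_fetch,
                                   {"target": "http://10.0.0.50:3000"}, first_delay=0.01)


print(asyncio.run(demo()))  # {'remote_id': 'remote-1', 'issues_count': 0}
```

Because run_with_penligent() only has to return the same summary/report_path/evidence_path dict, a loop like this can live entirely inside the connector.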

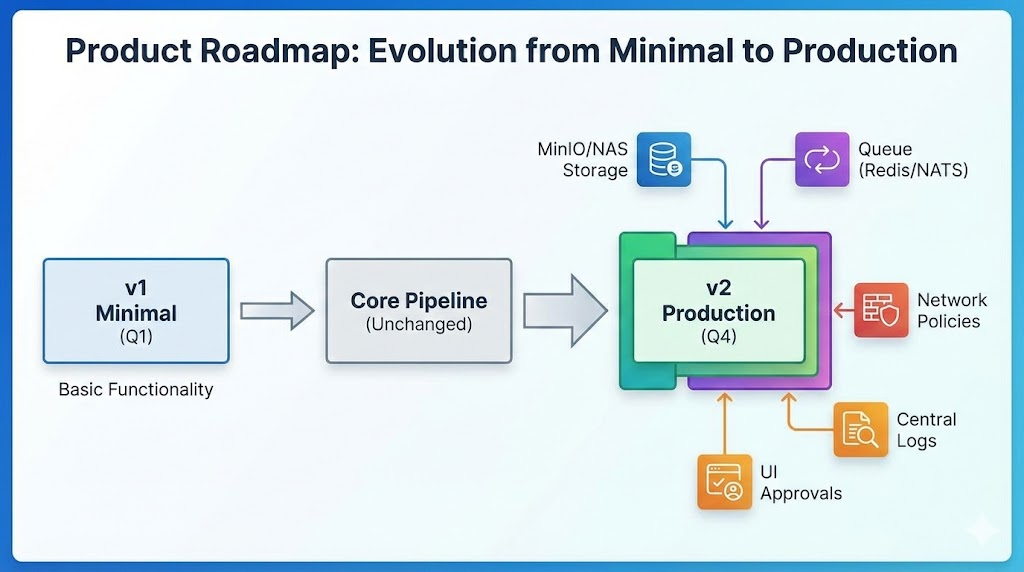

16) Production-shaping upgrades

If you want to evolve beyond “minimal” while staying local-first:

- Replace emptyDir evidence storage with MinIO (S3-compatible) and return object URLs as artifact pointers.

- Add a job queue (Redis + RQ/Celery or NATS) so the worker can run tasks asynchronously and survive restarts.

- Add egress network policies so workers can only reach allowlisted targets.

- Add structured audit logs (JSON lines) and a log pipeline.

- Add a UI that shows “tool calls pending approval” for human-in-the-loop actions.
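The “pending approval” state behind such a UI can start as a small queue. The sketch below is hypothetical: policy.yaml names a high_impact category under require_confirm_for but does not say which tools belong to it, so the TOOL_IMPACT mapping is an assumption you would define yourself. It is in-memory only, so it shares the worker's restart caveat.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Set

# Hypothetical mapping: which tools count as "high_impact" is your decision.
TOOL_IMPACT: Dict[str, str] = {"run_pentest_task": "high_impact"}


@dataclass
class PendingCall:
    call_id: str
    tool: str
    args: Dict[str, Any]
    decision: Optional[bool] = None  # None = waiting, True = approved, False = rejected
    reviewer: Optional[str] = None


@dataclass
class ApprovalQueue:
    require_confirm_for: Set[str]
    calls: Dict[str, PendingCall] = field(default_factory=dict)

    def needs_approval(self, tool: str) -> bool:
        return TOOL_IMPACT.get(tool) in self.require_confirm_for

    def submit(self, tool: str, args: Dict[str, Any]) -> PendingCall:
        call = PendingCall(call_id=str(uuid.uuid4()), tool=tool, args=args)
        self.calls[call.call_id] = call
        return call

    def decide(self, call_id: str, approved: bool, reviewer: str) -> PendingCall:
        call = self.calls[call_id]
        if call.decision is not None:
            raise ValueError(f"call {call_id} was already decided")
        call.decision = approved
        call.reviewer = reviewer
        return call

    def waiting(self) -> List[PendingCall]:
        """What an approval UI would list: calls with no decision yet."""
        return [c for c in self.calls.values() if c.decision is None]


# Usage: the orchestrator parks a high-impact call instead of executing it.
queue = ApprovalQueue(require_confirm_for={"high_impact"})
call = queue.submit("run_pentest_task", {"task_id": "t1"})
print(len(queue.waiting()))  # 1
queue.decide(call.call_id, approved=True, reviewer="alice")
print(len(queue.waiting()))  # 0
```

Recording the reviewer alongside the decision gives the audit log a named human for every high-impact step.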

These don’t change the architectural contract: model via Moonshot API, control locally.

17) Final acceptance checklist

A minimal local-first agentic security platform is “real” only if:

- A target not in allowlist is rejected.

- The model cannot execute arbitrary commands (only tool schema calls).

- Tool calls are logged with a trace ID and timestamps.

- Evidence artifacts are produced and have stable pointers.

- Rate limits and max steps are enforced locally.

- API keys are stored only in Kubernetes secrets, never inside images or logs.

- The system still runs end-to-end when the worker restarts (no single-point “state in memory” in production).

18) Summary

You now have a complete, reproducible Route A tutorial:

- A Mac mini M4 cluster runs the control plane and worker plane.

- A local OpenAI-compatible gateway forwards to the official Kimi API (/v1/chat/completions) with internal auth and audit logging. (Moonshot AI)

- An orchestrator implements a safe tool-calling agent loop.

- A worker executes tasks locally and integrates with Penligent through a single connector boundary.

- K2 Vendor Verifier provides a practical way to measure and enforce tool-call reliability. (GitHub)

If you paste this into a repo and build/push the three images, you can deploy it on k3s today and demonstrate a local-first “agentic hacker” pipeline using authorized lab targets, then swap in the real Penligent task interface when you’re ready—without rewriting the system.