Introduction: The Paradigm Shift from Script Kiddies to Agentic Swarms

In the historical timeline of cybersecurity offense and defense, we are currently navigating a violent fault line. For the past decade, Automated Penetration Testing relied heavily on predefined scripts, static scanning rules, and limited Fuzzing. However, with the rise of Large Language Models (LLMs), specifically models with Long-Context capabilities and strong Reasoning faculties such as Kimi K2.5, the boundaries of offensive security are being fundamentally reconstructed.

We are no longer building a simple “tool”; we are engineering a “Digital Worker.”

This whitepaper provides a profound dissection of an architectural pattern we call “Route A”: “Cloud Brain, Local Body.” We utilize a Mac Mini M4 Cluster to construct a high-concurrency, low-power, absolutely controllable Local Execution Environment. This environment leverages the cloud-based reasoning of Kimi K2.5 via OpenAI-compatible protocols to orchestrate command and control, while ultimately directing the Penligent.ai core offensive engine to execute specific tactical maneuvers.

This is not merely a deployment tutorial; it is an architectural manifesto on how to build AI Red Team infrastructure that is enterprise-compliant, possesses absolute data sovereignty, and is unstoppable in its efficacy.

Architectural Philosophy: Why “Local-First” and Mac Mini M4?

When designing an Agentic Hacker system, we face a core contradiction: The Reasoning Capability of the Model vs. The Safety of Operational Execution.

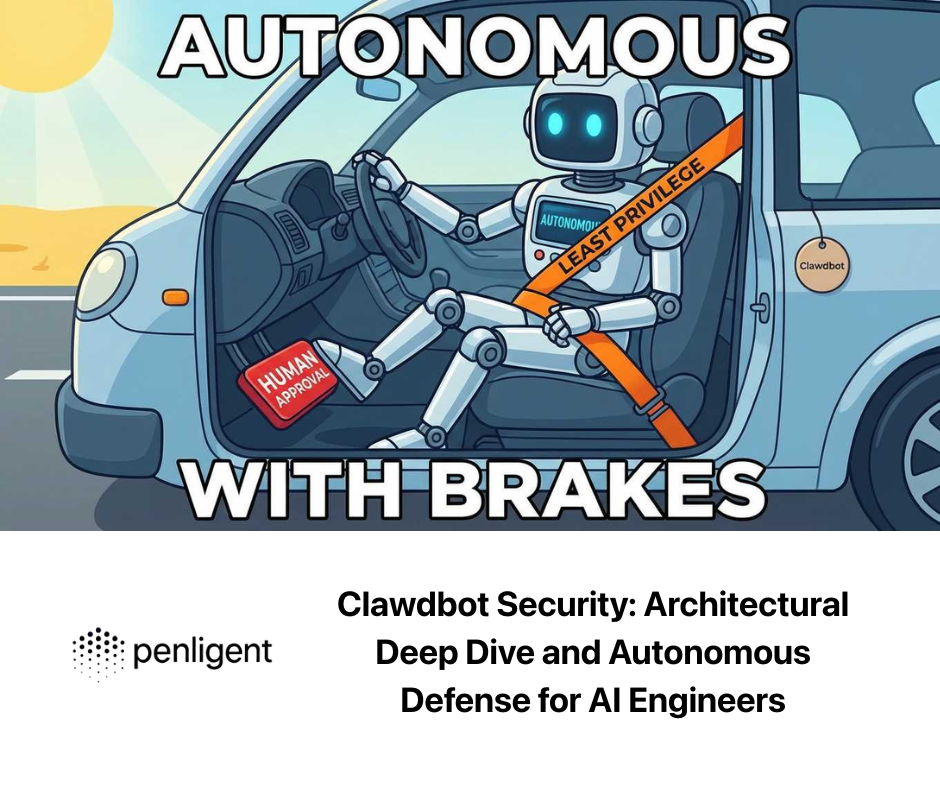

Physical Isolation of “Brain” and “Hand”

Allowing an LLM to run Shell commands directly on a cloud server is “security suicide.” Once a model suffers from hallucinations or falls victim to Prompt Injection, a cloud instance can easily become a jump host for attackers.

The Core Philosophy of “Route A”:

- The Brain (Intelligence): This can be cloud-based (e.g., Kimi K2.5). It is responsible for pure logical reasoning, plan formulation, and tool selection. It never touches the target network directly, nor can it execute code.

- The Body (Kinetic Layer): This must be local (The Mac Mini M4 Cluster). It is responsible for receiving instructions from the “Brain,” passing them through a strict Policy Engine, and executing them within an isolated Sandbox.

- The Senses (Perception): Feedback loops provided via the Penligent.ai interface, including execution results, logs, and vulnerability reports.

Why the Mac Mini M4 Cluster?

In the realm of Edge Computing and Local AI Inference, Apple Silicon’s M4 chip offers a nearly perfect “Sweet Spot”:

- High Concurrency Container Density: The M4 pairs strong single-core performance with a 10-core CPU (4 performance and 6 efficiency cores in the base model). For running the Kubernetes (k3s) control plane, API Gateways, and a high volume of Docker containers (Workers), it offers a superior performance-to-watt ratio compared to similarly priced x86 mini-PCs.

- Unified Memory Architecture: While “Route A” relies on cloud models for reasoning, the M4’s high memory bandwidth (120GB/s+) leaves ample headroom for deploying future local Verifier Models or Vector Databases (RAG), which are essential for long-term memory.

- Physical Controllability: For a Red Team, data must be physically in hand. A cluster composed of 2-3 Mac Minis can easily fit into a backpack, allowing for deployment within a client’s intranet or an isolated lab, achieving true Data Sovereignty.

The Hardware Foundation: Building Your “Portable Data Center”

To support a commercial-grade Agentic system, precise hardware planning is required.

Cluster Topology Design

We recommend the following “Production-Shaped Setup” as a minimum viable configuration:

- Node A (Control Plane – `m4-control`):
  - Role: Kubernetes Master, API Gateway, Policy Engine, Redis (Queue).
  - Spec: Mac Mini M4 (16GB/24GB RAM).
  - Responsibility: Scheduling the “Thinking.” It handles all HTTP requests and maintains task queues but does not run heavy scanning tasks.

- Node B/C (Worker Plane – `m4-worker-x`):
  - Role: Sandbox Executor, Penligent Connector.
  - Spec: Mac Mini M4 Pro (24GB/48GB RAM) or base M4.
  - Responsibility: The “Action.” These nodes run high-density Docker containers, where each container acts as an isolated hacker workstation responsible for calling Penligent.ai APIs or executing specific network requests.

Compute & Throughput Estimation Model

In security operations, hardware scale depends on Task Concurrency rather than Model Parameter size (since the model is in the cloud).

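The capacity formula, which estimates how many Worker containers must run concurrently (with $T$ and $W$ in the same time unit), is:

$$\text{Capacity} \approx \frac{T \times N}{W} + \text{Overhead}$$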

Where:

- $T$: Average Task Runtime (e.g., a complete asset reconnaissance takes 15 minutes).

- $N$: Expected Daily Tasks (e.g., scanning 100 targets per day).

- $W$: Desired Completion Window (e.g., must finish within 4 hours).
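Plugging the example values into the formula gives a quick sanity check: $15 \times 100 / 240 = 6.25$, so roughly 7 Worker containers must run concurrently before any overhead is added. The helper below is a minimal sketch of that calculation; treating Overhead as a fixed number of extra slots (the hypothetical `overhead_slots` parameter) is an assumption, since the text does not define its unit.

```python
import math


def required_worker_slots(avg_task_minutes: float, daily_tasks: int,
                          window_minutes: float, overhead_slots: int = 0) -> int:
    """Concurrent Worker containers needed: ceil(T * N / W) + Overhead.

    T and W must use the same time unit (minutes here). overhead_slots is a
    hypothetical fixed headroom for retries and long-running outliers.
    """
    if window_minutes <= 0:
        raise ValueError("completion window must be positive")
    return math.ceil(avg_task_minutes * daily_tasks / window_minutes) + overhead_slots


# Example from the text: 15-minute recon, 100 targets, 4-hour window.
print(required_worker_slots(15, 100, 4 * 60))     # 7
print(required_worker_slots(15, 100, 4 * 60, 3))  # 10
```

Rounding up matters: 6.25 slots means a seventh container is needed to finish inside the window.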

Once this number is known, compare it with per-machine capacity: the multi-core performance of the Mac Mini M4 lets a single machine run 20–50 lightweight Worker containers in parallel, an efficiency that traditional virtual machines struggle to match.

The Software Ecosystem: The Cloud-Native Hacker Stack

We do not use simple scripts; we employ a Cloud-Native technology stack to build this hacking platform. This ensures scalability, self-healing capabilities, and version control.

OS & Orchestration Layer: macOS + k3s

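Running Kubernetes on macOS sounds counter-intuitive. k3s itself only runs on Linux, so on each Mac it runs inside the lightweight Linux VM provided by Colima (whose `colima start --kubernetes` option is itself built on k3s) or Docker Desktop, and the commands below are run inside that VM. Paired this way, it is the fastest path to production. k3s is a CNCF-certified lightweight K8s distribution that fits perfectly in edge scenarios.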

Key Deployment Actions:

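- **Control Node Initialization:**

```bash
curl -sfL https://get.k3s.io | sh -s - server --write-kubeconfig-mode 644
```

- **Worker Node Joining:** Utilizing the `K3S_TOKEN` (stored at `/var/lib/rancher/k3s/server/node-token` on the control node) together with `K3S_URL` (the control node's API endpoint on port 6443, e.g. `https://m4-control:6443`) allows for seamless scaling. When your penetration testing workload spikes, simply acquire a new Mac Mini, plug in the ethernet, run one command, and compute power is instantly added to the swarm.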

Microservices Architecture

We decouple the system into six distinct microservices, each hosted in Docker containers:

- Model Gateway (Local): The system’s “Firewall.”

- Agent Orchestrator: The system’s “Central Nervous System.”

- Policy Engine: The system’s “Constitution.”

- Sandbox Workers: The system’s “Hands.”

- Evidence Storage: The system’s “Memory.”

- Audit Logs: The system’s “Black Box.”

Deep Dive into Core Components

This is the “Deep Water” zone of the tutorial. We analyze the code logic and the “Why” behind the design.

Model Gateway: Not Just a Proxy, A “Filter”

The Gateway is the single egress point for all requests sent to Kimi K2.5. Its purpose is not just forwarding, but Interception.

Deep Design Considerations:

- Data Redaction: Before a Prompt is sent to the Moonshot API, the Gateway must scan and replace sensitive PII (Personally Identifiable Information), hardcoded passwords, or out-of-scope IP addresses.

- Cost Control (Rate Limiting): Agents often fall into infinite loops. The Gateway layer must enforce a Token Bucket algorithm to limit token consumption per minute, preventing API billing explosions.

- Audit Trail: Every conversation with the AI must be recorded. This is not just for debugging, but for legal proof that “The AI did not go rogue.”
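A minimal sketch of the rate-limiting idea, assuming the Gateway estimates the token cost of each request before forwarding it (for example from prompt length plus `max_tokens`). The class name and the 6,000-tokens-per-minute budget are illustrative, not Moonshot limits.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket limiter applied to LLM token spend rather than request count."""

    def __init__(self, capacity: int, refill_per_minute: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = float(capacity)
        self.refill_per_second = refill_per_minute / 60.0
        self.tokens = float(capacity)  # start full
        self.clock = clock
        self.last = clock()

    def try_consume(self, amount: int) -> bool:
        """Spend `amount` tokens if available; return False to reject the call."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.refill_per_second)
        self.last = now
        if amount <= self.tokens:
            self.tokens -= amount
            return True
        return False


# Usage with a fake clock so the example is deterministic.
fake_now = [0.0]
bucket = TokenBucket(capacity=6000, refill_per_minute=6000, clock=lambda: fake_now[0])
print(bucket.try_consume(5000))  # True: 1,000 tokens left
print(bucket.try_consume(5000))  # False: the agent is throttled
fake_now[0] += 60                # one minute later the bucket is full again
print(bucket.try_consume(5000))  # True
```

In the Gateway, a rejected `try_consume` would naturally map to an HTTP 429 (Too Many Requests) response to the Orchestrator, breaking a runaway agent loop before it becomes a billing spike.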

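```python
# services/gateway/app.py – Deep Dive
import logging
import os

import httpx
from fastapi import FastAPI, Request
from fastapi.responses import JSONResponse

from redaction import redact_sensitive_info  # project-local helper; module name is illustrative

app = FastAPI()
audit_log = logging.getLogger("gateway.audit")
MOONSHOT_BASE_URL = os.environ["MOONSHOT_BASE_URL"]  # Moonshot's OpenAI-compatible base URL
MOONSHOT_API_KEY = os.environ["MOONSHOT_API_KEY"]    # injected as a container secret


def log_audit_metadata(payload: dict) -> None:
    # Record metadata only (model name, message count), never message content.
    audit_log.info("model=%s messages=%d",
                   payload.get("model"), len(payload.get("messages", [])))


@app.post("/v1/chat/completions")
async def chat_completions(req: Request):
    payload = await req.json()  # assumes non-streaming requests

    # 1. Audit: Log raw request metadata (excluding sensitive content)
    log_audit_metadata(payload)

    # 2. Sanitize: Execute Red Team specific redaction logic.
    # Example: Replace the client's real domain with placeholders if the model
    # doesn't need the real domain for its reasoning.
    sanitized_payload = redact_sensitive_info(payload)

    # 3. Forward: Attach the Moonshot API key (this key NEVER leaves the Gateway container)
    async with httpx.AsyncClient(timeout=120.0) as client:
        response = await client.post(
            f"{MOONSHOT_BASE_URL}/chat/completions",
            headers={"Authorization": f"Bearer {MOONSHOT_API_KEY}"},
            json=sanitized_payload,
        )

    # Relay the upstream status code instead of masking errors as 200 OK.
    return JSONResponse(status_code=response.status_code, content=response.json())
```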
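The Gateway code treats `redact_sensitive_info` as a given. One possible shape for it is sketched below, assuming an OpenAI-format payload whose `messages` carry string `content`. The regexes, the placeholder strings, the `domain_map` parameter, and the example scope `192.168.1.0/24` (borrowed from the policy example) are all illustrative assumptions, not a complete PII detector.

```python
import copy
import ipaddress
import re
from typing import Dict, Optional

# Hypothetical per-engagement rules; in practice these would come from configuration.
IN_SCOPE_NETWORKS = [ipaddress.ip_network("192.168.1.0/24")]
IPV4_PATTERN = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
SECRET_PATTERN = re.compile(r"(?i)\b(password|passwd|api[_-]?key|token)\s*[:=]\s*\S+")


def _mask_ip(match: re.Match) -> str:
    try:
        ip = ipaddress.ip_address(match.group(0))
    except ValueError:          # e.g. "999.1.1.1" is not a real address
        return match.group(0)
    if any(ip in net for net in IN_SCOPE_NETWORKS):
        return match.group(0)   # in-scope IPs stay visible for reasoning
    return "[OUT_OF_SCOPE_IP]"


def redact_text(text: str, domain_map: Dict[str, str]) -> str:
    # Swap real client domains for placeholders first.
    for real, placeholder in domain_map.items():
        text = text.replace(real, placeholder)
    # Mask credential-looking key/value pairs, then out-of-scope IPv4 addresses.
    text = SECRET_PATTERN.sub(lambda m: f"{m.group(1)}=[REDACTED]", text)
    return IPV4_PATTERN.sub(_mask_ip, text)


def redact_sensitive_info(payload: dict, domain_map: Optional[Dict[str, str]] = None) -> dict:
    """Return a redacted deep copy of an OpenAI-format chat payload."""
    clean = copy.deepcopy(payload)  # never mutate the caller's request
    for message in clean.get("messages", []):
        if isinstance(message.get("content"), str):
            message["content"] = redact_text(message["content"], domain_map or {})
    return clean
```

Pattern-based redaction like this only catches the obvious cases; it is a first filter in front of the model rather than a guarantee.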

Policy Engine: Code is Law

In Agentic Security, the Trust Boundary is paramount. The Model (Kimi) is untrusted because it may hallucinate; external input is untrusted. Only the Policy Engine is trusted.

Deep Meaning of Policy Configuration (policy.yaml):

```yaml
allowlist:
  - "http://target-company.com"   # Strictly limit target scope
  - "http://192.168.1.0/24"

hard_blocks:
  - "rm -rf"          # Ban destructive commands
  - "drop database"   # Ban data wiping
  - "exfiltrate"      # Ban data exfiltration behaviors

human_in_the_loop:
  threshold: "high"   # High-risk actions require manual approval
  actions:
    - "exploit_execution"
    - "mass_scan"
```

This engine is not a simple Regex matcher; it serves as a semantic analyzer. When the Agent attempts to call run_pentest_task, the Policy Engine intercepts the call, parses parameters, and ensures the target is in the allowlist and the action type is not in hard_blocks before granting passage.
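A minimal sketch of the deterministic part of that check, assuming the Agent's tool call arrives as a dict with `name` and decoded `arguments`, and that the action type travels in a hypothetical `action` argument. The policy is inlined as a dict mirroring `policy.yaml` so no YAML parser is needed; the semantic analysis described above would sit on top of these hard checks, not replace them.

```python
import ipaddress
from urllib.parse import urlparse

# Mirrors policy.yaml; inlined so the sketch has no YAML dependency.
POLICY = {
    "allowlist": ["http://target-company.com", "http://192.168.1.0/24"],
    "hard_blocks": ["rm -rf", "drop database", "exfiltrate"],
    "human_in_the_loop": {"threshold": "high", "actions": ["exploit_execution", "mass_scan"]},
}


def in_scope(target: str, allowlist: list) -> bool:
    """True if the target's host matches an allowlisted domain (or subdomain) or CIDR."""
    host = urlparse(target if "://" in target else f"http://{target}").hostname or ""
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        ip = None
    for entry in allowlist:
        scope = entry.split("://", 1)[-1].rstrip("/")
        try:
            network = ipaddress.ip_network(scope, strict=False)
        except ValueError:  # not a CIDR: treat as a domain name
            if host == scope or host.endswith("." + scope):
                return True
            continue
        if ip is not None and ip in network:
            return True
    return False


def evaluate(tool_call: dict, policy: dict = POLICY) -> str:
    """Return "deny", "needs_approval" or "allow" for a proposed tool call."""
    args = tool_call.get("arguments", {})
    flattened = " ".join(str(v) for v in args.values()).lower()
    if any(block in flattened for block in policy["hard_blocks"]):
        return "deny"
    target = args.get("target")
    if not isinstance(target, str) or not in_scope(target, policy["allowlist"]):
        return "deny"  # default-deny anything missing or out of scope
    if args.get("action") in policy["human_in_the_loop"]["actions"]:
        return "needs_approval"
    return "allow"
```

Substring checks like `hard_blocks` are easy to evade (`rm  -rf` with two spaces slips through), which is exactly why the engine cannot stop at pattern matching.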

Orchestrator: Implementation of the OODA Loop

The Orchestrator does not execute scans; it is responsible for Thinking. It maintains the Agent’s State Machine.

The advantage of Kimi K2.5’s Long Context is fully realized here.

Traditional Agents need to constantly compress history, leading to “forgetting” previous test steps. K2.5 supports ultra-long Context, allowing the Orchestrator to feed the entire Execution Trace, Tool Outputs, and even Target Site HTML Source Code to the model, enabling decisions based on global information.

Orchestrator Pseudo-Code Logic:

- Observe: Retrieve current task status and output from the previous tool.

- Orient: Assemble status into a Prompt, send to Gateway (Kimi).

- Decide: Kimi returns `tool_calls` (e.g., `call_penligent_scan(target="api.target.com")`).

- Verify: Orchestrator sends Tool Call to Policy Engine for verification.

- Act: Upon verification, dispatch task to Worker node for execution.

- Loop: Worker returns result, Orchestrator updates state, return to Step 1.
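The loop above can be sketched in Python as follows, assuming the Gateway call, the Policy Engine, and the Worker dispatch are injected as plain callables; these three parameters and the `max_steps` cap are hypothetical names for illustration. Because K2.5's long context lets the Orchestrator send the whole trace, `AgentState` keeps every step rather than a summary, and blocked calls are recorded too so the model can see why it was refused and re-plan.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class AgentState:
    trace: List[dict] = field(default_factory=list)  # full execution trace, uncompressed
    done: bool = False


def run_ooda(ask_model: Callable[[List[dict]], Optional[dict]],
             check_policy: Callable[[dict], str],
             dispatch: Callable[[dict], str],
             max_steps: int = 20) -> AgentState:
    """Drive Observe -> Orient -> Decide -> Verify -> Act until the model stops."""
    state = AgentState()
    for _ in range(max_steps):  # hard cap in case the agent never converges
        # Observe + Orient: the whole trace is handed to the model via the Gateway.
        tool_call = ask_model(state.trace)
        # Decide: no tool call means the model considers the task complete.
        if tool_call is None:
            state.done = True
            break
        # Verify: the Policy Engine has the final word.
        verdict = check_policy(tool_call)
        if verdict != "allow":
            state.trace.append({"call": tool_call, "result": f"blocked by policy: {verdict}"})
            continue
        # Act: run on a Worker node; Loop: the result feeds the next Observe step.
        state.trace.append({"call": tool_call, "result": dispatch(tool_call)})
    return state


# Usage with stub callables standing in for the Gateway, Policy Engine and Worker.
plan = [{"name": "call_penligent_scan", "arguments": {"target": "api.target.com"}}]
state = run_ooda(
    ask_model=lambda trace: plan[len(trace)] if len(trace) < len(plan) else None,
    check_policy=lambda call: "allow",
    dispatch=lambda call: "scan finished: 2 findings",
)
print(state.done, len(state.trace))  # True 1
```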

Real-World Integration: Calling Penligent.ai

This is the critical link where the “Brain” commands the “High-Precision Weaponry.” Penligent.ai is not just a tool; it is an AI-Enhanced Automated Offensive Platform.

Defining the Tool Schema

To let Kimi understand how to use Penligent, we must precisely define the OpenAI-format JSON Schema.

```json
{
  "type": "function",
  "function": {
    "name": "create_penligent_task",
    "description": "Invoke Penligent.ai's advanced scanning engine for a specific target. Returns a task ID.",
    "parameters": {
      "type": "object",
      "properties": {
        "target": {
          "type": "string",
          "description": "Domain or IP to scan"
        },
        "scan_profile": {
          "type": "string",
          "enum": ["recon", "vuln_scan", "full_attack"],
          "description": "The aggression level of the Penligent scan."
        }
      },
      "required": ["target", "scan_profile"]
    }
  }
}
```
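On the wire, this schema is passed in the `tools` array of an OpenAI-compatible chat-completions request, and the model's choice comes back under `choices[0].message.tool_calls`, with `arguments` encoded as a JSON string. The sketch below builds that request and decodes the reply; the model identifier is left as a parameter because the exact K2.5 model name is an assumption to check against Moonshot's documentation.

```python
import json

# The schema above, as a Python dict.
CREATE_PENLIGENT_TASK = {
    "type": "function",
    "function": {
        "name": "create_penligent_task",
        "description": "Invoke Penligent.ai's advanced scanning engine for a specific target. Returns a task ID.",
        "parameters": {
            "type": "object",
            "properties": {
                "target": {"type": "string", "description": "Domain or IP to scan"},
                "scan_profile": {"type": "string", "enum": ["recon", "vuln_scan", "full_attack"],
                                 "description": "The aggression level of the Penligent scan."},
            },
            "required": ["target", "scan_profile"],
        },
    },
}


def build_request(model: str, messages: list) -> dict:
    """Chat-completions body that offers the tool and lets the model decide."""
    return {"model": model, "messages": messages,
            "tools": [CREATE_PENLIGENT_TASK], "tool_choice": "auto"}


def parse_tool_calls(response: dict) -> list:
    """Extract (id, name, arguments) triples from an OpenAI-format response."""
    message = response["choices"][0]["message"]
    calls = []
    for call in message.get("tool_calls") or []:
        # `arguments` arrives as a JSON-encoded string, not an object.
        args = json.loads(call["function"]["arguments"])
        calls.append((call["id"], call["function"]["name"], args))
    return calls


def tool_result_message(call_id: str, content: str) -> dict:
    """Message that returns a tool's output to the model on the next turn."""
    return {"role": "tool", "tool_call_id": call_id, "content": content}
```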

The Connector

In the Worker node, penligent_connector.py acts as the adapter. It is not just a simple API call; it handles State Polling and Result Standardization.

When Kimi decides to “Scan the target for vulnerabilities”:

- Connector initiates `POST /api/v1/tasks` to the Penligent API.

- Connector suspends (or asynchronously polls), waiting for Penligent to complete complex fingerprinting and vulnerability verification flows.

- Penligent returns a structured vulnerability report (JSON).

- Connector extracts key info (e.g., “SQL Injection found, param id, Payload follows…”). It does not feed the entire 100-page report to the model but extracts Actionable Intelligence to return to the Orchestrator.
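The polling and condensing steps can be sketched as follows. The Penligent.ai API details are not given here, so the HTTP calls are injected as async callables, and every field name (`state`, `findings`, `severity`, `title`, `parameter`) as well as the terminal states `completed`/`failed` are hypothetical placeholders to be mapped onto the real API response.

```python
import asyncio
from typing import Awaitable, Callable, List

SEVERITY_ORDER = {"info": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}


async def run_and_wait(submit: Callable[[], Awaitable[str]],
                       get_status: Callable[[str], Awaitable[dict]],
                       poll_seconds: float = 15.0,
                       timeout_seconds: float = 3600.0) -> dict:
    """Submit a task, then poll until it reaches a terminal state or times out."""
    task_id = await submit()
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout_seconds
    while True:
        status = await get_status(task_id)
        if status.get("state") in ("completed", "failed"):
            return status
        if loop.time() >= deadline:
            raise TimeoutError(f"task {task_id} not finished after {timeout_seconds}s")
        await asyncio.sleep(poll_seconds)  # yield so other tasks on this Worker keep running


def extract_actionable(report: dict, min_severity: str = "medium") -> List[str]:
    """Condense a full report into short lines the Orchestrator can reason over."""
    floor = SEVERITY_ORDER[min_severity]
    lines = []
    for finding in report.get("findings", []):
        severity = finding.get("severity", "info")
        if SEVERITY_ORDER.get(severity, 0) >= floor:
            lines.append(f"{severity.upper()}: {finding.get('title', 'untitled')} "
                         f"(param: {finding.get('parameter', 'n/a')})")
    return lines
```

Filtering by severity before anything reaches the model is what keeps a long report from crowding the Orchestrator's context with low-value detail.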

This is the “Profound” integration: The Agent isn’t blindly trying payloads; it is conducting an orchestra, directing a powerful professional engine, and formulating the next lateral movement or exploitation plan based on that engine’s feedback.

Advanced Optimization & Security Considerations

K2 Vendor Verifier: Ensuring Tool Call Stability

In production environments, a model “misbehaving” is the biggest Bug. Moonshot provides the K2 Vendor Verifier, a toolchain to verify if Model Tool Call outputs conform to Schema specifications.

In the CI/CD pipeline, we must integrate this tool to ensure that every Prompt adjustment or system upgrade does not break the Agent’s accuracy in calling tools. This is crucial for converting a “Demo” into a “Product.”
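The K2 Vendor Verifier's own interface is not reproduced here. As a lightweight stand-in for the same idea, a CI job can replay recorded tool calls and fail the build if any arguments drift from the declared schema. The checker below covers only `required`, unknown keys, `type: string`, and `enum`; a library such as `jsonschema` would handle the full JSON Schema specification.

```python
from typing import Any, Dict, List


def check_arguments(parameters: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Return schema violations for one tool call's decoded arguments (empty = valid)."""
    errors = []
    properties = parameters.get("properties", {})
    for name in parameters.get("required", []):
        if name not in args:
            errors.append(f"missing required argument: {name}")
    for name, value in args.items():
        spec = properties.get(name)
        if spec is None:
            errors.append(f"unexpected argument: {name}")
            continue
        if spec.get("type") == "string" and not isinstance(value, str):
            errors.append(f"{name}: expected string, got {type(value).__name__}")
        if "enum" in spec and value not in spec["enum"]:
            errors.append(f"{name}: {value!r} not in {spec['enum']}")
    return errors


# Parameters of create_penligent_task (descriptions omitted).
PARAMS = {
    "type": "object",
    "properties": {
        "target": {"type": "string"},
        "scan_profile": {"type": "string", "enum": ["recon", "vuln_scan", "full_attack"]},
    },
    "required": ["target", "scan_profile"],
}

# In CI: every recorded model output must still conform.
assert check_arguments(PARAMS, {"target": "api.target.com", "scan_profile": "recon"}) == []
print(check_arguments(PARAMS, {"target": "api.target.com", "scan_profile": "nuke"}))
# prints: ["scan_profile: 'nuke' not in ['recon', 'vuln_scan', 'full_attack']"]
```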

Localized Prompt Engineering

Kimi K2.5 performs exceptionally well in complex reasoning. In the System Prompt, we must emphasize:

- Role Definition: “You are not just a hacker; you are a ‘Responsible Security Auditor’.”

- Chain of Thought (CoT) Enforcement: Require the model to output a `<thought>` tag before issuing a Tool Call, explaining why it wants to execute this operation. This helps human auditors in the “Human-in-the-loop” phase quickly decide whether to approve the action.
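A minimal sketch of how the Orchestrator might enforce this, assuming the model places the `<thought>` block in the assistant message's `content` alongside its `tool_calls`. The prompt wording and the helper names are illustrative; a missing rationale is treated as a reason to re-prompt rather than to ask a human to approve blindly.

```python
import json
import re
from typing import Optional

SYSTEM_PROMPT = (
    "You are not just a hacker; you are a 'Responsible Security Auditor'. "
    "Before every tool call, write your reasoning inside <thought>...</thought>, "
    "explaining why the operation is necessary and what you expect to learn."
)

THOUGHT_PATTERN = re.compile(r"<thought>(.*?)</thought>", re.DOTALL)


def extract_thought(content: Optional[str]) -> Optional[str]:
    """Return the first <thought> block's text, or None if the model omitted it."""
    match = THOUGHT_PATTERN.search(content or "")
    return match.group(1).strip() if match else None


def approval_card(content: Optional[str], name: str, arguments: dict) -> Optional[str]:
    """Summary shown to the human reviewer; None means re-prompt the model instead."""
    thought = extract_thought(content)
    if not thought:
        return None
    return f"Proposed: {name}({json.dumps(arguments)})\nRationale: {thought}"
```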

Why “Absolute Sovereignty”?

In this architecture, all data flow is under your control:

- Prompt: Sanitized via Gateway.

- Execution: Inside Docker on local Mac Minis.

- Results: Stored on local disk or private MinIO.

- Model: Although utilizing an API, due to protocol-level isolation, the model itself cannot “steal” your vulnerability data because it only sees sanitized fragments and tool execution summaries.

Future Outlook: Moving Toward Route B (Fully Local)

While today we rely on Kimi K2.5’s API for peak reasoning capabilities, the potential of the Mac Mini M4 Cluster goes far beyond this. With the maturation of frameworks like Ollama and MLX, and the performance improvements of distilled Open Source models (e.g., Llama 3, Mistral), the future architecture will evolve into:

- Small Model (Local): Running 7B/13B models on the M4’s GPU (Ollama and MLX both run inference through Metal rather than the Neural Engine) to handle high-frequency, simple tactical decisions (e.g., judging if a port is open).

- Large Model (Cloud): Escalating through the Gateway to Kimi K2.5 only when encountering complex logic or requiring deep reasoning.
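The edge-cloud split can be sketched as a simple cascade: short, simple tactical questions go to the local model first, and the request escalates to the cloud when the task is complex or the local model defers. The task labels, the size threshold, and the `UNSURE` convention are assumptions for illustration; the two model calls are injected so the local side could be backed by Ollama or MLX and the cloud side by the Gateway.

```python
from typing import Callable, Tuple

# Hypothetical labels for high-frequency, simple tactical decisions.
SIMPLE_TASKS = {"port_state", "http_status", "banner_match"}


def route(task_kind: str, prompt: str,
          local_llm: Callable[[str], str],
          cloud_llm: Callable[[str], str],
          max_local_chars: int = 8000) -> Tuple[str, str]:
    """Answer locally when possible; return (answer, tier) with tier "local" or "cloud"."""
    if task_kind in SIMPLE_TASKS and len(prompt) <= max_local_chars:
        answer = local_llm(prompt)
        if answer.strip().upper() != "UNSURE":  # the local model may explicitly defer
            return answer, "local"
    return cloud_llm(prompt), "cloud"           # escalate through the Gateway
```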

This will form the ultimate Edge-Cloud Hybrid morphology, combining extreme response speed with deep intelligence.

Conclusion

By deploying this system based on the Mac Mini M4 Cluster and Penligent.ai, you gain more than just an automation tool. You gain a Red Team Special Forces Unit that is on standby 24/7, strictly disciplined, possesses top-tier cloud intelligence, yet maintains absolute private data sovereignty.

This is the Tao of Offense and Defense in the Agentic AI Era: Governing the Power of Intelligence with the Discipline of Architecture.

References: