Security teams have learned the hard way that the products marketed as “remote support,” “privileged access,” or “remote access” rarely behave like ordinary line-of-business apps once they’re deployed. They sit at the intersection of authentication, session handling, device reachability, and operator trust. That intersection is exactly where an attacker wants to stand, because the blast radius is measured in administrator reach, not in a single compromised page or a single leaked table.

That’s the frame you should bring to CVE-2026-1731 in BeyondTrust’s products. BeyondTrust’s own advisory describes a critical pre-authentication remote code execution condition, triggered through specially crafted client requests, that can allow an unauthenticated attacker to execute operating system commands in the context of the site user. The downstream impacts are familiar but consequential: unauthorized access, data exfiltration, and service disruption. (BeyondTrust)

The reason this CVE keeps showing up in high-click headlines isn’t just the near-max severity score. It’s the operational narrative that engineers recognize instantly: “pre-auth,” “critical,” “patch now,” “PoC,” “in the wild.” Those words are the shorthand for a narrowing window where your environment is either provably not exposed, or it’s being treated as a target by scanners that don’t care who you are. The Hacker News’ reference coverage explicitly reports active exploitation being observed and describes a flow where attackers probe portal-related functionality and follow up with a WebSocket-style channel setup, based on third-party observations. (The Hacker News)

This article is written for people who need to walk into a post-incident review and defend their decisions with evidence. It’s also written for the folks who have to do the work: inventory, exposure validation, patching, containment, log hunting, and “prove it” artifacts that survive scrutiny.

What CVE-2026-1731 is, in plain terms

You can summarize the vulnerability in a single sentence, but the details matter because they dictate the response.

BeyondTrust identifies CVE-2026-1731 as a pre-authentication remote code execution vulnerability in BeyondTrust Remote Support (RS) and certain older versions of Privileged Remote Access (PRA). The root class is OS command injection (CWE-78), and the successful outcome is command execution in the context of the product’s site user. (BeyondTrust)

NVD mirrors that framing: an unauthenticated remote attacker may be able to execute OS commands via specially crafted requests, again emphasizing the site user context. (nvd.nist.gov)

Rapid7’s ETR write-up tracks the same core claims and is useful as a second source when you’re writing internal advisories or aligning remediation guidance for large fleets, because it restates the vendor position in “security operations language.” (Rapid7)

If you’ve been around enough incidents, you’ll notice how quickly a command injection in a gateway-class product stops being an “application bug” and becomes an “identity boundary bug.” Remote support tooling is the kind of surface where execution is rarely the final step; it’s the first step in converting trust into reach.

Why the “CVSS 9.9” headline is less important than the timeline

Severity scores are good at telling you “this is bad.” They are not good at telling you “what you should do next Monday morning.”

For CVE-2026-1731, the timeline is the part that should drive your triage decisions. BeyondTrust’s advisory includes concrete statements about patch availability and observed activity. It notes that patches were applied broadly for customers (and that environments subscribed to update services are handled differently), while also warning self-hosted operators who are not on automatic updates to apply patches manually and, if they’re on very old versions, to upgrade into supported release lines before patching. (BeyondTrust)

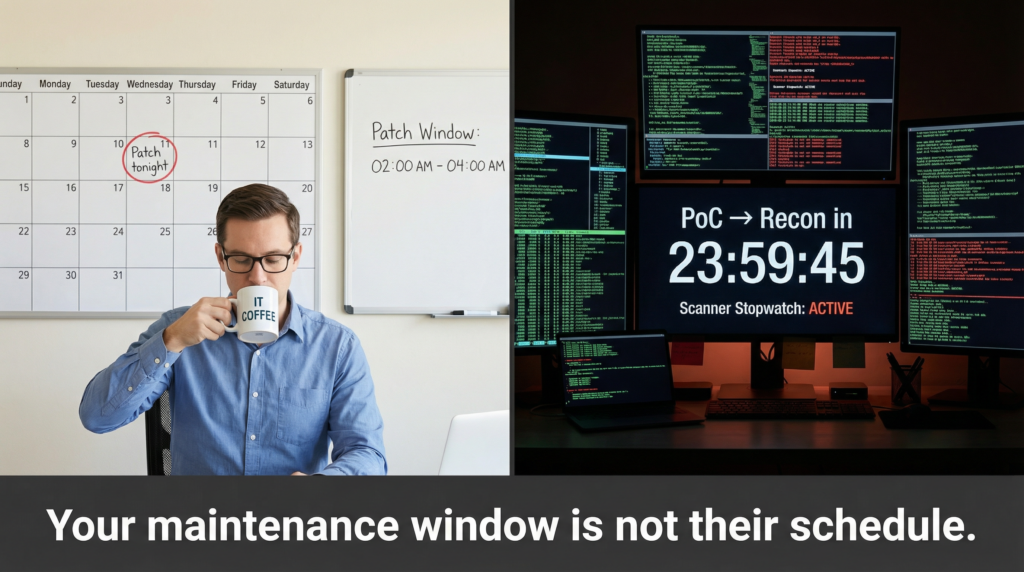

Separately, public reporting and threat intel commentary describe a familiar progression: PoC appears or technical detail becomes actionable, reconnaissance begins quickly, and exploitation attempts follow on the heels of scanning. GreyNoise explicitly describes reconnaissance beginning within roughly a day of PoC visibility, which is the kind of empirical “time-to-probe” number that changes how you justify emergency windows. (GreyNoise)

The Hacker News reference post then pushes the narrative further into the “in-the-wild” zone, pointing to exploitation being observed and describing a pattern where portal-related probing and WebSocket channel establishment are part of the activity trail being discussed by researchers. (The Hacker News)

Even when sources disagree on the extent of exploitation at a precise moment (which happens frequently early in an incident), you should treat the combination of “pre-auth RCE + internet-facing remote access surface + PoC/recon telemetry” as the trigger for a response posture that assumes the window is already closing.

The highest-click language around this CVE, and what it signals operationally

If you skim the major coverage and vendor-adjacent writeups, you’ll see the same click-driving phrases repeated: “critical,” “pre-auth,” “unauthenticated,” “remote code execution,” “in-the-wild exploitation,” “PoC released,” “patch now,” “identity infrastructure.”

Those phrases show up because they map to the questions security leaders ask under pressure, and because they compress complex reasoning into something headline-sized. When SecurityWeek writes that threat actors began targeting the vulnerability shortly after PoC release, it’s not giving you a new exploit detail; it’s telling you that the exploitation economics have shifted from “researchers only” to “anyone with a scanner.” (SecurityWeek)

When SC Media frames it as exposing identity infrastructure, it’s highlighting the real business risk: these products often sit on the path to privileged access and lateral movement. (SC Media)

When Cybersecurity Dive mentions early signs of exploitation and connects the story to broader identity-perimeter failures, it’s reinforcing the same conclusion: you triage this like a gateway incident, not a minor app patch. (Cybersecurity Dive)

Keep the language, but translate it into work. “Patch now” becomes “patch and prove.” “In the wild” becomes “assess compromise if you were exposed during the window.” “Pre-auth” becomes “exposure is the first risk; version is the second; evidence is the third.”

The first job is not “patching.” The first job is proving whether you are reachable.

There’s a trap in modern vulnerability response: teams rush to patch before they can even say which assets matter. That’s how you end up in a meeting where someone asks, “Are we exposed?” and the room goes quiet while Jira updates scroll by.

For gateway-class products, reachability is the primary risk amplifier. A fully unpatched instance behind strict network allowlisting behaves very differently from a fully patched instance that is accidentally exposed with weak edge controls and poor logging.

So the first job is to build an exposure picture that you can defend.

In practice, that means you take your asset inventory, your DNS surface, your TLS certificate patterns, your reverse proxy configurations, and your firewall/NAT rules, and you reconcile them until the set stops changing. If you have a neat CMDB, that’s a great starting point, but it’s rarely the truth. The truth is what the internet can hit.
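The reconciliation step above can be sketched as a set comparison. This is a minimal illustration, not a prescribed tool: the source names (`cmdb`, `dns`, `firewall_nat`) and the example hostnames are hypothetical, and it assumes each source can be exported as a plain list of hostnames.

```python
from typing import Dict, List, Set


def reconcile(sources: Dict[str, Set[str]]) -> Dict[str, object]:
    """Merge hostname sets from several sources and flag disagreements."""
    # Normalize case, surrounding whitespace, and trailing dots so that
    # "Remote.Example.com." and "remote.example.com" count as the same host.
    normalized = {
        name: {h.strip().lower().rstrip(".") for h in hosts if h.strip()}
        for name, hosts in sources.items()
    }
    all_hosts: Set[str] = set().union(*normalized.values())
    # A host is contested when at least one source does not know about it.
    missing_from: Dict[str, List[str]] = {}
    for host in all_hosts:
        gaps = sorted(name for name, hosts in normalized.items() if host not in hosts)
        if gaps:
            missing_from[host] = gaps
    return {"all_hosts": sorted(all_hosts), "missing_from": missing_from}


if __name__ == "__main__":
    result = reconcile({
        "cmdb": {"support.example.com"},
        "dns": {"support.example.com", "Remote.Example.com."},
        "firewall_nat": {"remote.example.com"},
    })
    for host, gaps in sorted(result["missing_from"].items()):
        print(f"{host}: not present in {', '.join(gaps)}")
```

Rerunning this after each inventory correction gives a concrete meaning to "until the set stops changing": the `missing_from` map is empty, or every remaining entry has a written explanation.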

A pragmatic technique that works in real organizations is to validate “from the outside,” not from your corporate network. Engineers often test reachability from a mobile hotspot or a cloud shell so they’re not fooled by internal routing. It’s not fancy; it’s reliable.

If you need a lightweight, non-exploitative check that can be run as part of an exposure sweep, you can use a script that does only what your browser would do: resolve a hostname, establish TLS, fetch a landing page, and record status codes plus headers. The point isn’t to fingerprint the product beyond necessity; the point is to create an evidence trail that says “this hostname is externally reachable” or “this hostname is not externally reachable.”

#!/usr/bin/env bash
# Safe reachability sweep: no exploit, no special payloads.
# Usage: ./reachability_sweep.sh hosts.txt
set -euo pipefail

INPUT="${1:-hosts.txt}"
OUT="reachability_$(date -u +%Y%m%dT%H%M%SZ).csv"
echo "host,ip,tls_sni,http_code,server,location" > "$OUT"

while IFS= read -r host; do
  # Skip blank lines and comments in the host list.
  [[ -z "${host}" || "${host}" =~ ^# ]] && continue

  # First IPv4 answer, if any (CNAME lines are filtered out).
  # "|| true" keeps a failed lookup from aborting the whole sweep.
  ip="$(dig +short "$host" A | grep -E '^[0-9]+(\.[0-9]+){3}$' | head -n 1 || true)"

  # Use HTTPS by default; follow redirects; short timeout to avoid hanging.
  # Capture a few headers for evidence.
  resp="$(curl -skL --max-time 8 -D - -o /dev/null "https://${host}/" || true)"

  # Status of the last response in the redirect chain; 0 means no HTTP response at all.
  code="$(printf "%s" "$resp" | awk 'tolower($0) ~ /^http\// {c=$2} END{print c+0}')"
  server="$(printf "%s" "$resp" | awk -F': ' 'tolower($1)=="server"{print $2}' | tail -n 1 | tr -d '\r' | tr ',' ' ')"
  loc="$(printf "%s" "$resp" | awk -F': ' 'tolower($1)=="location"{print $2}' | tail -n 1 | tr -d '\r' | tr ',' ' ')"

  echo "${host},${ip},${host},${code},${server:-},${loc:-}" >> "$OUT"
done < "$INPUT"

echo "Wrote: $OUT"

If your organization has a policy against running even a benign curl sweep, you can achieve the same outcome from your edge logs and DNS tooling, but you lose the tactile “external truth” that makes incident calls easier.
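Once a sweep has produced its CSV, the "reachable" versus "not reachable" statement can be derived mechanically. The sketch below assumes the column names written by the sweep script (`host`, `http_code`) and treats any HTTP status, including 401 or 403, as proof of reachability; the function name `classify_sweep` is illustrative.

```python
import csv
import io
from typing import Dict, List, TextIO


def classify_sweep(rows: TextIO) -> Dict[str, List[str]]:
    """Split sweep results into hosts that answered over HTTPS and hosts that did not."""
    buckets: Dict[str, List[str]] = {"reachable": [], "no_response": []}
    for row in csv.DictReader(rows):
        code = (row.get("http_code") or "0").strip()
        # The sweep records 0 when curl never received an HTTP status line.
        answered = code.isdigit() and int(code) > 0
        buckets["reachable" if answered else "no_response"].append(row["host"])
    return buckets


if __name__ == "__main__":
    sample = io.StringIO(
        "host,ip,tls_sni,http_code,server,location\n"
        "support.example.com,203.0.113.10,support.example.com,200,nginx,\n"
        "internal.example.com,,internal.example.com,0,,\n"
    )
    print(classify_sweep(sample))
```

A "no_response" result from one vantage point is evidence, not proof. It is worth repeating the sweep from a second external network before recording a host as unreachable.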

Patching is the second job, and “patching with proof” is the only version that counts

Once you know what is reachable, patching becomes tractable. But the mistake teams repeat is treating patching as a checkbox rather than a claim that requires proof.

BeyondTrust’s guidance is explicit about patch distribution and how self-hosted customers may need to manually apply fixes if they’re not subscribed to automatic updates. It also signals that older versions might need to be upgraded before patching is possible in a supported way. (BeyondTrust)

Rapid7’s ETR reinforces the same remediation posture: identify RS and PRA versions, prioritize internet-facing instances, apply vendor patches, and handle older versions by upgrading into supported branches. (Rapid7)

Here’s what “patch with proof” looks like in a way that survives audit and postmortem:

You can show the pre- and post-change build identifiers; you can show the patch package or vendor bulletin reference used; you can show that the asset’s exposure status changed, if it changed; you can show that logging was increased during the high-risk period.
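One way to make those four claims durable is to capture them as a single record per host and seal it with a digest at the time of the change. The sketch below is an assumption about how such a record might look, not a vendor format: the class name, field names, and build strings are hypothetical, and only the advisory ID comes from the reference list.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class PatchEvidence:
    host: str
    build_before: str                   # build identifier recorded before the change
    build_after: str                    # build identifier recorded after the change
    advisory_ref: str                   # vendor bulletin or patch package reference
    externally_reachable_before: bool
    externally_reachable_after: bool
    verbose_logging_enabled: bool
    recorded_at: str = ""               # ISO 8601 UTC; filled in when sealed if empty

    def sealed(self) -> dict:
        """Return the record plus a SHA-256 digest of its canonical JSON form."""
        body = asdict(self)
        if not body["recorded_at"]:
            body["recorded_at"] = datetime.now(timezone.utc).isoformat()
        canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
        body["sha256"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
        return body


if __name__ == "__main__":
    record = PatchEvidence(
        host="support.example.com",
        build_before="build-A",         # placeholder, not a real version string
        build_after="build-B",          # placeholder, not a real version string
        advisory_ref="BT26-02",
        externally_reachable_before=True,
        externally_reachable_after=False,
        verbose_logging_enabled=True,
    )
    print(json.dumps(record.sealed(), indent=2))
```

The digest does not prove the record is true. It only lets a reviewer confirm later that the record attached to the change ticket has not been edited since it was written.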

That last part, temporarily increasing logging, is often overlooked. It’s one of the cheapest ways to add confidence if you later need to assert “no evidence of exploitation,” and it’s especially important when public coverage suggests exploitation attempts are happening quickly after PoC availability. (The Hacker News)

If you were internet-facing during the window, you should treat “compromise assessment” as a normal step, not an accusation

There’s a cultural problem in some orgs where “assess compromise” is interpreted as “we must be breached.” That’s not the right mental model.

A compromise assessment is simply the engineering response to uncertainty. When you have a pre-auth RCE in a boundary product, and multiple sources describe rapid reconnaissance and targeting shortly after PoC, your uncertainty is not small. (GreyNoise)

So you do the sane thing: you contain risk, you preserve evidence, you hunt for patterns, and you rotate credentials that could make a partial intrusion durable.

Containment, in a practical sense, is about shrinking the attacker’s options faster than they can iterate. Many teams start by removing direct internet reachability, then restricting access to a known allowlist (or to a VPN / zero trust proxy), then validating that access control actually took effect by testing from outside.

Evidence preservation is about making sure your remediation doesn’t destroy the data you need to answer questions later. In incidents involving remote support tooling, those questions are almost always about session creation, unusual admin actions, new accounts, token reuse, and unexpected outbound connections.
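A simple way to protect that data is to copy the relevant logs aside and record a hash manifest before any patch or restart touches the system. The following is a minimal sketch under that assumption; the directory layout is up to you, and `build_manifest` is an illustrative name rather than part of any product.

```python
import hashlib
from pathlib import Path
from typing import Dict


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large logs do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(log_dir: Path) -> Dict[str, str]:
    """Map each file under log_dir (by relative path) to its SHA-256 digest."""
    return {
        str(p.relative_to(log_dir)): sha256_file(p)
        for p in sorted(log_dir.rglob("*"))
        if p.is_file()
    }
```

Storing the manifest somewhere the affected host cannot write to is what gives it value: if the preserved copies are questioned later, recomputing the digests shows whether they changed after collection.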

Credential rotation is about acknowledging that even if an attacker didn’t fully “own” the box, they may have achieved what they needed: a foothold, a token, or a way to re-enter.

Hunting strategy: look for primitives you can observe, not exploit strings you can’t trust

Early in an incident, detection content tends to oscillate between too vague and too brittle. The way out is to hunt for primitives that attackers can’t avoid if they’re using the reported flows.

The Hacker News reference post describes researcher observations that include probing for portal information and then establishing a WebSocket channel, which is the kind of sequence that leaves a footprint in reverse proxy logs, WAF telemetry, and application access logs, especially if you log response codes and upgrade headers. (The Hacker News)

GreyNoise’s reconnaissance reporting doesn’t tell you what payloads look like, but it does confirm the reality of broad scanning pressure, which means you should expect bursts of requests with high error rates, repeated attempts across multiple paths, and a subset that progresses into more “session-like” behavior. (GreyNoise)

That combination leads to a simple hunting posture: you pivot on suspicious request sequences, unusual header transitions, and protocol upgrades. You don’t need to know the exploit to recognize that a portal-probing sequence followed by a WebSocket upgrade is worth scrutiny in the context of this CVE story.
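The "probe, then upgrade" primitive can be expressed as a small sequence check over already-parsed log events. This is a sketch under stated assumptions: the `Event` shape is hypothetical, "portal" as a URL substring is only a stand-in for whatever portal-related paths your logs show, and the five-minute window is an arbitrary starting point rather than a reported attacker timing.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Event:
    ts: float            # epoch seconds
    src_ip: str
    url: str
    upgrade: str = ""    # value of the Upgrade request header, if logged


def probe_then_upgrade(
    events: Iterable[Event], window_s: float = 300.0
) -> List[Tuple[str, float, float]]:
    """Return (src_ip, probe_ts, upgrade_ts) for each WebSocket upgrade that
    follows a portal-like request from the same source within window_s."""
    last_probe: Dict[str, float] = {}
    findings: List[Tuple[str, float, float]] = []
    for ev in sorted(events, key=lambda e: e.ts):
        if ev.upgrade.lower() == "websocket":
            probe_ts = last_probe.get(ev.src_ip)
            if probe_ts is not None and ev.ts - probe_ts <= window_s:
                findings.append((ev.src_ip, probe_ts, ev.ts))
        elif "portal" in ev.url.lower():
            # Remember only the most recent probe per source.
            last_probe[ev.src_ip] = ev.ts
    return findings
```

A hit here is a lead for an analyst, not a verdict. Legitimate clients may also load a portal page and then open a WebSocket, so known-good sources and expected session volume still need to be considered.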

Here is a KQL query template that keeps its intent clear: it tries to find hosts where portal-like probing and WebSocket-ish upgrades cluster by source IP. You will need to adapt the field names to your log normalization.

let lookback = 7d;
// Template only: a column that does not exist in your table is a query error,
// so trim each coalesce() to the fields your connector actually populates.
CommonSecurityLog
| where TimeGenerated > ago(lookback)
| extend url = tostring(coalesce(RequestURL, RequestUri, Url))
| extend ua = tostring(coalesce(UserAgent, HttpUserAgent))
| extend hdr = tostring(coalesce(AdditionalFields, DeviceCustomString1, DeviceCustomString2))
| extend ws_hint = hdr has_any ("websocket", "Upgrade", "Switching Protocols")
| where url has_any ("get_portal_info", "portal", "ws", "websocket") or ws_hint
| summarize
    hits = count(),
    firstSeen = min(TimeGenerated),
    lastSeen = max(TimeGenerated),
    urls = make_set(url, 25),
    codes = make_set(ResponseCode, 25),
    uas = make_set(ua, 10)
    by SourceIP, DestinationHostName
| where hits > 10
| order by hits desc

If your world is Splunk, the same logic can be expressed without pretending you know exact paths. The goal is to surface suspicious clusters, then let an analyst drill down.

(index=proxy OR index=nginx OR index=alb OR index=waf) earliest=-7d
| eval url=coalesce(uri_path, uri, request, url)
| eval ua=coalesce(user_agent, http_user_agent)
| eval up=lower(coalesce(http_header_upgrade, upgrade, req_header_upgrade))
| where like(url, "%portal%") OR like(url, "%get_portal_info%") OR like(url, "%websocket%") OR up="websocket"
| stats count as hits min(_time) as first_seen max(_time) as last_seen values(url) as urls values(status) as codes values(ua) as uas by src_ip, dest_host
| where hits > 10
| sort - hits

These are not “exploit detection” queries. They are “incident triage” queries. The difference matters because exploit detection tends to fail the moment a minor variation appears, while triage queries remain useful as long as the attacker’s behavior has to pass through the same gate.

SIEM field mapping: make sure the data can actually support the hunt

One reason teams struggle in incidents like this is that logs exist, but they’re not aligned. Your reverse proxy calls a field remote_addr; your WAF calls it src_ip; your SIEM calls it SourceIP but only sometimes. It’s boring work, but it’s the work that turns “we think we’re fine” into “we can prove we checked.”

Here is a compact mapping table that’s designed to be useful across common edge logging stacks:

| Detection objective | Reverse proxy fields (examples) | WAF/edge fields (examples) | Suggested normalized field |
|---|---|---|---|
| Source IP | remote_addr, client_ip | src_ip | SourceIP |
| Destination host | host, server_name | dst_host, host | DestinationHostName |
| URL/path | uri, uri_path, request | url, path | RequestURL |
| HTTP method | request_method | method | HttpMethod |
| Response code | status | status_code | ResponseCode |
| User agent | http_user_agent | user_agent | UserAgent |
| WebSocket upgrade signal | header capture / $http_upgrade | upgrade flag | IsWebSocket |
| Custom headers (if logged) | http_* captures | header KV | CustomHeaders |

If public reporting highlights that certain portal-info probing and channel establishment steps are part of observed exploitation behavior, having upgrade header visibility is an easy win. (The Hacker News)
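The mapping table can be applied as a small normalization pass before events reach the SIEM. This sketch uses the example field names from the table and makes two assumptions of its own: the upgrade header arrives under a key named `http_upgrade` or `upgrade`, and the first non-empty alias wins.

```python
from typing import Any, Dict, Tuple

# Source-field aliases taken from the mapping table; extend for your own stack.
FIELD_ALIASES: Dict[str, Tuple[str, ...]] = {
    "SourceIP": ("remote_addr", "client_ip", "src_ip"),
    "DestinationHostName": ("host", "server_name", "dst_host"),
    "RequestURL": ("uri", "uri_path", "request", "url", "path"),
    "HttpMethod": ("request_method", "method"),
    "ResponseCode": ("status", "status_code"),
    "UserAgent": ("http_user_agent", "user_agent"),
}


def normalize(record: Dict[str, Any]) -> Dict[str, Any]:
    """Project one raw log record onto the normalized field names."""
    out: Dict[str, Any] = {}
    for target, aliases in FIELD_ALIASES.items():
        for alias in aliases:
            value = record.get(alias)
            if value not in (None, ""):
                out[target] = value
                break
    # Assumed key names for the logged Upgrade header; adjust to your log format.
    upgrade = str(record.get("http_upgrade") or record.get("upgrade") or "")
    out["IsWebSocket"] = upgrade.lower() == "websocket"
    return out
```

Fields that no alias supplies are simply absent from the output, which makes coverage gaps visible: if `UserAgent` never appears for one log source, that source is not logging it.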

The “related CVEs” angle: why CVE-2026-1731 should sit in a gateway-class playbook

A good incident response program doesn’t treat every CVE as a new story. It groups them by operational class.

CVE-2026-1731 belongs in the same mental bucket as the remote support and gateway vulnerabilities that taught the industry what “internet-facing management plane” really means. That bucket includes, for example, ScreenConnect’s authentication-bypass era incidents and the Citrix gateway wave where session materials became the story rather than the patch itself. Even when the mechanics differ, the response posture converges: prioritize exposure, patch with proof, assume high scanning pressure, and conduct compromise assessment for any system that remained reachable during the high-risk window.

When you standardize that response posture, you stop reinventing the wheel every time a boundary product ships a critical advisory.

If you run security programs at scale, you already know that the hardest part is not learning what the CVE is. The hardest part is making sure the same sequence of actions happens every time: exposure validation, remediation, proof capture, and reporting that is persuasive to both engineers and leadership.

This is where a workflow-oriented platform can actually help, if it’s used correctly. The practical value is in turning playbooks into repeatable work and ensuring the output includes evidence artifacts: which hosts were tested for external reachability; what version state was recorded; what change was applied; what logs were queried; what anomalies were found; what was concluded.

Penligent’s own CVE-2026-1731 write-up is already positioned in this “triage like an internet-exposed identity system” frame, and that’s the right framing if you’re using the product to help standardize verification and reporting across environments. (Penligent)

If you want an internal knowledge base that helps engineers prove they’re not exposed, rather than merely claiming “we updated,” the pattern across Penligent’s incident-style articles is consistent: verification, segmentation, hardening controls, and detection that hunts for primitives when the specific CVE changes. That pattern is exactly what makes gateway-class incidents survivable. (Penligent)

References

BeyondTrust Security Advisory BT26-02 (vendor source of truth for CVE-2026-1731). (BeyondTrust)

NVD entry for CVE-2026-1731 (standard record used by many vulnerability management pipelines). (nvd.nist.gov)

Rapid7 ETR analysis for CVE-2026-1731 (ops-focused interpretation aligned to vendor guidance). (Rapid7)

The Hacker News article on observed exploitation activity. (The Hacker News)

GreyNoise reconnaissance reporting for CVE-2026-1731 (time-to-probe perspective). (GreyNoise)

SecurityWeek coverage on targeting shortly after PoC release (incident context). (SecurityWeek)

SC Media coverage framing risk to identity infrastructure (context). (SC Media)

Darktrace write-up discussing observed exploitation wave (telemetry perspective). (Darktrace)

Cybersecurity Dive coverage connecting early exploitation signs to broader identity/perimeter risk (context). (Cybersecurity Dive)

Penligent: CVE-2026-1731 BeyondTrust RS/PRA triage article. (Penligent)

Penligent: CVE-2026-1868 GitLab AI Gateway RCE article. (Penligent)

Penligent: CVE-2026-20841 Notepad link-to-code risk article. (Penligent)