When the average executive reads about the kind of cybersecurity assessment the NYT often covers, usually one involving a state-sponsored breach or a massive data leak, they imagine a binary outcome: secure or compromised. But for the hard-core security engineer, the reality is a nuanced, continuous battle of graph theory, race conditions, and deserialization flaws that no mainstream article can fully capture.

While the New York Times focuses on the geopolitical fallout, we need to focus on the kill chain. This guide bridges the gap between public perception and technical execution, focusing on the shift from static scanning to agentic AI operations in 2026.

The Gap Between Mainstream Reporting and Engineering Reality

In the kind of cybersecurity assessment the NYT might describe, the focus is often on “phishing” or “weak passwords.” While valid, this ignores the systemic architectural rot that allows attackers to pivot from a low-privileged web shell to a domain controller.

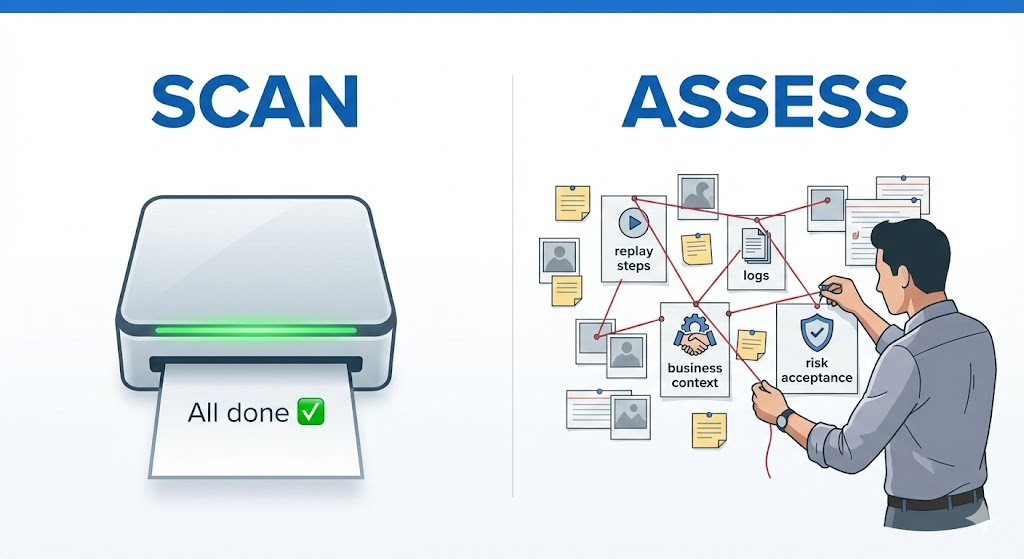

For the modern security engineer, an assessment is no longer just running Nessus or Qualys and handing over a PDF. It involves three distinct layers of depth that define the modern attack surface:

- Static Application Security Testing (SAST): Analyzing source code for insecure patterns and known vulnerable signatures.

- Dynamic Application Security Testing (DAST): Fuzzing endpoints for runtime errors.

- Agentic Red Teaming: The new frontier where AI agents autonomously chain vulnerabilities to prove impact.

The Failure of Legacy Scanners in 2026

Traditional vulnerability scanners are deterministic. They send a payload, check the HTTP response code, and move on. They fail to understand application logic.

| Feature | Legacy Scanner (Nessus/OpenVAS) | Modern AI Agent (Penligent/Custom Scripts) |
|---|---|---|
| Context awareness | None. Treats every URL as isolated. | High. Understands that /login leads to /dashboard. |
| Exploitation | Theoretical (“This might be vulnerable”). | Proof-based (“Here is the /etc/passwd file”). |
| Logic flaws | Misses IDOR and business logic errors. | Can infer logic gaps (e.g., buying items for $0). |
| False positives | High (often >30%). | Near zero (validates exploitability). |
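The “context awareness” row can be made concrete with a small sketch. It assumes a hand-written, hypothetical site map (`SITE_GRAPH` and every path in it are illustrative, not output from any real scanner) and uses breadth-first search to recover the chain of pages that leads to a target. That chain is the state a per-URL scanner throws away.

```python
from collections import deque

# Hypothetical site map: each page lists the pages it links or redirects to.
SITE_GRAPH = {
    "/": ["/login", "/about"],
    "/login": ["/dashboard"],
    "/dashboard": ["/dashboard/orders", "/dashboard/settings"],
    "/dashboard/orders": ["/api/orders/1001"],
    "/dashboard/settings": [],
    "/about": [],
    "/api/orders/1001": [],
}


def find_path(graph, start, target):
    """Return the shortest chain of pages from start to target, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        page = path[-1]
        if page == target:
            return path
        for nxt in graph.get(page, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


if __name__ == "__main__":
    # Shows that the order API is only reachable through /login and /dashboard.
    print(find_path(SITE_GRAPH, "/", "/api/orders/1001"))
```

Knowing that `/api/orders/1001` sits behind `/login` is what lets a tester ask the IDOR question: does the same URL still respond when the session belongs to a different user?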

Analyzing High-Impact CVEs: The Engineering Deep Dive

To understand what a real assessment looks like, we must look at the vulnerabilities that defined the 2025-2026 landscape. These are not simple buffer overflows; they are complex logic chains.

CVE-2025-24813: Apache Tomcat RCE via Race Condition

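One of the most critical vulnerabilities ignored by mainstream media is the recent Tomcat RCE. Apache's advisory for CVE-2025-24813 describes a path-equivalence flaw in how the write-enabled default servlet handles partial PUT requests, so treat the race condition in HTTP/2 stream handling discussed here as an illustrative test scenario rather than the documented root cause.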

The kind of cybersecurity assessment NYT readers are familiar with would simply say “patch your servers.” An engineer needs to know how to test for it safely.

Here is a conceptual Python snippet demonstrating how an asynchronous check might look for this timing attack (sanitized for safety):

```python
import asyncio

# httpx replaces the original's un-imported aiohttp session, because aiohttp
# does not speak HTTP/2. Requires: pip install "httpx[http2]"
import httpx

# Conceptual proof of concept for timing analysis.
# NOT for malicious use. For educational assessment only.


async def send_probe_stream(client: httpx.AsyncClient, url: str) -> httpx.Response:
    # Placeholder probe: a plain POST. Interleaving RST_STREAM and DATA frames
    # would need a low-level HTTP/2 library such as h2 and is left out here.
    return await client.post(url, content=b"probe")


async def check_race_condition(target_url: str, attempts: int = 50) -> bool:
    print(f"[*] Initiating HTTP/2 stream race check on {target_url}")
    url = f"{target_url.rstrip('/')}/vulnerable-endpoint"

    async with httpx.AsyncClient(http2=True, timeout=10.0) as client:
        # The vulnerability triggers when RST_STREAM is processed
        # simultaneously with a DATA frame writing to the buffer.
        tasks = [send_probe_stream(client, url) for _ in range(attempts)]
        responses = await asyncio.gather(*tasks, return_exceptions=True)

    for resp in responses:
        if isinstance(resp, Exception):
            continue  # connection errors are not evidence either way
        if resp.status_code == 500 and "Memory Leak" in resp.text:
            print("[!] Potential Race Condition Detected (Heap Corruption Signature)")
            return True
    return False
```

This script helps engineers validate whether their patch actually mitigates the race condition rather than just relying on version numbers.

CVE-2025-55182: “React2Shell” (React Server Components)

Another vector that bypasses traditional WAFs is “React2Shell.” This vulnerability affects React Server Components (RSC), where unsafe deserialization of client-supplied payloads allows attackers to execute code on the server.

The complexity here is that the payload is valid JSON. A regex-based WAF will miss it. A true assessment requires an engine that parses the React tree structure to identify tainted sinks—something manual testing struggles to scale.
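The difference between matching text and parsing structure can be sketched in a few lines. The example below is illustrative only: `NAIVE_WAF_RULE` and `SUSPICIOUS_KEYS` are hypothetical stand-ins, and neither reflects the real React2Shell payload format. It shows a body that a regex rule passes while a recursive walk over the parsed JSON still reports where a flagged key sits in the tree.

```python
import json
import re

# Hypothetical regex rule of the kind a signature-based WAF might apply.
NAIVE_WAF_RULE = re.compile(r"<script|;\s*rm\s|\bexec\(", re.IGNORECASE)

# Hypothetical key names to flag; NOT the actual React2Shell indicators.
SUSPICIOUS_KEYS = {"__proto__", "constructor", "prototype"}


def regex_waf_blocks(raw_body: str) -> bool:
    """Return True if the raw text matches the naive signature."""
    return bool(NAIVE_WAF_RULE.search(raw_body))


def find_suspicious_paths(node, path="$"):
    """Walk parsed JSON and yield the path of every flagged key."""
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}"
            if key in SUSPICIOUS_KEYS:
                yield child
            yield from find_suspicious_paths(value, child)
    elif isinstance(node, list):
        for index, item in enumerate(node):
            yield from find_suspicious_paths(item, f"{path}[{index}]")


if __name__ == "__main__":
    body = '{"props": {"title": "hi", "items": [{"__proto__": {"x": 1}}]}}'
    print("regex blocks:", regex_waf_blocks(body))
    print("structural hits:", list(find_suspicious_paths(json.loads(body))))
```

A production engine would need to understand the actual wire format of the framework in question; the point here is only that structural findings come with a location, which a text match cannot provide.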

The Rise of Agentic AI in Penetration Testing

This is where the industry is pivoting. The sheer volume of code commits makes manual review impossible. Engineers are turning to AI-driven platforms that act as “autonomous hackers.”

This is not about asking ChatGPT to write a phishing email. It is about Multi-Agent Systems (MAS).

In a cutting-edge assessment, you deploy a “Recon Agent” that maps the subdomains. It passes its findings to an “Exploit Agent,” which reads the documentation of the specific technology stack found (e.g., Django or Next.js) and crafts a bespoke payload.
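The hand-off between the two agents can be sketched as a small pipeline. Everything here is a simplifying assumption: the class names, the `STACK_CHECKS` table, and the check descriptions are hypothetical, the recon step takes an inventory as input instead of enumerating subdomains, and the second agent only produces a plan of checks rather than any payload.

```python
from dataclasses import dataclass, field


@dataclass
class ReconFinding:
    host: str
    stack: str  # e.g. "django" or "nextjs"


@dataclass
class AssessmentPlan:
    host: str
    checks: list = field(default_factory=list)


# Hypothetical knowledge base mapping a stack to checks worth running.
STACK_CHECKS = {
    "django": ["debug page exposure", "admin login lockout policy"],
    "nextjs": ["server action input handling", "exposed source maps"],
}


class ReconAgent:
    def run(self, inventory):
        # A real agent would discover and fingerprint hosts; here the
        # host-to-stack inventory is supplied directly.
        return [ReconFinding(host, stack) for host, stack in inventory.items()]


class ExploitAgent:
    def plan(self, finding):
        # Pick stack-specific checks, falling back to a generic baseline.
        checks = STACK_CHECKS.get(finding.stack, ["generic baseline checks"])
        return AssessmentPlan(finding.host, list(checks))


def run_pipeline(inventory):
    recon, exploit = ReconAgent(), ExploitAgent()
    return [exploit.plan(finding) for finding in recon.run(inventory)]


if __name__ == "__main__":
    for plan in run_pipeline({"shop.example.com": "django", "old.example.com": "php"}):
        print(plan.host, "->", plan.checks)
```

Keeping the finding and the plan as separate typed records makes the hand-off auditable: a reviewer can see exactly what the first agent reported before the second one acted on it.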

Integrating Penligent.ai into the Workflow

For teams overwhelmed by the disparity between “compliance scanning” and “actual security,” tools like Penligent.ai have bridged the gap. Unlike generic LLM wrappers, Penligent utilizes a specialized multi-agent architecture designed specifically for penetration testing.

If your cybersecurity assessment is NYT style, surface level and manual, you are missing the critical paths. Penligent allows engineers to automate the “boring” parts of the kill chain. Its “Safe Exploitation Mode” is particularly relevant for production environments: instead of dropping a destructive shell, it proves the RCE by echoing a harmless string or calculating a math operation, providing the evidence needed for remediation without the risk of downtime.
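The “calculate a math operation” idea can be illustrated with a minimal sketch. This is not Penligent's implementation, which the article does not describe; `make_canary` and `proves_execution` are hypothetical helpers. The probe is a random multiplication, and the evidence is the product appearing in the response, which a target that merely reflects its input cannot produce.

```python
import secrets


def make_canary():
    """Return (expression, expected_result) for a harmless arithmetic probe."""
    a = secrets.randbelow(9000) + 1000  # random four-digit operand
    b = secrets.randbelow(9000) + 1000
    return f"{a}*{b}", str(a * b)


def proves_execution(response_text: str, expected: str) -> bool:
    """True only if the response contains the computed product.

    The product of two four-digit numbers has at least seven digits, so it
    cannot appear by simply echoing the expression back.
    """
    return expected in response_text


if __name__ == "__main__":
    expression, expected = make_canary()
    print("probe expression:", expression)
    print("reflected only:", proves_execution(f"you sent {expression}", expected))
    print("evaluated:", proves_execution(f"result={expected}", expected))
```

Because the operands are random on every run, a cached or coincidental match is unlikely, and nothing about the probe changes state on the target.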

By integrating Penligent, security teams can move from “monthly scans” to “continuous, autonomous red teaming,” effectively keeping pace with the rapid weaponization of new CVEs like React2Shell.

Improving Your Assessment Reporting

The final deliverable of a technical assessment is not a scare-tactic headline. It is a remediable artifact.

When writing your reports:

- Map to MITRE ATT&CK: Don’t just list bugs. Show the tactic (e.g., T1190 Exploit Public-Facing Application).

- Provide Reproduction Scripts: As shown in the code block above, give the developers a way to see the bug.

- Prioritize by Context: A SQL injection in an internal, read-only analytics DB is less critical than an XSS in the admin panel.
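The reporting advice in this list can be sketched as a small data structure. The field names, multipliers, base severities, and ATT&CK mappings below are hypothetical and should be replaced by your own risk model; the sketch only shows how context can reorder two findings such as the SQL injection and XSS examples in the last bullet.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    title: str
    attack_technique: str     # MITRE ATT&CK ID (illustrative mapping)
    base_severity: float      # 0-10, e.g. a CVSS base score
    internet_facing: bool
    privileged_context: bool  # e.g. executes inside an admin panel
    data_writable: bool

    def contextual_score(self) -> float:
        # Hypothetical multipliers; tune them to your environment.
        score = self.base_severity
        score *= 1.0 if self.internet_facing else 0.6
        score *= 1.2 if self.privileged_context else 1.0
        score *= 1.0 if self.data_writable else 0.7
        return round(min(score, 10.0), 1)


def prioritize(findings):
    """Sort findings from highest to lowest contextual score."""
    return sorted(findings, key=lambda f: f.contextual_score(), reverse=True)


if __name__ == "__main__":
    findings = [
        Finding("SQL injection in internal read-only analytics DB",
                "T1190", 8.8, False, False, False),
        Finding("Stored XSS in admin panel",
                "T1059.007", 6.1, True, True, True),
    ]
    for f in prioritize(findings):
        print(f.contextual_score(), f.attack_technique, f.title)
```

With these assumed weights the XSS finding sorts first despite its lower base severity, which is the outcome the bullet describes.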

Conclusion

The gap between the cybersecurity assessment the NYT covers and the one you perform is defined by depth. While the world worries about “hackers,” your job is to worry about unpatched libraries, race conditions, and logic flaws.

By leveraging agentic AI tools and staying current with complex CVEs, you transform your role from a compliance checker to a true defense architect.