Why engineers keep landing on CVE-2025-4517

When someone searches “cve-2025-4517”, they’re usually trying to answer operational questions—not learn trivia:

- Am I vulnerable right now? Which Python versions, which APIs, which parameters. (CVE)

- Is this an actual boundary break or just a benign edge case? In particular: can extraction escape the destination directory and write elsewhere? (CVE)

- What does “filter=data” change and why does it matter in Python 3.14? Because defaults are shifting, and “safe by default” is a moving target. (Python Enhancement Proposals (PEPs))

- How do I prove I’ve fixed it? “We upgraded Python” is not proof; you need verifiable controls and evidence.

This article is written for security and platform engineers who need a defensible answer: where the bug is, when it bites, how to validate exposure safely, and how to harden so this class of issue can’t silently return.

What CVE-2025-4517 is precisely

CVE-2025-4517 is a vulnerability in CPython’s standard library module tarfile that can allow filesystem writes outside the intended extraction directory when extracting untrusted tar archives in a specific way. The CVE record is unusually explicit about the trigger condition:

- Vulnerable APIs: TarFile.extractall() or TarFile.extract()

- Trigger: extracting an untrusted tar archive with filter="data" or filter="tar"

- Affected versions: Python 3.12+ (earlier versions are not in scope for this specific “filter” feature) (CVE)

Red Hat’s CVE entry states the impact in operational terms: arbitrary filesystem writes outside the extraction directory via extraction of untrusted archives. (Red Hat Customer Portal)

Why “filter=data” and “filter=tar” are central

Python introduced extraction filters via PEP 706 to make tar extraction safer and more controllable. The filter mechanism can reject dangerous members or adjust metadata during extraction. (Python Enhancement Proposals (PEPs))

But CVE-2025-4517 is a cautionary case: a safety mechanism is only as safe as its edge-case correctness.

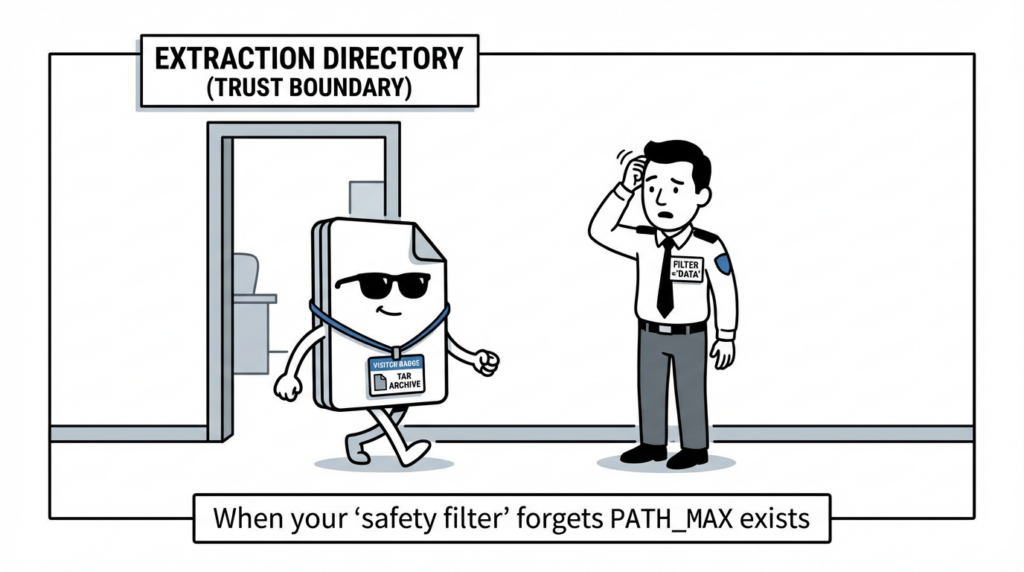

Root cause that matters to defenders

Google Security Research published a technical advisory describing the core bug pattern:

- os.path.realpath() is used by the "tar" and "data" filters to validate paths

- Under certain conditions involving PATH_MAX, realpath() does not raise an error when the fully expanded path would exceed PATH_MAX

- Later, during extractall()/extract(), paths are used without being passed through realpath() again, creating a mismatch between check and use (GitHub)

In practical terms, this is a “validation gap” problem: what the filter believes is safe can diverge from what the filesystem resolves during extraction—which is exactly how you get “write outside destination” behavior even when you think you’re using a safer mode.

A number of public PoC repositories discuss this class of bypass in terms of PATH_MAX and symlink expansion behavior. Those are useful for defenders to understand the mechanics, but you do not need exploit code to fix the problem. (GitHub)

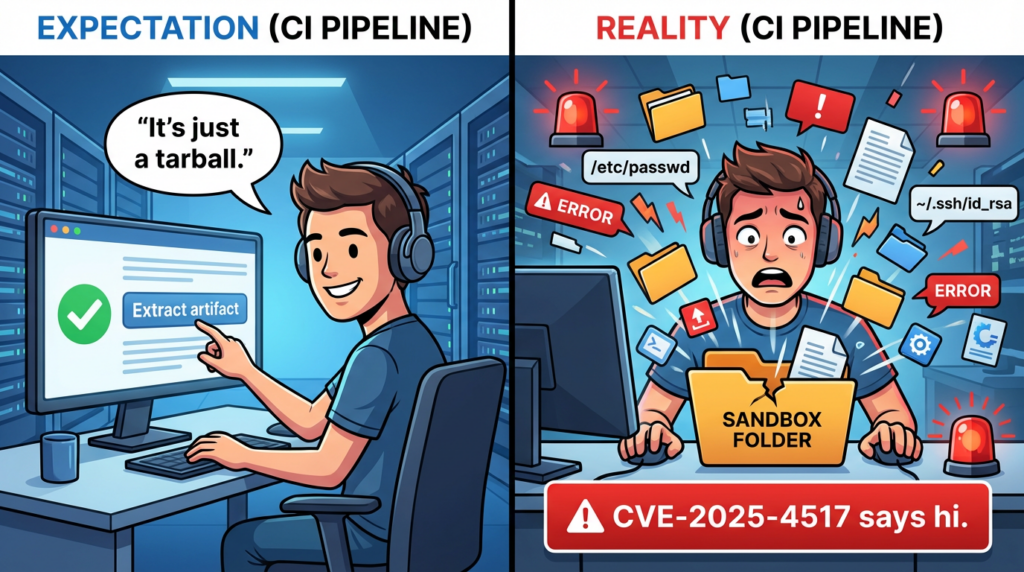

Why CVE-2025-4517 is worse in CI/CD and AI pipelines

This CVE is not “Python-only” in the way many teams assume. It’s better understood as a routine automation risk:

1) Tar extraction is a primitive in modern automation

Archives are everywhere:

- CI jobs unpack dependencies and build artifacts

- Release pipelines unpack vendor bundles

- Data engineering jobs unpack datasets

- ML pipelines unpack model tarballs and training snapshots

If extraction happens without a strict trust boundary, an “arbitrary write outside dest” primitive becomes immediately relevant.

2) “Untrusted” includes internal sources

Teams often treat “internal artifact store” as trusted by default, even when:

- any engineer can publish artifacts

- PR builds pull artifacts from forked contexts

- partner feeds land in shared buckets

- automated systems fetch “latest” artifacts without verification

For boundary-break vulnerabilities, “untrusted” means “outside the control plane you can audit end-to-end.”

3) The blast radius depends on mounts and permissions, not just code

Even in a container, what matters is:

- what host paths or persistent volumes are mounted

- whether the process has write access to sibling directories

- whether later pipeline stages execute from writable paths

An arbitrary write is often enough to poison:

- build scripts

- configuration files

- dependency caches

- runner workspaces

- “next step” inputs (which can lead to code execution later)

The Python tarfile filter landscape you need to understand

Python’s own documentation for tarfile makes two changes explicit:

- Python 3.12 added the filter argument to extract() and extractall(). Calling them without it still behaves like fully_trusted, but emits a DeprecationWarning as part of a security-motivated shift. (Python documentation)

- In Python 3.14, the default extraction filter becomes data, whereas previously behavior was effectively fully_trusted. This is a breaking change in some ecosystems. (Python documentation)

PEP 706 describes the design rationale: extraction filters exist because tar archives can encode features that are surprising or dangerous, and a stricter default was planned after a deprecation period. (Python Enhancement Proposals (PEPs))

This matters for CVE-2025-4517 in two ways:

- If you explicitly pass filter="data" or filter="tar", you’re directly in the affected condition described by the CVE record. (CVE)

- If you rely on default behavior, Python 3.14 moves the default to data, which can change both compatibility and risk posture. (Python documentation)

So your remediation is not just “patch the CVE”—it’s also “avoid being surprised by default semantics changes.”
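One way to avoid being surprised is to make each runtime report which filter it applies when none is passed. The following is a minimal sketch, not an official API: effective_default_filter and describe_runtime are hypothetical helper names, and the version cut-off simply encodes the 3.14 default change described above. It assumes TarFile.extraction_filter is None unless someone has overridden it.

```python
import sys
import tarfile


def effective_default_filter(version, has_filters, configured=None):
    """Name the filter applied when extract()/extractall() gets no filter argument.

    version      -- (major, minor) of the interpreter
    has_filters  -- whether this tarfile build ships extraction filters at all
    configured   -- value of TarFile.extraction_filter (None if not overridden)
    """
    if not has_filters:
        return "none (no filter support)"
    if configured is not None:
        # A class-level override wins over the built-in default.
        return getattr(configured, "__name__", repr(configured))
    # Per the tarfile docs: 3.14 switches the default from fully_trusted to data.
    return "data" if tuple(version[:2]) >= (3, 14) else "fully_trusted"


def describe_runtime():
    """Apply the rule above to the interpreter running this code."""
    return effective_default_filter(
        sys.version_info[:2],
        hasattr(tarfile, "data_filter"),
        getattr(tarfile.TarFile, "extraction_filter", None),
    )


if __name__ == "__main__":
    print(sys.version.split()[0], "->", describe_runtime())
```

Running this in every base image and CI runner gives you a quick inventory of where "no filter argument" means permissive extraction and where it already means data.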

Am I vulnerable? A quick decision tree

You can use this as an on-call triage checklist.

You are at high risk if all are true

- Python runtime is 3.12+ (CVE)

- You extract tar archives from untrusted or weakly-trusted sources

- Your code calls TarFile.extractall() or TarFile.extract() with filter="data" or filter="tar" (CVE)

- The extraction process can write to a filesystem where sensitive paths exist outside the intended dest
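The checklist above can be encoded so that triage notes record which condition failed rather than a bare yes/no. This is a sketch under stated assumptions: ExtractionContext, unmet_conditions, and is_high_risk are hypothetical names, and the logic mirrors only the four bullets above. It does not know about patch levels, so a patched 3.12+ runtime will still be reported as matching.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ExtractionContext:
    python_version: Tuple[int, int]   # interpreter that performs the extraction
    source_untrusted: bool            # archive comes from an untrusted or weakly-trusted source
    filter_name: Optional[str]        # literal passed as filter=, or None if omitted
    writable_outside_dest: bool       # process can write to sensitive paths beyond dest


def unmet_conditions(ctx: ExtractionContext):
    """Return the checklist items that are NOT satisfied; an empty list means high risk."""
    checks = {
        "python >= 3.12": tuple(ctx.python_version[:2]) >= (3, 12),
        "untrusted source": ctx.source_untrusted,
        'filter is "data" or "tar"': ctx.filter_name in ("data", "tar"),
        "writable paths outside dest": ctx.writable_outside_dest,
    }
    return [label for label, ok in checks.items() if not ok]


def is_high_risk(ctx: ExtractionContext) -> bool:
    return not unmet_conditions(ctx)
```

For example, a CI job on Python 3.12 that unpacks a partner-supplied tarball with filter="data" into a shared workspace satisfies all four conditions, while the same job on 3.11 would report "python >= 3.12" as unmet.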

You may still be exposed even if you “upgraded Python”

Because enterprise reality includes:

- embedded Python runtimes in vendor images

- long-lived CI runner images not rebuilt frequently

- base images pinned in internal registries

- downstream tooling that performs extraction indirectly

Safe validation without weaponizing the issue

The goal here is defensive validation: confirm whether your systems have the vulnerable call pattern, and whether your controls prevent out-of-destination writes.

Step 1: Find risky call sites

Search for explicit filter usage first—because the CVE trigger is explicit.

rg -n "tarfile\.open\(" .
rg -n "\.extractall\(" .
rg -n "\.extract\(" .
rg -n "filter\s*=\s*[\"'](data|tar)[\"']" .

If you find wrappers or shared utility functions, treat them as your “choke points”: fixing those yields outsized risk reduction.

Step 2: Create a single blessed safe extraction helper

Even after patching, you want to centralize policy. The lesson of CVE-2025-4517 is not “filters are bad,” it’s “do not bet everything on a single safety layer.”

Below is a conservative safe extraction helper that blocks common escape primitives. It does not depend on exploitation details; it enforces invariants you can reason about.

import os
import tarfile
from pathlib import Path


class UnsafeTarMember(Exception):
    """Raised when an archive member violates the extraction policy."""


def _within(base: Path, target: Path) -> bool:
    # resolve() is non-strict by default, so it also handles targets
    # that do not exist yet.
    base_r = base.resolve()
    target_r = target.resolve()
    # commonpath is robust for directory containment checks
    return os.path.commonpath([str(base_r)]) == os.path.commonpath([str(base_r), str(target_r)])


def safe_extract_tar(tar_path: str, dest_dir: str, *, allow_links: bool = False) -> None:
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)

    with tarfile.open(tar_path, mode="r:*") as tf:
        members = tf.getmembers()

        for m in members:
            name = m.name

            # Reject absolute paths (POSIX and Windows-style)
            if name.startswith(("/", "\\")):
                raise UnsafeTarMember(f"Absolute path blocked: {name}")

            # Reject traversal segments
            if ".." in Path(name).parts:
                raise UnsafeTarMember(f"Traversal blocked: {name}")

            # Block symlinks/hardlinks unless explicitly allowed
            if (m.issym() or m.islnk()) and not allow_links:
                raise UnsafeTarMember(f"Link blocked: {name}")

            # Ensure destination containment. This runs before anything is
            # written, so it cannot account for links created by the archive
            # itself; keep allow_links=False for untrusted input.
            target = dest / name
            if not _within(dest, target):
                raise UnsafeTarMember(f"Escapes dest: {name}")

        # Extract only after every member has passed validation.
        # No filter= is passed, so the interpreter's default filter applies
        # (fully_trusted before Python 3.14, data from 3.14 on).
        tf.extractall(path=str(dest), members=members)

Why this matters: Even if a future CVE hits tarfile filtering again, your extraction is still gated by containment rules and link policies that match your environment’s threat model.

Step 3: Produce evidence, not just “we think it’s fine”

Add structured logs at your extraction choke point:

- input source and hash

- dest path

- member count

- any blocked member and why

- runtime Python version

That gives you a defensible audit trail.
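A minimal sketch of such a record, covering the fields listed above, might look like the following. The field names, the extraction_record helper, and the shape of the blocked list are assumptions made for illustration; adapt them to whatever your logging pipeline expects.

```python
import hashlib
import json
import platform
import tarfile
from datetime import datetime, timezone


def sha256_of(path, chunk_size=1 << 20):
    """Hash the archive in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def extraction_record(tar_path, dest_dir, source, blocked=()):
    """Build one JSON-serialisable audit record for an extraction attempt.

    blocked is an iterable of (member_name, reason) pairs from your helper.
    """
    with tarfile.open(tar_path, mode="r:*") as tf:
        member_count = len(tf.getmembers())
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "source": source,
        "sha256": sha256_of(tar_path),
        "dest": str(dest_dir),
        "member_count": member_count,
        "blocked": [{"member": name, "reason": reason} for name, reason in blocked],
        "python_version": platform.python_version(),
    }


def log_extraction(record):
    """Emit the record as a single JSON line (stdout here; swap in your logger)."""
    print(json.dumps(record, sort_keys=True))
```

Emitting one JSON line per extraction keeps the records easy to join against CI job IDs and filesystem audit events later.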

Patch and mitigation strategy that survives enterprise reality

The patch baseline

The CVE record defines affected versions and trigger condition. Start there. (CVE)

Then align with your platform’s vendor guidance. Red Hat’s CVE page is useful for enterprise tracking and patch status in distributions. (Red Hat Customer Portal)

But patching is not sufficient by itself

Because:

- extraction can happen in multiple runtimes you don’t inventory

- defaults shift in Python 3.14, which can cause compatibility breaks and new call paths (Python documentation)

- teams may “fix” by removing filter="data" and unknowingly revert to more permissive behavior, depending on version and defaults

A robust strategy has three layers:

- Upgrade runtimes across images, runners, batch workers, and embedded Python

- Centralize safe extraction so new code cannot reintroduce risky patterns

- Monitor for out-of-destination writes during extraction workflows, especially in CI runners and pipeline workers

Related CVEs and why you should treat tar extraction as an evolving attack surface

CVE-2025-4517 is part of a broader arc: tar extraction hardening has been evolving (PEP 706), and there have been multiple CVEs around filter semantics and bypass conditions.

For example, NVD’s CVE-2025-4138 notes that in Python 3.14 the default filter changes to data and warns that relying on that default can affect exposure. (NVD)

The takeaway is not “never extract tar.” It’s:

- treat extraction as a privileged operation

- enforce invariants at your own boundary

- add regression tests around your extraction helper

- be deliberate about Python 3.14 default changes to avoid surprise breakage (Python documentation)
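For the regression-test item, one approach is to build small archives in memory and assert that your policy refuses them, so nothing dangerous ever touches disk. The sketch below is self-contained: build_archive and rejected_members are hypothetical stand-ins, and in a real suite you would call your own extraction helper in place of rejected_members.

```python
import io
import tarfile
from pathlib import PurePosixPath


def build_archive(members):
    """Return an in-memory tar. members: list of (name, kind, payload_or_target)."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tf:
        for name, kind, value in members:
            info = tarfile.TarInfo(name)
            if kind == "file":
                info.size = len(value)
                tf.addfile(info, io.BytesIO(value))
            elif kind == "symlink":
                info.type = tarfile.SYMTYPE
                info.linkname = value
                tf.addfile(info)
    buf.seek(0)
    return buf


def rejected_members(fileobj):
    """List (name, reason) for members a strict policy would refuse. Reads only."""
    problems = []
    with tarfile.open(fileobj=fileobj, mode="r:*") as tf:
        for m in tf.getmembers():
            if m.name.startswith("/"):
                problems.append((m.name, "absolute"))
            elif ".." in PurePosixPath(m.name).parts:
                problems.append((m.name, "traversal"))
            elif m.issym() or m.islnk():
                problems.append((m.name, "link"))
    return problems


def test_policy_rejects_escape_primitives():
    archive = build_archive([
        ("ok/readme.txt", "file", b"fine"),
        ("../outside.txt", "file", b"nope"),
        ("/abs.txt", "file", b"nope"),
        ("ok/link", "symlink", "../../elsewhere"),
    ])
    assert rejected_members(archive) == [
        ("../outside.txt", "traversal"),
        ("/abs.txt", "absolute"),
        ("ok/link", "link"),
    ]
```

Because the archive is inspected and never extracted, the test is safe to run on shared CI runners. These cases cover generic escape primitives only; they do not reproduce the PATH_MAX condition behind this CVE.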

Practical detection and guardrails you can deploy this week

Repository guardrail

Fail builds when risky patterns are introduced:

- direct calls to extractall() without going through your safe helper

- explicit filter="data" or filter="tar" in unreviewed paths

Runtime guardrail

Alert when extraction processes write outside a whitelisted directory tree.

On Linux, consider auditing write events to sensitive locations during CI jobs, and correlate with extraction logs. This is not CVE-specific; it’s a control for the whole class of “boundary break via extraction.”
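Where kernel-level auditing is not available, a coarse before/after comparison around the extraction step can still catch stray writes. The following is a polling sketch under stated assumptions: snapshot and writes_outside are hypothetical helpers, the watched root is whatever tree you choose to cover, and deletions or writes outside that root go unnoticed.

```python
import os


def snapshot(root):
    """Map each file path under root to (size, mtime_ns); symlinks are not followed."""
    state = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            st = os.lstat(full)
            state[full] = (st.st_size, st.st_mtime_ns)
    return state


def _location(path):
    # Resolve the containing directory but not the final component, so a
    # symlink is judged by where it lives rather than where it points.
    return os.path.join(os.path.realpath(os.path.dirname(path)), os.path.basename(path))


def writes_outside(before, after, allowed_dir):
    """Return paths created or modified between snapshots that are not under allowed_dir."""
    allowed = os.path.realpath(allowed_dir)
    changed = [p for p, meta in after.items() if before.get(p) != meta]
    return sorted(
        p for p in changed
        if os.path.commonpath([allowed, _location(p)]) != allowed
    )
```

A typical use is to snapshot the runner workspace, run the extraction into its destination directory, snapshot again, and alert if writes_outside returns anything.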

Proof-oriented operations checklist

Here’s a compact table you can copy into a ticket template.

| Control | What you implement | What you can prove |
|---|---|---|
| Inventory | Find every Python runtime that can extract archives | A list of images/runners/workers with versions and owners |
| Patch | Upgrade affected runtimes | SBOM/build logs + runtime version checks |
| Safe extraction | One blessed helper with containment + link policy | CI guardrail shows no direct extraction calls |
| Telemetry | Log extraction, block rules, and write events | Evidence that no out-of-dest writes occurred |
| Regression tests | Unit test crafted edge-case paths and links | A test suite that fails on boundary escapes |

Where Penligent fits when the goal is proof, not promises

If your pain is “we keep patching but can’t prove we’re safe,” the most valuable output is an evidence chain:

- identify every place your platform extracts tar

- validate behavior under controlled tests

- generate a report that ties runtime version + code path + controls + logs into one defensible narrative

If you want a Penligent-relevant deep dive specifically on defensive validation patterns for this CVE, the two Penligent articles listed in the references are directly on-topic and already written in a “don’t weaponize it” posture. (Penligent)

References

- CVE.org record: CVE-2025-4517 https://www.cve.org/CVERecord?id=CVE-2025-4517

- Google Security Research advisory: GHSA-hgqp-3mmf-7h8f https://github.com/google/security-research/security/advisories/GHSA-hgqp-3mmf-7h8f

- Python docs tarfile module https://docs.python.org/3/library/tarfile.html

- PEP 706 Filter for tarfile.extractall https://peps.python.org/pep-0706/

- CPython issue discussing default filter behavior in Python 3.14 https://github.com/python/cpython/issues/121999

- Red Hat CVE page https://access.redhat.com/security/cve/cve-2025-4517

- CVE-2025-4517 PoC Without Weaponizing It Proving the Tarfile Extraction Boundary Breaks in Real Automation https://www.penligent.ai/hackinglabs/cve-2025-4517-poc-without-weaponizing-it-proving-the-tarfile-extraction-boundary-breaks-in-real-automation/

- CVE-2025-4517 PoC Without Weaponizing It Defensive Validation Patch Lines and the Tarfile Trap Inside Automation https://www.penligent.ai/hackinglabs/cve-2025-4517-poc-without-weaponizing-it-defensive-validation-patch-lines-and-the-tarfile-trap-inside-automation/