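CVE-2025-62164 is a high-severity vulnerability in vLLM, one of the most widely deployed open-source LLM inference engines. The issue is triggered when the Completions API server processes user-supplied prompt embeddings. Affected versions (0.10.2 up to, but not including, 0.11.1) deserialize these tensors with torch.load() without sufficient validation. A crafted sparse tensor can pass through and cause an out-of-bounds write during densification, reliably crashing the worker and, under the right conditions, escalating to remote code execution. The project fixed the issue in vLLM 0.11.1. (NVD)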

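Two things set this CVE apart from the usual AI-stack bug. First, it is a data-plane flaw: the exploit path is reachable through the same inference endpoint users hit for completions, not through an admin UI or a misconfiguration. Second, the exploit sits at the exact intersection of unsafe deserialization and upstream behavior drift, a combination that keeps showing up as LLM infrastructure matures.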

Where vLLM sits in the stack, and why that placement amplifies risk

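vLLM is effectively the throughput-optimized inference layer. Teams deploy it as a public SaaS API, behind an enterprise gateway, or as the serving backend of a multi-tenant agent system. In all of these layouts, vLLM sits close to the internet and close to GPU resources. That sounds like performance engineering, but it also means that a low-privileged API caller can reach a privileged code path. (wiz.io)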

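The blast radius is therefore not subtle. A single crashable endpoint can produce GPU starvation, queue buildup, autoscaler churn, and noisy-neighbor incidents. If the exploit stabilizes into RCE, the inference fleet becomes a legitimate foothold for supply-chain intrusion.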

The vulnerability in one paragraph

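The Completions endpoint in vulnerable vLLM versions lets clients pass prompt embeddings instead of raw text. vLLM reconstructs those tensors with torch.load() without sufficient integrity, type, or structural checks. Because PyTorch 2.8.0 disables sparse tensor integrity checks by default, a malicious sparse tensor can bypass internal bounds protection and cause an out-of-bounds memory write when to_dense() is called. The immediate, repeatable result is remote DoS (a worker crash). With a favorable memory layout and enough control, the same primitive could plausibly become RCE on the host. (NVD)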

Root cause anatomy: how a "convenient embedding passthrough" became memory corruption

A deserialization sink on a public endpoint

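torch.load() is powerful by design. It is meant to restore tensors and object graphs from trusted sources such as checkpoints and internal pipelines. In vLLM, it is used on a field that API callers can populate. That moves the trust boundary from "internal model artifact" to "untrusted internet input," which is where unsafe deserialization problems have historically arisen. (NVD)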

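The issue manifests as memory corruption rather than a classic pickle-RCE chain, but the underlying mistake is the same: treating a complex binary structure as if it were just another request parameter.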

The PyTorch 2.8.0 behavior change was the trigger

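Sparse tensor integrity checks are now off by default. Both the vLLM advisory and NVD attribute the escalation to this PyTorch change. Previously, a malformed sparse tensor was likely to be rejected before the code path reached densification. With the checks disabled, vLLM's lack of pre-validation became consistently exploitable. (NVD)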
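To make the mechanism concrete, here is a minimal pure-Python sketch of the kind of bounds check that sparse tensor invariants provide. It is not PyTorch's or vLLM's code: the function names `coo_indices_in_bounds` and `densify_2d` are hypothetical, and the list-of-lists COO layout is a simplification chosen for illustration.

```python
from typing import List, Sequence


def coo_indices_in_bounds(indices: Sequence[Sequence[int]],
                          shape: Sequence[int]) -> bool:
    """Return True if every COO index fits inside `shape`.

    `indices` uses the COO layout: one row of indices per dimension,
    one column per stored value.
    """
    if len(indices) != len(shape):
        return False
    nnz = len(indices[0]) if indices else 0
    for dim_indices, dim_size in zip(indices, shape):
        if len(dim_indices) != nnz:
            return False
        if any(i < 0 or i >= dim_size for i in dim_indices):
            return False
    return True


def densify_2d(indices: Sequence[Sequence[int]],
               values: Sequence[float],
               shape: Sequence[int]) -> List[List[float]]:
    """Densify a 2-D COO tensor, refusing indices that fall outside `shape`."""
    if len(shape) != 2 or len(values) != (len(indices[0]) if indices else 0):
        raise ValueError("malformed sparse tensor")
    if not coo_indices_in_bounds(indices, shape):
        raise ValueError("sparse index out of bounds")
    rows, cols = shape
    dense = [[0.0] * cols for _ in range(rows)]
    for row, col, value in zip(indices[0], indices[1], values):
        # Native code would write to flat offset row * cols + col here.
        dense[row][col] += value
    return dense
```

Native densification code has no such safety net of its own: if the check is skipped, an index outside `shape` turns into a write outside the allocated buffer.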

This is a useful mental model for AI infrastructure security: an upstream default can quietly turn "unsafe but dormant" into "unsafe and weaponizable."

Impact reality check: DoS is guaranteed, RCE is conditional

All public write-ups agree on reliable remote DoS. A single malformed request can kill a worker, and repeated requests can destabilize the fleet. (ZeroPath)

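RCE is described as potential. Memory corruption provides a path, but weaponization depends on allocator behavior, hardening flags, container boundaries, and how much control the attacker has over the corrupted region. As of November 25, 2025, there is no CISA KEV listing and no widely confirmed exploit chain, but treating data-plane memory corruption as "DoS only" would be a mistake. (wiz.io)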

Affected versions and fix status

| Item | Details |
|---|---|
| Component | vLLM Completions API (prompt embeddings handling) |
| Affected versions | 0.10.2 ≤ vLLM < 0.11.1 |
| Patched version | 0.11.1 |
| Trigger | Crafted prompt embeddings (sparse tensor) |
| Impact | Reliable DoS; potential RCE |
| CVSS | 8.8 High (AV:N/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:H) |

(NVD)

Who should panic first? The threat models that matter

If you want a practical prioritization lens, think about where embeddings can enter your system.

Public vLLM endpoints are the obvious high-risk case. Even if callers need an API key, an ordinary user with basic access is enough to crash a worker. (wiz.io)

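Multi-tenant "LLM-as-a-service" platforms come next. The risk is that embeddings can arrive indirectly: through toolchains, plugins, agent frameworks, or upstream services that pass embeddings through as an optimization. The more places that accept non-text payloads, the more complicated the trust boundary becomes.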

Finally, do not ignore community demos and educational deployments. They are often unauthenticated, poorly monitored, and left exposed long after their owners have forgotten they exist.

How to check exposure safely (without risky probing)

The fastest triage is version-based.

    python -c "import vllm; print(vllm.__version__)"
    # affected if 0.10.2 <= version < 0.11.1

(NVD)
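The range comparison can also be scripted for fleet-wide triage. The following is a minimal sketch, assuming plain `major.minor.patch` version strings; the helper names `parse_version` and `is_affected` are hypothetical, and pre-release or local suffixes (for example `rc1` or `+cu128`) are ignored, so such builds deserve a manual look.

```python
import re
from typing import Tuple

FIRST_AFFECTED = (0, 10, 2)  # inclusive lower bound from the advisory
FIRST_PATCHED = (0, 11, 1)   # exclusive upper bound (the fixed release)


def parse_version(text: str) -> Tuple[int, int, int]:
    """Extract the leading major.minor.patch triple from a version string."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return (major, minor, patch)


def is_affected(version_text: str) -> bool:
    """Return True if the version falls in 0.10.2 <= v < 0.11.1."""
    return FIRST_AFFECTED <= parse_version(version_text) < FIRST_PATCHED


if __name__ == "__main__":
    for candidate in ("0.10.1", "0.10.2", "0.11.0", "0.11.1"):
        print(candidate, "affected" if is_affected(candidate) else "not affected")
```

Comparing integer tuples avoids the classic string-comparison trap where "0.9.0" sorts after "0.10.2".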

Operationally, look for a pattern of worker segfaults or sudden restarts tied to unusually large or structurally odd completion requests. In practice, crash spikes appear first and sophisticated exploits come later. (ZeroPath)
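One way to turn "crash spikes" into an alertable signal is a sliding-window count over worker restart timestamps. This is a minimal sketch under stated assumptions: the function name `crash_spikes`, the five-minute window, and the threshold of three restarts are all hypothetical defaults, and the timestamps are assumed to come from whatever your orchestrator or log pipeline already records.

```python
from collections import deque
from typing import Iterable, List


def crash_spikes(restart_times: Iterable[float],
                 window_seconds: float = 300.0,
                 threshold: int = 3) -> List[float]:
    """Return the timestamps at which at least `threshold` restarts
    fell inside the trailing `window_seconds` window."""
    window = deque()
    alerts = []
    for timestamp in sorted(restart_times):
        window.append(timestamp)
        # Drop restarts that are older than the trailing window.
        while timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold:
            alerts.append(timestamp)
    return alerts
```

Correlating the returned timestamps with request logs (payload size, presence of embedding fields) is what ties a spike back to this specific bug rather than to an unrelated instability.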

A harmless canary check (standard completions, no embedding passthrough) is useful for baselining stability around the patch:

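    import time

    import requests

    # The host and bearer token are elided in the source; fill in your own.
    HOST = "https:///v1/completions"
    headers = {"Authorization": "Bearer "}
    payload = {
        "model": "your-model-name",
        "prompt": "health check",
        "max_tokens": 4,
    }

    for i in range(5):
        # json= serializes the payload and sets the Content-Type header.
        r = requests.post(HOST, headers=headers, json=payload, timeout=10)
        print(i, r.status_code, r.text[:160])
        time.sleep(1)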

Patch fast, then harden the data plane

The real fix is to upgrade to vLLM 0.11.1 or later. Everything else is a stopgap. (NVD)

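After that, treat "binary inference inputs" as high-risk sinks. If your product genuinely needs embedding passthrough, gate it behind strict schema validation. Enforce the expected tensor dtype, shape, and maximum size, and forbid sparse formats unless you explicitly support them. Even a dumb allowlist blocks the specific class of malformed structures this CVE depends on. (wiz.io)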
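A "dumb allowlist" of this kind can be sketched in a few lines. The example below is an illustration, not vLLM's actual fix: `validate_prompt_embeds`, the dtype set, the `(num_tokens, hidden_size)` shape convention, and the token limit are all assumptions. It relies only on the `layout`, `dtype`, and `shape` attributes that PyTorch tensors expose, compared by their string form so the sketch runs without importing torch. It checks an already-deserialized object, so it complements the upgrade rather than replacing it.

```python
ALLOWED_DTYPES = {"torch.float32", "torch.float16", "torch.bfloat16"}  # assumed allowlist


def validate_prompt_embeds(tensor, hidden_size: int, max_tokens: int = 8192) -> None:
    """Reject anything that is not a dense 2-D float tensor of sane size.

    Raises ValueError on the first violated rule; returns None if all pass.
    """
    # Dense tensors report the strided layout; sparse formats report others.
    if str(getattr(tensor, "layout", None)) != "torch.strided":
        raise ValueError("only dense (strided) tensors are accepted")
    if str(tensor.dtype) not in ALLOWED_DTYPES:
        raise ValueError(f"dtype {tensor.dtype} is not on the allowlist")
    shape = tuple(tensor.shape)
    if len(shape) != 2:
        raise ValueError(f"expected a 2-D tensor, got {len(shape)} dimensions")
    num_tokens, width = shape
    if width != hidden_size:
        raise ValueError(f"expected hidden size {hidden_size}, got {width}")
    if not 1 <= num_tokens <= max_tokens:
        raise ValueError(f"token count {num_tokens} is outside 1..{max_tokens}")
```

The layout check comes first on purpose: the point is to refuse sparse inputs before any code path gets a chance to densify them.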

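On the infrastructure side, lock down the blast radius. vLLM workers should run with least privilege, a read-only filesystem where possible, no sensitive host mounts, and container seccomp/AppArmor profiles. If someone does chain memory corruption into code execution, they should land in a box that cannot reach secrets or lateral paths.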

Why CVE-2025-62164 matters for AI security

This incident is a good illustration of how AI security is drifting away from the traditional web-app playbook.

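The new frontier is the model-serving data plane. Tensors, embeddings, multimodal blobs, and serialized artifacts move through APIs because that is fast and convenient. They are also structurally rich and fragile, which makes them well suited to corruption bugs when deserialized without paranoia.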

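It is also a reminder that the risk surface of an LLM stack is compositional. vLLM did not "invent" sparse tensor instability; a PyTorch default changed, and a missing downstream validation layer turned that change into a CVE. Inference engineering now needs the same level of dependency scrutiny that kernel teams take for granted.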

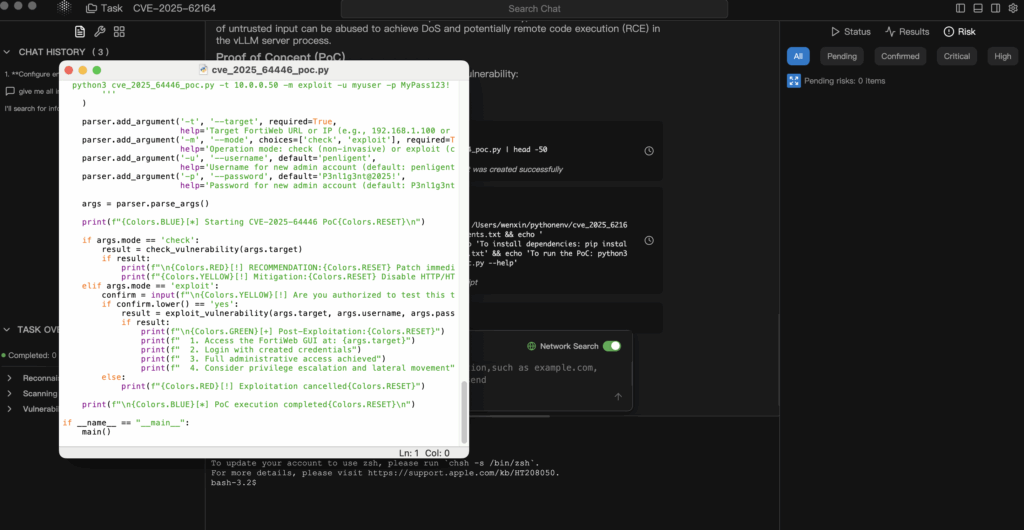

Controlled validation when PoCs are messy or late

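AI infrastructure CVEs often land before a stable public PoC exists, or arrive with PoCs too risky to point at a production serving cluster. A defensible approach is to industrialize a safer loop: authoritative intelligence → hypothesis → lab-only validation → auditable evidence.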

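In a Penligent-style agent workflow, agents can ingest the vLLM advisory and the NVD record, derive the exact exposure conditions (version, embedding path, PyTorch assumptions), and produce a minimal-risk validation plan that runs only on an isolated replica. This yields real evidence, such as version fingerprints, crash signatures, and pre/post-patch deltas, without gambling with production GPUs. (NVD)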

Equally important, evidence-first reporting makes urgency easier to explain to operations teams and leadership. "We patched because a blog said so" does not survive an incident review. "We patched because a lab replica could be crashed through the vulnerable embedding path, and here is the timeline and the before/after difference following the upgrade to 0.11.1" does.