OpenClaw Log Poisoning Vulnerability: When “Just a Log Line” Becomes Model Input

The OpenClaw “log poisoning” vulnerability is a good example of a modern agent-security failure mode: data that was historically “low risk” (diagnostic logs) becomes security-relevant the moment it’s reused as LLM context. In this case, attacker-controlled strings could be written into OpenClaw logs and later appear inside an AI-assisted debugging workflow, increasing the risk of indirect prompt injection—not by breaking the host, but by influencing what the agent believes and suggests. (GitHub)

This is not headline-grabbing remote code execution. In fact, the advisory and the researcher writeups emphasize that the impact depends heavily on how you run OpenClaw—especially whether you feed logs back into the model during troubleshooting. (GitHub)

What exactly was vulnerable?

According to the GitHub advisory, OpenClaw versions prior to 2026.2.13 logged certain WebSocket request headers—explicitly including Origem e Agente do usuário-without neutralization/sanitization and without length limits along a path where the WebSocket connection “closes before connect.” (GitHub)

Eye Security’s technical writeup adds practical color: they confirmed the header values were stored verbatim in logs and that they could inject nearly ~15 KB of content via these headers in their testing. (Eye Security)

So the vulnerability is not “logs exist.” The vulnerability is: untrusted, attacker-controlled text lands in a privileged diagnostic artifact that the agent may later treat as reasoning input.

Affected versions and the fix

The vendor advisory in GitHub Advisory Database is explicit:

- Affected:

< 2026.2.13(also stated as<= 2026.2.12) - Patched:

2026.2.13 - Fix behavior: sanitize and truncate header values written to gateway logs (including removing control/format characters and applying length limits). (GitHub)

If you run OpenClaw in production, the first and most important action is to upgrade to 2026.2.13 or later. (GitHub)

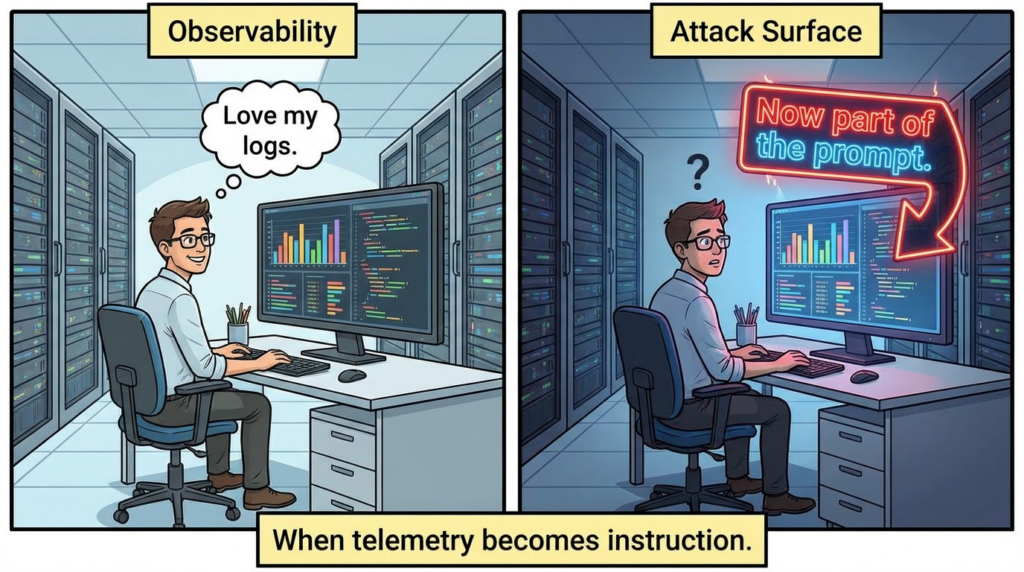

Why “log poisoning” is an AI-agent problem

In classic software security, logging untrusted data is often discussed under log injection / log forging / CWE-117 style integrity concerns. It’s serious, but usually scoped to forensics, alerting accuracy, and downstream parsing.

Agentic systems change the stakes because many of them implement something like:

- error happens →

- “AI-assisted debugging” starts →

- agent fetches recent logs →

- logs become part of the prompt context →

- model produces recommendations or takes actions.

The GitHub advisory explicitly frames the risk as indirect prompt injection (log poisoning) in environments where logs are later interpreted by an LLM as part of debugging or automation workflows. (GitHub)

Eye Security articulates the same boundary shift: logs and system output stop being “just artifacts” and become “reasoning context.” (Eye Security)

That is the core lesson: if logs are ever fed back into a model, then logs are an untrusted input channel—even if they weren’t designed that way.

How exploitation would work in the real world

Eye Security describes a realistic chain as follows:

- An OpenClaw instance is reachable from the internet (they note this is commonly on TCP port

18789). - An attacker sends a crafted WebSocket request with structured content embedded in headers.

- The content gets written into OpenClaw logs.

- Later, a user asks OpenClaw to debug an issue, and the agent reads those logs (via

openclaw logsor from/tmp/openclaw/*.log). - The injected text enters the model context and may influence reasoning. (Eye Research)

Importantly, the researchers report that this did not behave like traditional RCE. They were not able to directly execute arbitrary commands; in their sandbox, OpenClaw’s guardrails detected the injection attempt and refused to comply. (Eye Security)

So the credible, defensible framing is: this bug created a context injection surface that could manipulate how the agent interprets events and what it recommends. If your deployment grants the agent high privilege (email access, cloud keys, shell), “mere reasoning influence” can still become operationally dangerous in downstream workflows. (Eye Research)

Severity: why CVSS looks “low,” but security teams still care

GitHub’s advisory rates severity as Baixa (CVSS 3.1 base score shown as 3.1/10) and highlights that exploitation requires conditions like gateway reachability and downstream log consumption by an LLM. (GitHub)

This is a good example of the gap between:

- traditional vulnerability scoring (focused on confidentiality/integrity/availability impacts that are direct and technical), and

- agent risk (where the impact is probabilistic, workflow-dependent, and mediated by human trust + automation).

If your org uses AI agents for troubleshooting and allows them to suggest or perform actions based on logs, you care even when CVSS is low—because the vulnerability is upstream of human decision-making and automation pathways.

Who is actually at risk?

Your risk is meaningfully higher if most of these are true:

Your gateway is reachable by untrusted clients

The advisory explicitly notes risk when an unauthenticated client can reach the gateway and send crafted headers. (GitHub)

Your operations feed logs into an LLM

The advisory is very direct: if you don’t feed logs into an LLM or automation, the impact is limited. The risk spikes when “AI-assisted debugging” turns logs into model input. (GitHub)

Your agent has high-privilege integrations

Eye Security highlights that tools like OpenClaw commonly hold access to email accounts, API keys, cloud environments, and local shell access; a reasoning manipulation event can matter more when the agent can take consequential actions. (Eye Research)

If your deployment is local-only, not exposed externally, and logs never enter model context, you should still patch—but your immediate risk is substantially lower based on the stated threat model.

Remediation: what to do right now

1) Patch: upgrade to 2026.2.13+

This is the vendor’s primary fix and includes header sanitization and truncation. (GitHub)

2) Treat logs as untrusted input in AI workflows

Even after patching, build muscle memory around a simple policy:

If the model can see it, an attacker can try to influence it.

The GitHub advisory explicitly recommends treating logs as untrusted input when using AI-assisted debugging, and avoiding auto-execution of instructions derived from logs. (GitHub)

3) Reduce gateway exposure

The advisory also recommends restricting gateway network exposure and applying reverse-proxy limits on header size where applicable. (GitHub)

In practice, the “best” hardening is boring and effective: keep the gateway off the public internet, force authenticated access, and enforce strict input size limits at the edge.

Hardening guidance for AI-agent deployments

Patching fixes this specific injection point, but if you’re deploying agents, you want systemic controls that assume similar issues will recur.

Separate “human-readable logs” from “model-readable context”

A common failure mode is sending raw logs into the prompt because it’s convenient. Instead, consider a pipeline where:

- raw logs stay raw and are never exposed directly to the model, and

- a sanitizer/normalizer produces a model-safe “telemetry summary” that is stripped of attacker-controlled fields, capped in size, and annotated as untrusted.

Even if OpenClaw sanitizes Origem / Agente do usuário now, your environment likely has other attacker-controlled strings: request paths, referers, usernames, message bodies, headers from upstream proxies, and so on.

Require explicit user confirmation for sensitive actions

If an agent can do things like rotate keys, modify cloud policies, download binaries, or run shell commands, treat every action as needing a high-friction confirmation gate—especially when the “reason” for the action traces back to logs or external text.

Add “prompt injection suspicion” signals to monitoring

Eye Security shows a screenshot of prompt injection detection and notes guardrails can identify suspicious injected content. If your agent stack emits such alerts, treat them as security-relevant telemetry: they’re effectively “attempted compromise” indicators. (Eye Security)

Detection and monitoring: what defenders can look for

This vulnerability’s footprint is conceptually simple: unusually structured or long header values in a WebSocket context, particularly on paths associated with early connection termination.

Even if you don’t have a perfect signature, you can monitor for indicators consistent with the described behavior:

- spikes in “closed before connect” WebSocket events; (GitHub)

- anomalously long

Origem/Agente do usuáriovalues (especially near size limits described by researchers); (Eye Security) - repeated prompt-injection alerts during debugging sessions (if your agent/guardrail stack emits them). (Eye Security)

Keep expectations realistic: this isn’t an exploit that drops a payload. It’s an influence attempt. So “detection” often looks like pattern anomalies + operational correlation (agent suddenly suggesting odd steps after viewing logs, repeated refusals, or strange troubleshooting narratives).

PERGUNTAS FREQUENTES

Is this a remote code execution vulnerability?

No. Both the GitHub advisory and Eye Security describe it as indirect prompt injection risk and report they were not able to directly execute arbitrary commands in their testing; guardrails detected and refused the injected content in their sandbox scenario. (GitHub)

What makes it “critical” in some headlines?

“Critical” here is more editorial than technical. Traditional scoring is low because the direct CIA impact is limited and the attack depends on workflow. (GitHub)

Security teams still care because in agentic environments, reasoning influence can be operationally significant when the agent has real privileges and humans trust its output.

What’s the single best mitigation?

Atualize para openclaw 2026.2.13+ and ensure you don’t feed raw logs directly into LLM prompts without a sanitization layer and strict action gating. (GitHub)

Conclusão final

This OpenClaw issue is a compact case study in why agent security needs a different mental model. The bug is “only” unsanitized WebSocket headers written into logs. But once logs become LLM context, that’s effectively an unauthenticated prompt channel riding inside your observability pipeline. (GitHub)

Patch it, harden your gateway, and—most importantly—design your agent workflows so that untrusted text never becomes “trusted reasoning context” without strong filtering, provenance, and human-in-the-loop control.