If you stay in security long enough, you eventually develop a bad habit: you stop seeing "features" and start seeing potential attack paths. The recent story "ChatGPT hacked using Custom GPTs exploiting an SSRF vulnerability to expose secrets" is a textbook example. What looked like a convenient Actions feature for Custom GPTs turned out to be a direct tunnel into OpenAI's cloud environment. There was no exotic prompt incantation and no "AI magic" gone wrong; just a very old bug, server-side request forgery, rediscovered on a very new platform.

That is exactly why this incident matters. It is not another overhyped headline about AI going rogue. It is a reminder that once you embed LLMs in real infrastructure, the old rules of application and cloud security come back with a vengeance. The models may be new; the underlying bugs are not.

Reconstructing the exploit: from "Add Action" to a cloud token

To understand what happened, you have to look at how Custom GPTs work. OpenAI's interface lets you define Actions: external HTTP APIs that your GPT can call, described by an OpenAPI schema. For users, this is like giving your GPT "superpowers": it can fetch data, trigger workflows and connect to internal systems. Under the hood, it means there is a backend HTTP client running inside OpenAI's infrastructure that will happily call the URLs you define and feed the responses back to the model.
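
To see why this matters, it helps to look at where the request URL actually comes from. A minimal sketch, assuming a simplified OpenAPI 3 document of the kind an Action is described with: the tenant writes both the `servers` URL and the paths, and a backend composes the outgoing URL from them. The schema and the `action_request_urls` helper are hypothetical illustrations, not OpenAI's implementation.

```python
from urllib.parse import urljoin

# Hypothetical Action definition: every URL-bearing field here is tenant-controlled.
ACTION_SCHEMA = {
    "openapi": "3.1.0",
    "info": {"title": "Weather lookup", "version": "1.0.0"},
    "servers": [{"url": "https://api.example.com/v1/"}],
    "paths": {
        "/forecast": {"get": {"operationId": "getForecast"}},
        "/alerts": {"get": {"operationId": "getAlerts"}},
    },
}


def action_request_urls(schema: dict) -> dict:
    """Map each operationId to the absolute URL a backend HTTP client would call."""
    base = schema["servers"][0]["url"]
    urls = {}
    for path, item in schema.get("paths", {}).items():
        for op in item.values():
            # lstrip("/") keeps the server's base path (/v1/) when joining;
            # urljoin would replace it with an absolute path otherwise.
            urls[op["operationId"]] = urljoin(base, path.lstrip("/"))
    return urls


if __name__ == "__main__":
    for op_id, url in action_request_urls(ACTION_SCHEMA).items():
        print(op_id, "->", url)
```

Nothing in this mapping constrains where `base` points, so any restriction on destinations has to be enforced by the backend at request time.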

A researcher from Open Security noticed exactly this. While experimenting with Custom GPTs, they saw a familiar pattern: user-controlled URLs, a "Test" button that sends live requests, and a server clearly running in a cloud environment. Anyone who has pen-tested in the cloud will recognize the instinct: check whether you can trick this server into calling internal addresses on your behalf. That is the essence of SSRF.

In cloud environments, the most valuable internal target is almost always the metadata service. On Azure, as on other clouds, it lives at the link-local address 169.254.169.254. From inside a VM or container, this endpoint can reveal details about the instance and, more importantly, issue short-lived tokens that let workloads call the cloud management APIs. From outside the cloud, you cannot reach it at all. That is exactly why SSRF matters so much: you hijack the server's vantage point and force it to talk to things that you, as an external attacker, cannot.
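
Python's standard `ipaddress` module is enough to classify an address the way this paragraph describes. A minimal sketch, assuming "internal" means anything that is not globally routable unicast (private ranges, loopback, link-local addresses such as 169.254.169.254, and similar) plus multicast; the helper name `is_internal_address` is hypothetical.

```python
import ipaddress


def is_internal_address(ip: str) -> bool:
    """Return True if `ip` is not a globally routable unicast address."""
    addr = ipaddress.ip_address(ip)
    # Unwrap IPv4-mapped IPv6 (e.g. ::ffff:169.254.169.254) so it is judged as IPv4.
    if isinstance(addr, ipaddress.IPv6Address) and addr.ipv4_mapped is not None:
        addr = addr.ipv4_mapped
    # Multicast ranges are not flagged by is_global, so reject them explicitly.
    return not addr.is_global or addr.is_multicast


if __name__ == "__main__":
    for candidate in ["169.254.169.254", "10.0.0.5", "127.0.0.1",
                      "::ffff:169.254.169.254", "8.8.8.8"]:
        print(f"{candidate:>24}  internal={is_internal_address(candidate)}")
```

Classifying the resolved IP, rather than pattern-matching the hostname string, matters because the same address can be written in several forms or reached through an ordinary-looking DNS name.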

The first obstacle the researcher hit was that Custom GPT Actions only allowed HTTPS URLs, while the metadata service speaks plain HTTP only. At first glance this restriction looks like a defense, but in practice it is just one more puzzle piece. The workaround was simple: register an external HTTPS domain under the researcher's control, have it answer with a 302 redirect pointing to the HTTP metadata URL http://169.254.169.254, and see whether the Actions backend follows the redirect. It did. Suddenly, an innocent-looking HTTPS call in a Custom GPT configuration turned into an HTTP call to the internal cloud metadata endpoint.
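
The defensive counterpart to the redirect trick is to stop letting the HTTP library follow redirects on its own and instead re-validate every hop before requesting it: its scheme, and every IP its host resolves to. A minimal sketch, assuming an injectable `fetch` callable that performs one request and returns the status code and `Location` header, plus an injectable resolver; `follow_redirects_safely` and `HopRejected` are hypothetical names. Validating resolved IPs and then letting a library re-resolve the name still leaves a DNS-rebinding gap, so a production client should also connect to the exact IP it validated.

```python
import ipaddress
import socket
from typing import Callable, List, Optional, Tuple
from urllib.parse import urljoin, urlparse

# Assumed interfaces: fetch(url) -> (status_code, Location header or None);
# resolve(hostname) -> list of IP address strings.
Fetch = Callable[[str], Tuple[int, Optional[str]]]
Resolve = Callable[[str], List[str]]

REDIRECT_CODES = {301, 302, 303, 307, 308}


class HopRejected(Exception):
    """A URL in the redirect chain failed validation; nothing was sent to it."""


def system_resolve(host: str) -> List[str]:
    """Resolve a hostname (or IP literal) to every address the client might use."""
    return sorted({info[4][0] for info in socket.getaddrinfo(host, None)})


def validate_hop(url: str, resolve: Resolve) -> None:
    parsed = urlparse(url)
    if parsed.scheme != "https":
        raise HopRejected(f"non-HTTPS hop: {url}")
    if not parsed.hostname:
        raise HopRejected(f"missing host: {url}")
    for ip in resolve(parsed.hostname):
        addr = ipaddress.ip_address(ip)
        if not addr.is_global or addr.is_multicast:
            raise HopRejected(f"{url} resolves to non-public address {ip}")


def follow_redirects_safely(url: str, fetch: Fetch, resolve: Resolve = system_resolve,
                            max_hops: int = 5) -> str:
    """Validate each hop before requesting it; return the URL of the final, non-redirect response."""
    for _ in range(max_hops + 1):
        validate_hop(url, resolve)
        status, location = fetch(url)
        if status not in REDIRECT_CODES or not location:
            return url
        url = urljoin(url, location)  # Location may be relative
    raise HopRejected(f"more than {max_hops} redirects")


if __name__ == "__main__":
    # Offline demo with stand-ins for DNS and HTTP (hypothetical host names).
    fake_dns = {"actions-test.example": ["93.184.216.34"]}

    def fake_fetch(url: str) -> Tuple[int, Optional[str]]:
        # Mimics the attack shape: a public HTTPS URL that 302s to the metadata address.
        return (302, "http://169.254.169.254/metadata/instance")

    try:
        follow_redirects_safely("https://actions-test.example/start", fake_fetch,
                                lambda host: fake_dns.get(host, [host]))
    except HopRejected as exc:
        print("blocked:", exc)
```

In the demo, the first hop passes (a public HTTPS host) and the second is rejected before any request is sent to it, because it is plain HTTP; an HTTPS redirect to a name resolving to 169.254.169.254 would be rejected by the IP check instead.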

Azure's metadata service is not entirely naive, though. To prevent casual abuse, it requires a special header, `Metadata: true`, on every request. If the header is missing, the service refuses to return real data. At this point the system might still look safe, because the OpenAPI schema interface used to define Actions does not expose arbitrary header configuration. But large systems rarely have only one configuration surface. In this case, the Actions feature also supports "API keys" and other authentication headers that you can define when connecting an external service, and those headers are automatically attached to outgoing requests.

That was enough to complete the chain. By defining a fake "API key" whose header name was literally `Metadata` and whose value was `true`, the researcher got the backend to add exactly the header Azure IMDS expects. Combine that with the redirect trick and you have a working SSRF channel from the Custom GPT Actions backend to the metadata service, complete with the `Metadata: true` header.
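
The header-injection half of the chain suggests a second guard: validate the header names tenants may configure for API-key authentication, not just their values. A minimal sketch, assuming a denylist of names that have special meaning to cloud metadata services or proxies; the list is illustrative rather than exhaustive (`Metadata` is what Azure IMDS requires, `Metadata-Flavor` is what the Google Cloud metadata server requires, `X-aws-ec2-metadata-token` carries the AWS IMDSv2 session token), and `validate_auth_header_name` is a hypothetical helper.

```python
import re

# Characters RFC 9110 allows in a field name ("token"); anything else, e.g. CR/LF, is rejected.
_FIELD_NAME = re.compile(r"[!#$%&'*+\-.^_`|~0-9A-Za-z]+")

# Illustrative, not exhaustive: names that mean something to metadata services or proxies.
RESERVED_HEADER_NAMES = {
    "metadata",                  # Azure IMDS requires "Metadata: true"
    "metadata-flavor",           # Google Cloud metadata server requires "Metadata-Flavor: Google"
    "x-aws-ec2-metadata-token",  # AWS IMDSv2 session token
    "host",
    "forwarded",
    "x-forwarded-for",
    "x-forwarded-host",
}


def validate_auth_header_name(name: str) -> str:
    """Return the lower-cased header name, or raise ValueError if tenants must not set it."""
    if not _FIELD_NAME.fullmatch(name):
        raise ValueError(f"invalid header field name: {name!r}")
    lowered = name.lower()  # header names are case-insensitive
    if lowered in RESERVED_HEADER_NAMES:
        raise ValueError(f"reserved header name: {name!r}")
    return lowered


if __name__ == "__main__":
    for candidate in ["X-Api-Key", "Authorization", "Metadata", "metadata", "X-Key\r\nMetadata"]:
        try:
            print(repr(candidate), "->", validate_auth_header_name(candidate))
        except ValueError as exc:
            print(repr(candidate), "-> rejected:", exc)
```

A denylist like this is a backstop rather than the main control; the redirect and egress checks are what actually keep requests away from the metadata address.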

Once that channel was in place, the rest was almost mechanical. Using the well-known IMDS path, the researcher asked the metadata service for an OAuth2 token for the Azure Management API. The response contained an access token tied to the cloud identity used by the ChatGPT infrastructure. Depending on how much privilege that identity had, the token could at minimum query management endpoints and potentially reach sensitive resources. At that point the researcher stopped and reported the findings through OpenAI's bug bounty program; OpenAI rated the vulnerability as high severity and moved to patch it.
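
On the detection side, requests to metadata endpoints from an Actions-style egress tier should be rare enough to alert on, and requests to token paths even more so. A minimal sketch, assuming egress logs already parsed into dicts with `host` and `path` keys (a hypothetical format); the hosts and path prefixes are the documented metadata addresses and credential paths for Azure (`/metadata/identity/oauth2/token`), AWS and Google Cloud, and the list is not exhaustive.

```python
from typing import Iterable, List, Optional, Tuple

# Documented metadata addresses: shared IPv4 link-local, AWS IPv6 (Nitro), GCP DNS name.
METADATA_HOSTS = {"169.254.169.254", "fd00:ec2::254", "metadata.google.internal"}

# Path prefixes (lower-cased) that return credentials rather than plain instance data.
TOKEN_PATH_PREFIXES = (
    "/metadata/identity/oauth2/token",                # Azure managed identity token
    "/latest/meta-data/iam/security-credentials",     # AWS instance profile credentials
    "/computemetadata/v1/instance/service-accounts",  # GCP service account tokens
)


def classify(entry: dict) -> Optional[str]:
    """Return 'critical' for token/credential paths, 'high' for other metadata access, else None."""
    host = entry.get("host", "").lower().strip("[]")  # tolerate bracketed IPv6 literals
    if host not in METADATA_HOSTS:
        return None
    path = entry.get("path", "").lower()
    if path.startswith(TOKEN_PATH_PREFIXES):
        return "critical"
    return "high"


def flag_metadata_requests(entries: Iterable[dict]) -> List[Tuple[str, dict]]:
    """Return (severity, entry) pairs for every log entry that touched a metadata endpoint."""
    alerts = []
    for entry in entries:
        severity = classify(entry)
        if severity:
            alerts.append((severity, entry))
    return alerts
```

Because a legitimate Action should never need the metadata service at all, even the "high" tier is worth paging on in this context.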

What makes this chain striking is that the attacker needed no shell access, no source code, and no classic bug in the HTTP stack. Everything happened inside ordinary configuration screens: URLs, authentication settings, and a test button that obediently carried out the mischief.

A small code sketch of an SSRF-style probe

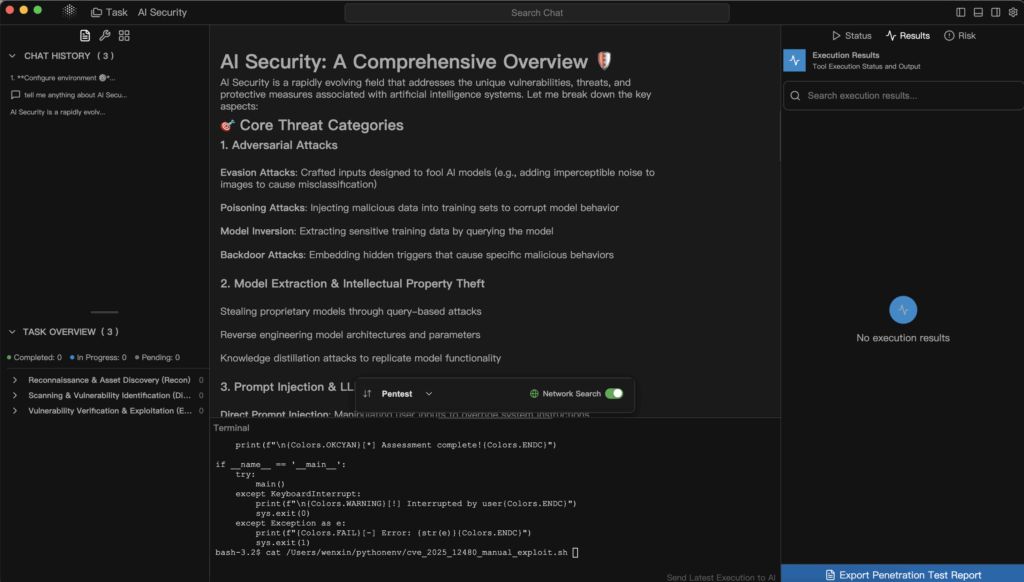

To make this more concrete, imagine a tiny internal helper script that a security engineer might use to sanity-check an "Actions-style" HTTP client. The goal is not to hit real metadata services in production, but to build the habit of inspecting unexpected redirects and hops to internal IPs in staging or lab environments:

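```python
import ipaddress
import socket
from urllib.parse import urljoin, urlparse

import requests


def is_internal(ip: str) -> bool:
    """Private, loopback, link-local (e.g. 169.254.169.254) and other non-global addresses."""
    return not ipaddress.ip_address(ip).is_global


def resolve_host(url: str) -> set:
    """Return every IP address the URL's hostname currently resolves to."""
    host = urlparse(url).hostname
    if not host:
        return set()
    return {info[4][0] for info in socket.getaddrinfo(host, None)}


def trace_request(base_url: str, path: str = "/") -> None:
    url = urljoin(base_url, path)
    print(f"[+] Requesting {url}")
    try:
        resp = requests.get(url, timeout=3, allow_redirects=True)
    except requests.RequestException as e:
        print(f"[!] Error: {e}")
        return

    print(f"[+] Final URL: {resp.url}")
    print(f"[+] Status: {resp.status_code}")
    print("[+] Redirect chain:")
    for h in resp.history:
        print(f"    {h.status_code} -> {h.headers.get('Location')}")

    # Very rough heuristic: warn if any hop, including the final URL, resolves to an
    # internal address. Re-resolving after the fact may not match what the client
    # actually connected to (e.g. under DNS rebinding), so treat this as a hint only.
    for hop_url in [r.url for r in resp.history] + [resp.url]:
        try:
            ips = resolve_host(hop_url)
        except socket.gaierror:
            continue
        for ip in sorted(ips):
            if is_internal(ip):
                print(f"[!] Warning: {hop_url} resolves to internal address {ip}")


if __name__ == "__main__":
    # Example: replace with a controlled test endpoint in your own lab
    trace_request("")
```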

A script like this does not "exploit ChatGPT", but it captures the same shape of investigation: start from a supposedly safe external URL, follow redirects, and complain loudly if your HTTP client suddenly finds itself talking to internal or link-local IP ranges. Turning this pattern into automation and running it against the components that power your own AI Actions is far more useful than just reading about the incident.

This is not an "AI bug"; it is old-school cloud exploitation on a new stage

It is tempting to read this as "ChatGPT got hacked" and move on. That framing misses the deeper lesson. Nothing about the model itself misbehaved. No prompt somehow unlocked forbidden capabilities. The LLM did what it was told: it called an Action, read the result, and summarized it. The vulnerability lived entirely in the glue between the LLM and the outside world.

That glue is exactly where security teams should focus. When you give an LLM the ability to call tools, Actions, or plugins, you have effectively turned it into a programmable client inside your infrastructure. In the past, a user called your API directly and you scrutinized their inputs. Now the user gives instructions to a model, and the model turns them into API calls on the user's behalf. The model becomes one more path for hostile intent to reach your backend.

Seen this way, the incident is simply classic SSRF, as catalogued by OWASP, in a different costume. The preconditions are all familiar: user-influenced URLs, a server that can reach internal or privileged endpoints, missing or incomplete egress controls, and a cloud metadata service that ordinary workloads can readily reach. The difference is that the entry point is no longer a classic web form or a JSON field; it is a configuration block designed to make Custom GPTs more powerful.

This is also why the blast radius matters. The affected server was not some random microservice; it was part of ChatGPT's multi-tenant infrastructure, running in OpenAI's Azure environment. Any token obtained through IMDS belonged to a workload that already had meaningful access. Even if local defenses limited what an attacker could do, the risk profile is fundamentally different from that of a forgotten test VM.

AI as an integration hub: expanding attack surfaces and shifting trust boundaries

The more interesting story behind this bug is architectural. AI platforms are quickly becoming integration hubs. A Custom GPT for a sales team might talk to a CRM, a billing system, and a document store. A security-focused GPT might talk to scanners, ticketing systems, and CI/CD. In every case, the LLM is not the asset; the data and actions behind those connectors are.

Once you accept that, your mental threat model has to change too. You can no longer think of "AI security" only in terms of prompt injection, data leakage, or toxic outputs. You also have to ask unglamorous questions about network boundaries, cloud identity, and tenant isolation.

What can the infrastructure running your Actions actually talk to on the network? In many cloud environments the default is "any egress is allowed as long as DNS resolves". That made sense when services were relatively simple and engineers wanted flexibility. But once you put an LLM platform in the middle, every tenant suddenly has a way to propose new outbound destinations through configuration instead of code. Without a strong egress policy, you have effectively built a programmable SSRF launcher.
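
One way to express "deny by default" for tenant-defined destinations is to require both an allowlisted hostname and a public resolved address before any connection is made. A minimal sketch; the `EgressPolicy` class, its suffix-matching rule and the example domains are hypothetical, and in a real deployment the same policy belongs in the network layer (firewall rules or an egress proxy), not only in application code.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class EgressPolicy:
    """Hypothetical per-tenant egress policy: default deny, explicit domain allowlist."""
    allowed_domains: Set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        # Normalize once so comparisons are case-insensitive and ignore a trailing dot.
        self.allowed_domains = {d.lower().rstrip(".") for d in self.allowed_domains}

    def host_allowed(self, host: str) -> bool:
        host = host.lower().rstrip(".")
        # Exact match or a true subdomain: "evilapi.example.com" does not match "api.example.com".
        return any(host == d or host.endswith("." + d) for d in self.allowed_domains)

    def check(self, host: str, resolved_ips: List[str]) -> bool:
        """Allow only allowlisted hosts whose resolved addresses are all public unicast."""
        if not resolved_ips or not self.host_allowed(host):
            return False
        for ip in resolved_ips:
            addr = ipaddress.ip_address(ip)
            if not addr.is_global or addr.is_multicast:
                return False
        return True


if __name__ == "__main__":
    policy = EgressPolicy({"api.example.com"})
    print(policy.check("api.example.com", ["93.184.216.34"]))    # allowlisted, public
    print(policy.check("api.example.com", ["169.254.169.254"]))  # DNS points at metadata
```

Checking the resolved addresses as well as the name covers the case where an allowlisted domain's DNS is later pointed at an internal address.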

How much privilege do the identities used by these workloads actually have? In the ChatGPT case, the researcher was able to request a token for the Azure Management API. Even if that token was constrained by role assignments, it still represents a high-value secret. In many organizations there is a strong temptation to grant broad permissions to "platform infrastructure" because it simplifies deployment. For anything that user input can steer indirectly, especially through AI, that temptation is dangerous.
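
A quick way to ground the privilege question is to list the role assignments of the identity your AI runtime uses and flag broad roles held at broad scopes. A minimal sketch, assuming records shaped like the JSON that `az role assignment list` returns (with `roleDefinitionName` and `scope` fields); the set of "broad" roles and the severity thresholds are illustrative judgment calls, not an Azure rule, and `review_assignments` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

# Built-in Azure roles that can modify resources or grant access; treated as "broad" here.
BROAD_ROLES = {"Owner", "Contributor", "User Access Administrator"}


def scope_level(scope: str) -> str:
    """Classify an Azure RBAC scope string by breadth."""
    parts = [p.lower() for p in scope.split("/") if p]
    if not parts or "managementgroups" in parts:
        return "tenant/management-group"
    if parts[0] == "subscriptions" and len(parts) == 2:
        return "subscription"
    if parts[0] == "subscriptions" and len(parts) == 4 and parts[2] == "resourcegroups":
        return "resource-group"
    return "resource"


def review_assignments(assignments: List[Dict[str, str]]) -> List[Tuple[str, str]]:
    """Return (severity, message) findings for broad roles held at broad scopes."""
    findings = []
    for a in assignments:
        role, scope = a.get("roleDefinitionName", ""), a.get("scope", "")
        if role not in BROAD_ROLES:
            continue
        level = scope_level(scope)
        if level in {"tenant/management-group", "subscription"}:
            findings.append(("high", f"{role} at {level} scope {scope}"))
        elif level == "resource-group":
            findings.append(("medium", f"{role} at resource-group scope {scope}"))
    return findings
```

Feeding it the parsed JSON for the runtime's managed identity gives a short, reviewable list; an empty result does not prove least privilege, since narrowly scoped data-plane roles can still expose sensitive resources.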

Where exactly are the trust boundaries between tenants, between the control plane and the data plane, and between the AI runtime and the rest of the cloud? A well-designed system should assume that any tenant's configuration may be hostile, that any outbound call made on that tenant's behalf may be hostile, and that escalating from an Action to metadata and management APIs is a realistic attacker goal. That perspective turns patterns such as strict network segmentation, policy-enforcing sidecars, and dedicated service identities from "nice to have" into non-negotiable.

From a single incident to a repeatable testing methodology

For defenders and builders, the real value of this story is not the specific bug; it is the testing mindset it demonstrates. The researcher essentially treated Custom GPT Actions as a new kind of HTTP client and then walked through a familiar checklist: Can I control the URL? Can I reach internal hosts? Can I abuse redirects? Can I inject headers? Can I hit the metadata service? Can I turn that into a cloud token?

That mental checklist is exactly what should be automated in modern penetration-testing workflows for AI platforms. Instead of waiting for a headline and a bounty report, teams should routinely treat their own Custom GPT infrastructure, plugin ecosystems, and tool chains as targets.

To make this a bit more concrete, you can think of an "AI Actions SSRF review" as a simple, repeatable sequence like the following:

| Stage | Key question | Example from the ChatGPT case |
|---|---|---|
| URL influence | Can the tenant meaningfully control the URL? | Custom GPT Actions allow user-defined external endpoints. |
| Redirect behavior | Do we follow redirects to unknown locations? | An HTTPS endpoint redirected to 169.254.169.254. |
| Header manipulation | Can the tenant indirectly set sensitive headers? | The API-key configuration was used to inject `Metadata: true`. |
| Privilege and tokens | What can any obtained token actually do? | IMDS issued a management API token for the workload. |

Once you have built a table like this for your environment, the tests you run become much easier both to automate and to explain. You can add it to internal runbooks, share it with vendors, and hold future AI features to the same standard.

This is where specialized, AI-aware security tooling starts to matter. A generic web scanner may not know how to navigate a UI that hides network calls behind Actions, or how to reason about the OpenAPI schemas inside a GPT definition. An AI-focused pentest platform such as Penligent, by contrast, can treat those schemas and configurations as first-class inputs. You can imagine a workflow in which you export the Action configuration for a set of Custom GPTs or other AI tools, feed it into an agentic testing pipeline, and let it systematically probe for SSRF conditions, unsafe redirects, unrestricted network access, and metadata exposure.

Penligent's philosophy of combining automation with human-in-the-loop control fits this model well. An agent can enumerate all tool definitions, generate candidate payloads for endpoints that accept URLs or hostnames, and drive scripted traffic that simulates what a curious attacker would try. When the system finds promising behavior, for example a seemingly external HTTPS endpoint that follows redirects into internal IP ranges, it can surface it as evidence: request logs, response snippets, and the inferred internal topology. A human operator can then steer the next steps, for example by asking the system to pivot specifically toward cloud metadata routes or to verify whether returned tokens are valid against management APIs.
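
The first step of that loop, enumerating tool definitions and spotting inputs that carry URLs or hostnames, can be sketched without any particular platform. A minimal sketch, assuming OpenAPI 3 schemas loaded as Python dicts with `$ref`s already resolved; the name heuristics (`url`, `uri`, `host`, `endpoint`, `callback`, `webhook`, `redirect`) and the `format` values checked are illustrative assumptions, and this is not a description of how Penligent implements it.

```python
import re
from typing import Iterator, List, Tuple

URLISH_NAME = re.compile(r"url|uri|host|endpoint|callback|webhook|redirect", re.IGNORECASE)
URLISH_FORMATS = {"uri", "uri-reference", "hostname"}
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def _properties(schema: dict, prefix: str = "") -> Iterator[Tuple[str, dict]]:
    """Yield (dotted_name, subschema) for nested object properties."""
    for name, sub in schema.get("properties", {}).items():
        full = f"{prefix}{name}"
        yield full, sub
        yield from _properties(sub, full + ".")


def _looks_urlish(name: str, sub: dict) -> bool:
    leaf = name.split(".")[-1]
    return bool(URLISH_NAME.search(leaf)) or sub.get("format") in URLISH_FORMATS


def url_like_inputs(openapi: dict) -> List[Tuple[str, str, str]]:
    """Return (operationId, location, name) for inputs that probably carry a URL or host."""
    hits = []
    for path, item in openapi.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:  # skip path-level keys such as "parameters"
                continue
            op_id = op.get("operationId", f"{method.upper()} {path}")
            for param in op.get("parameters", []):
                if _looks_urlish(param["name"], param.get("schema", {})):
                    hits.append((op_id, param.get("in", "?"), param["name"]))
            for media in op.get("requestBody", {}).get("content", {}).values():
                for name, sub in _properties(media.get("schema", {})):
                    if _looks_urlish(name, sub):
                        hits.append((op_id, "body", name))
    return hits
```

Each hit is a candidate for the redirect and metadata probes described earlier; the heuristics will produce some false positives, which is acceptable when a human reviews the list before anything is sent.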

This kind of workflow achieves two things. It brings AI platforms into the same evidence-driven security loop that web applications and APIs already have, and it puts the same LLM capabilities that attackers will inevitably use to work for defenders. The bug that hit ChatGPT stops being a one-off surprise and becomes a test case in a regression suite you can run whenever you ship a new integration or change your Actions infrastructure.

Practical lessons for teams working on AI platforms

If you are a security engineer or architect who consumes AI services rather than builds them, this incident still matters a great deal. Even if you never touch Custom GPTs internally, you are probably exposing internal APIs, dashboards, or document stores to some kind of AI agent or copilot. The ideas carry over.

The first step is to stop treating the LLM as the only thing that needs security review. Any feature that lets models call back into your environment, whether through explicit tools, Actions, or indirect webhooks, should be treated as a potential attack graph. You should be able to answer, with some confidence, which internal services an AI component can talk to, which identities it uses, and what happens if a hostile user deliberately tries to expand those capabilities.

The second step is to extend your testing programs to cover AI glue code. When you commission a penetration test or run an internal red-team exercise, make sure the scope explicitly includes AI integrations: the configuration surfaces for tools, the way URLs and headers are constructed, the network paths between AI runtimes and sensitive services, and the protections around metadata endpoints. Ask for evidence that someone, somewhere, has tried to abuse them the way a real attacker would.

The third step is to accept that this attack surface is not going to shrink. As more business processes are wired to LLMs, there will be more Actions, more plugins, and more background services doing work on behalf of prompts. You can bolt security on as a series of incident-driven patches, or you can build a repeatable program: clear threat models, baseline architectural patterns, automated testing pipelines, and tooling (possibly backed by systems such as Penligent) that keeps probing as your environment evolves.

Beyond the headline

It is easy to misread the Custom GPT SSRF hack as a one-off embarrassment for a single vendor. It is more productive to read it as a preview. AI platforms are rapidly turning into orchestration layers that connect users, models, APIs, and cloud infrastructure. That role comes with power, and when something goes wrong, power always comes with a bigger blast radius.

The encouraging part of this story is that it also shows the way forward. The vulnerability was found by a researcher following old instincts in a new context. It was reported through a standard bug bounty channel. It was fixed. Now the rest of us can take the same playbook and apply it proactively to our own systems, ideally with the help of tools that understand both security and AI.

If we do that, the legacy of this incident will not simply be "ChatGPT once had an SSRF". It becomes a case study in how to think about AI security: treat models as components of a larger system, treat integrations as serious attack surfaces, and use automation plus human insight, whether through platforms like Penligent or your own internal pipelines, to keep turning vague worries into concrete, testable, evidence-backed assurance. That is a story worth telling on Medium, and even more worth living in your engineering organization.