In recent months, security researchers have been stunned by the growing sophistication of machine-driven testing. Reports have circulated of long vulnerability lists appearing seemingly out of nowhere: full CVE references, exploit paths, and proof-of-concept scripts, all produced at a speed no human red team could match. What once required teams of specialists working for weeks is now being generated in hours. Whether these incidents are fully autonomous or partly guided doesn’t matter—the message is clear. A new era is here, and AI pentest tools are at its center.

For decades, penetration testing has been defined by scarcity. Skilled testers were few, their tools scattered, and their processes episodic. A typical assessment began with contract scoping, moved through reconnaissance and scanning, then into long cycles of manual exploitation and report writing. By the time results were delivered, systems often had already changed. This mismatch between pace of attack and pace of defense left organizations exposed. Today, the rise of autonomous pentesting promises to compress that gap, shifting the balance of power in ways we are only beginning to understand.

Why the Old Model Fails

Traditional penetration testing faces a structural problem: it is reactive, infrequent, and slow. Modern software, by contrast, moves continuously. New code is shipped daily, APIs are rolled out weekly, and cloud environments shift dynamically. Quarterly or annual tests can no longer keep up. The result is a widening window where vulnerabilities persist unseen, sometimes for months, even in organizations that invest heavily in security.

The problem is not just cadence. Legacy tooling floods testers with noise. A single scan can produce thousands of raw findings, most of them false positives. Human operators are left with the grinding work of triage—time that could have been spent investigating deeper flaws. Worse, these workflows often exist in silos: a scanner here, an exploit framework there, an improvised script in between. Very few environments achieve full coverage, and almost none can repeat their tests with the regularity that modern security demands.

What Autonomous Pentesting Looks Like

The next generation of AI pentest tools attempts to close this gap. They are not simple wrappers around old scanners. They combine orchestration, validation, and reasoning into cohesive systems that resemble the workflow of a human expert, but at machine scale and speed.

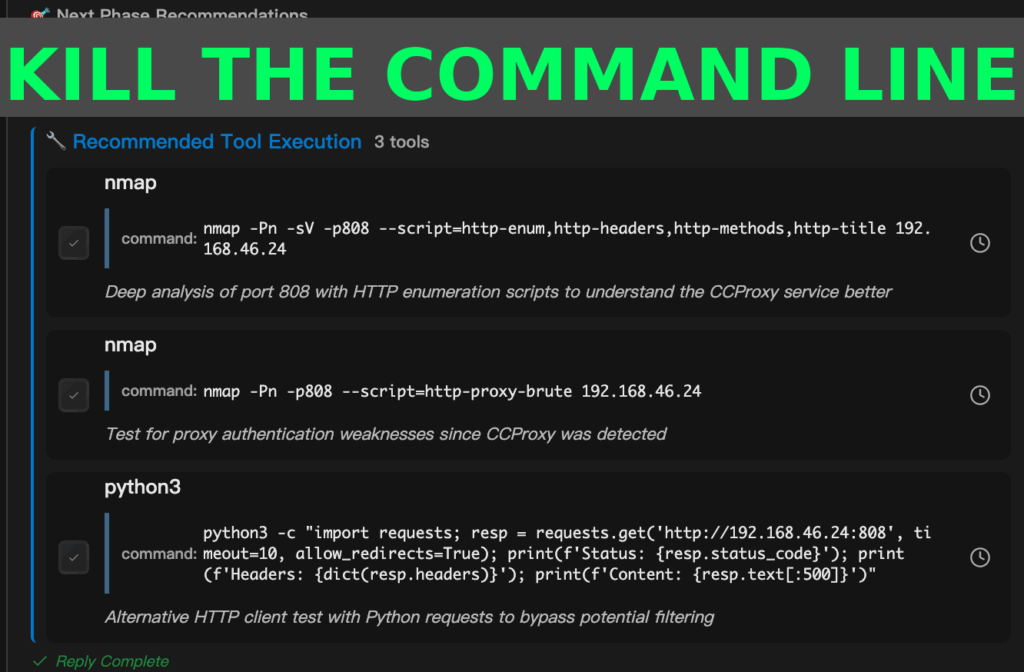

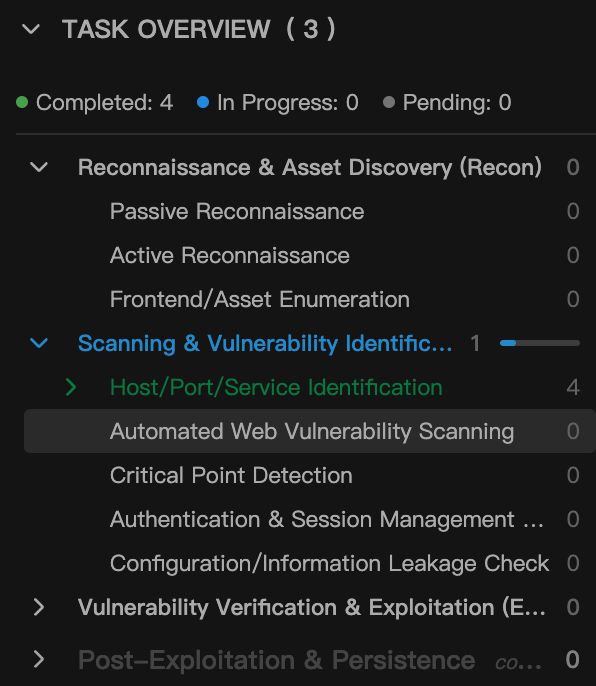

These tools begin with intent: a command such as “check this web application for SQL injection” is parsed into structured subtasks. Reconnaissance, scanning, exploitation, and validation are chained together automatically. When a vulnerability is suspected, the tool runs additional probes to confirm it is exploitable, filtering out false alarms before results are surfaced. Every step is logged, creating an auditable trail that explains not only what was found, but why.
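The flow described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the names `plan`, `run_pipeline`, `Finding`, and `AuditLog` are hypothetical, the planner returns a fixed chain of stages, and the scanner and validator are stubs so the example runs without touching a network.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Finding:
    target: str
    issue: str
    confirmed: bool = False


@dataclass
class AuditLog:
    entries: List[str] = field(default_factory=list)

    def record(self, stage: str, detail: str) -> None:
        # Each entry says which stage ran and what it decided.
        self.entries.append(f"{stage}: {detail}")


def plan(intent: str) -> List[str]:
    # Hypothetical planner: a real tool would derive stages from the intent.
    # In this sketch every intent maps to the same fixed chain.
    return ["recon", "scan", "validate"]


def run_pipeline(
    intent: str,
    target: str,
    scan: Callable[[str], List[Finding]],
    validate: Callable[[Finding], bool],
) -> Tuple[List[Finding], AuditLog]:
    log = AuditLog()
    log.record("plan", f"intent={intent!r} -> {plan(intent)}")
    log.record("recon", f"target={target}")  # recon is only logged here

    candidates = scan(target)
    log.record("scan", f"{len(candidates)} candidate finding(s)")

    confirmed = []
    for finding in candidates:
        # A second probe decides whether the suspicion is really exploitable.
        finding.confirmed = validate(finding)
        verdict = "confirmed" if finding.confirmed else "discarded"
        log.record("validate", f"{finding.issue}: {verdict}")
        if finding.confirmed:
            confirmed.append(finding)
    return confirmed, log


# Stub scanner and validator (assumptions for the demo, no real probing).
def fake_scan(target: str) -> List[Finding]:
    return [
        Finding(target, "sqli in /search?q="),
        Finding(target, "sqli in /login"),
    ]


def fake_validate(finding: Finding) -> bool:
    return "/search" in finding.issue


if __name__ == "__main__":
    results, log = run_pipeline(
        "check this web application for SQL injection",
        "https://staging.example.test",
        fake_scan,
        fake_validate,
    )
    for entry in log.entries:
        print(entry)
```

Only findings that survive the validation step are returned, while the log keeps a record of the discarded ones too, which is what makes the result auditable.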

Research prototypes already demonstrate what is possible. Frameworks like xOffense and RapidPen show how multiple agents can coordinate complex attack flows, achieving success rates in controlled settings that rival human testers. Benchmarks such as TermiBench expose where these systems still fail: messy real-world environments with session states, WAFs, and unpredictable defenses. But progress is undeniable. What once looked like science fiction now feels like an engineering problem on the cusp of practical reality.

Shifting the Advantage to Defenders

Skeptics argue that autonomous pentesting only arms attackers. But defenders have a structural advantage: access to their own systems, visibility into logs, and the authority to run tests safely and continuously. The same technology that could automate offensive exploitation can be repurposed to harden defenses, if deployed responsibly.

For organizations, this means pentesting is no longer a rare event. With the right toolchain, it can become continuous—a background process as integral as unit tests or CI/CD checks. Vulnerabilities can be discovered and remediated within hours of introduction, not months. The combination of reasoning, verification, and transparency makes these tools more than scanners; they become trusted copilots for security teams.

This is where Penligent comes in. Unlike academic prototypes, Penligent is built as a production-ready AI pentest tool. It integrates more than 200 industry-standard modules, runs automatic verification on each finding, and preserves decision logs that auditors and engineers can review. It is designed to fit into modern workflows, from DevSecOps pipelines to compliance reporting, so that continuous pentesting is not just a vision but a daily reality. The product demo shows it in action: how quickly a target can be tested, validated, and reported without human intervention at every step.

The Limits We Must Acknowledge

Of course, no tool is perfect. AI pentest tools face real limitations. They can miss logic flaws that require deep domain knowledge. They can generate false negatives if a vulnerability hides behind unusual workflows. They can be expensive to run at scale, consuming compute resources with repeated probes and validations. And without strict safeguards—authorization checks, throttling, kill switches—they risk being misused as automated attack engines.
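The three safeguards named above can be combined into a single gate that every probe must pass. The following is a rough sketch under stated assumptions: the `Safeguards` class, `SafeguardError`, and `guarded_probe` are hypothetical names, authorization is reduced to a host allowlist, and throttling is a simple minimum interval between probes.

```python
import threading
import time
from typing import Callable, Iterable, Optional


class SafeguardError(RuntimeError):
    """Raised when a probe is refused by one of the safeguards."""


class Safeguards:
    def __init__(self, allowed_hosts: Iterable[str], min_interval_s: float = 0.0):
        self.allowed_hosts = frozenset(allowed_hosts)  # authorized scope
        self.min_interval_s = min_interval_s           # throttle between probes
        self.kill_switch = threading.Event()           # set() halts all probing
        self._last_probe: Optional[float] = None

    def check(self, host: str) -> None:
        # Kill switch first: once engaged, nothing else is evaluated.
        if self.kill_switch.is_set():
            raise SafeguardError("kill switch engaged")
        # Authorization: refuse anything outside the agreed scope.
        if host not in self.allowed_hosts:
            raise SafeguardError(f"{host} is outside the authorized scope")
        # Throttling: sleep until the minimum interval has elapsed.
        now = time.monotonic()
        if self._last_probe is not None:
            wait = self.min_interval_s - (now - self._last_probe)
            if wait > 0:
                time.sleep(wait)
        self._last_probe = time.monotonic()


def guarded_probe(guards: Safeguards, host: str, probe: Callable[[str], str]) -> str:
    # The probe callable only runs if every safeguard passes.
    guards.check(host)
    return probe(host)


if __name__ == "__main__":
    guards = Safeguards({"staging.example.test"}, min_interval_s=0.1)
    print(guarded_probe(guards, "staging.example.test", lambda h: f"probed {h}"))
    guards.kill_switch.set()
    try:
        guarded_probe(guards, "staging.example.test", lambda h: f"probed {h}")
    except SafeguardError as exc:
        print(f"refused: {exc}")
```

Using a `threading.Event` for the kill switch means an operator or a monitoring thread can stop probing from outside the test loop without any extra coordination.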

These realities mean that human oversight will remain essential. Just as automated trading did not eliminate financial analysts, autonomous pentesting will not eliminate red teams. What it will do is change their role: less time spent sifting through noise, more time spent interpreting results, modeling threats, and designing defenses.

What Comes Next

The trajectory is clear. Autonomous systems will grow more capable. Multi-agent orchestration will allow complex attack paths to be executed reliably. Defensive AI will emerge in response, creating a cat-and-mouse game where systems probe and counter each other at machine speed. Regulatory frameworks will begin to define the boundaries of acceptable use, requiring audit logs and provenance for every automated test. Over time, penetration testing will shift from episodic audits to continuous, embedded practice.

For organizations willing to adopt these tools, the payoff is speed, coverage, and clarity. For attackers, it raises the bar. And for defenders, it offers a long-overdue chance to catch up with the pace of modern software.

The rise of autonomous hacking is not theoretical. It is happening now, and it will reshape the practice of security in the years ahead. AI pentest tools will not replace human testers, but they will transform how those testers work, what they focus on, and how often organizations can test themselves.

Penligent represents one of the first serious steps in bringing this vision into reality: a usable, transparent, continuously running system that blends automation with validation and accountability. To see where pentesting is going, you don’t need to wait for the future. You can watch it today in the product demo.