Why pentestAI Matters Now

AI-driven offensive security has moved from proof-of-concept demos into enterprise security programs. Platforms now describe themselves as “AI automated penetration testing,” “autonomous red teaming,” or “continuous security validation,” and they’re being evaluated not just by security researchers but by CISOs and boards.

Pentera positions its platform as automated security validation that continuously executes attacker techniques across internal, external, and cloud environments, then prioritizes remediation and quantifies business risk for leadership. RidgeBot markets itself as an AI-powered autonomous penetration testing robot that behaves like a skilled ethical attacker, scanning enterprise networks, discovering assets and misconfigurations, attempting controlled exploitation, and generating evidence — all without needing a senior red team to babysit every run.

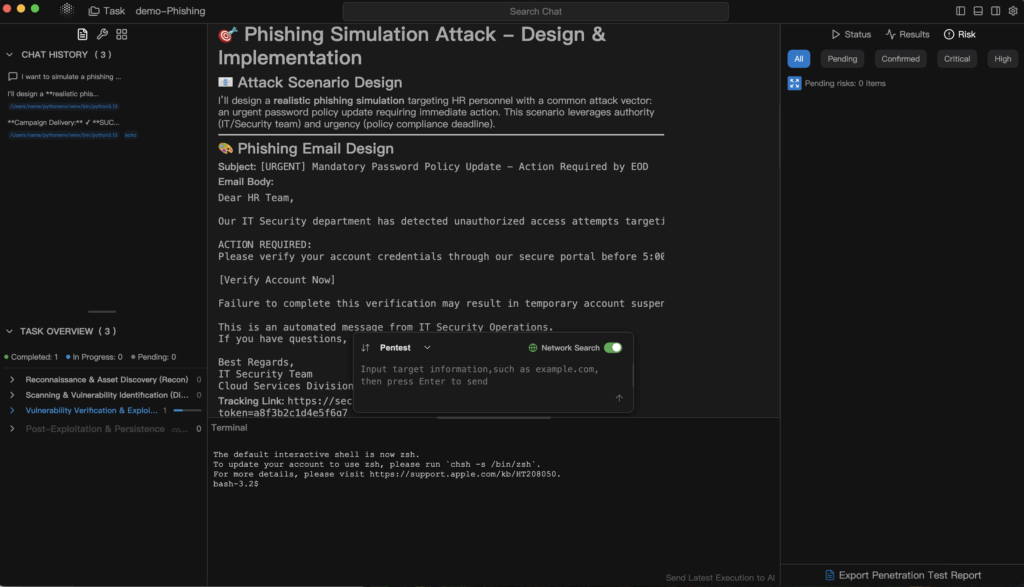

At the same time, lightweight assistants sometimes called “pentestGPT” are being used by security engineers to reason through exploit paths, generate payloads, assist with privilege escalation logic, and draft executive summaries. But these assistants still rely on a human operator to choose targets, run tooling, maintain scope boundaries, and assess real-world impact.

Penligent.ai is designed for teams that need something else entirely: a pentestAI layer that can 1) understand natural language objectives, 2) orchestrate a full offensive workflow across the tools you already run, and 3) generate compliance-grade, audit-ready reporting mapped to formal standards.

In other words, Penligent.ai is not “a scanner with AI on top,” and it’s not “an LLM that writes scripts for you.” It’s an agentic attacker that you brief in plain English, that coordinates dozens or even hundreds of existing security tools, and that hands you an evidence-backed story you can take to engineering, legal, and leadership.

The Shift: From Annual Pentest to Continuous AI Offensive Pressure

Traditional penetration testing is episodic. You scope an engagement, wait for findings, and scramble to remediate before audit. That rhythm assumes your attack surface is fairly static. It is not.

Two things broke that model:

- Your perimeter changes more in one week than it used to in a quarter.

Cloud services spin up and die. Containers get exposed and then forgotten. Third-party APIs quietly expand privilege. Test instances leak into public space. Stolen credentials circulate. Pentera’s pitch is that “continuous security validation” is now mandatory, not optional: you need live attack-path mapping across internal, external, and cloud assets, updated with current TTPs.

- Attack execution itself is being automated.

RidgeBot frames its value as an autonomous ethical attacker that can be deployed in enterprise environments, enumerate assets, attempt exploitation safely, confirm impact, and generate reports, compressing what used to be weeks of manual offensive work into hours.

This is why “pentestAI” is now a buying category. CISOs aren’t just asking “Did we test last quarter?” They’re asking “What’s testing us right now?”, “Where are we actually breachable today?”, and “Can I show that to my board in business terms?”

“pentestGPT,” by contrast, tends to mean: an LLM that helps a human pentester think faster, write faster, and communicate better — but it doesn’t actually run the kill chain end to end on its own.

Penligent.ai is built around that new expectation: persistent, AI automated penetration testing that behaves like an always-on internal red team lead, but without forcing security engineers to write custom scripts or glue together 30 tools manually every single time.

pentestAI vs pentestGPT vs Legacy Automation

pentestGPT-style assistants

- Strengths:
  - Help reason through exploit chains.
  - Suggest payloads for SQLi, IDOR, SSRF, RCE, etc.
  - Walk through privilege escalation or lateral movement steps.
  - Draft remediation guidance and executive summaries.

- Limitations:
  - They do not autonomously execute.
  - They do not coordinate scanners, fuzzers, credential stuffing frameworks, or cloud misconfiguration auditors on your behalf.
  - They do not enforce scope guardrails or collect admissible evidence automatically.

In effect, pentestGPT is an “AI copilot for a human red teamer,” not a red team in itself.

Classic scanners / DAST / SAST stacks

- Strengths: Broad coverage and speed. They can spider web apps, fuzz APIs, and flag common classes of misconfigurations or injection points.

- Limitations: High noise, weak chaining logic, and limited post-exploitation reasoning. Most scanners do not deliver a credible narrative like “Here is how an attacker would actually pivot from this exposed endpoint into sensitive data.” They also do not map findings cleanly to PCI DSS, ISO 27001, or NIST requirements.

Modern pentestAI platforms

Pentera calls this automated security validation: emulate attackers across internal, external, and cloud assets; surface actual attack paths; quantify business impact; and prioritize fixes.

RidgeBot positions itself as an autonomous AI attacker that can enumerate IP ranges, domains, IoT nodes, credentials, and privilege escalation opportunities, then attempt controlled exploitation and ship evidence in near-real time — all without needing a human red team expert glued to the keyboard.

Penligent.ai aims to operate in that same class — but its focus is different:

- You tell it what you want in plain English.

- It federates and orchestrates an existing security toolchain (200+ tools, scanners, recon utilities, exploit frameworks, cloud posture analyzers, etc.).

- It executes a controlled kill chain.

- It assembles the evidence into a compliance-grade report.

That orchestration layer is critical. Most enterprises already have scanners, CSPM tools, API fuzzers, code analyzers, secret detectors, traffic recorders, and exploit proof-of-concept frameworks. The blocker is not “do you have security tools?” The blocker is “can you make them act like a coordinated attacker without hand-writing glue code every time?” Penligent.ai is designed to solve that.
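Much of that glue is normalization: every tool reports in its own format, and nothing can be chained until the results share one schema. The sketch below shows the idea under stated assumptions. The `Finding` fields, the adapter names, and the JSON web-scanner format are hypothetical. Only the Nmap adapter reads a real layout, the standard Nmap XML report (`nmaprun/host/ports/port`), here in simplified form.

```python
"""Sketch: normalize output from different tools into one finding schema.

Finding fields and the JSON scanner format are hypothetical; the Nmap adapter
reads the standard Nmap XML report layout (nmaprun/host/ports/port).
"""
import json
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    source: str   # tool that produced the result
    target: str   # host address or URL
    kind: str     # normalized issue type
    detail: str   # short evidence summary


def from_nmap_xml(xml_text: str) -> list[Finding]:
    """Convert open ports in an Nmap XML report into Finding records."""
    root = ET.fromstring(xml_text)
    findings = []
    for host in root.iter("host"):
        addr = host.find("address").get("addr")
        for port in host.iter("port"):
            if port.find("state").get("state") != "open":
                continue  # closed/filtered ports are not findings
            service = port.find("service")
            name = service.get("name") if service is not None else "unknown"
            detail = f"{port.get('protocol')}/{port.get('portid')} {name}"
            findings.append(Finding("nmap", addr, "open_service", detail))
    return findings


def from_scanner_json(json_text: str) -> list[Finding]:
    """Adapter for a hypothetical web scanner that emits a JSON list of issues."""
    return [Finding("web-scanner", item["url"], item["type"], item["evidence"])
            for item in json.loads(json_text)]


def merge(*batches: list[Finding]) -> list[Finding]:
    """Combine adapter outputs in order, dropping exact duplicates."""
    seen = set()
    merged = []
    for batch in batches:
        for finding in batch:
            if finding not in seen:
                seen.add(finding)
                merged.append(finding)
    return merged


NMAP_XML = """<nmaprun><host><address addr="10.0.0.5" addrtype="ipv4"/><ports>
<port protocol="tcp" portid="443"><state state="open"/><service name="https"/></port>
<port protocol="tcp" portid="23"><state state="closed"/></port>
</ports></host></nmaprun>"""
SCANNER_JSON = ('[{"url": "https://10.0.0.5/admin", "type": "exposed_admin_panel", '
                '"evidence": "HTTP 200 on /admin"}]')

if __name__ == "__main__":
    for finding in merge(from_nmap_xml(NMAP_XML), from_scanner_json(SCANNER_JSON)):
        print(finding)
```

Adding a tool then means writing one more adapter rather than another bespoke pipeline; everything downstream (chaining, reporting) only ever sees `Finding` records.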

A simplified interaction model (natural language → pentestAI orchestration) looks like this:

user: "Test our staging API cluster. Enumerate admin surfaces, attempt session fixation or weak token reuse where allowed, and generate PCI DSS / ISO 27001 mapped findings with screenshots."

agent:

1. Calls recon and OSINT tooling to discover subdomains, services, and exposed panels.

2. Drives auth-testing utilities and replay tooling against allowed endpoints.

3. Captures evidence (HTTP traces, screenshots, replay logs).

4. Normalizes output across tools into one attack narrative.

5. Maps each validated issue to PCI DSS, ISO 27001, and NIST control language.

6. Produces an executive summary plus an engineering fix list.
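The first thing the agent has to do with a brief like that is turn it into an ordered plan. The sketch below illustrates that decomposition step only. Keyword matching stands in for the language model a real agent would use, and the stage names and tool categories are hypothetical rather than Penligent.ai's actual schema.

```python
"""Sketch: decompose a plain-English brief into an ordered list of stages.

Keyword matching stands in for the language model a real agent would use; the
stage names and tool categories are hypothetical.
"""
from dataclasses import dataclass, field

# (stage name, phrases that request it, tool category it would drive), in kill-chain order.
STAGES = [
    ("recon", ("enumerate", "discover", "scan"), "recon/OSINT tooling"),
    ("auth_testing", ("session fixation", "token reuse", "token replay"), "auth-testing and replay tooling"),
    ("evidence", ("screenshot", "request/response"), "evidence capture"),
    ("compliance_report", ("pci dss", "iso 27001", "nist"), "report generator"),
]


@dataclass
class Plan:
    target: str
    steps: list[tuple[str, str]] = field(default_factory=list)

    def stage_names(self) -> list[str]:
        return [name for name, _ in self.steps]


def plan_from_brief(target: str, brief: str) -> Plan:
    text = brief.lower()
    steps = [(name, tooling) for name, phrases, tooling in STAGES
             if any(phrase in text for phrase in phrases)]
    # Every engagement needs discovery first, even if the brief never asks for it.
    recon_name, _, recon_tooling = STAGES[0]
    if not steps or steps[0][0] != recon_name:
        steps.insert(0, (recon_name, recon_tooling))
    return Plan(target, steps)


brief = ("Test our staging API cluster. Enumerate admin surfaces, attempt session "
         "fixation or weak token reuse where allowed, and generate PCI DSS / ISO 27001 "
         "mapped findings with screenshots.")
print(plan_from_brief("staging API cluster", brief).stage_names())
# ['recon', 'auth_testing', 'evidence', 'compliance_report']
```

Because stages are emitted in a fixed kill-chain order, the plan stays sensible even when the brief mentions steps out of sequence.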

This is the fundamental leap from “tool sprawl” to “orchestrated kill chain.”

Capability Matrix: Pentera vs RidgeBot vs pentestGPT vs Penligent.ai

| Capability / Vendor | Pentera | RidgeBot | pentestGPT | Penligent.ai |
|---|---|---|---|---|
| Positioning | Automated security validation; continuous exposure reduction and risk-driven remediation for enterprise security teams. | AI-powered autonomous penetration testing robot that behaves like an ethical attacker at scale, including across large networks. | LLM co-pilot for human pentesters | pentestAI engine that runs a full offensive workflow based on natural language intent |
| Execution Model | Continuous validation across internal, external, and cloud assets | Autonomous scanning, exploitation simulation, and evidence capture in scope | Human-driven; AI suggests next move | Agentic: recon → exploitation attempt → evidence capture → compliance report |
| Operator Skill Dependency | Low/medium; marketed as “no need for elite red team for every cycle.” | Low; marketed as “does not require highly skilled personnel,” essentially cloning expert attacker behavior. | High; requires an experienced tester | Low; you describe goals in plain English instead of writing custom exploit scripts or CLI pipelines |
| Surface Coverage | Internal networks, external perimeter, cloud assets, exposed credentials, ransomware-style attack paths. | IP ranges, domains, web apps, IoT, misconfigurations, privilege exposures, data leaks across enterprise infrastructure. | Whatever the operator chooses to test | Web/API, cloud workloads, internal services in scope, CI/CD surfaces, identity flows, and AI/LLM attack surfaces |
| Tooling Model | Bundled platform, prioritized remediation insights for leadership | Built-in autonomous attacker logic and exploit simulation engine | Uses whatever tools the human runs manually | Orchestration layer across 200+ security tools (scanners, fuzzers, secret finders, privilege escalation kits) |
| Reporting & Remediation | Risk prioritization, visual attack paths, remediation guidance consumable by execs and boards. | Automated reporting during tests; proves exploitability and impact to help remediation teams close real, not theoretical, issues. | Draft summaries; still needs human evidence curation | Compliance-grade reporting mapped to ISO 27001 / PCI DSS / NIST, plus attack-chain visualization and fix list |
| Primary Buyer / User | CISOs and security engineering teams that want ongoing validation | Security teams that want red-team pressure without hiring more senior red teamers | Independent pentesters / security researchers | Security, AppSec, platform, and compliance leads who want repeatable offensive testing they can explain upstream |

Where Penligent.ai differs in this matrix is not “we find more CVEs than you.” The differentiator is “you tell it what you want in English, it drives your own toolchain like a coordinated attacker, and it outputs something your engineering leadership, compliance team, and exec team can all consume.”

That hits three buying triggers simultaneously:

- Security wants validated exploit chains, not just vulnerability IDs.

- Engineering wants concrete, reproducible evidence and a fix list.

- Leadership and audit want answers: Which control failed? Which standard is implicated? Are we exposed in a way that’s reportable?

Penligent.ai: Three Core Differentiators

Natural language control instead of CLI friction

Most pentesting automation still expects an operator who can stitch together Nmap, Burp extensions, custom auth bypass scripts, replay tooling, and cloud misconfig scanners by hand. Penligent.ai is positioned to remove that barrier. You describe what you want (“Enumerate exposed admin portals on staging, attempt token replay where allowed, and generate PCI DSS impact”). The system interprets intent, manages session logic, handles rate limiting and login flows, and runs the attack flow.

That matters because it lowers the floor. You don’t need every squad to have a senior offensive engineer fluent in five different toolchains. The model is: “ask in normal language, get attacker-grade work.”
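“Handles rate limiting” is worth unpacking, because an orchestrator driving many tools at once can easily overwhelm a staging target. One common way to cap traffic is a token bucket per target, sketched below. This is a standard rate-limiting technique offered as an assumption about how such handling might work, not as Penligent.ai’s implementation; the injectable `clock` parameter exists only to make the behavior easy to test.

```python
"""Sketch: a token-bucket limiter an orchestrator could put in front of each target.

A standard rate-limiting technique, shown as an assumption, not a vendor implementation.
"""
import time
from typing import Callable


class TokenBucket:
    """Permit `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so a short burst is allowed
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        """Take one token if available; return False if the caller must wait."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def acquire(self) -> None:
        """Block until a token is available; assumes the default real-time clock."""
        while not self.try_acquire():
            time.sleep(1 / self.rate)
```

In use, each in-scope host would get one shared bucket (for example `TokenBucket(rate=5, capacity=10)`), and every tool wrapper would call `acquire()` before sending a request, so the combined traffic from all tools stays under one limit.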

Orchestration of 200+ security tools into one kill chain

Most enterprises already own scanners, fuzzers, credential auditors, secret finders, CSPM, container scanners, CI/CD exposure analyzers, WAF bypass testers, replay tools, privilege escalation kits, OSINT collectors, and so on. The real cost is glue. Who stitches all of that into “this is how I got from an exposed debug endpoint to S3 credentials to production database access”?

Penligent.ai’s angle is orchestration at scale: coordinating over 200 security tools and feeding their outputs into a single exploitation narrative rather than 200 disconnected scan results. Instead of “Here are 38 medium-severity API issues,” you get:

- Entry point

- Method of authentication abuse or trust break

- Lateral step(s)

- Resulting access

- Blast radius if an attacker chained it in production

For leadership, that reads like “this is how a ransomware attack would land.”

For engineering, it reads like “this is the exact request/response pair that got us owned.”
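A single record can serve both audiences if it carries the five parts listed above. The sketch below is a hypothetical data structure, not Penligent.ai’s report schema, that renders one chain as a leadership sentence and as numbered engineering steps. The example values follow the debug-endpoint-to-database path described earlier and are illustrative only.

```python
"""Sketch: one record per attack chain, rendered two ways (hypothetical schema)."""
from dataclasses import dataclass, field


@dataclass
class AttackChain:
    entry_point: str
    trust_break: str              # authentication abuse or trust break
    lateral_steps: list[str]
    resulting_access: str
    blast_radius: str
    evidence: list[str] = field(default_factory=list)  # e.g. saved HTTP traces

    def executive_summary(self) -> str:
        """One sentence for leadership."""
        return (f"An attacker entering via {self.entry_point} can reach "
                f"{self.resulting_access} after {len(self.lateral_steps)} lateral step(s). "
                f"Impact: {self.blast_radius}.")

    def engineering_steps(self) -> list[str]:
        """Numbered, reproducible steps for the fixing team."""
        steps = [f"1. Entry: {self.entry_point}", f"2. Trust break: {self.trust_break}"]
        for number, step in enumerate(self.lateral_steps, start=3):
            steps.append(f"{number}. Lateral: {step}")
        steps.append(f"{len(steps) + 1}. Access: {self.resulting_access}")
        return steps


chain = AttackChain(
    entry_point="exposed debug endpoint",
    trust_break="debug output leaks S3 credentials",
    lateral_steps=["S3 object holds the production DB connection string"],
    resulting_access="production database access",
    blast_radius="all customer records",
)
print(chain.executive_summary())
print("\n".join(chain.engineering_steps()))
```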

Compliance-grade reporting and attack-chain visualization

Pentera emphasizes attack-path mapping, remediation priority, and business-language risk summaries for executives and boards. RidgeBot emphasizes evidence-backed exploitability to accelerate remediation (“not just a vuln, a real foothold”).

Penligent.ai takes that final step into formal compliance language. The output is structured around:

- Which control failed (ISO 27001 control family, PCI DSS requirement, NIST function).

- The exact technical chain that proves the failure.

- The blast radius.

- The recommended fix path and responsible team.

This lets security, compliance, and engineering all work off the same artifact. You can move directly from “we found it” to “we’re accountable for it” to “we can prove we addressed it.”
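A minimal sketch of that structure, assuming findings have already been normalized to a `kind` string, might attach control references from a lookup table and flag anything unmapped for human review. The table is illustrative: its references are written from general knowledge of PCI DSS v4.0, ISO/IEC 27001:2022 Annex A, and NIST CSF 2.0 and should be checked against the current standard text before anything is reported. The function names are hypothetical.

```python
"""Sketch: attach control references to a validated finding.

CONTROL_MAP is illustrative only; verify every reference against the current
PCI DSS v4.0, ISO/IEC 27001:2022 and NIST CSF 2.0 text before reporting.
"""
CONTROL_MAP: dict[str, dict[str, str]] = {
    "weak_session_handling": {
        "PCI DSS v4.0": "Requirement 8 (identify users and authenticate access)",
        "ISO/IEC 27001:2022": "Annex A 8.5 (secure authentication)",
        "NIST CSF 2.0": "Protect function",
    },
    "exposed_admin_panel": {
        "PCI DSS v4.0": "Requirement 7 (restrict access by business need to know)",
        "ISO/IEC 27001:2022": "Annex A 8.3 (information access restriction)",
        "NIST CSF 2.0": "Protect function",
    },
}


def map_to_controls(kind: str) -> list[tuple[str, str]]:
    """Return (standard, control) pairs; unknown kinds are flagged for human review."""
    controls = CONTROL_MAP.get(kind)
    if controls is None:
        return [("UNMAPPED", "needs manual compliance review")]
    return sorted(controls.items())


def report_entry(kind: str, chain: str, blast_radius: str, fix: str, team: str) -> dict:
    """Assemble failed controls, technical chain, blast radius, fix and owner in one row."""
    return {
        "controls_failed": map_to_controls(kind),
        "technical_chain": chain,
        "blast_radius": blast_radius,
        "fix": fix,
        "owner": team,
    }


entry = report_entry("weak_session_handling",
                     "token issued on /login is still accepted after logout",
                     "any captured admin session can be replayed",
                     "invalidate tokens server-side on logout",
                     "Identity team")
```

Returning an explicit `UNMAPPED` marker, rather than silently dropping the finding, keeps a human compliance reviewer in the loop for anything the table does not cover.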

Where You Actually Use It – Typical Scenarios

- External web and API surfaces

Ask the agent to discover exposed admin panels, test for weak session handling or token replay (within allowed scope), and produce audit-ready evidence plus PCI DSS / NIST mapping for any finding that touches payment or sensitive data.

- Cloud and container attack surface

Point pentestAI at ephemeral services, mis-scoped IAM roles, orphaned CI/CD runners, or exposed staging clusters. This is where “shadow infra” lives. Pentera explicitly markets continuous validation across internal, perimeter, and cloud surfaces as essential because attackers now pivot through misconfigurations, leaked credentials, and credential reuse rather than through a single monolithic perimeter.

- Internal dashboards and privileged backends

Many of the worst breaches start from “it’s internal only, nobody outside will ever see this.” In practice, that assumption dies the moment credentials leak or a staging subdomain goes public. An agentic workflow can prove, with concrete evidence, that the assumption was wrong.

- AI assistant / LLM / agent surfaces

As organizations ship internal copilots, autonomous support bots, and data-privileged internal assistants, attackers are experimenting with prompt injection, “invisible instruction” payloads, and tricking AI agents into exfiltrating secrets or executing actions. Pentera has publicly discussed intent-driven, natural-language-style validation to mirror human adversary behavior as it evolves in real time.

Penligent.ai targets this same layer: “Treat the AI assistant like a high-value microservice with privileges. Can we coerce it? Can we chain that coercion into lateral movement?” The result is a report your security and compliance teams can actually ship upstream.

Deployment and Workflow

Penligent.ai is intended to sit on top of what you already have, not replace it. The workflow looks like this:

1. You describe the test in plain English:

   "Scan staging-api.internal.example for exposed admin panels. Attempt session fixation / token replay where allowed. Capture screenshots and request/response pairs. Then generate ISO 27001 / PCI DSS / NIST findings."

2. The agent orchestrates:

   - Recon and OSINT tooling (subdomain enumeration, service fingerprinting)
   - Auth/session testing utilities
   - Exploit attempt tooling within policy boundaries
   - Lateral movement simulators (if in scope)
   - Evidence capture and normalization

3. The platform assembles:

   - Attack-chain diagram (entry point → lateral step → impact)
   - Technical evidence packages (HTTP traces, PoC, screenshots)
   - Compliance mapping: which requirement was broken and why it matters
   - A prioritized remediation list aligned to responsible teams
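“Within policy boundaries” and “if in scope” imply a check that every orchestrated action passes before it runs. The sketch below shows one way to express that, assuming scope is defined as allowed networks, hostname patterns, and action types. The `Scope` class and the action names are hypothetical; `ipaddress` and `fnmatch` are Python standard-library modules.

```python
"""Sketch: a scope check every orchestrated action passes before it runs.

Scope fields and action names are hypothetical.
"""
import ipaddress
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Scope:
    cidrs: tuple[str, ...]              # in-scope networks, e.g. "10.20.0.0/16"
    host_patterns: tuple[str, ...]      # shell-style hostname patterns
    allowed_actions: frozenset[str]     # e.g. {"recon", "auth_testing"}

    def target_in_scope(self, target: str) -> bool:
        try:
            address = ipaddress.ip_address(target)
        except ValueError:
            # Not an IP literal: treat it as a hostname and match case-insensitively.
            host = target.lower().rstrip(".")
            return any(fnmatchcase(host, pattern.lower()) for pattern in self.host_patterns)
        # Membership is simply False when the IPv4/IPv6 versions differ.
        return any(address in ipaddress.ip_network(cidr) for cidr in self.cidrs)

    def permits(self, action: str, target: str) -> bool:
        """Both the action type and the target must be explicitly allowed."""
        return action in self.allowed_actions and self.target_in_scope(target)


scope = Scope(
    cidrs=("10.20.0.0/16",),
    host_patterns=("staging-api.internal.example", "*.staging.example.com"),
    allowed_actions=frozenset({"recon", "auth_testing"}),
)
print(scope.permits("auth_testing", "staging-api.internal.example"))  # True
print(scope.permits("lateral_movement", "10.20.3.4"))                 # False: action not allowed
```

Making the default answer “no” (anything not explicitly listed is refused) is the safer design for an autonomous agent: a missing scope entry blocks a test instead of widening it.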

The outcome is not “here’s a folder full of scanner output.” The outcome is a story:

- Here is how an attacker would get in.

- Here is what they would get.

- Here is which control that violates.

- Here is who needs to fix it.

- Here is how we will prove to audit and leadership that it’s fixed.
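The last two items, who fixes what and how the fix is proven, reduce to simple bookkeeping once findings are normalized. A minimal sketch, assuming each finding carries a title, a severity, and an owning team, and that a retest run reports the IDs of findings it can still reproduce; the field names, severity scale, and IDs are illustrative.

```python
"""Sketch: per-team fix list plus retest comparison (field names are assumptions)."""
from collections import defaultdict

SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


def remediation_plan(findings: list[dict]) -> dict[str, list[str]]:
    """Group findings by owning team, most severe first within each team."""
    by_team = defaultdict(list)
    ordered = sorted(findings, key=lambda item: (SEVERITY_RANK[item["severity"]], item["title"]))
    for finding in ordered:
        by_team[finding["team"]].append(f"[{finding['severity'].upper()}] {finding['title']}")
    return dict(by_team)


def retest_status(before: set[str], after: set[str]) -> dict[str, list[str]]:
    """Compare finding IDs from the original run and the retest run."""
    return {
        "fixed": sorted(before - after),        # no longer reproducible: evidence of remediation
        "still_open": sorted(before & after),   # reproduced again on retest
        "new": sorted(after - before),          # appeared since the first run
    }


findings = [
    {"title": "Token replay on /admin", "severity": "high", "team": "Identity"},
    {"title": "Exposed debug endpoint", "severity": "critical", "team": "Platform"},
    {"title": "Verbose error pages", "severity": "low", "team": "Platform"},
]
print(remediation_plan(findings))
print(retest_status({"F-1", "F-2"}, {"F-2", "F-3"}))
```

The retest diff is what turns “we fixed it” into something auditable: a finding only moves to `fixed` when the same test no longer reproduces it.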

That is the real promise of pentestAI as Penligent.ai defines it: natural-language intent in, orchestrated multi-tool offensive action, compliance-mapped evidence out — without requiring every engineer to be a career red teamer.