Executive Summary

Deepfake fraud is no longer a theoretical AI abuse story. In at least one documented case, criminals used AI-generated video and voice clones to impersonate executives on a live conference call and pressured an employee into wiring roughly $25 million. The same technology is being used to bypass KYC, defeat face liveness checks, impersonate banking customers, and social-engineer urgent transfers.(World Economic Forum)

This is not just a fraud problem. It is a core fintech security problem, a regulatory problem (GDPR / FCA / FTC), and a board-level risk for any organization that moves money at speed or allows remote account onboarding.(Fortune)

What “deepfake fraud” actually looks like in finance

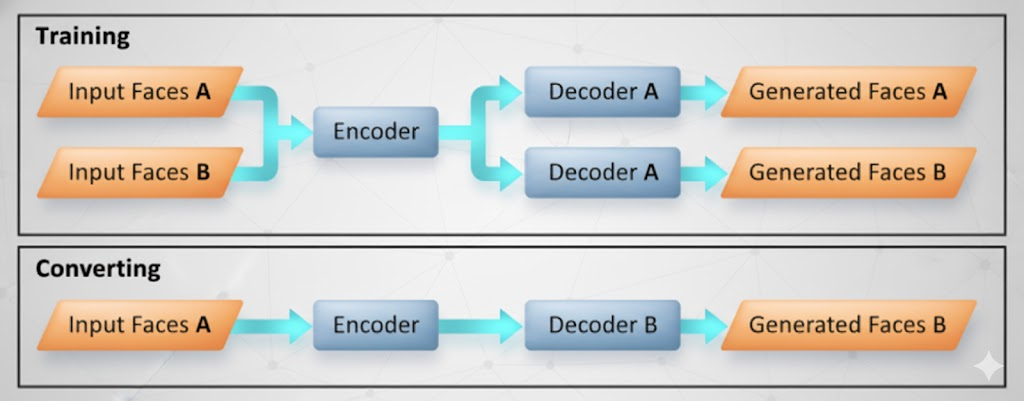

Deepfakes are AI-generated or AI-altered audio/video assets that convincingly mimic a real person’s face, voice, facial micro-movements, cadence, and speech pattern. Attackers now weaponize them to pose as:

- A company’s CFO or regional VP giving “urgent confidential transfer instructions.”

- A bank customer asking to reset account controls.

- A compliance officer asking for invoices, passports, or wire details.

- A trusted vendor’s finance lead requesting an “updated” payout account.

What changed is not just visual realism. It’s accessibility. Off-the-shelf voice synthesis tools can clone a voice from seconds of public audio, then read any script in that voice — enough to pass weak “voice verification” flows at some banks.(Business Insider)

That collapses the old assumption that “if I can hear them or see them live, it must be them.”

Case studies and why they matter

$25M deepfake executive order over video call

In early 2024, criminals staged a fake “urgent senior leadership meeting” over video with a multinational firm’s Hong Kong office. Every participant on screen — including what looked and sounded like the company’s CFO — was AI-generated. The finance employee on that call was pressured to authorize multiple transfers totaling roughly $25 million.(World Economic Forum)

This attack combined social engineering with an extremely convincing deepfake presence. The victim believed they were following direct executive orders, in real time, on camera. The fallout triggered a full forensic investigation and law enforcement involvement, not just an internal “fraud ticket.”(Financial Times)

Voice cloning against banking workflows

Security researchers and reporters have demonstrated that with consumer-grade voice cloning, it’s possible to call a bank’s customer service line, sound like the account owner, and request changes to verification or limits. In tests, some financial institutions’ first-line voice-matching controls weren’t robust enough to reject the synthetic caller.(Business Insider)

This turns account takeover from a one-off social engineering stunt into something scalable and automatable.

KYC bypass and remote onboarding

Banks, lenders, and payment apps rely on remote KYC: “show us your face, read this phrase, hold your ID next to your face.” Attackers now generate high-quality face videos with realistic blinking, head turns, and depth cues, then overlay that onto a real human body to fool liveness checks and open fraudulent accounts. The result: instant money-mule accounts and new channels for laundering.(Cybernews)

These are not edge cases. Deloitte has projected that AI-driven fraud could cost banks and their customers tens of billions of dollars by 2027, as deepfake-enabled scams industrialize and scale.(Deloitte Brazil)

Attack playbook: how deepfakes are actually used

Executive impersonation / “urgent transfer now” fraud

- A finance employee is pulled into a video call with “the CFO,” who demands confidential, immediate transfers outside normal approval channels.

- The deepfake uses authority, secrecy, and time pressure to override policy.

- Single-incident exposure can reach eight figures.(World Economic Forum)

KYC fraud and account seeding

- Synthetic “live face” video plus doctored ID passes onboarding.

- Attackers gain bank / fintech / exchange accounts under fake IDs, enabling fraud, money laundering, and credit abuse.(Cybernews)

Voice cloning scams

- Criminals imitate an executive or a high-net-worth client and issue instructions by phone.

- Call-center agents and junior finance staff often cannot challenge “the boss.”(Business Insider)

Phishing, now with video proof

- Old phishing emails looked sloppy.

- New phishing campaigns embed customized “video messages” or “voice notes” from what appears to be a known contact, dramatically increasing credibility.(Business Insider)

Vendor / invoice fraud

- Attackers pose as a long-term supplier’s CFO, with matching face/voice, and demand that Accounts Payable “update wire instructions.”

- Funds get silently rerouted to attacker-controlled accounts.

Why finance, fintech, and banking are uniquely exposed

- Everything is remote now. Onboarding, loan pre-approval, high-limit changes, and treasury operations all run through remote channels. If deepfakes defeat the “are you really you?” step, the rest of the workflow assumes trust.(Cybernews)

- Speed is a feature. Fintech and challenger banks pride themselves on “open an account in minutes.” Manual review and multi-person signoff are treated as friction. Attackers weaponize that cultural bias toward speed.

- Overreliance on biometrics. Many orgs still treat “the voice sounds right” or “the face matches the ID photo” as strong proof. Deepfakes were literally built to kill that assumption.(Business Insider)

- Cross-platform blast radius. Once an attacker convinces Finance to move money, Legal to sign off, and Ops to share credentials, often in the same fake call, you’re looking at a synchronized, multi-department breach. This isn’t a single-channel phishing email anymore.(World Economic Forum)

- Resource asymmetry. Tier-1 banks can afford in-house anomaly detection, fraud analytics, and adversarial media forensics. A regional payments startup probably cannot. That gap is exactly where organized crime will focus next.(Deloitte Brazil)

Defense: move from “Do I recognize this face?” to “Is this transaction behaviorally consistent?”

Modern defense is layered. It blends technical detection, behavioral analytics, process controls, and regulatory readiness.

Layered verification (behavior + device + out-of-band challenge)

- Behavioral analytics: typing cadence, cursor dynamics, navigation rhythm.

- Device intelligence: device fingerprint, OS/browser consistency, geolocation vs account history.

- Out-of-band step-up authentication: OTP or secure in-app confirmation via a separate trusted channel before high-risk actions.(Deloitte Brazil)
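One way to picture how these three layers combine is a simple additive risk score that decides when to step up to an out-of-band challenge. The sketch below is illustrative only: the signal names, weights, and thresholds (`SessionSignals`, `HIGH_VALUE_AMOUNT`, `STEP_UP_THRESHOLD`) are assumptions, not values from any cited source or vendor product.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    """Hypothetical per-session signals; field names are illustrative."""
    behavior_anomaly: float    # 0.0 (matches the user's history) to 1.0 (very unusual)
    known_device: bool         # device fingerprint seen on this account before
    geo_matches_history: bool  # geolocation consistent with account history
    amount: float              # transaction amount in account currency


# Assumed values; a real deployment would tune these on its own data.
HIGH_VALUE_AMOUNT = 10_000.0
STEP_UP_THRESHOLD = 0.5


def risk_score(s: SessionSignals) -> float:
    """Combine behavioral and device signals into a score in [0, 1]."""
    score = 0.5 * s.behavior_anomaly
    if not s.known_device:
        score += 0.3
    if not s.geo_matches_history:
        score += 0.2
    return min(score, 1.0)


def requires_out_of_band(s: SessionSignals) -> bool:
    """Step up on any high-value action or any risky-looking session."""
    return s.amount >= HIGH_VALUE_AMOUNT or risk_score(s) >= STEP_UP_THRESHOLD
```

The design choice worth noting is that a high-value action always triggers the out-of-band challenge, regardless of how clean the session looks, so a perfect deepfake on a familiar device still hits a separate trusted channel.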

Advanced liveness / anti-spoofing checks

- Video: blink rate and timing, micro-expression latency, 3D depth cues, light reflection on skin (nose bridge / forehead), edge shimmer, compression artifacts.

- Audio: breathing gaps, unnatural pauses, spectrogram anomalies, robotic noise floors.(Cybernews)

These are signals a pure “does the face match the ID photo?” pipeline won’t catch.
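To make the “blink rate and timing” signal concrete, here is a toy heuristic, assuming an upstream detector has already produced blink timestamps for a clip. Production liveness systems typically rely on trained models over many signals at once; the function name and every threshold here are hypothetical and unvalidated.

```python
from statistics import mean, pstdev


def blink_timing_flags(blink_times_s: list[float], clip_length_s: float) -> list[str]:
    """Return flags for one clip; an empty list means nothing was flagged.

    blink_times_s: timestamps (seconds) of detected blinks, in ascending order.
    All thresholds are illustrative assumptions, not validated values.
    """
    if clip_length_s <= 0:
        raise ValueError("clip_length_s must be positive")

    flags = []
    rate_per_min = len(blink_times_s) / clip_length_s * 60.0
    if rate_per_min < 4.0 or rate_per_min > 50.0:
        flags.append("blink_rate_out_of_range")

    # Gaps between consecutive blinks.
    intervals = [b - a for a, b in zip(blink_times_s, blink_times_s[1:])]
    if len(intervals) >= 3:
        avg = mean(intervals)
        # Coefficient of variation near zero means metronome-like blinking.
        if avg > 0 and pstdev(intervals) / avg < 0.1:
            flags.append("blink_intervals_too_regular")
    return flags
```

A flag from a heuristic like this would feed a broader risk decision rather than reject a session on its own.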

Process-level controls

- High-value wire transfers require dual or triple approval across independent channels (video + separately verified phone + internal messaging).

- “Emergency” or “confidential” doesn’t override policy; it triggers more verification, not less.(World Economic Forum)
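The two rules above can be expressed as a small release check. This is a minimal sketch under stated assumptions: the `WireRequest` and `Approval` types, the channel labels, and the `HIGH_VALUE_AMOUNT` threshold are hypothetical, and a real payment system would also verify each approver's identity.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Approval:
    approver: str  # identity of the person approving
    channel: str   # e.g. "video", "callback_phone", "internal_chat"


@dataclass
class WireRequest:
    amount: float
    flagged_urgent: bool = False  # requester claimed "emergency" or "confidential"
    approvals: list[Approval] = field(default_factory=list)


HIGH_VALUE_AMOUNT = 100_000.0  # assumed threshold


def required_approvals(req: WireRequest) -> int:
    """Urgency raises the bar by one approval; it never lowers it."""
    base = 2 if req.amount >= HIGH_VALUE_AMOUNT else 1
    return base + 1 if req.flagged_urgent else base


def may_release(req: WireRequest) -> bool:
    """Require enough distinct approvers AND enough distinct channels."""
    needed = required_approvals(req)
    approvers = {a.approver for a in req.approvals}
    channels = {a.channel for a in req.approvals}
    return len(approvers) >= needed and len(channels) >= needed
```

Counting distinct channels as well as distinct approvers means two people convinced by the same fake video call do not satisfy the check.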

Continuous threat intel and staff training

- Security, SOC, fraud, treasury, and accounts payable teams should drill realistic playbooks (“urgent CEO transfer,” “supplier banking info change,” “VIP client voice call”).

- Employees must be taught: a convincing face on Zoom is no longer proof of identity.(World Economic Forum)

Compliance and auditability

- Regulators (the FCA in the UK, the FTC in the US, and data protection authorities enforcing GDPR in the EU) increasingly expect that institutions can prove they verified identity and authorized a transaction through layered controls, not just “someone sounded right on the phone.”(Fortune)

- Insurers are updating cybercrime and social engineering coverage. They now ask whether you have deepfake-aware verification and post-incident protocols before they underwrite losses.(Reuters)

Below is a sample fraud risk matrix (scenario vs required controls):

| Scenario | Primary Threat | Required Controls |
|---|---|---|
| Remote onboarding / KYC | Fake face + forged ID + synthetic “liveness” | Advanced liveness, behavioral analytics, document authenticity checks, device fingerprinting |
| High-value transfer authorization | Deepfake “CFO” orders urgent confidential wires | Multi-channel callback, dual-approver workflow, recorded verification trail |
| Call center limit increase | Voice clone of “the customer” | Voice analysis + challenge questions + out-of-band OTP |
| Vendor payment change | Fake supplier finance contact with cloned voice/video | Callback to pre-registered phone/email, invoice history verification, internal finance escalation |
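A matrix like this is easier to enforce when it lives in code as well as in policy. The sketch below encodes the four rows as a lookup and reports which controls are still outstanding for a given action; the scenario keys and control identifiers are hypothetical labels chosen for illustration.

```python
# Scenario -> controls that must all be completed before the action proceeds.
REQUIRED_CONTROLS = {
    "remote_onboarding": {
        "advanced_liveness", "behavioral_analytics",
        "document_authenticity", "device_fingerprinting",
    },
    "high_value_transfer": {
        "multi_channel_callback", "dual_approver", "recorded_verification_trail",
    },
    "call_center_limit_increase": {
        "voice_analysis", "challenge_questions", "out_of_band_otp",
    },
    "vendor_payment_change": {
        "pre_registered_callback", "invoice_history_check", "finance_escalation",
    },
}


def missing_controls(scenario: str, completed: set[str]) -> set[str]:
    """Return the required controls not yet completed for this scenario.

    Unknown scenarios raise instead of returning an empty set, so an
    unmapped workflow fails closed rather than silently passing.
    """
    try:
        required = REQUIRED_CONTROLS[scenario]
    except KeyError:
        raise ValueError(f"unknown scenario: {scenario!r}") from None
    return required - completed
```

An action would proceed only when `missing_controls(...)` returns an empty set.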

Incident response: treat deepfake fraud like a breach

When deepfake fraud is suspected, your playbook should look like a security incident, not a routine customer dispute:

- Freeze the suspicious transfer(s) and block additional outbound wires.

- Preserve full call/video/chat evidence for forensics and legal chain-of-custody.

- Escalate to legal, compliance, fraud, and cyber concurrently — not sequentially.

- Make any mandatory disclosures required by the FCA, the FTC, GDPR, or sector regulators, and document exactly which controls failed.(Fortune)

- Reset credentials, re-verify high-risk accounts, and force multi-factor for any impacted entity.

At this point, many insurers and regulators already treat deepfake-enabled fraud like a cyber incident, not “just bad judgment by an employee.”(Reuters)

Closing argument: identity proof is no longer visual — it is behavioral, procedural, and auditable

Finance has historically trusted visual and audible confirmation: “I saw them on video,” “I heard their voice,” “They held their ID next to their face.” Deepfake fraud destroys that model.(Business Insider)

The new standard is layered, adversarial, and regulated. It blends advanced liveness detection, behavioral analytics, strict multi-party approval for high-value actions, and fast incident response with legal-grade evidence retention.(Fortune)

In other words: the question is no longer “Do I recognize this face?” The question is “Can this action survive audit, litigation, insurance review, and regulatory scrutiny tomorrow morning?”