Introduction

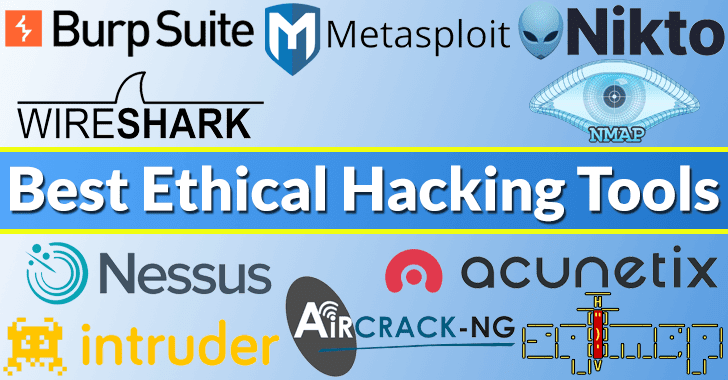

In the context of cybersecurity, Hacking Tools refer to the diverse technical instruments used to identify, test, and validate the security posture of systems. They are not synonymous with “hacker software,” but rather serve as essential components of penetration testing, vulnerability validation, and security assessment workflows. From Nmap’s port scanning to Metasploit’s exploitation framework and Burp Suite’s traffic interception, these tools form the operational backbone of modern offensive and defensive security.

Despite the rise of automation and AI, the value of Hacking Tools remains undiminished. They embody the “executable form” of security knowledge, grounding practitioners in empirical validation of system boundaries and attack surfaces. In fact, intelligent security systems of the future will be built atop these tools—where orchestration and semantic understanding turn command-line “black magic” into explainable, agent-driven security intelligence.

Why Hacking Tools Matter

Within security operations, Hacking Tools bridge theory and practice. Experts rely on them to validate hypotheses, reproduce attacks, and uncover blind spots. AI-driven reasoning depends on the empirical data these tools generate, while defensive automation relies on their feedback to optimize protection strategies.

Their importance lies in three dimensions:

- Execution Layer of Knowledge – Security theories only gain value when operationalized through tools.

- Reference Frame for Defense – Tools define the offensive boundaries defenders must understand and emulate.

- Training Ground for AI Security – AI agents “understand risk” because their semantics are grounded in tool-generated empirical data.

Classification and Capabilities of Hacking Tools

The classification of Hacking Tools reflects the full lifecycle of security engineering—from reconnaissance and scanning to exploitation and reporting.

| Category | Core Function | Capability Traits | Example Tools |
|---|---|---|---|
| Reconnaissance | Enumerate domains, identify hosts, scan ports, detect service fingerprints | High-precision scanning, passive data correlation, low-noise detection | Nmap, Shodan, Recon-ng |
| Exploitation | Identify and exploit known vulnerabilities to achieve initial access | Automated payload management, scripted attack chains | Metasploit, ExploitDB, SQLmap |
| Privilege Escalation | Elevate from low-privilege accounts to system administrator level | Kernel exploits, credential harvesting, local privilege-escalation scripts | Mimikatz, LinPEAS, WinPEAS |
| Persistence & Evasion | Maintain access on target systems and evade detection | Rootkit injection, process obfuscation, antivirus evasion | Cobalt Strike, Empire, Veil |
| Post-Exploitation | Data collection, lateral movement, forensic evasion | Lateral-movement modules, data packaging and encrypted exfiltration | BloodHound, PowerView, SharpHound |
| Assessment & Defense | Detect vulnerabilities, conduct defensive exercises, analyze logs | Attack-defense simulation, AI-assisted detection, visual analytics | Burp Suite, Nessus, OWASP ZAP |

Engineering in Practice: Operationalizing Hacking Tools

The goals of operationalization are simple: make every scan and exploit reproducible, auditable, and scalable. Common practices include:

- Output Contracts: define structured outputs (JSON / SARIF / custom schema) that include parameters, version, and timestamps.

- Containerized Execution: package tools into container images to pin environments and dependencies.

- Microservice & Message Bus: wrap tools as remotely-invokable services or jobs and chain them via queues (Kafka/RabbitMQ) for scalability and retries.

- CI/CD Integration: incorporate scans into pipelines with staged depth (pre-merge, nightly, on-demand).

- Evidence & Audit Trail: capture command, params, stdout/stderr, exit code, host info, and image versions into an auditable evidence bundle.
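As a rough illustration of the output-contract idea, the sketch below defines one possible record shape and a minimal validator. The field names, the `ToolRunRecord` class, and the `SCHEMA_VERSION` value are assumptions made for this example; they are not a published schema such as SARIF.

```python
import json
from dataclasses import dataclass, field, asdict

SCHEMA_VERSION = "1.0"  # hypothetical contract version, chosen for this sketch


@dataclass
class ToolRunRecord:
    """One tool invocation, expressed in a fixed, serializable shape."""
    tool: str
    tool_version: str
    params: list
    target: str
    started_at: float
    finished_at: float
    exit_code: int
    findings: list = field(default_factory=list)
    schema_version: str = SCHEMA_VERSION

    def to_json(self) -> str:
        # sort_keys keeps the serialized form stable across runs
        return json.dumps(asdict(self), sort_keys=True)


REQUIRED_KEYS = {
    "tool", "tool_version", "params", "target",
    "started_at", "finished_at", "exit_code", "schema_version",
}


def validate_record(raw: str) -> dict:
    """Parse a serialized record and reject it if the contract is violated."""
    data = json.loads(raw)
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"record is missing required keys: {sorted(missing)}")
    if data["finished_at"] < data["started_at"]:
        raise ValueError("finished_at precedes started_at")
    return data
```

A consumer would call `validate_record` on every message before indexing it, so that malformed tool output fails loudly instead of silently polluting downstream aggregation.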

Parallel Scanning & Output Normalization

Below is a pragmatic Python script template that scans multiple targets in parallel (running Nmap and then Nuclei against each one), wraps runtime metadata around every invocation, and writes a single unified JSON file. In production, it is better to invoke each tool as a containerized job or microservice; this example is a proof of concept.

```python
# ops_runner.py: POC for parallel runs with normalized JSON output
import concurrent.futures
import json
import os
import platform
import subprocess
import time

TARGETS = ["10.0.0.5", "10.0.0.7"]
RESULT_DIR = "./out"
os.makedirs(RESULT_DIR, exist_ok=True)


def run_cmd(cmd):
    """Run one command (given as an argv list) and return runtime metadata."""
    started = time.time()
    # platform.node() is portable; os.uname() does not exist on Windows
    meta = {"cmd": cmd, "started_at": started, "host": platform.node()}
    try:
        # cmd is a list, so no shell is involved and no quoting is needed
        proc = subprocess.run(cmd, capture_output=True, timeout=300, text=True)
        meta.update({"rc": proc.returncode, "stdout": proc.stdout, "stderr": proc.stderr})
    except Exception as e:
        # covers a missing binary (FileNotFoundError) and timeouts (TimeoutExpired)
        meta.update({"rc": -1, "stdout": "", "stderr": str(e)})
    meta["duration"] = time.time() - started
    return meta


def scan_target(target):
    """Run Nmap, then Nuclei, against a single target."""
    nmap_cmd = ["nmap", "-sV", "-p-", "-oX", "-", target]  # XML report to stdout
    # recent Nuclei releases use -jsonl; older ones used -json
    nuclei_cmd = ["nuclei", "-u", f"http://{target}", "-silent", "-jsonl"]
    return {
        "target": target,
        "runs": {"nmap": run_cmd(nmap_cmd), "nuclei": run_cmd(nuclei_cmd)},
    }


def main():
    out = {"generated_at": time.time(), "results": {}}
    # targets are scanned in parallel; the two tools run sequentially per target
    with concurrent.futures.ThreadPoolExecutor(max_workers=4) as ex:
        futures = {ex.submit(scan_target, t): t for t in TARGETS}
        for fut in concurrent.futures.as_completed(futures):
            out["results"][futures[fut]] = fut.result()
    with open(os.path.join(RESULT_DIR, "scan_results.json"), "w") as fh:
        json.dump(out, fh, indent=2)


if __name__ == "__main__":
    main()
```

Key notes

- Each invocation returns full runtime metadata (cmd, start time, duration, rc, stdout/stderr).

- In production, parse stdout into structured fields (e.g., Nuclei JSON) and ingest them into an index for aggregation.

- For containerized runs, use `docker run` or Kubernetes Jobs for versioning, concurrency control, and isolation.
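To make the parsing note concrete, here is a minimal sketch that turns raw stdout into structured rows. It assumes Nmap was run with `-oX -` and that the second tool emits one JSON object per line; the function names and the output row shape are illustrative choices, not part of either tool.

```python
import json
import xml.etree.ElementTree as ET


def parse_nmap_xml(xml_text: str) -> list:
    """Return one dict per open port found in Nmap XML (-oX) output."""
    rows = []
    root = ET.fromstring(xml_text)
    for host in root.iter("host"):
        addr_el = host.find("address")
        addr = addr_el.get("addr") if addr_el is not None else None
        for port in host.iter("port"):
            state = port.find("state")
            if state is None or state.get("state") != "open":
                continue  # keep only ports reported as open
            service = port.find("service")
            rows.append({
                "host": addr,
                "port": int(port.get("portid")),
                "protocol": port.get("protocol"),
                "service": service.get("name") if service is not None else None,
            })
    return rows


def parse_jsonl(text: str) -> list:
    """Parse newline-delimited JSON, skipping blank or malformed lines."""
    records = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError:
            continue  # tolerate banner or log lines mixed into stdout
    return records


# Hand-written sample in the shape of Nmap's XML report, for illustration only
SAMPLE_XML = """<nmaprun>
  <host>
    <address addr="10.0.0.5" addrtype="ipv4"/>
    <ports>
      <port protocol="tcp" portid="22"><state state="open"/><service name="ssh"/></port>
      <port protocol="tcp" portid="81"><state state="closed"/></port>
    </ports>
  </host>
</nmaprun>"""

if __name__ == "__main__":
    print(parse_nmap_xml(SAMPLE_XML))
```

Rows in this flat shape are straightforward to bulk-load into a search index or a database table keyed by host and port.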

Embedding Scans in CI/CD

Key practical points:

- Layered scanning strategy: Use lightweight probes (port scans/basic templates) for pre-merge; run deep templates and validation on merge/main or nightly builds.

- Conditional triggers: Only trigger deep scans when changes touch “public-facing services” or specific IaC files.

- Failure policy: Block deployments on high-risk verification failures; for low-risk findings, create a ticket and allow the deployment to continue.

- Evidence archival: Upload results from each CI run to centralized storage and bind them to the run ID.
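The failure policy above can be expressed as a small gate function. The sketch below is one possible reading of that policy: the `Finding` shape, the set of blocking severities, and the decision to treat unverified high-severity findings as tickets rather than blockers are all assumptions for illustration.

```python
from dataclasses import dataclass

# Severities that block a deployment once verified (an assumption of this sketch)
BLOCKING_SEVERITIES = {"critical", "high"}


@dataclass
class Finding:
    title: str
    severity: str
    verified: bool


def evaluate_gate(findings):
    """Split findings into deployment blockers and ticket-only items."""
    blockers, tickets = [], []
    for f in findings:
        if f.verified and f.severity.lower() in BLOCKING_SEVERITIES:
            blockers.append(f)
        else:
            tickets.append(f)  # low risk or unverified: track it, do not block
    return {"allow_deploy": not blockers, "blockers": blockers, "tickets": tickets}


if __name__ == "__main__":
    decision = evaluate_gate([
        Finding("SQL injection in /login", "high", True),
        Finding("Verbose server banner", "low", True),
    ])
    print(decision["allow_deploy"])
```

A CI step could exit non-zero when `allow_deploy` is false and open tickets for everything in `tickets`.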

Example (GitHub Actions pseudo-configuration):

```yaml
name: Security Scans

on:
  push:
    branches: [main]

jobs:
  quick-scan:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Quick Recon
        # TARGET is assumed to be supplied through the workflow's env or repository variables
        run: docker run --rm my-registry/nmap:latest nmap -sS $TARGET -oX - > recon.xml
      - name: Upload Results
        run: python3 upload_results.py recon.xml --job-id $GITHUB_RUN_ID
```

Tips

- Stage scans to manage cost and noise.

- Use change detection to trigger deeper scans only when relevant.

- Archive evidence with job IDs for traceability.
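Change detection can be as simple as matching the list of changed files against a set of path patterns. In the sketch below, the patterns in `DEEP_SCAN_PATTERNS` are hypothetical and would need to reflect the actual repository layout; the changed-file list is assumed to come from something like `git diff --name-only`.

```python
from fnmatch import fnmatchcase

# Hypothetical paths that mark public-facing services or IaC files
DEEP_SCAN_PATTERNS = ["infra/*.tf", "k8s/*.yaml", "services/public/*"]


def needs_deep_scan(changed_files, patterns=DEEP_SCAN_PATTERNS):
    """Return True if any changed file matches a deep-scan pattern.

    fnmatchcase's '*' also matches '/', so 'infra/*.tf' covers nested modules.
    """
    return any(
        fnmatchcase(path, pattern)
        for path in changed_files
        for pattern in patterns
    )


if __name__ == "__main__":
    print(needs_deep_scan(["infra/modules/vpc/main.tf", "README.md"]))
```

The boolean result can drive a conditional job in the pipeline, so documentation-only changes skip the expensive templates.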

Containerization, Microservices & Message Buses

Wrapping tools as container images or microservices yields controllable environments, version traceability, and scalability. Publish outputs to a message bus (Kafka/RabbitMQ) so analyzers, AI agents, or SIEMs can asynchronously consume, aggregate, and compute priorities.

Event flow:

Scheduler triggers container job → Tool runs and emits structured output → Result pushed to Kafka → Analysis consumer (AI/rule engine) ingests and produces validated report → Report written back and triggers alerts/tickets.
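The flow above can be sketched in-process with the standard library. Here `queue.Queue` stands in for a Kafka or RabbitMQ topic, and the prioritization rule is a placeholder for a real analysis consumer; both are simplifications for illustration and not how a production bus client would be wired.

```python
import json
import queue
import threading


def run_pipeline(raw_results):
    """Push tool results through an in-memory bus and return analyzed reports."""
    bus = queue.Queue()  # stand-in for a message-bus topic
    reports = []

    def producer():
        for result in raw_results:
            bus.put(json.dumps(result))  # tools emit structured output
        bus.put(None)  # sentinel: no more messages

    def consumer():
        while True:
            msg = bus.get()
            if msg is None:
                break
            event = json.loads(msg)
            # placeholder rule engine: map severity to a priority
            priority = "high" if event.get("severity") in {"critical", "high"} else "normal"
            reports.append({"target": event["target"], "priority": priority})

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return reports


if __name__ == "__main__":
    print(run_pipeline([
        {"target": "10.0.0.5", "severity": "high"},
        {"target": "10.0.0.7", "severity": "info"},
    ]))
```

Decoupling the producer from the consumer this way is what lets scans and analysis scale and retry independently once a real broker replaces the queue.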

Evidence Bundles & Auditability

Each detection/exploit task should produce an evidence bundle (cmd, parameters, image hash, stdout, pcap, timestamps, runner node). Bundles should be retrievable, downloadable and linked to tickets for remediation and compliance.
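One way to make a bundle tamper-evident is to store a digest of its canonical serialization alongside the data. The sketch below assumes a simple dictionary layout and uses SHA-256 over sorted-key JSON; the field names and helper functions are illustrative, and binary artifacts such as pcaps would be referenced by their own hashes rather than embedded.

```python
import hashlib
import json


def _canonical(body: dict) -> bytes:
    # Sorted keys and fixed separators give a stable byte representation
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")


def build_evidence_bundle(cmd, image_digest, stdout, runner, started_at, finished_at):
    """Assemble an evidence bundle and seal it with a SHA-256 digest."""
    bundle = {
        "cmd": cmd,
        "image_digest": image_digest,
        "stdout": stdout,
        "runner": runner,
        "started_at": started_at,
        "finished_at": finished_at,
    }
    bundle["sha256"] = hashlib.sha256(_canonical(bundle)).hexdigest()
    return bundle


def verify_evidence_bundle(bundle) -> bool:
    """Recompute the digest over everything except the stored digest."""
    body = {k: v for k, v in bundle.items() if k != "sha256"}
    return hashlib.sha256(_canonical(body)).hexdigest() == bundle.get("sha256")


if __name__ == "__main__":
    b = build_evidence_bundle(
        ["nmap", "-sV", "10.0.0.5"], "sha256:abc", "scan output", "runner-1", 1.0, 2.0
    )
    print(verify_evidence_bundle(b))
```

Storing the digest in the linked ticket lets an auditor confirm later that the archived bundle is the one the scan actually produced.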

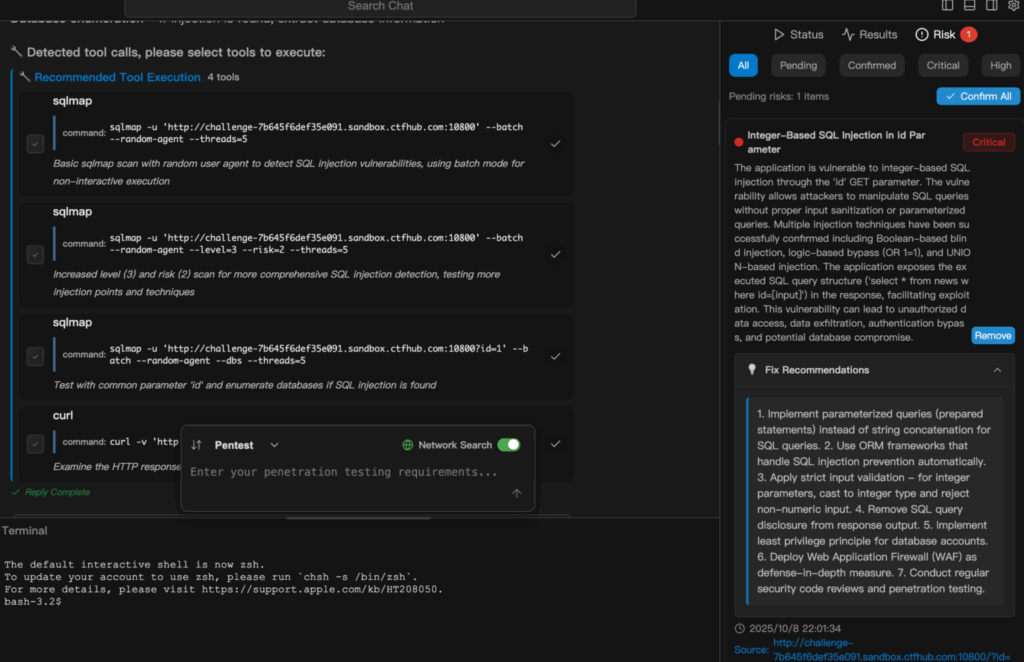

Penligent: Intelligent Pentesting with 200+ Hacking Tools

The next step after engineering is “intelligent orchestration” — using agents to chain tools, make decisions, and produce auditable results. Penligent exemplifies this evolution: it converts natural-language inputs into action sequences, auto-selects and executes appropriate tools, verifies findings, and generates exportable reports. Key technical elements: intent parsing, tool selection strategy, verification loop, and audit trails.

Scenario (cloud-native app test)

Given the command “Check this subdomain for SQL injection and container exposure”, the system decomposes the task: asset discovery → web endpoint identification → template scanning → verification of suspicious findings → host/container fingerprinting. Each tool invocation is recorded together with its parameters, run logs, and evidentiary output; the final output is a prioritized remediation list with confidence and impact ratings. For engineers, this saves repeated work while preserving reproducible technical detail.
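To illustrate the general shape of such a decomposition, here is a deliberately naive sketch that maps keywords in a request to an ordered step list and records an audit entry per step. It is not Penligent's implementation: the keyword rules, step names, and runner callables are all hypothetical, and a real system would use far richer intent parsing.

```python
import time

# Hypothetical keyword-to-steps rules; step names mirror the scenario above
STEP_RULES = [
    ("sql injection", [
        "asset_discovery",
        "web_endpoint_identification",
        "template_scanning",
        "finding_verification",
    ]),
    ("container", ["asset_discovery", "host_container_fingerprinting"]),
]


def plan_steps(request: str) -> list:
    """Build an ordered, de-duplicated step list from keywords in the request."""
    text = request.lower()
    steps = []
    for keyword, rule_steps in STEP_RULES:
        if keyword in text:
            for step in rule_steps:
                if step not in steps:
                    steps.append(step)
    return steps


def execute_plan(steps, runners):
    """Run each step via its callable and keep an audit entry for it."""
    audit = []
    for step in steps:
        started = time.time()
        output = runners[step]()
        audit.append({
            "step": step,
            "started_at": started,
            "duration": time.time() - started,
            "output": output,
        })
    return audit


if __name__ == "__main__":
    plan = plan_steps("Check this subdomain for SQL injection and container exposure")
    stub_runners = {s: (lambda s=s: f"{s}: ok") for s in plan}  # stubs, no real tools
    for entry in execute_plan(plan, stub_runners):
        print(entry["step"], entry["output"])
```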

Scenario (enterprise CI/CD)

Penligent can be embedded in CI/CD: when infrastructure or code changes trigger scans, the platform runs targeted templates and verification and pushes high-priority findings to ticketing systems or remediation pipelines, turning pentesting from an offline event into a continuous feedback loop.

Conclusion

Hacking Tools are not merely instruments—they form the “operating system” of security engineering knowledge. When systematically integrated and intelligently orchestrated, security testing evolves from artisanal practice into a reproducible, scientific process. Penligent marks the beginning of this transformation—where tools form the skeleton and intelligence becomes the soul of modern cybersecurity.