The Glass Floor of AI Infrastructure: A Deep Forensic Analysis of CVE-2025-66566

In the rapid ascent of Generative AI, the security community has developed a form of tunnel vision. We have spent the better part of three years obsessing over Prompt Injection, Model Inversion, and Weight Poisoning—attacks that target the “brain” of the AI. However, CVE-2025-66566, a critical vulnerability disclosed this quarter, serves as a violent reminder that the “body” of our AI infrastructure—the boring, high-throughput data pipes—is rotting from the inside out.

For the hardcore AI security engineer, CVE-2025-66566 is not merely a library patch; it represents a systemic failure in how high-performance computing (HPC) prioritizes latency over memory safety. This article provides a comprehensive technical breakdown of the vulnerability, its specific impact on RAG (Retrieval-Augmented Generation) architectures, and why intelligent automated defense systems like Penligent are becoming mandatory for survival.

Anatomy of a Leak: Deconstructing CVE-2025-66566

To understand the gravity of CVE-2025-66566, we must look beneath the abstraction layers of Python and PyTorch, down to the byte-shuffling mechanisms of the JVM and C++ interop layers that power big data engines.

The vulnerability resides within the high-performance compression libraries (specifically affecting lz4-java implementations widely bundled in data ecosystem tools) used to optimize network traffic and disk I/O. To avoid the CPU overhead of garbage collection (GC) and memory allocation, these libraries aggressively use Buffer Recycling and Off-Heap Memory (DirectByteBuffers).
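The standard `java.nio` API already exposes the reuse hazard: `ByteBuffer.clear()` resets a buffer's position and limit, but it does not erase the contents. The minimal sketch below uses only the JDK. It recycles one direct (off-heap) buffer across two writes and then over-reads it. The class and method names are illustrative and do not come from lz4-java or any affected library.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

final class RecycledBufferDemo {
    // Writes 'first', recycles the buffer with clear(), writes 'second', then
    // reads first.length bytes, as a consumer using a stale length would.
    // Both strings must be ASCII and at most 64 bytes long.
    static String reuseAndOverRead(String first, String second) {
        byte[] a = first.getBytes(StandardCharsets.US_ASCII);
        byte[] b = second.getBytes(StandardCharsets.US_ASCII);
        ByteBuffer slab = ByteBuffer.allocateDirect(64); // off-heap, reused below

        slab.put(a);   // Transaction A
        slab.clear();  // position = 0, limit = capacity; contents are NOT zeroed
        slab.put(b);   // Transaction B overwrites only b.length bytes

        // Absolute get(i) ignores the position and is bounded only by the
        // limit, which clear() set back to the full capacity
        byte[] view = new byte[a.length];
        for (int i = 0; i < view.length; i++) {
            view[i] = slab.get(i);
        }
        return new String(view, StandardCharsets.US_ASCII);
    }

    public static void main(String[] args) {
        System.out.println(reuseAndOverRead("SSN=123-45-6789", "ok"));
    }
}
```

Run as written, it prints `okN=123-45-6789`. The second write replaced only two bytes, and the rest of the first write is still in the buffer.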

The “Dirty Buffer” Mechanism

The flaw is a state-management error in the buffer-reuse logic. The library tracks how many bytes a call wrote, but not whether the rest of the buffer still holds data from earlier calls. When a high-throughput system, such as a Kafka broker or a Vector Database ingestion node, processes a stream of compressed records, it allocates a reusable “slab” of memory.

In a secure implementation, this slab is zeroed out (0x00) before new data is written. CVE-2025-66566, however, stems from a logic error in the `safeDecompressor` method: the `outputLength` check does not enforce a clean state for the remainder of the buffer.

Consider the following simplified breakdown of the vulnerable logic:

```java
// Conceptual representation of the CVE-2025-66566 vulnerability pattern
import java.util.Arrays;

public abstract class VulnerableDecompressor {
    // A persistent buffer, reused across calls on this instance to reduce GC pressure
    private final byte[] sharedBuffer = new byte[1024 * 1024]; // 1 MB buffer

    // Hypothetical native decoder: fills 'out' and returns the number of bytes written
    protected abstract int nativeDecompress(byte[] compressedInput, byte[] out);

    public byte[] decompress(byte[] compressedInput) {
        // Step 1: Decompress data into the shared buffer.
        // VULNERABILITY: the library assumes the caller will only read
        // up to 'bytesWritten' and ignores the dirty data remaining in the buffer.
        int bytesWritten = nativeDecompress(compressedInput, sharedBuffer);

        // Step 2: Return the decompressed bytes.
        // If the downstream application (e.g., a search indexer) reads beyond
        // 'bytesWritten' due to a separate length-miscalculation bug,
        // or if the buffer is serialized entirely, the LEAK occurs.
        // Note: in many zero-copy frameworks (Netty/Spark), this copy is skipped
        // and the raw 'sharedBuffer' reference is passed downstream instead.
        return Arrays.copyOfRange(sharedBuffer, 0, bytesWritten);
    }
}
```

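Suppose Transaction A decompresses a sensitive prompt containing a user’s SSN (occupying bytes 0-500). Transaction B (an attacker) then sends a tiny payload that occupies only bytes 0-10. Bytes 11-500 of the buffer still contain the data from Transaction A, SSN included. Any read past byte 10 returns that residue to the attacker, for example a read by a zero-copy consumer that receives the whole buffer.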
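The two-transaction scenario can be modelled in a few lines of plain Java. This is a simulation under stated assumptions, not the affected library. `decompressInto` is a hypothetical stand-in that copies already-decoded bytes into a shared slab. The "stale length" read models the separate length-miscalculation bug mentioned in the code comments above.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class DirtyBufferScenario {
    // One slab shared by every call and never cleared between calls
    private final byte[] slab = new byte[1024 * 1024];

    // Hypothetical stand-in for the decompressor: writes the decoded bytes
    // into the slab and returns how many bytes this call wrote
    int decompressInto(byte[] decoded) {
        System.arraycopy(decoded, 0, slab, 0, decoded.length);
        return decoded.length;
    }

    // Copies the first 'length' bytes of the slab, whatever length the caller supplies
    byte[] read(int length) {
        return Arrays.copyOfRange(slab, 0, length);
    }

    // Returns {what a correct consumer sees, what a consumer with a stale length sees}
    static String[] run() {
        DirtyBufferScenario s = new DirtyBufferScenario();

        // Transaction A: a record occupying bytes 0-500, with an SSN at offset 100
        byte[] record = new byte[501];
        Arrays.fill(record, (byte) '.');
        byte[] ssn = "SSN:123-45-6789".getBytes(StandardCharsets.US_ASCII);
        System.arraycopy(ssn, 0, record, 100, ssn.length);
        int lengthA = s.decompressInto(record);

        // Transaction B: the attacker's payload occupies only bytes 0-10
        int lengthB = s.decompressInto("hello-world".getBytes(StandardCharsets.US_ASCII));

        String correct = new String(s.read(lengthB), StandardCharsets.US_ASCII);
        String stale = new String(s.read(lengthA), StandardCharsets.US_ASCII);
        return new String[] {correct, stale};
    }

    public static void main(String[] args) {
        String[] views = run();
        System.out.println("correct length: " + views[0]);
        System.out.println("stale length leaks SSN: " + views[1].contains("SSN:123-45-6789"));
    }
}
```

The correct consumer sees only `hello-world`. The consumer that reuses Transaction A's length gets all 501 bytes, SSN included. The consumer-side fix is the same in any language: bound every read by the length returned for the current call.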

An attacker can exploit this by sending “micro-payloads”—compressed packets that expand to very small sizes—effectively “scraping” the residue of the memory slab, chunk by chunk.
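The LZ4 block format shows what such a micro-payload looks like on the wire. A block that holds a single literal-only sequence decodes to exactly as many bytes as it carries. The encoder below is a minimal sketch of that encoding: a token nibble, extra length bytes, then raw literals. It emits a raw block, not an LZ4 frame, so there is no magic number, frame descriptor, or checksum. Whether a given service accepts raw blocks depends on its API. The class name is hypothetical.

```java
import java.io.ByteArrayOutputStream;

final class Lz4MicroPayload {
    // Encodes 'literals' as one literal-only sequence in the LZ4 block format.
    // A conforming block decoder expands it to exactly literals.length bytes.
    static byte[] literalOnlyBlock(byte[] literals) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        int len = literals.length;
        // Token: high nibble = literal length (15 means extra length bytes follow);
        // low nibble = match length, left at 0 because the last sequence has no match
        out.write(Math.min(len, 15) << 4);
        if (len >= 15) {
            int rest = len - 15;
            while (rest >= 255) {   // each 255 byte means "add 255 and keep reading"
                out.write(255);
                rest -= 255;
            }
            out.write(rest);        // final length byte (0-254)
        }
        out.write(literals, 0, len); // the literals themselves, uncompressed
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] payload = literalOnlyBlock(new byte[] {'h', 'i'});
        System.out.println(payload.length + " bytes on the wire, 2 bytes after decoding");
    }
}
```

For example, `literalOnlyBlock(new byte[] {'h', 'i'})` returns the three bytes `0x20 0x68 0x69`. That payload overwrites only two bytes of a recycled output buffer.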

The Vector Database Crisis: Why AI is the Primary Target

Why is CVE-2025-66566 an AI security crisis rather than just a generic backend issue? The answer lies in the architecture of Modern AI Stacks, specifically RAG (Retrieval-Augmented Generation).

RAG systems rely heavily on Vector Databases (like Milvus, Weaviate, or Elasticsearch) and Feature Stores. These systems are designed for one thing: Extreme Speed. To achieve sub-millisecond retrieval of embeddings, they lean heavily on memory-mapped files and aggressive compression.

The “Ghost in the Embedding” Scenario

Imagine a scenario in a Multi-Tenant SaaS platform hosting corporate knowledge bases:

- The Victim: A healthcare provider uploads a patient diagnosis PDF. The embedding model converts this to a vector and stores the raw text metadata in the Vector DB, compressed via LZ4.

- The Vulnerability: The Vector DB uses a thread pool for ingestion. The worker thread handling the healthcare data reuses a 4MB buffer.

- The Attacker: A malicious tenant on the same shared cluster sends a high-frequency stream of “nop” (no-operation) insert requests or malformed queries designed to trigger compression errors or partial writes.

- The Exfiltration: Due to CVE-2025-66566, the Vector DB’s response to the attacker (perhaps an error log or a query confirmation) inadvertently includes a “memory dump” trailing the actual response.

- The Impact: The attacker receives a hexadecimal string that, when decoded, contains fragments of the patient diagnosis from the Victim’s previous operation.
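The JDK's executor framework can simulate the thread-pool step of this scenario. In the sketch below, a single-thread pool guarantees that both tenants hit the same worker. Each worker keeps a `ThreadLocal` buffer, scaled down from 4 MB to 4 KB. A fixed-size response window stands in for the length bug that trails the real response with buffer contents. All names, sizes, and the record format are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class SharedWorkerLeak {
    // Each worker thread keeps one reusable buffer (4 KB here, standing in for 4 MB)
    private static final ThreadLocal<byte[]> BUFFER =
            ThreadLocal.withInitial(() -> new byte[4096]);

    // Hypothetical fixed response window that models the length bug
    private static final int RESPONSE_WINDOW = 48;

    // Ingest handler: copies the request into the recycled buffer without clearing it,
    // then (buggy) replies with a fixed-size window instead of in.length bytes
    static String handle(String request) {
        byte[] buf = BUFFER.get();
        byte[] in = request.getBytes(StandardCharsets.US_ASCII);
        System.arraycopy(in, 0, buf, 0, in.length);
        return new String(buf, 0, RESPONSE_WINDOW, StandardCharsets.US_ASCII);
    }

    // Victim and attacker requests run back to back on the same single worker thread
    static String attackerView(String victimRecord, String attackerRequest) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            pool.submit(() -> handle(victimRecord)).get();
            return pool.submit(() -> handle(attackerRequest)).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        String reply = attackerView("patient=J.Doe;dx=type-2-diabetes;", "nop");
        System.out.println(reply.replace('\0', '.'));
    }
}
```

The attacker's reply begins with `nop` and continues with the tail of the victim's record, including `dx=type-2-diabetes`. In this model, the leak closes if the handler returns `new String(buf, 0, in.length, ...)` instead of the fixed window.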

Table: Infrastructure at Risk

| Component | Role in AI Stack | CVE-2025-66566 Exploitation Risk | Impact Level |
|---|---|---|---|
| Apache Spark | Data Processing / ETL | Shuffle files (intermediate data) often contain PII and are compressed. | Critical (Massive Data Dump) |
| Kafka / Pulsar | Real-time Context Streaming | Topic logs leverage LZ4; consumers can read dirty bytes from brokers. | High (Stream Hijacking) |
| Vector DBs | Long-term Memory for LLMs | Index building processes reuse buffers aggressively. | Critical (Cross-Tenant Leak) |
| Model Serving | Inference API | HTTP payload compression (request/response). | Medium (Session Bleed) |

The Failure of Static Analysis (SAST)

One of the most frustrating aspects of CVE-2025-66566 for security engineers is the invisibility of the flaw to traditional tools.

Standard SAST (Static Application Security Testing) tools scan source code for known bad patterns (e.g., SQL injection, hardcoded keys). However, CVE-2025-66566 is not a syntax error. It is a state management error deeply buried in a transitive dependency (a library used by a library used by your framework).

Furthermore, Software Composition Analysis (SCA) tools might flag the library version, but they cannot tell you if the vulnerable code path is actually reachable in your specific configuration. You might patch the library, but if your JVM configuration forces a different memory allocator, you might still be exposed—or conversely, you might be panic-patching a system that isn’t actually using the vulnerable safeDecompressor method.

We need a paradigm shift from “Scanning Code” to “Testing Behavior.”

Intelligent Penetration Testing: The New Standard

This is where the concept of Intelligent Penetration Testing becomes not just a luxury, but a requirement for MLOps security. We can no longer rely on human pentesters to manually check every buffer boundary in a distributed cluster, nor can we rely on dumb fuzzers that just throw random garbage at an API.

We need agents that understand the semantics of the application.

Bridging the Gap with Penligent

In the context of complex logic flaws like CVE-2025-66566, platforms like Penligent represent the next evolution of offensive security. Penligent does not merely “scan”; it functions as an autonomous AI red-teamer.

How would an intelligent agent approach CVE-2025-66566 differently?

- Context-Aware Fuzzing: Instead of sending random bytes, Penligent’s engine understands the LZ4 framing protocol. It can deliberately construct valid frames that decompress to specific lengths, calculated to trigger the “dirty buffer” read condition. It targets the logic of the compression, not just the parser.

- Differential Response Analysis: A human analyst might miss that a 500-byte error response contains 50 bytes of random noise at the end. Penligent’s AI analyzes the entropy of the response. It recognizes that the “noise” has the statistical structure of English text or JSON, immediately flagging it as a potential memory leak (Memory Scraping).

- Supply Chain Graphing: Penligent maps the runtime execution of your AI stack. For example, it can identify that while you are running `My-AI-App v1.0`, the underlying `kafka-clients` library is invoking the vulnerable native code path of `lz4-java`, and it produces a prioritized remediation path.
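A generic heuristic can illustrate the differential-response idea. It computes the Shannon entropy and printable-character ratio of any bytes that trail the expected response length. The sketch below is not Penligent's implementation. The class name and both thresholds are assumptions chosen for illustration, and a production detector would calibrate them against known-good traffic.

```java
import java.nio.charset.StandardCharsets;

final class TailLeakHeuristic {
    // Shannon entropy of data[from, to) in bits per byte (0.0 to 8.0)
    static double entropy(byte[] data, int from, int to) {
        int[] counts = new int[256];
        for (int i = from; i < to; i++) counts[data[i] & 0xFF]++;
        int n = to - from;
        double h = 0.0;
        for (int c : counts) {
            if (c == 0) continue;
            double p = (double) c / n;
            h -= p * (Math.log(p) / Math.log(2));
        }
        return h;
    }

    // Fraction of bytes in data[from, to) that are printable ASCII or \t, \n, \r
    static double printableRatio(byte[] data, int from, int to) {
        int printable = 0;
        for (int i = from; i < to; i++) {
            int b = data[i] & 0xFF;
            if ((b >= 0x20 && b < 0x7F) || b == '\t' || b == '\n' || b == '\r') printable++;
        }
        return (double) printable / (to - from);
    }

    // Flags a response whose bytes past 'expectedLength' look like text or JSON:
    // mostly printable, with entropy in a rough natural-text band.
    // The 0.9 / 2.5 / 6.0 thresholds are illustrative assumptions, not tuned values.
    static boolean suspiciousTail(byte[] response, int expectedLength) {
        if (response.length <= expectedLength) return false;
        int from = expectedLength, to = response.length;
        double h = entropy(response, from, to);
        return printableRatio(response, from, to) > 0.9 && h > 2.5 && h < 6.0;
    }

    public static void main(String[] args) {
        byte[] reply = "OK{\"tenant\":\"acme\",\"note\":\"biopsy scheduled\"}"
                .getBytes(StandardCharsets.US_ASCII);
        System.out.println(suspiciousTail(reply, 2));
    }
}
```

Zero padding (printable ratio 0) and uniformly distributed bytes (8 bits per byte, printable ratio under 0.4) are both rejected. A trailing JSON fragment is flagged.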

By integrating Penligent into the CI/CD pipeline, organizations move from “Patching on Tuesdays” to “Continuous Verification.” The platform proves whether the exploit is possible in your specific environment, potentially saving hundreds of hours of triage time.

Remediation and Hardening Strategies

If you have identified that your infrastructure is vulnerable to CVE-2025-66566, immediate action is required. However, simply “bumping the version” is often insufficient in complex, shaded JAR environments.

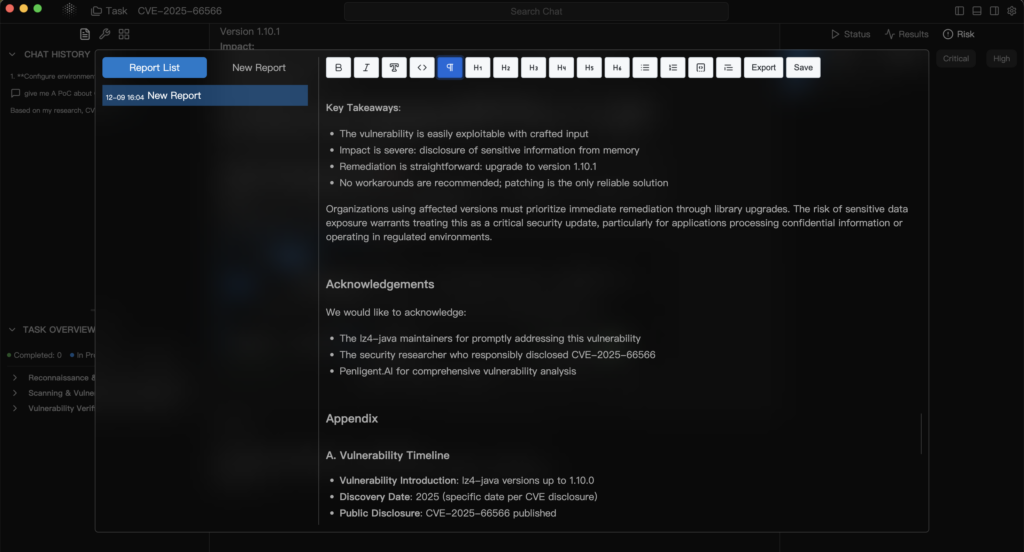

The Patch (And the Verification)

The primary fix is upgrading the affected LZ4 libraries (usually to versions 1.10.x or higher, depending on the vendor release).

- Action: Run `mvn dependency:tree -Dverbose` or `gradle dependencyInsight --dependency lz4` to find every instance.

- Warning: Many Big Data frameworks “shade” (bundle and relocate) dependencies. You might have a vulnerable LZ4 hidden inside a `spark-core.jar` that standard scanners miss.
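The JDK's `java.util.zip` classes are enough for a first-pass check for shaded copies. The sketch walks every entry of a JAR, descends into nested JARs, and reports class files whose path mentions `lz4`. Shading typically relocates `net.jpountz.lz4` under a new prefix but keeps that segment. This is a name-based sketch, not a substitute for an SCA tool. It cannot tell versions apart, and it will miss a relocation that renames the package entirely. The class name is hypothetical.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;

final class ShadedLz4Finder {
    // Lists class entries whose path contains "lz4", descending into nested JARs
    static List<String> find(Path jar) {
        List<String> hits = new ArrayList<>();
        try (InputStream in = Files.newInputStream(jar);
             ZipInputStream zip = new ZipInputStream(in)) {
            scan(zip, jar.getFileName().toString(), hits);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return hits;
    }

    private static void scan(ZipInputStream zip, String prefix, List<String> hits) throws IOException {
        ZipEntry entry;
        while ((entry = zip.getNextEntry()) != null) {
            String name = entry.getName();
            String lower = name.toLowerCase(Locale.ROOT);
            if (lower.endsWith(".jar")) {
                // Read the nested JAR in place; the inner stream is deliberately
                // not closed, because closing it would close the outer stream too
                scan(new ZipInputStream(zip), prefix + "!/" + name, hits);
            } else if (lower.endsWith(".class") && lower.contains("lz4")) {
                hits.add(prefix + "!/" + name);
            }
        }
    }

    public static void main(String[] args) {
        for (String arg : args) {
            find(Path.of(arg)).forEach(System.out::println);
        }
    }
}
```

For an LZ4 class relocated inside a nested JAR, the output takes the form `app-all.jar!/lib/spark-core.jar!/shaded/net/jpountz/lz4/LZ4Factory.class`. The file names here are examples only.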

Runtime Mitigation: Zero-Filling

If you cannot patch immediately (e.g., you are running a legacy Hadoop cluster), you must enforce memory hygiene at the application layer.

- Code Change: Wrap your decompression logic. Before passing a buffer to the decompressor, force `Arrays.fill(buffer, (byte) 0)`.

- Performance Cost: This can add roughly 5-15% CPU overhead on ingestion nodes, depending on buffer size and throughput. In exchange, it removes the residual data that makes this leak possible.
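The wrapper can be sketched as a decorator. `Decompressor` is a hypothetical interface standing in for whichever library call you actually use. The wrapper clears the whole destination buffer before delegating, as the mitigation above describes.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class ZeroFillingDecompressor implements Decompressor {
    private final Decompressor delegate;

    ZeroFillingDecompressor(Decompressor delegate) {
        this.delegate = delegate;
    }

    @Override
    public int decompress(byte[] src, byte[] dest) {
        // Scrub residue from earlier calls before the real decoder writes anything
        Arrays.fill(dest, (byte) 0);
        return delegate.decompress(src, dest);
    }

    public static void main(String[] args) {
        // Toy "decoder" for the demo: copies input verbatim and reports its length
        Decompressor copy = (src, dest) -> {
            System.arraycopy(src, 0, dest, 0, src.length);
            return src.length;
        };
        Decompressor safe = new ZeroFillingDecompressor(copy);
        byte[] buffer = new byte[16];
        safe.decompress("SECRET-PAYLOAD".getBytes(StandardCharsets.US_ASCII), buffer);
        int n = safe.decompress("hi".getBytes(StandardCharsets.US_ASCII), buffer);
        System.out.println(n + " bytes written, tail byte = " + buffer[15]);
    }
}

// Hypothetical stand-in for the library call being wrapped:
// decodes 'src' into 'dest' and returns the number of bytes written
@FunctionalInterface
interface Decompressor {
    int decompress(byte[] src, byte[] dest);
}
```

Clearing the entire buffer on every call is what drives the CPU cost. Buffers sized far beyond typical payloads make the clearing proportionally more expensive.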

Network Segmentation (The Zero-Trust Approach)

Assume memory is leaking. Ensure the leak cannot leave the blast radius.

- Isolate Vector DBs in a VPC that has no egress to the public internet.

- Implement strict mTLS (Mutual TLS) between services. Even if an attacker compromises a web front-end, they should not be able to send arbitrary raw bytes to the internal storage layer.
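On the JVM, requiring client certificates is a property of the server's TLS parameters. The sketch below uses only `javax.net.ssl`. It assumes an `SSLContext` already initialized with your service's key material and a trust store limited to the internal CA; that setup is omitted. You would then apply the resulting parameters with `SSLServerSocket.setSSLParameters` or `SSLEngine.setSSLParameters`. TLS 1.3 assumes a reasonably recent JDK.

```java
import java.security.NoSuchAlgorithmException;
import javax.net.ssl.SSLContext;
import javax.net.ssl.SSLParameters;

final class MtlsServerParams {
    // Builds server-side parameters that reject clients without a valid certificate.
    // 'ctx' is assumed to be initialized with the service's key material and a
    // trust store that contains only the internal CA (setup not shown).
    static SSLParameters strictClientAuth(SSLContext ctx) {
        SSLParameters params = ctx.getDefaultSSLParameters();
        params.setNeedClientAuth(true);                 // handshake fails without a client cert
        params.setProtocols(new String[] {"TLSv1.3"});  // no fallback to older protocol versions
        return params;
    }

    public static void main(String[] args) throws NoSuchAlgorithmException {
        // The JVM default context is used here only so the demo runs without keystores
        SSLParameters params = strictClientAuth(SSLContext.getDefault());
        System.out.println("needClientAuth=" + params.getNeedClientAuth());
    }
}
```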

Continuous Monitoring with eBPF

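Advanced security teams can deploy eBPF (extended Berkeley Packet Filter) probes for runtime monitoring, but with realistic expectations. The stale read behind CVE-2025-66566 is an in-bounds read of a valid JVM buffer, so there is no kernel-visible “out-of-bounds” access to catch. What eBPF-based tooling can observe is the side effect on the network: responses that are consistently larger than the request type warrants, or unusual egress volume from storage nodes. These signals can surface exploitation attempts early.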

Conclusion: The Era of Fragile Giants

The disclosure of CVE-2025-66566 is a pivotal moment for AI security. It strips away the glamour of Large Language Models and reveals the fragile, decades-old scaffolding that supports them. As we build systems that process trillions of tokens and store petabytes of vectors, the impact of a single memory-handling flaw scales proportionally, whether it is a buffer overflow or a residual-data leak like this one.

For the security engineer, the lesson is clear: The models are only as safe as the pipes that feed them. We must demand rigorous code signing, memory-safe languages (shifting from C++/Java JNI to Rust where possible), and most importantly, intelligent, automated validation tools like Penligent that can think faster than the attackers.

Secure your infrastructure. Verify your dependencies. And never trust a buffer you didn’t zero out yourself.
