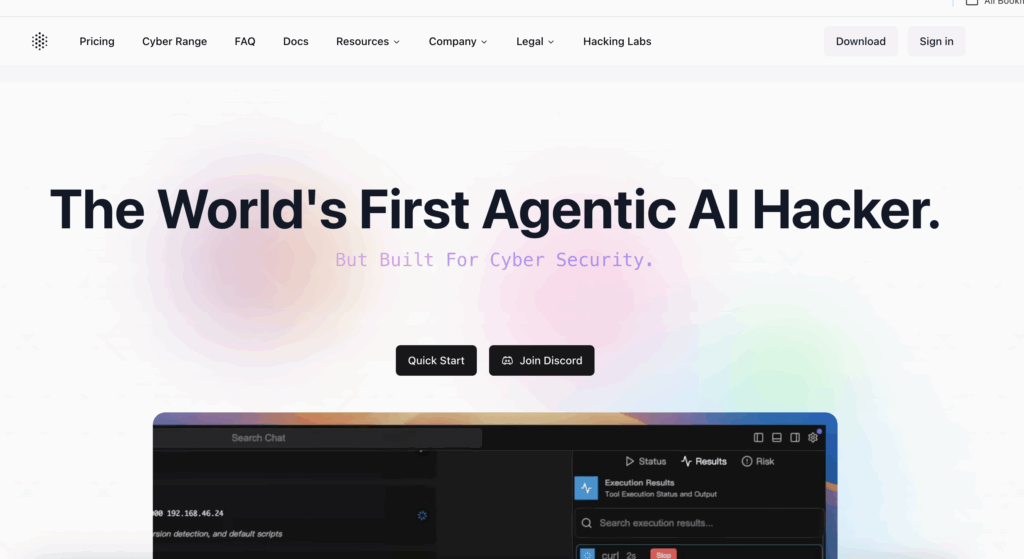

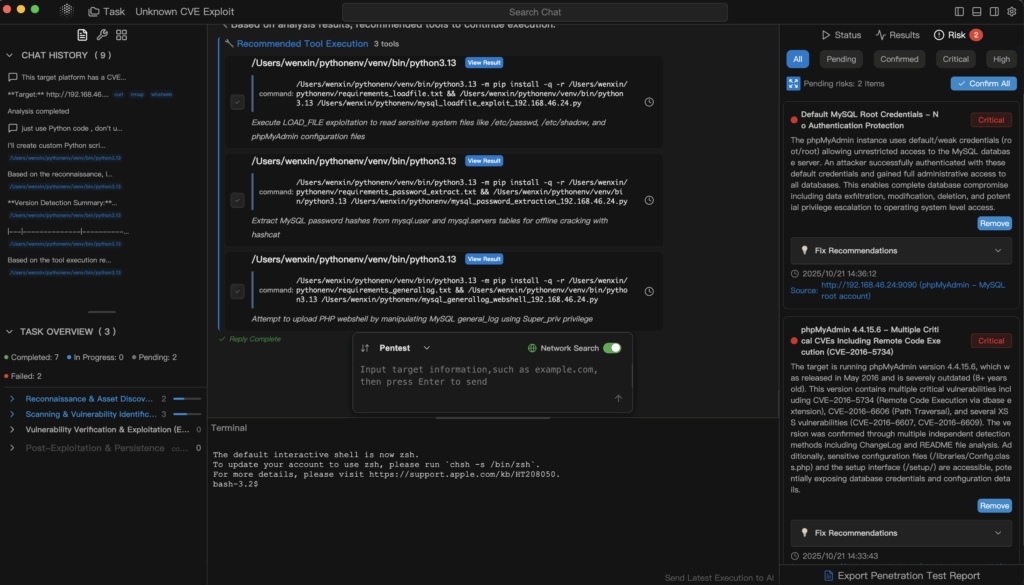

Most teams don’t need more scanners. They need a way to make the scanners, fuzzers, recon utilities, exploit kits, cloud analyzers, and traffic recorders they already own act like a single, coordinated attacker—and to produce evidence-backed, standards-aware output without weeks of manual glue. That is the problem Penligent.ai is designed to solve.

Penligent’s stance is simple: you speak in natural language; the system orchestrates 200+ tools end-to-end; the deliverable is a reproducible attack chain with evidence and control mappings. No CLI choreography. No screenshot scavenger hunt. No hand-stitched PDFs.

Why Orchestration (Not “Another Scanner”) Is the Next Step for pentestAI

- Tool sprawl is real. Security teams own Nmap, ffuf, nuclei, Burp extensions, SQLMap, OSINT enumerators, SAST/DAST, secret detectors, cloud posture analyzers, container/k8s baseline checkers, CI/CD exposure scanners—the list grows quarterly. The bottleneck isn’t tool capability; it’s coordination.

- Attackers chain, scanners list. Single tools report issues in isolation. What leadership wants is a story: entry → pivot → blast radius with proof. What engineering wants is repro: exact requests, tokens, screenshots, and a fix list. What compliance wants is mapping: which control failed (ISO 27001 / PCI DSS / NIST).

- LLM assistants ≠ automated execution. “pentestGPT” speeds up reasoning and writing, but still needs a human to choose tools, enforce scope, manage sessions, and build a credible artifact.

Penligent’s thesis: pentestAI must prioritize planning, execution, evidence management, and reporting—all driven by natural language—so the output is trusted by engineering and audit, not just interesting to researchers.

The Orchestration Architecture (How It Actually Works)

Think of Penligent as a four-layer pipeline that converts intent into an attack narrative:

A. Intent Interpreter

- Parses plain-English goals (scope, constraints, compliance targets).

- Extracts testing modes (black-box, gray-box), auth hints, throttling, MFA constraints.

- Normalizes to a structured plan spec.
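To make the normalization step concrete, here is a minimal sketch that pulls a few constraints out of a plain-English objective. The function name `parse_intent`, the output field names, and the regex-based approach are assumptions for illustration only; the article does not describe how Penligent's interpreter is built, and a production interpreter would need far more than pattern matching.

```python
import re

def parse_intent(text: str) -> dict:
    """Extract a few scope/constraint hints from a plain-English objective (hypothetical)."""
    lowered = text.lower()
    # Hostnames: a deliberately simple pattern, adequate for this example only.
    domains = re.findall(r"\b(?:[a-z0-9-]+\.)+[a-z]{2,}\b", lowered)
    # Rate limit phrased as "<n> rps".
    rate = re.search(r"(\d+)\s*rps", lowered)
    return {
        "domains": sorted(set(domains)),
        "rate_limit_rps": int(rate.group(1)) if rate else None,
        "respect_mfa": "respect mfa" in lowered,
        "no_destructive_actions": "no destructive" in lowered,
    }

spec = parse_intent(
    "Test token reuse on staging-api.example.com; do not exceed 3 rps; "
    "respect MFA; no destructive actions."
)
print(spec)
```

Anything the parser cannot extract stays `None` or `False`, which gives a later stage the chance to ask for clarification instead of guessing.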

B. Planner

- Resolves the plan into tool sequences: recon → auth/session testing → exploitation attempts (within policy) → lateral checks → evidence harvest.

- Chooses adapters for each step (e.g., ffuf for endpoint discovery, nuclei for templated checks, SQLMap for injection validation, custom replayers for token reuse).

- Allocates budgets (time, rate limits, concurrency) and idempotence rules (so retries don’t burn the app or rate limits).
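A rough sketch of what sequencing with budgets and idempotence could look like. The stage names follow the order given above; the `Step` type, the adapter-to-stage mapping, and the hash-based idempotency key are assumptions, not Penligent's actual planner.

```python
import hashlib
from dataclasses import dataclass

# Stage order taken from the text; the adapter ids per stage are illustrative.
STAGES = ["recon", "auth_session", "exploitation", "lateral", "evidence"]
ADAPTERS = {
    "recon": ["nmap.tcp", "ffuf.enum"],
    "auth_session": ["token.replay"],
    "exploitation": ["nuclei.http", "sqlmap.verify"],
}

@dataclass(frozen=True)
class Step:
    stage: str
    adapter: str
    max_rps: int
    idempotency_key: str

def build_steps(target: str, max_rps: int) -> list[Step]:
    steps = []
    for stage in STAGES:
        for adapter in ADAPTERS.get(stage, []):
            # The same (target, stage, adapter) always yields the same key, so a
            # retry can be recognized and skipped instead of re-hitting the app.
            raw = f"{target}|{stage}|{adapter}".encode()
            key = hashlib.sha256(raw).hexdigest()[:12]
            steps.append(Step(stage, adapter, max_rps, key))
    return steps

steps = build_steps("staging-api.example.com", max_rps=3)
for step in steps:
    print(step.stage, step.adapter, step.idempotency_key)
```

Because the key is derived from the step's inputs and nothing else, re-running the planner on the same target produces an identical sequence, which is what makes retries safe to deduplicate.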

C. Executor

- Runs tools with shared context (cookies, tokens, session lifecycles, discovered headers).

- Manages scope guardrails (host allowlists, path filters), safety (throttle, back-off), and audit trail (full command+params, timestamps, exit codes).

- Captures artifacts in standardized formats.
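The guardrails listed above can be sketched in a few lines. Everything here is a hypothetical illustration: the function names, the exact-match treatment of allowlist patterns, and the shape of the audit record are assumptions, and the command shown is a placeholder, not a real tool invocation.

```python
from datetime import datetime, timezone
from fnmatch import fnmatchcase
from urllib.parse import urlsplit

def in_scope(url: str, domains: list[str], allowlist_paths: list[str]) -> bool:
    """Allow a request only if both host and path match the declared scope."""
    parts = urlsplit(url)
    if parts.hostname not in domains:
        return False
    path = parts.path or "/"
    return any(fnmatchcase(path, pattern) for pattern in allowlist_paths)

def backoff_delays(base: float, attempts: int) -> list[float]:
    """Exponential back-off schedule in seconds: base, 2*base, 4*base, ..."""
    return [base * 2 ** i for i in range(attempts)]

def audit_record(command: list[str], exit_code: int) -> dict:
    """Full command, exit code, and UTC timestamp for the audit trail."""
    return {
        "command": command,
        "exit_code": exit_code,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }

DOMAINS = ["staging-api.example.com"]
PATHS = ["/admin", "/debug", "/api/*"]
print(in_scope("https://staging-api.example.com/api/users", DOMAINS, PATHS))
print(in_scope("https://prod.example.com/admin", DOMAINS, PATHS))
print(audit_record(["example-tool", "--target", "staging-api.example.com"], 0))
```

Whether a pattern such as `/admin` should also cover sub-paths like `/admin/session` is a policy decision; this sketch matches patterns exactly, so sub-paths need an explicit wildcard.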

D. Evidence & Reporting

- Normalizes outputs into a unified schema; correlates to a single chain.

- Emits an engineering-ready fix list and compliance mappings (NIST/ISO/PCI), plus an executive summary.
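One way to picture "normalize, then correlate" is below. The unified record fields (`source`, `kind`, `target`, `confirmed`), the prefix-based linking rule, and the assumption that the replay adapter echoes its `endpoint` in the output are all hypothetical; real correlation logic would be considerably richer.

```python
def normalize(adapter_id: str, output: dict) -> list[dict]:
    """Map tool-specific output into one record shape (hypothetical schema)."""
    if adapter_id == "ffuf.enum":
        return [
            {"source": adapter_id, "kind": "exposed_path", "target": p, "confirmed": False}
            for p in output["paths"]
        ]
    if adapter_id == "token.replay":
        confirmed = output["status"] == 200 and output["issued_admin_cookie"]
        return [
            {"source": adapter_id, "kind": "token_reuse",
             "target": output["endpoint"], "confirmed": confirmed}
        ]
    raise ValueError(f"no normalizer for {adapter_id}")

def correlate(records: list[dict]) -> list[list[dict]]:
    """Link each confirmed finding to the discovery records that led to it."""
    chains = []
    for finding in (r for r in records if r["confirmed"]):
        entry = [
            r for r in records
            if r["kind"] == "exposed_path" and finding["target"].startswith(r["target"])
        ]
        chains.append(entry + [finding])
    return chains

records = normalize("ffuf.enum", {"paths": ["/admin", "/console", "/debug"]})
records += normalize(
    "token.replay",
    {"endpoint": "/admin/session", "status": 200, "issued_admin_cookie": True},
)
chains = correlate(records)
print([[r["target"] for r in chain] for chain in chains])
```

The unconfirmed `/console` and `/debug` paths never appear in a chain, which is the point: isolated observations stay out of the narrative until something confirms them.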

A high-level plan object might look like:

```yaml
plan:
  objective: "Enumerate admin/debug surfaces and test session fixation/token reuse (in-scope)."
  scope:
    domains: ["staging-api.example.com"]
    allowlist_paths: ["/admin", "/debug", "/api/*"]
  constraints:
    rate_limit_rps: 3
    respect_mfa: true
    no_destructive_actions: true
  kpis:
    - "validated_findings"
    - "time_to_first_chain"
    - "evidence_completeness"
  report:
    control_mapping: ["NIST_800-115", "ISO_27001", "PCI_DSS"]
    deliverables: ["exec-summary.pdf", "fix-list.md", "controls.json"]
```
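A plan object like this is only useful if it is checked before anything runs. The sketch below validates an already-parsed plan (a plain dict) and turns it into a typed object. The `PlanSpec` class, `load_plan`, and the specific checks are assumptions for illustration; the source does not define a validation step.

```python
from dataclasses import dataclass

@dataclass
class PlanSpec:
    objective: str
    domains: list[str]
    allowlist_paths: list[str]
    rate_limit_rps: int
    respect_mfa: bool
    no_destructive_actions: bool

def load_plan(raw: dict) -> PlanSpec:
    """Reject plans with an empty scope or a non-positive rate limit."""
    plan = raw["plan"]
    scope, constraints = plan["scope"], plan["constraints"]
    if not scope["domains"]:
        raise ValueError("scope.domains must list at least one host")
    if constraints["rate_limit_rps"] <= 0:
        raise ValueError("constraints.rate_limit_rps must be positive")
    return PlanSpec(
        objective=plan["objective"],
        domains=list(scope["domains"]),
        allowlist_paths=list(scope["allowlist_paths"]),
        rate_limit_rps=constraints["rate_limit_rps"],
        respect_mfa=constraints["respect_mfa"],
        no_destructive_actions=constraints["no_destructive_actions"],
    )

raw_plan = {
    "plan": {
        "objective": "Enumerate admin/debug surfaces and test session fixation/token reuse (in-scope).",
        "scope": {
            "domains": ["staging-api.example.com"],
            "allowlist_paths": ["/admin", "/debug", "/api/*"],
        },
        "constraints": {
            "rate_limit_rps": 3,
            "respect_mfa": True,
            "no_destructive_actions": True,
        },
    }
}
plan_spec = load_plan(raw_plan)
print(plan_spec)
```

Failing fast on an empty scope matters more than it looks: a plan with no allowlist should never be interpreted as "everything is allowed."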

Why this matters: most “AI security” demos stop at clever payload generation. Reality is session state, throttling, retries, and audit trails. Orchestration wins by getting the boring parts right.

Old vs New: An Honest Comparison

| Dimension | Traditional (manual pipeline) | Penligent (natural language → orchestration) |
|---|---|---|
| Setup | Senior operator scripts CLI + glue | English objective → plan spec |
| Tool sequencing | Ad-hoc per operator | Planner chooses adapters & order |
| Scope safety | Depends on discipline | Guardrails enforced (allowlists, rate limits, MFA respect) |
| Evidence | Screenshots/pcaps scattered | Normalized evidence bundle (traces, screenshots, token lifecycle) |
| Report | Manual PDF + hand mapping | Structured artifacts + standards mapping |
| Repeatability | Operator-dependent | Deterministic plan; re-runnable with diffs |

From Request to Report: Concrete Artifacts

Natural-language in → Task creation

```shell
penligent task create \
  --objective "Find exposed admin panels on staging-api.example.com; test session fixation/token reuse (in-scope); capture HTTP traces & screenshots; map to NIST/ISO/PCI; output exec summary & fix list."
```

Status & guardrails

```shell
penligent task status --id <TASK_ID>   # Shows current stage, tool, ETA, and safety constraints
penligent task scope --id <TASK_ID>    # Prints allowlists, rate limits, MFA settings, no-go rules
```

Evidence & reporting outputs

```
penligent evidence fetch --id <TASK_ID> --bundle zip

/evidence/http/            # sanitized request/response pairs (JSONL)
/evidence/screenshots/     # stage-labeled images (png)
/evidence/tokens/          # lifecycle + replay logs (txt/json)
/report/exec-summary.pdf   # business-facing overview
/report/fix-list.md        # engineering backlog (priority, owner, steps)
/report/controls.json      # NIST/ISO/PCI mappings (machine-readable)
```

Normalized finding (sample JSON)

```json
{
  "id": "PF-2025-00031",
  "title": "Token reuse accepted on /admin/session",
  "severity": "High",
  "chain_position": 2,
  "evidence": {
    "http_trace": "evidence/http/trace-002.jsonl",
    "screenshot": "evidence/screenshots/admin-session-accept.png",
    "token_log": "evidence/tokens/replay-02.json"
  },
  "repro_steps": [
    "Obtain token T1 (user A, timestamp X)",
    "Replay T1 against /admin/session with crafted headers",
    "Observe 200 + admin cookie issuance"
  ],
  "impact": "Privileged panel reachable with replay; potential lateral data access.",
  "controls": {
    "NIST_800_115": ["Testing Authentication Mechanisms"],
    "ISO_27001": ["A.9.4 Access Control"],
    "PCI_DSS": ["8.3 Strong Cryptography and Authentication"]
  },
  "remediation": {
    "owner": "platform-auth",
    "priority": "P1",
    "actions": [
      "Bind tokens to device/session context",
      "Implement nonce/one-time token replay protection",
      "Add server-side TTL with IP/UA heuristics"
    ],
    "verification": "Replay attempt must return 401; attach updated traces."
  }
}
```
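Because the finding is structured, turning it into an engineering backlog entry is mechanical. The sketch below renders a trimmed copy of the sample finding as a fix-list item. The function name `fix_list_entry` and the output layout are assumptions; the article does not show what `fix-list.md` actually contains.

```python
finding = {
    "id": "PF-2025-00031",
    "title": "Token reuse accepted on /admin/session",
    "severity": "High",
    "remediation": {
        "owner": "platform-auth",
        "priority": "P1",
        "actions": [
            "Bind tokens to device/session context",
            "Implement nonce/one-time token replay protection",
        ],
        "verification": "Replay attempt must return 401; attach updated traces.",
    },
}

def fix_list_entry(f: dict) -> str:
    """Render one normalized finding as a checklist-style backlog entry (hypothetical layout)."""
    rem = f["remediation"]
    lines = [
        f"{f['id']} [{rem['priority']}] {f['title']}",
        f"Owner: {rem['owner']} | Severity: {f['severity']}",
    ]
    lines += [f"- [ ] {action}" for action in rem["actions"]]
    lines.append(f"Done when: {rem['verification']}")
    return "\n".join(lines)

entry = fix_list_entry(finding)
print(entry)
```

The "Done when" line comes straight from the finding's `verification` field, so the definition of done travels with the ticket instead of living in someone's head.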

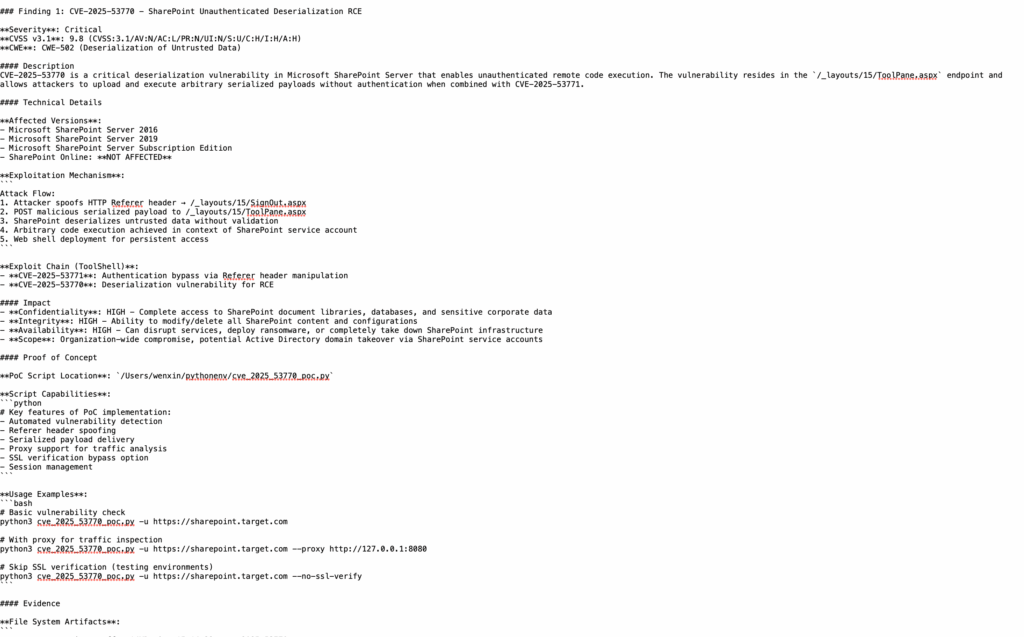

Capability Domains (What the System Actually Drives)

Web & API Perimeter

- Automated: admin/debug identification, auth boundary probing, session fixation / token reuse checks (in scope), fuzzing targeted to earlier recon.

- Outcome: request/response proof, screenshots, impact narrative → fix list.

Cloud & Containers

- Automated: ephemeral/“shadow” asset discovery, mis-scoped IAM detection, CI/CD runner exposure hints, stale token/key signals.

- Outcome: “entry → pivot → impact” chain—not 80 isolated “mediums”.

Auth, Session & Identity

- Automated: token lifecycle analysis, reuse/fixation, path-based isolation checks, mixed-auth surfaces.

- Outcome: low-noise findings with precise repro and control mapping.

OSINT & Exposure Mapping

- Automated: subdomain enumeration, service fingerprinting, third-party surfaces.

- Outcome: authorized discovery with durable audit trails.

Evidence & Reporting

- Automated: artifact capture → normalization → standards mapping → artifacts for security, engineering, compliance, leadership.

Methodology anchors:

- NIST SP 800-115 – Technical Guide to Information Security Testing and Assessment

- OWASP WSTG / PTES – phase-based pentest structure and terminology

The “AI Part” That Actually Helps (Beyond Payloads)

- Intent grounding: translates ambiguous instructions into scoped, testable steps (e.g., “do not exceed 3 rps,” “no destructive verbs,” “respect MFA”).

- Adaptive sequencing: switches tools based on intermediate results (e.g., if no admin headers are found, pivot to alternative footprints; if token replay fails, test fixation).

- Evidence completeness: prompts the executor to re-capture missing artifacts until the report's quality floor is met (screenshot + trace + token log).

- Control language generation: transforms raw artifacts into NIST/ISO/PCI forms without losing technical precision.

This is where many “AI pentest” ideas fall short: they generate clever text, but do not enforce a minimum evidence standard. Penligent hardens the “last mile” by making evidence a first-class contract.
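Treating evidence as a contract can be as simple as refusing to report until the floor is met. The sketch below checks a finding's evidence against the three artifacts named above and picks a follow-up check when one fails. The names `REQUIRED_ARTIFACTS`, `missing_artifacts`, `FALLBACKS`, and `next_check` are hypothetical, and the single fallback rule just mirrors the replay-then-fixation example in the list.

```python
# Quality floor named in the text: screenshot + trace + token log.
REQUIRED_ARTIFACTS = ("screenshot", "http_trace", "token_log")

def missing_artifacts(evidence: dict) -> list[str]:
    """Return the artifact names that are absent or empty."""
    return [name for name in REQUIRED_ARTIFACTS if not evidence.get(name)]

# If token replay fails, test fixation (the example given in the text).
FALLBACKS = {"token_replay": "session_fixation"}

def next_check(last_check: str, succeeded: bool):
    """Return the follow-up check to schedule, or None when nothing further applies."""
    if succeeded:
        return None
    return FALLBACKS.get(last_check)

evidence = {"http_trace": "evidence/http/trace-002.jsonl", "screenshot": ""}
print(missing_artifacts(evidence))
print(next_check("token_replay", succeeded=False))
```

A non-empty result from `missing_artifacts` is the signal to re-capture before the finding is allowed into a report.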

KPIs That Matter

| KPI | Why it matters | Orchestration effect |
|---|---|---|
| Time to first validated chain | Shows if the system can produce actionable intel quickly | Natural-language → immediate plan; adapters run in parallel; early chain materializes faster |
| Evidence completeness | Determines whether engineering can reproduce | Standardized capture; AI prompts executor to fill gaps |
| Signal-to-noise | Fewer false positives → faster fix | Cross-tool correlation yields fewer but stronger chains |
| Remediation velocity | Measured by time from finding to PR merged | Fix list is already structured; no translation latency |
| Repeatability | Needed for regression & audit | Plans are deterministic; re-runs generate deltas |
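Two of these KPIs reduce to simple arithmetic once runs are timestamped. The definitions below are one reasonable reading, not an official formula: time to first validated chain is taken as the gap between task start and the earliest validation, and signal-to-noise as validated findings divided by everything reported. The function names and the sample numbers are made up for illustration.

```python
from datetime import datetime, timedelta
from typing import Optional

def time_to_first_chain(started: datetime, validated_at: list[datetime]) -> Optional[timedelta]:
    """Gap between task start and the earliest validated chain, or None if there is none."""
    return min(validated_at) - started if validated_at else None

def signal_to_noise(validated: int, reported: int) -> float:
    """Share of reported findings that were validated with evidence."""
    return validated / reported if reported else 0.0

start = datetime(2025, 1, 1, 9, 0)
validations = [datetime(2025, 1, 1, 9, 40), datetime(2025, 1, 1, 9, 15)]
print(time_to_first_chain(start, validations))
print(signal_to_noise(validated=3, reported=12))
```

Tracking these per run, against the same plan, is what makes the numbers comparable over time.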

Realistic Scenarios

- Public admin panel drift on staging: prove replay/fixation, attach traces, map to controls, and ship a P1 task with clear “done” criteria.

- CI/CD exposure: discover runners with permissive scopes; chain to secrets access; advise tighter scoping and evidence TTL checks.

- Cloud “shadow” asset: a forgotten debug service; show entry → IAM pivot; quantify blast radius.

- AI assistant surface: validate prompt-injection-driven exfiltration or coerced actions within allowed scope; record artifacts and control impacts.

Integration Patterns (Without Hand-Wiring Everything)

Penligent treats tools as adapters with standardized I/O:

```yaml
adapters:
  - id: "nmap.tcp"
    input: { host: "staging-api.example.com", ports: "1-1024" }
    output: { services: ["http/443", "ssh/22", "..."] }
  - id: "ffuf.enum"
    input: { base_url: "https://staging-api.example.com", wordlist: "common-admin.txt" }
    output: { paths: ["/admin", "/console", "/debug"] }
  - id: "nuclei.http"
    input: { targets: ["https://staging-api.example.com/admin"], templates: ["misconfig/*","auth/*"] }
    output: { findings: [...] }
  - id: "sqlmap.verify"
    input: { url: "https://staging-api.example.com/api/search?q=*", technique: "time-based" }
    output: { verified: true, trace: "evidence/http/sqlmap-01.jsonl" }
  - id: "token.replay"
    input: { token: "T1", endpoint: "/admin/session" }
    output: { status: 200, issued_admin_cookie: true, screenshot: "..." }
```

No operator scripting. The planner composes adapters; the executor shares context (headers, cookies, tokens) across them; evidence is captured automatically.
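The adapter idea boils down to a uniform call signature plus a context object that every step can read and extend. In the sketch below, both adapters are stubs that return canned data shaped like the YAML examples; no real tool is invoked. The `Adapter` type, `REGISTRY`, and `run` are hypothetical names, not Penligent's interface.

```python
from typing import Callable

Context = dict  # shared state: headers, cookies, tokens, discovered paths
Adapter = Callable[[dict, Context], dict]

def ffuf_enum_stub(inp: dict, ctx: Context) -> dict:
    # Stub: a real adapter would run the tool; this returns canned paths.
    return {"paths": ["/admin", "/console", "/debug"]}

def token_replay_stub(inp: dict, ctx: Context) -> dict:
    # Stub: looks the token up in shared context to show how state is passed along.
    token_known = inp["token"] in ctx.get("tokens", {})
    return {"endpoint": inp["endpoint"], "token_available": token_known}

REGISTRY: dict[str, Adapter] = {
    "ffuf.enum": ffuf_enum_stub,
    "token.replay": token_replay_stub,
}

def run(steps: list[tuple[str, dict]], ctx: Context) -> list[dict]:
    results = []
    for adapter_id, inp in steps:
        output = REGISTRY[adapter_id](inp, ctx)
        # Record discovered paths in the shared context for later adapters.
        ctx.setdefault("paths", []).extend(output.get("paths", []))
        results.append({"id": adapter_id, "input": inp, "output": output})
    return results

ctx = {"tokens": {"T1": "opaque-token-value"}}
results = run(
    [
        ("ffuf.enum", {"base_url": "https://staging-api.example.com", "wordlist": "common-admin.txt"}),
        ("token.replay", {"token": "T1", "endpoint": "/admin/session"}),
    ],
    ctx,
)
print(results[-1]["output"], ctx["paths"])
```

Keeping inputs and outputs as plain dictionaries means a new tool only needs a thin wrapper to join the pipeline.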

Limitations & Responsible Use (Candid Reality)

- Not a human red-team replacement. Social, physical, and highly novel chains still benefit from expert creativity.

- Scope must be explicit. The system will enforce allowlists and constraints; teams must define them correctly.

- Evidence is king. If an integration cannot produce high-quality artifacts, the planner should fall back to another adapter or mark the step as “non-confirming.”

- Standards mapping ≠ legal advice. NIST/ISO/PCI mappings assist audit conversations; program owners retain responsibility for interpretation and attestation.

- Throughput varies by surface. Heavy auth/multi-tenant flows require longer runs; rate limits and MFA respect are deliberate trade-offs for safety and legality.
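The "fall back or mark as non-confirming" rule from the list above can be sketched as a loop over candidate adapters. The adapter callables here are stand-ins that return canned evidence, and the function name `confirm`, the required-artifact tuple, and the result shape are assumptions.

```python
REQUIRED = ("http_trace", "screenshot")

def confirm(step: str, adapters: list, required: tuple = REQUIRED) -> dict:
    """Try adapters in order; accept the first one whose evidence meets the floor."""
    for adapter_id, run_adapter in adapters:
        evidence = run_adapter()
        if all(evidence.get(name) for name in required):
            return {"step": step, "status": "confirmed", "adapter": adapter_id, "evidence": evidence}
    # No adapter produced complete evidence: record the step without claiming a finding.
    return {"step": step, "status": "non-confirming", "adapter": None, "evidence": {}}

# Stand-in adapters: the primary yields a trace only, the fallback yields both artifacts.
primary = ("primary.check", lambda: {"http_trace": "evidence/http/trace-010.jsonl"})
fallback = ("fallback.check", lambda: {
    "http_trace": "evidence/http/trace-011.jsonl",
    "screenshot": "evidence/screenshots/step-011.png",
})

print(confirm("admin-panel-exposure", [primary, fallback])["adapter"])
print(confirm("admin-panel-exposure", [primary])["status"])
```

Marking a step "non-confirming" instead of dropping it keeps the audit trail honest about what was attempted and what could not be proven.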

A Practical Operator’s Checklist

- State the objective in plain English. Include scope, safety, and compliance targets.

- Favor “chain quality” over raw count. A single, well-evidenced chain beats 30 theoretical “mediums.”

- Keep adapters lean. Prefer fewer, well-understood tools with strong artifacts over many noisy ones.

- Define “done.” For each P1, pre-declare the verification trace expected after a fix.

- Rerun plans. Compare deltas; hand the before/after to leadership—this is how you show risk moving down.
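Comparing deltas between runs is straightforward if finding ids are stable across re-runs of the same plan, which is an assumption here. The sketch below buckets findings into resolved, new, and persisting. Apart from `PF-2025-00031`, the ids are invented for the example.

```python
def diff_runs(before: list[dict], after: list[dict]) -> dict:
    """Compare two runs of the same plan by finding id."""
    before_ids = {f["id"] for f in before}
    after_ids = {f["id"] for f in after}
    return {
        "resolved": sorted(before_ids - after_ids),
        "new": sorted(after_ids - before_ids),
        "persisting": sorted(before_ids & after_ids),
    }

run_1 = [{"id": "PF-2025-00031"}, {"id": "PF-2025-00032"}]
run_2 = [{"id": "PF-2025-00032"}, {"id": "PF-2025-00040"}]
delta = diff_runs(run_1, run_2)
print(delta)
```

The "resolved" bucket is the before/after evidence leadership cares about; the "new" bucket is the regression signal engineering cares about.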

References & Further Reading

- NIST SP 800-115 – Technical Guide to Information Security Testing and Assessment

https://csrc.nist.gov/publications/detail/sp/800-115/final

- OWASP Web Security Testing Guide (WSTG)

https://owasp.org/www-project-web-security-testing-guide/

Conclusion

If your reality is “ten great tools and zero coordinated pressure,” pentestAI should mean orchestration:

- You speak.

- The system runs the chain.

- Everyone gets the evidence they need.

Penligent.ai aims squarely at that outcome—natural language in, multi-tool attack chain out—with artifacts you can hand to engineering, compliance, and leadership without translation overhead. Not another scanner. A conductor for the orchestra you already own.