Why this matters now

Google Threat Intelligence Group’s February 2026 update is not a “robots are hacking you” headline. It’s a field report about a familiar reality: attackers already know how to break things; what changes is how cheaply they can iterate. When a model can rapidly synthesize OSINT, localize phishing, generate tooling glue code, and troubleshoot payloads, the operator’s bottleneck shifts from “time and skill” to “access and intent.” (Google Cloud)

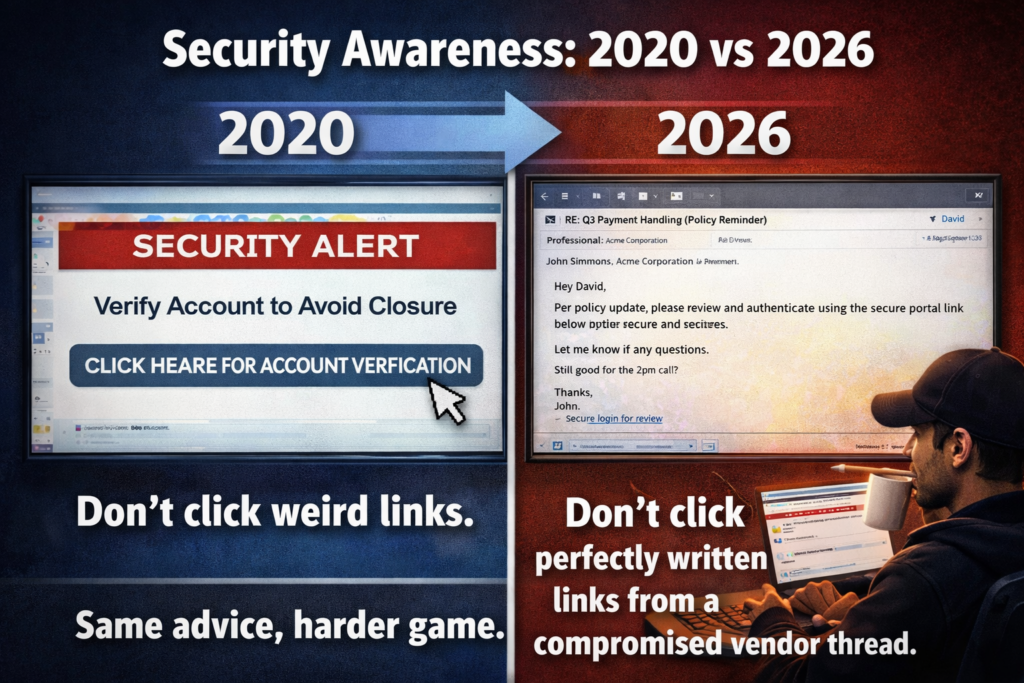

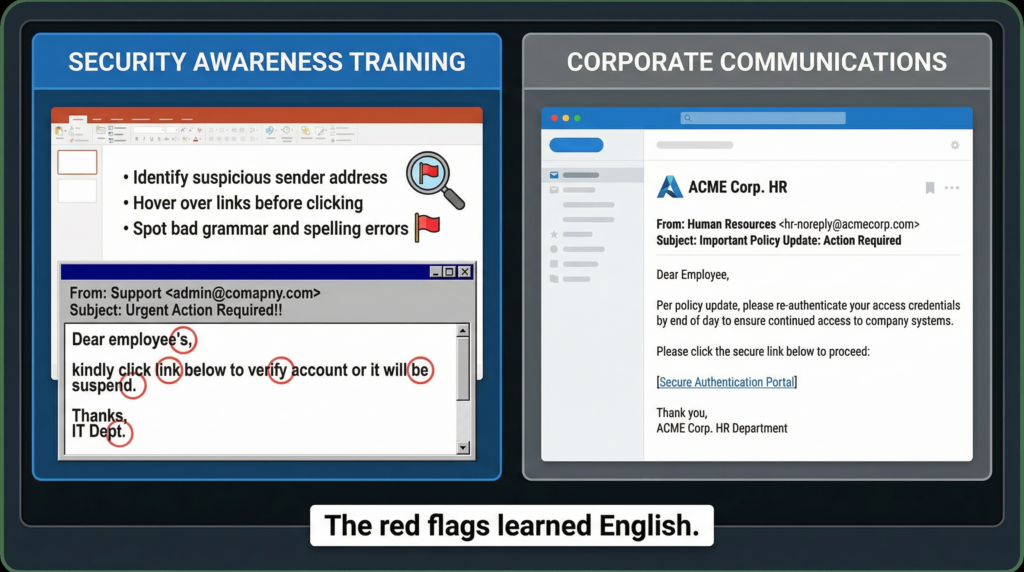

That shift is especially painful for defenders who still depend on weak heuristics:

- “Phish emails have bad grammar.”

- “Commodity malware drops obvious files.”

- “Suspicious traffic goes to obviously suspicious domains.”

GTIG’s message is that those assumptions are less reliable, and the defensive answer is behavioral correlation and control planes that can be proven effective. (Google Cloud)

What Google reported, in defensible terms

Across GTIG’s Threat Tracker and corroborating coverage, four points are consistently stated:

- Threat actors are using Gemini for multiple phases of the attack lifecycle: not only writing emails, but also victim profiling, translation, coding assistance, troubleshooting, and post-compromise tasks. (Google Cloud)

- Phishing quality improves when language and context are optimized at scale, reducing the detection value of grammar and cultural mismatch. (Google Cloud)

- Examples of malware workflows that involve AI services are emerging in the reporting ecosystem, including a case described as using Gemini API prompts to fetch compilable code (discussed as HONESTCUE / HonestCue in secondary reporting). (컴퓨터)

- Model extraction and distillation attempts are a parallel risk, with GTIG explicitly calling out systematic prompting patterns aimed at replicating model capability. (Google Cloud)

How Gemini fits into the attacker workflow

The most useful way to operationalize this is to map each phase to what defenders can actually observe and control.

| Attack phase | Typical AI usage described in GTIG coverage | Defender pivot that still works |
|---|---|---|
| Reconnaissance | Summarize OSINT, build target dossiers, map org charts and tool stacks | Reduce public exposure, harden identity flows, monitor recon sequences and enumeration patterns (Google Cloud) |
| Initial access via social engineering | Draft localized lures, translate, craft multi-turn “rapport” scripts | Shift from language heuristics to identity telemetry: OAuth grants, login anomalies, new device fingerprints, domain age and sender infrastructure (Google Cloud) |
| Exploitation planning | Generate test plans, interpret error responses, suggest payload variations | Watch for suspicious request choreography and brute enumeration; rate limit and instrument WAF signals (Google Cloud) |
| Tooling and payload engineering | Write or refactor code modules, debug build/runtime errors | Separate dev/prod secrets; enforce signed artifacts; monitor compilation and LOLBin chains (Google Cloud) |
| Post-compromise operations | Speed up discovery, persistence ideas, command sequences, evasion tweaks | Detect by behavior: process tree anomalies, credential access, lateral movement patterns (컴퓨터) |
| Model extraction | Systematic prompt patterns to clone capability | API abuse controls: rate limits, similarity detection, tiered auth, anomaly scoring (Google Cloud) |

The defensive takeaway is blunt: you do not need to classify prompts. You need to correlate “who talked to an AI service” with “what happened next on the endpoint.”

Case study: HonestCue and the “benign API traffic” problem

Multiple sources describe a pattern where a downloader/launcher queries a generative API using hard-coded prompts and receives self-contained source code that can be compiled and executed. In the reporting, the returned code is described as C# that is compiled and executed at runtime, which leaves little for conventional static signatures to match. (컴퓨터)

Even if you treat the exact implementation details cautiously (because secondary writeups can differ), the defensive lesson remains stable:

Why defenders miss it

- The outbound connection can look like normal API usage.

- Payload material can be generated at runtime, reducing hash-based detections.

- Second stage can be hosted on common platforms, lowering the ROI of domain blocklists. (컴퓨터)

What to hunt instead

- Process-to-network correlation: Which processes are making outbound calls to AI endpoints, and do they match your baseline?

- Just-after behavior: Within minutes of an AI API call, do you see dynamic compilation, script hosts, LOLBins, new services, scheduled tasks, or in-memory loaders?

- Execution chain integrity: Who launched the compiler or script engine? Is it a developer toolchain or an odd parent process (browser, office app, temp binary)?

This is where most organizations can win: the attacker can change text, but can’t easily avoid leaving process and network relationships. That advantage only exists if you collect the telemetry that records them.
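The first hunt question above, whether the processes calling AI endpoints match your baseline, can be sketched as a rarity check. This is a minimal illustration under stated assumptions, not a production detector: the `(host, process, dest_host)` record layout, the `min_hosts` threshold, and the function names `build_baseline` and `off_baseline_ai_calls` are all hypothetical choices made for the example.

```python
from collections import defaultdict

# Each record is (host, process_name, dest_host); this layout is an assumption.
AI_HOSTS = {"generativelanguage.googleapis.com"}


def build_baseline(history, min_hosts=2):
    """Return (process, dest) pairs seen on at least `min_hosts` distinct hosts."""
    hosts_by_pair = defaultdict(set)
    for host, process, dest in history:
        hosts_by_pair[(process.lower(), dest.lower())].add(host)
    return {pair for pair, hosts in hosts_by_pair.items() if len(hosts) >= min_hosts}


def off_baseline_ai_calls(events, baseline):
    """Return events that reach an AI host from a pair outside the baseline."""
    return [
        (host, process, dest)
        for host, process, dest in events
        if dest.lower() in AI_HOSTS and (process.lower(), dest.lower()) not in baseline
    ]


# Illustrative data only: a short history window and one day of new events.
history = [
    ("ws-01", "chrome.exe", "generativelanguage.googleapis.com"),
    ("ws-02", "chrome.exe", "generativelanguage.googleapis.com"),
    ("ws-03", "python.exe", "generativelanguage.googleapis.com"),
]
today = [
    ("ws-04", "chrome.exe", "generativelanguage.googleapis.com"),
    ("ws-05", "svc_update.exe", "generativelanguage.googleapis.com"),
]

baseline = build_baseline(history)
flagged = off_baseline_ai_calls(today, baseline)
for host, process, dest in flagged:
    print(f"{host}: {process} -> {dest}")
```

Requiring a pair to appear on several hosts before it counts as normal keeps a single compromised machine from writing itself into the baseline.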

Detection engineering you can deploy today

1) Microsoft Defender for Endpoint KQL: suspicious dynamic compilation and staging

Use this as a starting point and tune it by environment. The goal is to surface rare, high-leverage patterns.

```kusto
// Suspicious dynamic compilation / script staging patterns
// Tune allowlists for your build fleets and admin tooling.
DeviceProcessEvents
| where Timestamp > ago(7d)
| where FileName in~ ("csc.exe","vbc.exe","msbuild.exe","dotnet.exe","powershell.exe","wscript.exe","cscript.exe","mshta.exe","rundll32.exe","regsvr32.exe")
| extend cmd = tostring(ProcessCommandLine)
| extend parent = tostring(InitiatingProcessFileName)
| where
    // Compilers/build tools launched from unusual parents
    (FileName in~ ("csc.exe","vbc.exe","msbuild.exe") and parent !in~ ("devenv.exe","msbuild.exe","dotnet.exe","visualstudio.exe","jenkins.exe","teamcity-agent.exe")) or
    // Script hosts performing network retrieval
    (FileName in~ ("powershell.exe","wscript.exe","cscript.exe","mshta.exe") and cmd has_any ("http","https","Invoke-WebRequest","WebClient","DownloadString","curl","bitsadmin"))
| project Timestamp, DeviceName, InitiatingProcessAccountName,
    parent, InitiatingProcessCommandLine,
    FileName, cmd, ProcessId, InitiatingProcessId
| order by Timestamp desc
```

2) Sigma rule skeleton: script hosts fetching second stage

```yaml
title: Script Host Network Retrieval Pattern
id: 46f6a1e2-cc51-4d3b-9b10-7f5e2b5c5b1f
status: experimental
description: Detects common script hosts and LOLBins used to fetch or stage payloads.
logsource:
  product: windows
  category: process_creation
detection:
  selection_img:
    Image|endswith:
      - '\\powershell.exe'
      - '\\wscript.exe'
      - '\\cscript.exe'
      - '\\mshta.exe'
      - '\\rundll32.exe'
      - '\\regsvr32.exe'
  selection_cmd:
    CommandLine|contains:
      - 'http'
      - 'https'
      - 'DownloadString'
      - 'Invoke-WebRequest'
      - 'WebClient'
      - 'bitsadmin'
      - 'curl '
  condition: selection_img and selection_cmd
falsepositives:
  - legitimate admin scripts
  - software deployment tooling
level: medium
```

3) Lightweight correlation: AI endpoint calls followed by suspicious execution

If you have proxy logs and EDR process logs, this correlation is often more valuable than any single IOC.

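```python
"""
Correlate outbound requests to GenAI API endpoints with suspicious process events.

Inputs:
- proxy.csv columns: timestamp, src_host, user, process, dest_host, url
- proc.csv columns: timestamp, host, user, parent, image, cmdline
"""
import re

import pandas as pd

proxy = pd.read_csv("proxy.csv", parse_dates=["timestamp"])
proc = pd.read_csv("proc.csv", parse_dates=["timestamp"])

# Keep this list specific. A bare "googleapis.com" hint would also match
# unrelated Google APIs and flood the results with false positives.
GENAI_HOST_HINTS = (
    "generativelanguage.googleapis.com",
    "ai.google.dev",
)

SUSP_IMAGES = {
    "csc.exe", "vbc.exe", "msbuild.exe", "powershell.exe",
    "wscript.exe", "cscript.exe", "mshta.exe", "rundll32.exe", "regsvr32.exe",
}

# How long after a GenAI request a process event still counts as "just after".
WINDOW = pd.Timedelta(minutes=10)

# Escape the hints so "." matches literally instead of acting as a regex wildcard.
host_pattern = "|".join(re.escape(h) for h in GENAI_HOST_HINTS)
p = proxy[proxy["dest_host"].str.contains(host_pattern, case=False, na=False)].copy()

# Compare on the file name only, so full image paths also match.
image_name = (
    proc["image"].astype(str).str.lower()
    .str.replace("\\", "/", regex=False)
    .str.rsplit("/", n=1).str[-1]
)
q = proc[image_name.isin(SUSP_IMAGES)].copy()

# Join key: same host and same user on both sides.
p["key"] = p["src_host"].astype(str) + "|" + p["user"].astype(str)
q["key"] = q["host"].astype(str) + "|" + q["user"].astype(str)

alerts = []
for key, pgrp in p.groupby("key"):
    qgrp = q[q["key"] == key]
    if qgrp.empty:
        continue
    for _, row in pgrp.iterrows():
        # Process events on the same host/user within WINDOW after the request.
        window = qgrp[
            (qgrp["timestamp"] >= row["timestamp"])
            & (qgrp["timestamp"] <= row["timestamp"] + WINDOW)
        ]
        if not window.empty:
            first = window.sort_values("timestamp").iloc[0]
            alerts.append({
                "host_user": key,
                "genai_time": row["timestamp"],
                "dest_host": row["dest_host"],
                "proc_time": first["timestamp"],
                "proc": first["image"],
                "cmd": str(first["cmdline"])[:200],
            })

# Sorting an empty frame by "genai_time" would raise KeyError, so guard it.
if alerts:
    out = pd.DataFrame(alerts).sort_values("genai_time", ascending=False)
    print(out.head(50).to_string(index=False))
else:
    print("No correlated events found.")
```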

Control planes that actually reduce risk

Email and identity controls that scale against AI-augmented phishing

If the attacker can generate perfect English, you must win on controls the model cannot easily fake:

- Enforce phishing-resistant MFA for privileged users and for any externally exposed admin portals.

- Monitor OAuth app grants and consent events. Attackers increasingly prefer authorization abuse because it survives password resets.

- Alert on impossible travel, new device enrollment spikes, and abnormal sign-in risk. (Google Cloud)
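As one concrete instance of the identity signals listed above, an impossible-travel check compares the distance between two consecutive sign-ins with the time between them. The sketch below is a simplified illustration: the `(datetime, lat, lon)` tuple layout, the 900 km/h speed ceiling, and the 100 km jitter floor are assumptions chosen for the example, and real geo-IP data is imprecise enough (VPNs, mobile carriers) that thresholds need tuning.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0   # assumption: roughly airliner cruise speed
MIN_DISTANCE_KM = 100.0     # assumption: ignore small geo-IP jitter


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two coordinates, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev, curr, max_kmh=MAX_PLAUSIBLE_KMH):
    """prev and curr are (datetime, lat, lon) for consecutive sign-ins by one user."""
    distance = haversine_km(prev[1], prev[2], curr[1], curr[2])
    if distance < MIN_DISTANCE_KM:
        return False
    hours = (curr[0] - prev[0]).total_seconds() / 3600
    if hours <= 0:
        # Far apart at the same instant: no speed could explain it.
        return True
    return distance / hours > max_kmh


# Illustrative sign-ins for one user (approximate city coordinates).
seoul = (datetime(2026, 2, 12, 9, 0), 37.57, 126.98)
london = (datetime(2026, 2, 12, 10, 0), 51.51, -0.13)
busan = (datetime(2026, 2, 12, 12, 0), 35.18, 129.08)

print("Seoul -> London in 1h:", is_impossible_travel(seoul, london))
print("Seoul -> Busan in 3h:", is_impossible_travel(seoul, busan))
```

The value of this check against AI-polished phishing is that it depends only on where and when a credential was used, not on how convincing the lure was.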

Endpoint controls that break common staging chains

- Constrain script engines and LOLBins with WDAC/AppLocker where feasible.

- Require code signing for internal scripts in high-risk fleets.

- Turn on Attack Surface Reduction rules appropriate for your environment, especially those targeting Office/child process creation and credential theft behaviors.

Network controls that make AI-integrated malware expensive

- Route AI API traffic through explicit egress gateways with identity tagging.

- Maintain an allowlist of sanctioned AI endpoints per business unit.

- Log and alert on AI endpoint use from non-browser, non-developer processes.
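The three network controls above can be combined into a single egress decision. The following is a hedged sketch rather than a gateway configuration: the business-unit names, the `SANCTIONED` and `EXPECTED_PROCESSES` contents, and the `evaluate_egress` function are hypothetical, and the sketch assumes your egress gateway can tag each request with a business unit and a process name.

```python
# Hypothetical policy: sanctioned AI endpoints per business unit.
SANCTIONED = {
    "engineering": {"generativelanguage.googleapis.com", "ai.google.dev"},
    "marketing": {"ai.google.dev"},
}

# Hypothetical set of process names treated as browsers or developer tools.
EXPECTED_PROCESSES = {"chrome.exe", "msedge.exe", "firefox.exe", "code.exe", "python.exe"}


def evaluate_egress(business_unit, process, dest_host):
    """Return 'block', 'alert', or 'allow' for one AI-bound request."""
    allowed_hosts = SANCTIONED.get(business_unit, set())
    if dest_host.lower() not in allowed_hosts:
        return "block"   # endpoint not sanctioned for this business unit
    if process.lower() not in EXPECTED_PROCESSES:
        return "alert"   # sanctioned endpoint, but an unexpected caller
    return "allow"


# Illustrative requests only.
requests_seen = [
    ("engineering", "python.exe", "generativelanguage.googleapis.com"),
    ("marketing", "chrome.exe", "generativelanguage.googleapis.com"),
    ("engineering", "tmp4f2a.exe", "generativelanguage.googleapis.com"),
]
for unit, process, dest in requests_seen:
    print(f"{unit:12} {process:14} {dest} -> {evaluate_egress(unit, process, dest)}")
```

Separating "block" from "alert" reflects the list above: the allowlist is a hard control, while unexpected callers on a sanctioned endpoint are a detection signal worth investigating.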

The theme is consistency: policy + telemetry + correlation.

CVE reality: AI accelerates operators, but CVEs still provide footholds

A practical way to keep this article grounded is to anchor remediation to “exploited in the wild” signals rather than speculative AI threat narratives.

CISA’s February 10, 2026 alert added six vulnerabilities to the Known Exploited Vulnerabilities Catalog, indicating evidence of active exploitation and establishing a defensible “patch first” list for many enterprises. (CISA)

SecurityWeek’s February 2026 Patch Tuesday coverage also calls out actively exploited zero-days fixed by Microsoft during that cycle, reinforcing the idea that attacker productivity gains still land on the same classic chokepoint: keeping systems current and validating remediation. (SecurityWeek)

If you want a single sentence that security engineers accept immediately, it’s this:

AI makes the attacker faster at choosing and operationalizing a weakness, but the breach still happens because a weakness exists and stayed reachable.

A practical defender playbook

Step 1: Establish what “normal” AI usage looks like

- Which teams are allowed to call AI APIs?

- From which networks and endpoints?

- Under which identities and service accounts?

If your answer is “we don’t know,” you are operating blind.

Step 2: Build a detection triangle

Pick three signals and correlate them:

- AI endpoint access

- suspicious process execution (compiler/script/LOLBin)

- outbound download or new persistence mechanism

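False positives typically drop when you require at least two of the three signals to occur on the same host within a short time window, because each signal on its own is common in legitimate activity.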
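A two-of-three rule like the one in this step can be sketched in a few lines. This is an illustration under assumptions, not a tuned detection: the signal names, the `(timestamp, host, signal)` event layout, the ten-minute window, and the `triangle_hits` function are all hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical labels for the three signals in the detection triangle.
SIGNALS = {"ai_endpoint_access", "suspicious_process", "download_or_persistence"}


def triangle_hits(events, window=timedelta(minutes=10), required=2):
    """Return {host: (window_start, signals)} for hosts where at least
    `required` distinct signals occur within `window` of each other."""
    by_host = defaultdict(list)
    for ts, host, signal in events:
        if signal in SIGNALS:
            by_host[host].append((ts, signal))

    hits = {}
    for host, items in by_host.items():
        items.sort()
        for i, (start, _) in enumerate(items):
            # Distinct signals seen from this event up to `window` later.
            seen = {sig for ts, sig in items[i:] if ts - start <= window}
            if len(seen) >= required:
                hits[host] = (start, sorted(seen))
                break
    return hits


# Illustrative events only.
t0 = datetime(2026, 2, 12, 10, 0)
events = [
    (t0, "ws-02", "ai_endpoint_access"),
    (t0 + timedelta(minutes=3), "ws-02", "suspicious_process"),
    (t0 + timedelta(minutes=5), "ws-02", "download_or_persistence"),
    (t0, "ws-07", "ai_endpoint_access"),
    (t0 + timedelta(hours=2), "ws-07", "suspicious_process"),
    (t0, "ws-09", "ai_endpoint_access"),
]
hits = triangle_hits(events)
for host, (start, signals) in hits.items():
    print(host, start.isoformat(), signals)
```

In this sample only `ws-02` is reported: `ws-07` has two signals but they are hours apart, and `ws-09` has a single signal.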

Step 3: Add a containment path that does not depend on content inspection

When you find a suspicious chain:

- isolate host

- collect process tree and network flow

- pull persistence points

- review identity events and OAuth grants

- rotate exposed secrets

Step 4: Prove the fix

After patching or policy changes, replay the scenario in a controlled environment and confirm the chain is broken.

If you use an AI-powered penetration testing and verification platform such as https://penligent.ai/, the natural place for it in this playbook is verification discipline.

In an environment where adversaries iterate faster, defenders need the same advantage: the ability to validate that controls work in reality, not only on paper. A strong “prove the fix” loop can include:

- automated checks for externally reachable high-risk services and misconfigurations,

- repeatable validation that KEV-aligned patching removed the exploit surface,

- evidence-first reporting that shows which links in the chain still hold.
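The first item in that loop, checking whether a high-risk service is still reachable after a fix, can be approximated with a plain TCP connection test. This is a minimal sketch, assuming you run it from a network position comparable to the attacker's and only against systems you are responsible for; `is_reachable` and `verify_closed` are hypothetical helper names, and a successful TCP connect says nothing about whether the service behind it is actually vulnerable.

```python
import socket


def is_reachable(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def verify_closed(targets, timeout=2.0):
    """Given (host, port) pairs that should be unreachable after the fix,
    return the ones that still accept connections."""
    return [(h, p) for h, p in targets if is_reachable(h, p, timeout)]


if __name__ == "__main__":
    # Self-contained demo: a local listener stands in for an exposed service.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(1)
    port = listener.getsockname()[1]

    print("before fix, still reachable:", verify_closed([("127.0.0.1", port)]))
    listener.close()  # simulates the service being removed or firewalled
    print("after fix, still reachable:", verify_closed([("127.0.0.1", port)]))
```

Keeping the target list in version control and rerunning it after every patch cycle turns "we fixed it" into a repeatable check with a recorded result.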


Conclusion

The strongest way to think about GTIG’s warning is not “AI is a new exploit class.” It’s “AI lowers iteration costs.” That means the defensive posture that wins is the one that remains robust when content looks perfect and payloads change every run:

- correlate behavior instead of parsing language,

- instrument AI egress like any other high-value SaaS,

- patch what is demonstrably exploited,

- and validate remediation with repeatable tests.

That’s how you keep “Gemini-accelerated attackers” from becoming “Gemini-enabled breaches.” (Google Cloud)

Sources

https://thehackernews.com/2026/02/google-reports-state-backed-hackers.html

https://www.cisa.gov/known-exploited-vulnerabilities-catalog